Marija Slavkovik

Transparency and Proportionality in Post-Processing Algorithmic Bias Correction

May 23, 2025

Abstract:Algorithmic decision-making systems sometimes produce errors or skewed predictions toward a particular group, leading to unfair results. Debiasing practices, applied at different stages of the development of such systems, occasionally introduce new forms of unfairness or exacerbate existing inequalities. We focus on post-processing techniques that modify algorithmic predictions to achieve fairness in classification tasks, examining the unintended consequences of these interventions. To address this challenge, we develop a set of measures that quantify the disparity in the flips applied to the solution in the post-processing stage. The proposed measures will help practitioners: (1) assess the proportionality of the debiasing strategy used, (2) have transparency to explain the effects of the strategy in each group, and (3) based on those results, analyze the possibility of the use of some other approaches for bias mitigation or to solve the problem. We introduce a methodology for applying the proposed metrics during the post-processing stage and illustrate its practical application through an example. This example demonstrates how analyzing the proportionality of the debiasing strategy complements traditional fairness metrics, providing a deeper perspective to ensure fairer outcomes across all groups.
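
As a rough illustration of what quantifying the disparity in flips could look like, the sketch below compares binary predictions before and after a post-processing step and reports, per group, the share of predictions that were flipped. The abstract does not define the proposed measures, so the function names (`flip_rates`, `flip_disparity`), the max-minus-min gap, and the toy data are assumptions made for this example only, not the authors' metrics.

```python
from collections import defaultdict


def flip_rates(groups, before, after):
    """Per-group share of binary predictions changed by post-processing.

    Hypothetical helper: the names and the exact statistics are illustrative.
    """
    counts = defaultdict(lambda: {"n": 0, "to_pos": 0, "to_neg": 0})
    for g, b, a in zip(groups, before, after):
        c = counts[g]
        c["n"] += 1
        if b == 0 and a == 1:
            c["to_pos"] += 1  # negative prediction flipped to positive
        elif b == 1 and a == 0:
            c["to_neg"] += 1  # positive prediction flipped to negative
    return {
        g: {
            "flip_rate": (c["to_pos"] + c["to_neg"]) / c["n"],
            "to_pos_rate": c["to_pos"] / c["n"],
            "to_neg_rate": c["to_neg"] / c["n"],
        }
        for g, c in counts.items()
    }


def flip_disparity(rates, key="flip_rate"):
    """Gap between the most and least affected group (assumed measure)."""
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)


# Toy data: group labels, predictions before and after debiasing.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
before = [1, 1, 0, 0, 1, 0, 0, 0]
after = [1, 0, 0, 0, 1, 1, 1, 0]

rates = flip_rates(groups, before, after)
for group, stats in sorted(rates.items()):
    print(group, stats)
print("flip-rate disparity:", flip_disparity(rates))
```

Splitting flips by direction matters for transparency: in the toy data, group `a` only loses positive predictions while group `b` only gains them, which a single aggregate fairness score would not reveal.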

Uncovering Fairness through Data Complexity as an Early Indicator

Apr 08, 2025

Abstract:Fairness is a concern within machine learning (ML) applications. Currently, no study examines how disparities in classification complexity between privileged and unprivileged groups could influence the fairness of solutions, even though such disparities could serve as a preliminary indicator of potential unfairness. In this work, we investigate this gap. Specifically, we use synthetic datasets designed to capture a variety of biases, ranging from historical bias to measurement and representational bias, to evaluate how differences in various complexity metrics correlate with group fairness metrics. We then apply association rule mining to identify patterns that link disproportionate complexity differences between groups with fairness-related outcomes, offering data-centric indicators to guide bias mitigation. Our findings are also validated by their application to real-world problems, providing evidence that quantifying group-wise classification complexity can uncover early indicators of potential fairness challenges. This investigation helps practitioners to proactively address bias in classification tasks.

Am I Being Treated Fairly? A Conceptual Framework for Individuals to Ascertain Fairness

Apr 03, 2025

Abstract:Current fairness metrics and mitigation techniques provide tools for practitioners to assess how non-discriminatory Automatic Decision Making (ADM) systems are. What if I, as an individual facing a decision taken by an ADM system, would like to know: Am I being treated fairly? We explore how to create the affordance for users to be able to ask this question of ADM. In this paper, we argue for the reification of fairness not only as a property of ADM, but also as an epistemic right of an individual to acquire information about the decisions that affect them and use that information to contest and seek effective redress against those decisions, in case they are proven to be discriminatory. We examine key concepts from existing research not only in algorithmic fairness but also in explainable artificial intelligence, accountability, and contestability. Integrating notions from these domains, we propose a conceptual framework to ascertain fairness by combining different tools that empower the end-users of ADM systems. Our framework shifts the focus from technical solutions aimed at practitioners to mechanisms that enable individuals to understand, challenge, and verify the fairness of decisions, and also serves as a blueprint for organizations and policymakers, bridging the gap between technical requirements and practical, user-centered accountability.

Reinforcement Learning and Machine ethics: a systematic review

Jul 02, 2024

Abstract:Machine ethics is the field that studies how ethical behaviour can be accomplished by autonomous systems. While there exist some systematic reviews aiming to consolidate the state of the art in machine ethics prior to 2020, these tend not to include work that uses reinforcement learning agents as entities whose ethical behaviour is to be achieved. The reason for this is that only in recent years have we witnessed an increase in machine ethics studies within reinforcement learning. We present here a systematic review of reinforcement learning for machine ethics and machine ethics within reinforcement learning. Additionally, we highlight trends in terms of ethics specifications, components and frameworks of reinforcement learning, and environments used to bring about ethical behaviour. Our systematic review aims to consolidate the work in machine ethics and reinforcement learning, thus filling the gap in the state-of-the-art machine ethics landscape.

Value Engineering for Autonomous Agents

Feb 17, 2023

Abstract:Machine Ethics (ME) is concerned with the design of Artificial Moral Agents (AMAs), i.e. autonomous agents capable of reasoning and behaving according to moral values. Previous approaches have treated values as labels associated with some actions or states of the world, rather than as integral components of agent reasoning. It is also common to disregard that a value-guided agent operates alongside other value-guided agents in an environment governed by norms, thus omitting the social dimension of AMAs. In this blue sky paper, we propose a new AMA paradigm grounded in moral and social psychology, where values are instilled into agents as context-dependent goals. These goals intricately connect values at individual levels to norms at a collective level by evaluating the outcomes most incentivized by the norms in place. We argue that this type of normative reasoning, where agents are endowed with an understanding of norms' moral implications, leads to value-awareness in autonomous agents. Additionally, this capability paves the way for agents to align the norms enforced in their societies with respect to the human values instilled in them, by complementing the value-based reasoning on norms with agreement mechanisms to help agents collectively agree on the best set of norms that suit their human values. Overall, our agent model goes beyond the treatment of values as inert labels by connecting them to normative reasoning and to the social functionalities needed to integrate value-aware agents into our modern hybrid human-computer societies.

Against Algorithmic Exploitation of Human Vulnerabilities

Jan 12, 2023

Abstract:Decisions such as which movie to watch next, which song to listen to, or which product to buy online, are increasingly influenced by recommender systems and user models that incorporate information on users' past behaviours, preferences, and digitally created content. Machine learning models that enable recommendations and that are trained on user data may unintentionally leverage information on human characteristics that are considered vulnerabilities, such as depression, young age, or gambling addiction. The use of algorithmic decisions based on latent vulnerable state representations could be considered manipulative and could have a deteriorating impact on the condition of vulnerable individuals. In this paper, we are concerned with the problem of machine learning models inadvertently modelling vulnerabilities, and want to raise awareness for this issue to be considered in legislation and AI ethics. Hence, we define and describe common vulnerabilities, and illustrate cases where they are likely to play a role in algorithmic decision-making. We propose a set of requirements for methods to detect the potential for vulnerability modelling, detect whether vulnerable groups are treated differently by a model, and detect whether a model has created an internal representation of vulnerability. We conclude that explainable artificial intelligence methods may be necessary for detecting vulnerability exploitation by machine learning-based recommendation systems.

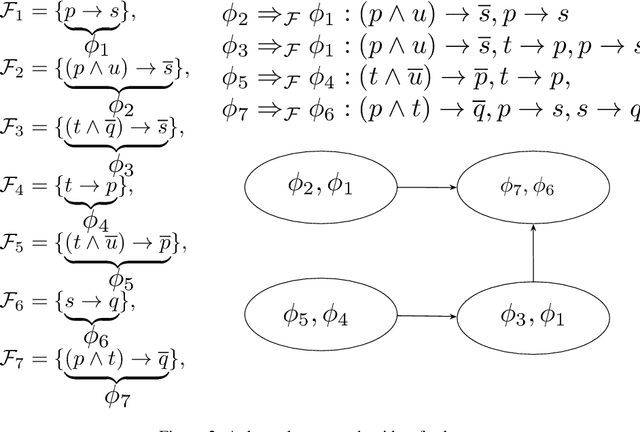

Finding Common Ground for Incoherent Horn Expressions

Sep 14, 2022

Abstract:Autonomous systems that operate in a shared environment with people need to be able to follow the rules of the society they occupy. While laws are unique for one society, different people and institutions may use different rules to guide their conduct. We study the problem of reaching a common ground among possibly incoherent rules of conduct. We formally define a notion of common ground and discuss the main properties of this notion. Then, we identify three sufficient conditions on the class of Horn expressions for which common grounds are guaranteed to exist. We provide a polynomial time algorithm that computes common grounds, under these conditions. We also show that if any of the three conditions is removed then common grounds for the resulting (larger) class may not exist.

Automated detection of dark patterns in cookie banners: how to do it poorly and why it is hard to do it any other way

Apr 21, 2022

Abstract:Cookie banners, the pop-ups that appear to collect your consent for data collection, are a tempting ground for dark patterns. Dark patterns are design elements that are used to influence the user's choice towards an option that is not in their interest. The use of dark patterns renders consent elicitation meaningless and voids the attempts to improve a fair collection and use of data. Can machine learning be used to automatically detect the presence of dark patterns in cookie banners? In this work, a dataset of cookie banners of 300 news websites was used to train a prediction model that does exactly that. The machine learning pipeline we used includes feature engineering, parameter search, training a Gradient Boosted Tree classifier and evaluation. The accuracy of the trained model is promising, but leaves a lot of room for improvement. We provide an in-depth analysis of the interdisciplinary challenges that automated dark pattern detection poses to artificial intelligence. The dataset and all the code created using machine learning are available at the url to repository removed for review.

Explainability for identification of vulnerable groups in machine learning models

Mar 01, 2022

Abstract:If a prediction model identifies vulnerable individuals or groups, the use of that model may become an ethical issue. But can we know that this is what a model does? Machine learning fairness as a field is focused on the just treatment of individuals and groups under information processing with machine learning methods. While considerable attention has been given to mitigating discrimination of protected groups, vulnerable groups have not received the same attention. Unlike protected groups, which can be regarded as always vulnerable, a vulnerable group may be vulnerable in one context but not in another. This raises new challenges on how and when to protect vulnerable individuals and groups under machine learning. Methods from explainable artificial intelligence (XAI), in contrast, do consider more contextual issues and are concerned with answering the question "why was this decision made?". Neither existing fairness nor existing explainability methods allow us to ascertain if a prediction model identifies vulnerability. We discuss this problem and propose approaches for analysing prediction models in this respect.

The social dilemma in AI development and why we have to solve it

Jul 28, 2021

Abstract:While the demand for ethical artificial intelligence (AI) systems increases, the number of unethical uses of AI accelerates, even though there is no shortage of ethical guidelines. We argue that a main underlying cause for this is that AI developers face a social dilemma in AI development ethics, preventing the widespread adoption of ethical best practices. We define the social dilemma for AI development and describe why the current crisis in AI development ethics cannot be solved without relieving AI developers of their social dilemma. We argue that AI development must be professionalised to overcome the social dilemma, and discuss how medicine can be used as a template in this process.
