Helena Webb

From Spoken Thoughts to Automated Driving Commentary: Predicting and Explaining Intelligent Vehicles' Actions

Apr 19, 2022

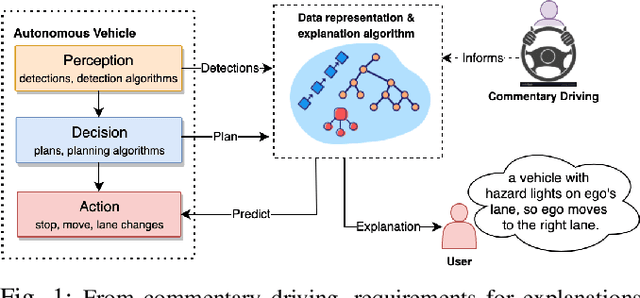

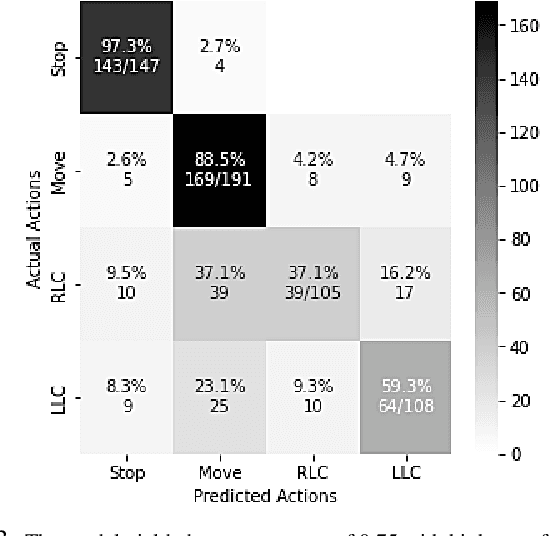

Abstract: Commentary driving is a technique in which drivers verbalise their observations, assessments and intentions. By speaking out their thoughts, both learning and expert drivers are able to create a better understanding and awareness of their surroundings. In the intelligent vehicle context, automated driving commentary can provide intelligible explanations about driving actions, and thereby assist a driver or an end-user during driving operations in challenging and safety-critical scenarios. In this paper, we conducted a field study in which we deployed a research vehicle in an urban environment to obtain data. While collecting sensor data of the vehicle's surroundings, we obtained driving commentary from a driving instructor using the think-aloud protocol. We analysed the driving commentary and uncovered an explanation style; the driver first announces his observations, announces his plans, and then makes general remarks. He also made counterfactual comments. We successfully demonstrated how factual and counterfactual natural language explanations that follow this style could be automatically generated using a simple tree-based approach. Generated explanations for longitudinal actions (e.g., stop and move) were deemed more intelligible and plausible by human judges compared to lateral actions, such as lane changes. We discussed how our approach can be built on in the future to realise more robust and effective explainability for driver assistance as well as partial and conditional automation of driving functions.

Institutionalising Ethics in AI through Broader Impact Requirements

May 30, 2021

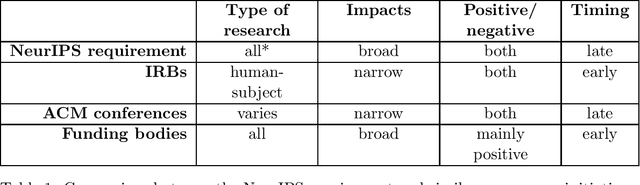

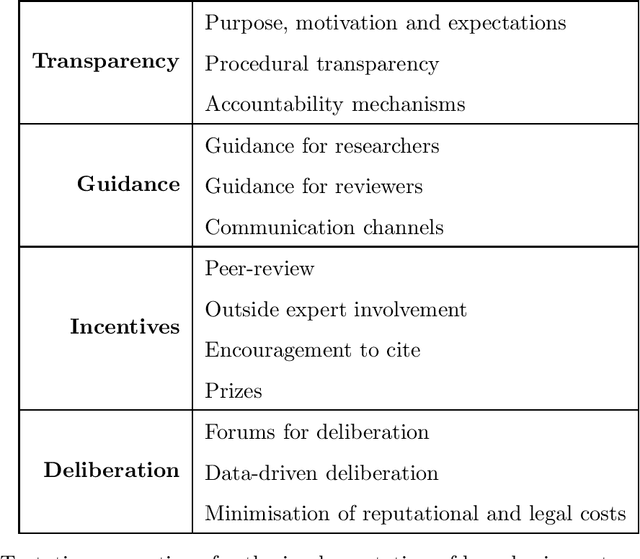

Abstract: Turning principles into practice is one of the most pressing challenges of artificial intelligence (AI) governance. In this article, we reflect on a novel governance initiative by one of the world's largest AI conferences. In 2020, the Conference on Neural Information Processing Systems (NeurIPS) introduced a requirement for submitting authors to include a statement on the broader societal impacts of their research. Drawing insights from similar governance initiatives, including institutional review boards (IRBs) and impact requirements for funding applications, we investigate the risks, challenges and potential benefits of such an initiative. Among the challenges, we list a lack of recognised best practice and procedural transparency, researcher opportunity costs, institutional and social pressures, cognitive biases, and the inherently difficult nature of the task. The potential benefits, on the other hand, include improved anticipation and identification of impacts, better communication with policy and governance experts, and a general strengthening of the norms around responsible research. To maximise the chance of success, we recommend measures to increase transparency, improve guidance, create incentives to engage earnestly with the process, and facilitate public deliberation on the requirement's merits and future. Perhaps the most important contributions from this analysis are the insights we can gain regarding effective community-based governance and the role and responsibility of the AI research community more broadly.

Explanations in Autonomous Driving: A Survey

Mar 11, 2021

Abstract: The automotive industry has witnessed an increasing level of development in the past decades, from manufacturing manually operated vehicles to manufacturing vehicles with a high level of automation. With the recent developments in Artificial Intelligence (AI), automotive companies now employ high performance AI models to enable vehicles to perceive their environment and make driving decisions with little or no influence from a human. With the hope of deploying autonomous vehicles (AVs) on a commercial scale, the acceptance of AVs by society becomes paramount and may largely depend on their degree of transparency, trustworthiness, and compliance with regulations. The assessment of these acceptance requirements can be facilitated through the provision of explanations for AVs' behaviour. Explainability is therefore seen as an important requirement for AVs. AVs should be able to explain what they have 'seen', done and might do in environments where they operate. In this paper, we provide a comprehensive survey of the existing work in explainable autonomous driving. First, we open by providing a motivation for explanations and examining existing standards related to AVs. Second, we identify and categorise the different stakeholders involved in the development, use, and regulation of AVs and show their perceived need for explanation. Third, we provide a taxonomy of explanations and review previous work on explanation in the different AV operations. Finally, we close by pointing out pertinent challenges and future research directions. This survey serves to provide fundamental knowledge required of researchers who are interested in explanation in autonomous driving.

Robot Accident Investigation: a case study in Responsible Robotics

May 15, 2020

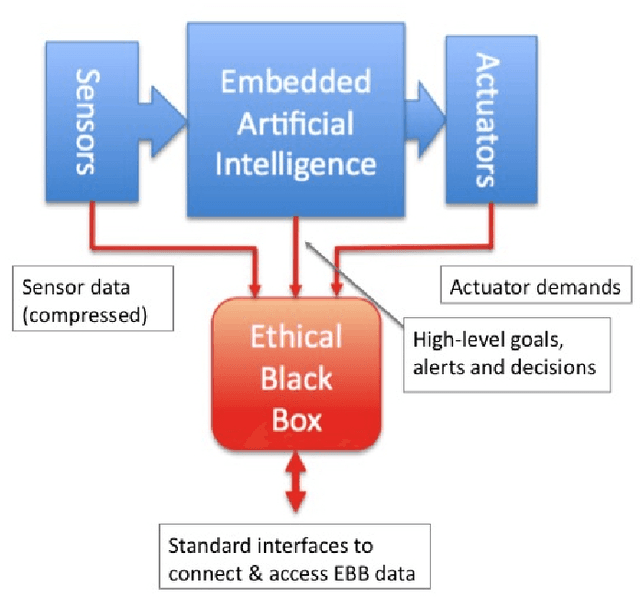

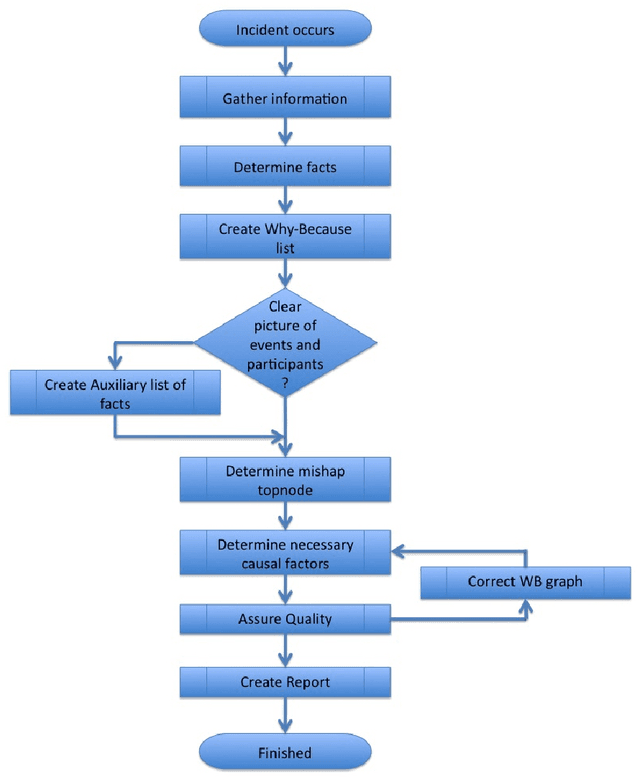

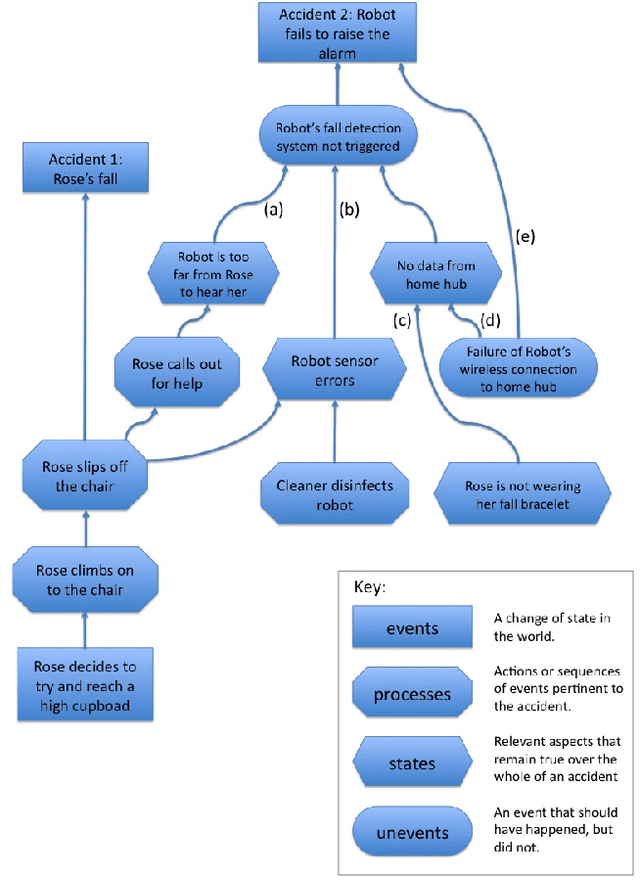

Abstract: Robot accidents are inevitable. Although rare, they have been happening since assembly-line robots were first introduced in the 1960s. But a new generation of social robots is now becoming commonplace. Often with sophisticated embedded artificial intelligence (AI), social robots might be deployed as care robots to assist elderly or disabled people to live independently. Smart robot toys offer a compelling interactive play experience for children, and increasingly capable autonomous vehicles (AVs) offer the promise of hands-free personal transport and fully autonomous taxis. Unlike industrial robots, which are deployed in safety cages, social robots are designed to operate in human environments and interact closely with humans; the likelihood of robot accidents is therefore much greater for social robots than industrial robots. This paper sets out a draft framework for social robot accident investigation; a framework which proposes both the technology and processes that would allow social robot accidents to be investigated with no less rigour than we expect of air or rail accident investigations. The paper also places accident investigation within the practice of responsible robotics, and makes the case that social robotics without accident investigation would be no less irresponsible than aviation without air accident investigation.
