Ines Hipolito

EcoNet: Multiagent Planning and Control Of Household Energy Resources Using Active Inference

Dec 14, 2025

Abstract: Advances in automated systems afford new opportunities for intelligent management of energy at household, local area, and utility scales. Home Energy Management Systems (HEMS) can play a role by optimizing the schedule and use of household energy devices and resources. One challenge is that the goals of a household can be complex and conflicting. For example, a household might wish to reduce energy costs and grid-associated greenhouse gas emissions, yet keep room temperatures comfortable. Another challenge is that an intelligent HEMS agent must make decisions under uncertainty. An agent must plan actions into the future, but weather and solar generation forecasts, for example, provide inherently uncertain estimates of future conditions. This paper introduces EcoNet, a Bayesian approach to household and neighborhood energy management that is based on active inference. The aim is to improve energy management and coordination, while accommodating uncertainties and taking into account potentially conditional and conflicting goals and preferences. Simulation results are presented and discussed.
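The abstract names two challenges: conflicting household goals and planning under uncertainty. The following is a minimal sketch of generic one-step active inference action selection in Python with NumPy that illustrates how the two interact. It is not EcoNet's model. The room-temperature states, the `idle`/`heat` actions, the transition matrices `B`, the sensor likelihood `A_TEMP`, the preference values and the `energy_weight` parameter are all hypothetical. Each action is scored by its expected free energy, which is the KL divergence between predicted and preferred outcomes (risk) plus the expected sensor entropy (ambiguity). The action with the lowest score is chosen.

```python
import numpy as np

# Hypothetical toy model: 3 room-temperature states, 2 actions, 2 outcome modalities.
STATES = ["cold", "comfortable", "warm"]
ACTIONS = ["idle", "heat"]

# Hypothetical transitions B[a][next, current]; each column sums to 1.
B = {
    "idle": np.array([[1.0, 0.3, 0.0],
                      [0.0, 0.7, 0.5],
                      [0.0, 0.0, 0.5]]),
    "heat": np.array([[0.2, 0.0, 0.0],
                      [0.8, 0.6, 0.0],
                      [0.0, 0.4, 1.0]]),
}

# Hypothetical noisy thermometer A[o, s], assumed 90% accurate in every state.
A_TEMP = np.array([[0.90, 0.05, 0.05],
                   [0.05, 0.90, 0.05],
                   [0.05, 0.05, 0.90]])

# Energy-use outcome {low, high}, assumed to be fixed by the action alone.
ENERGY = {"idle": np.array([1.0, 0.0]), "heat": np.array([0.0, 1.0])}


def softmax(x):
    e = np.exp(x - np.max(x))
    return e / e.sum()


def kl(p, q):
    """KL(p || q) for discrete distributions, skipping entries where p is zero."""
    m = p > 0
    return float(np.sum(p[m] * (np.log(p[m]) - np.log(q[m]))))


def expected_free_energy(s, action, c_temp, c_energy):
    """One-step G = risk (KL to preferred outcomes) + ambiguity (expected sensor entropy)."""
    s_next = B[action] @ s                      # predicted hidden state after acting
    q_temp = A_TEMP @ s_next                    # predicted thermometer reading
    col_entropy = -np.sum(A_TEMP * np.log(A_TEMP), axis=0)
    ambiguity = float(col_entropy @ s_next)
    risk = kl(q_temp, c_temp) + kl(ENERGY[action], c_energy)
    return risk + ambiguity


def select_action(s, energy_weight, gamma=4.0):
    # Hypothetical log-preferences: favour "comfortable", and favour "low" energy use
    # with a tunable strength that can conflict with comfort.
    c_temp = softmax(np.array([-2.0, 2.0, -1.0]))
    c_energy = softmax(np.array([energy_weight, -energy_weight]))
    G = np.array([expected_free_energy(s, a, c_temp, c_energy) for a in ACTIONS])
    q_pi = softmax(-gamma * G)                  # posterior probability of each action
    return ACTIONS[int(np.argmin(G))], q_pi


belief = np.array([0.7, 0.3, 0.0])  # the agent believes the room is probably cold
print(select_action(belief, energy_weight=0.0)[0])  # prints heat (comfort dominates)
print(select_action(belief, energy_weight=3.0)[0])  # prints idle (cost dominates)
```

Raising `energy_weight` sharpens the preference for low energy use until it outweighs the comfort preference. This is one simple way to encode the kind of conflicting goals the abstract describes. The sensor is equally noisy in every state, so ambiguity is the same for both actions here and the choice is driven by risk alone.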

Modeling Sustainable Resource Management using Active Inference

Jun 11, 2024

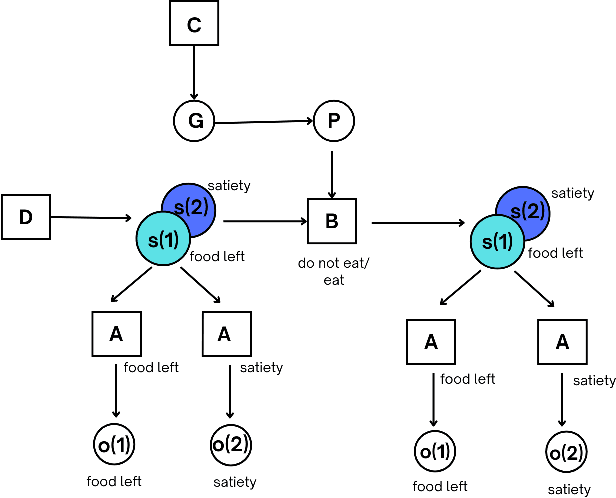

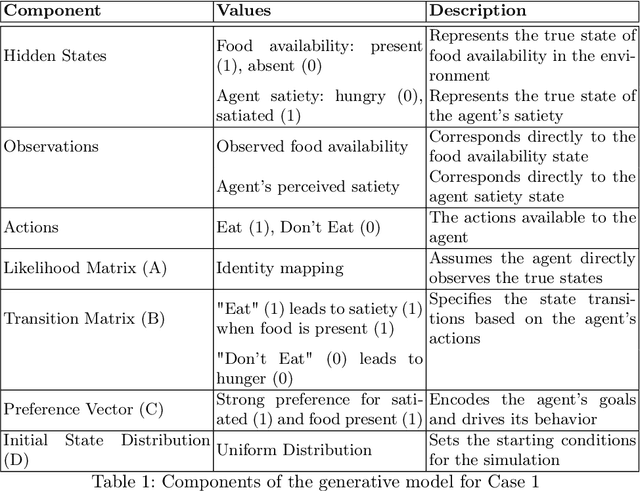

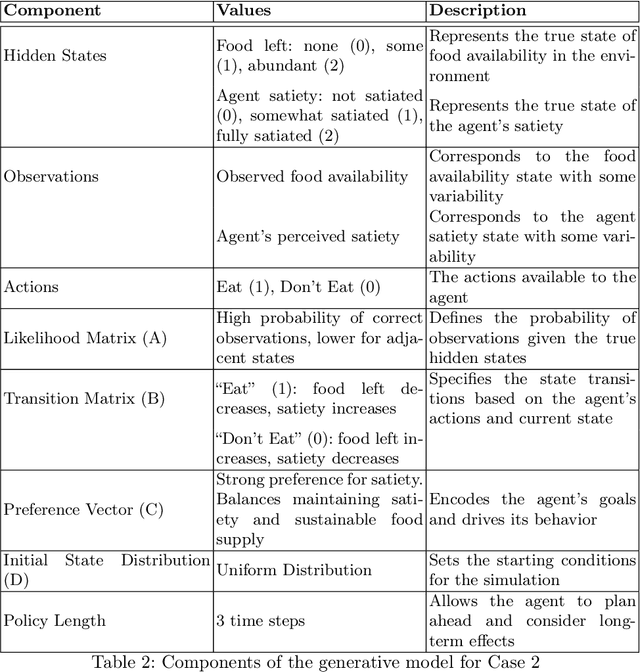

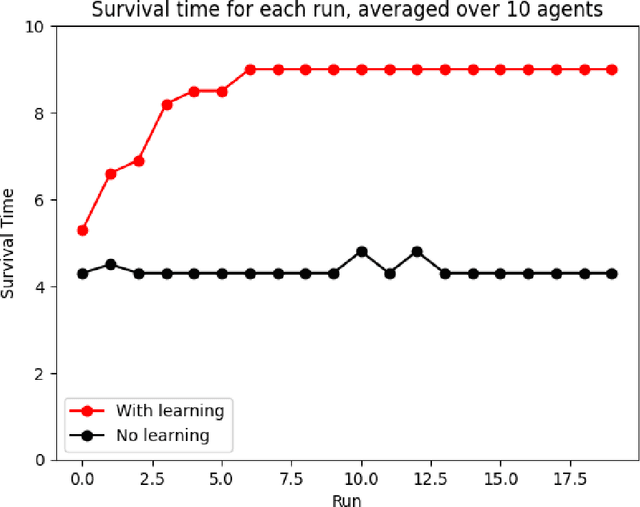

Abstract: Active inference helps us simulate adaptive behavior and decision-making in biological and artificial agents. Building on our previous work exploring the relationship between active inference, well-being, resilience, and sustainability, we present a computational model of an agent learning sustainable resource management strategies in both static and dynamic environments. The agent's behavior emerges from optimizing its own well-being, represented by prior preferences, subject to beliefs about environmental dynamics. In a static environment, the agent learns to consistently consume resources to satisfy its needs. In a dynamic environment where resources deplete and replenish based on the agent's actions, the agent adapts its behavior to balance immediate needs with long-term resource availability. This demonstrates how active inference can give rise to sustainable and resilient behaviors in the face of changing environmental conditions. We discuss the implications of our model, its limitations, and suggest future directions for integrating more complex agent-environment interactions. Our work highlights active inference's potential for understanding and shaping sustainable behaviors.

A Path Towards Legal Autonomy: An interoperable and explainable approach to extracting, transforming, loading and computing legal information using large language models, expert systems and Bayesian networks

Mar 27, 2024

Abstract: Legal autonomy, the lawful activity of artificial intelligence agents, can be achieved in one of two ways: either by imposing constraints on AI actors such as developers, deployers and users, and on AI resources such as data, or by imposing constraints on the range and scope of the impact that AI agents can have on the environment. The latter approach involves encoding extant rules concerning AI-driven devices into the software of the AI agents controlling those devices (e.g., encoding rules that limit the zones of operation of an autonomous drone into the drone's agent software). This is challenging because the approach is only effective given a method of extracting, transforming, loading and computing legal information that is both explainable and legally interoperable, and that enables AI agents to reason about the law. In this paper, we sketch a proof of principle for such a method using large language models (LLMs), expert legal systems known as legal decision paths, and Bayesian networks. We then show how the proposed method could be applied to extant regulation of autonomous cars, such as the California Vehicle Code.

Enactive Artificial Intelligence: Subverting Gender Norms in Robot-Human Interaction

Jan 24, 2023

Abstract: Enactive Artificial Intelligence (eAI) motivates new directions towards gender-inclusive AI. More than a mirror reflecting our values, AI design has a profound impact on shaping the enaction of cultural identities. The traditionally unrepresentative, white, cisgender, heterosexual dominant narratives are partial, and thereby active vehicles of social marginalisation. Drawing from enactivism, the paper first characterises AI design as a cultural practice, which is then specified in terms of feminist technoscience principles, i.e. how gender and other embodied identity markers are entangled in AI. These principles are then discussed in the specific case of feminist human-robot interaction. The paper then stipulates the conditions for eAI: an eAI robot is a robot that (1) plays a cultural role in individual and social identity, where (2) this role takes the form of dynamical human-robot interaction, and (3) that interaction is embodied. Finally, drawing from eAI, the paper offers guidelines for (I) gender-inclusive eAI and (II) subverting existing gender norms of robot design.
