Mahault Albarracin

EcoNet: Multiagent Planning and Control Of Household Energy Resources Using Active Inference

Dec 14, 2025

Abstract: Advances in automated systems afford new opportunities for intelligent management of energy at household, local area, and utility scales. Home Energy Management Systems (HEMS) can play a role by optimizing the schedule and use of household energy devices and resources. One challenge is that the goals of a household can be complex and conflicting. For example, a household might wish to reduce energy costs and grid-associated greenhouse gas emissions, yet keep room temperatures comfortable. Another challenge is that an intelligent HEMS agent must make decisions under uncertainty. An agent must plan actions into the future, but weather and solar generation forecasts, for example, provide inherently uncertain estimates of future conditions. This paper introduces EcoNet, a Bayesian approach to household and neighborhood energy management that is based on active inference. The aim is to improve energy management and coordination, while accommodating uncertainties and taking into account potentially conditional and conflicting goals and preferences. Simulation results are presented and discussed.

A Computational Model of Inclusive Pedagogy: From Understanding to Application

May 02, 2025

Abstract: Human education transcends mere knowledge transfer; it relies on co-adaptation dynamics -- the mutual adjustment of teaching and learning strategies between agents. Despite its centrality, computational models of co-adaptive teacher-student interactions (T-SI) remain underdeveloped. We argue that this gap impedes Educational Science in testing and scaling contextual insights across diverse settings, and limits the potential of Machine Learning systems, which struggle to emulate and adaptively support human learning processes. To address this, we present a computational T-SI model that integrates contextual insights on human education into a testable framework. We use the model to evaluate diverse T-SI strategies in a realistic synthetic classroom setting, simulating student groups with unequal access to sensory information. Results show that strategies incorporating co-adaptation principles (e.g., bidirectional agency) outperform unilateral approaches (i.e., where only the teacher or the student is active), improving the learning outcomes for all learning types. Beyond the testing and scaling of context-dependent educational insights, our model enables hypothesis generation in controlled yet adaptable environments. This work bridges non-computational theories of human education with scalable, inclusive AI in Education systems, providing a foundation for equitable technologies that dynamically adapt to learner needs.

Navigation under uncertainty: Trajectory prediction and occlusion reasoning with switching dynamical systems

Oct 14, 2024

Abstract: Predicting future trajectories of nearby objects, especially under occlusion, is a crucial task in autonomous driving and safe robot navigation. Prior works typically neglect to maintain uncertainty about occluded objects and only predict trajectories of observed objects using high-capacity models such as Transformers trained on large datasets. While these approaches are effective in standard scenarios, they can struggle to generalize to long-tail, safety-critical scenarios. In this work, we explore a conceptual framework unifying trajectory prediction and occlusion reasoning under the same class of structured probabilistic generative model, namely, switching dynamical systems. We then present some initial experiments illustrating its capabilities using the Waymo Open Dataset.

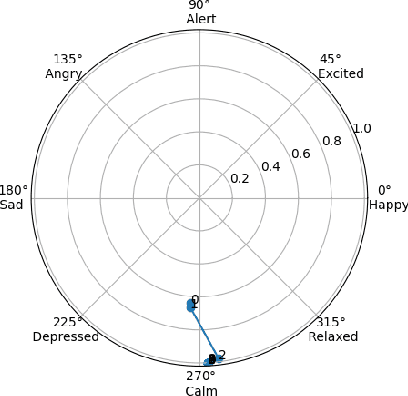

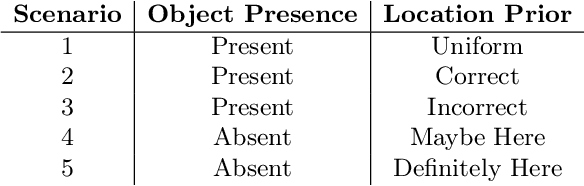

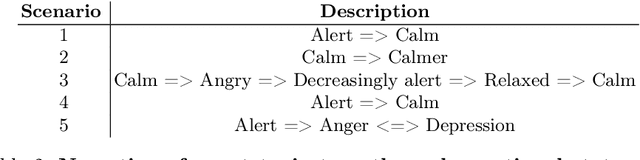

Free Energy in a Circumplex Model of Emotion

Jul 02, 2024

Abstract: Previous active inference accounts of emotion translate fluctuations in free energy to a sense of emotion, mainly focusing on valence. However, in affective science, emotions are often represented as multi-dimensional. In this paper, we propose to adopt a Circumplex Model of emotion by mapping emotions into a two-dimensional spectrum of valence and arousal. We show how one can derive a valence and arousal signal from an agent's expected free energy, relating arousal to the entropy of posterior beliefs and valence to utility minus expected utility. Under this formulation, we simulate artificial agents engaged in a search task. We show that the manipulation of priors and object presence results in commonsense variability in emotional states.
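The abstract's mapping (arousal from the entropy of posterior beliefs, valence from utility minus expected utility) can be illustrated with a minimal sketch. The abstract does not give the exact definitions, so the following are assumptions made for illustration only: `arousal` is the Shannon entropy of a categorical posterior over hidden states, utility is the log prior preference of the outcome actually observed, and expected utility is the average log preference under the agent's predicted outcome distribution. The function and parameter names are hypothetical.

```python
import numpy as np

def arousal(posterior):
    """Shannon entropy (in nats) of a categorical posterior over hidden states."""
    p = np.asarray(posterior, dtype=float)
    p = p / p.sum()                      # normalise defensively
    nz = p[p > 0]                        # treat 0 * log(0) as 0
    return float(-(nz * np.log(nz)).sum())

def valence(log_preferences, predicted_outcomes, observed_index):
    """Utility of the observed outcome minus the utility the agent expected.

    Assumption: utility is the log prior preference over outcomes.
    """
    log_c = np.asarray(log_preferences, dtype=float)
    q_o = np.asarray(predicted_outcomes, dtype=float)
    utility = log_c[observed_index]
    expected_utility = float(np.dot(q_o, log_c))
    return float(utility - expected_utility)

# Hypothetical three-outcome example: outcome 0 is strongly preferred.
log_c = np.log([0.7, 0.2, 0.1])
q_o = [0.5, 0.3, 0.2]
good_surprise = valence(log_c, q_o, observed_index=0)   # better than expected
bad_surprise = valence(log_c, q_o, observed_index=2)    # worse than expected
```

Under these assumptions, an agent that is maximally uncertain about its hidden state has maximal arousal, and an outcome that is better than expected yields positive valence.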

Belief sharing: a blessing or a curse

Jul 02, 2024

Abstract: When collaborating with multiple parties, communicating relevant information is of utmost importance to efficiently completing the tasks at hand. Under active inference, communication can be cast as sharing beliefs between free-energy minimizing agents, where one agent's beliefs get transformed into an observation modality for the other. However, the best approach for transforming beliefs into observations remains an open question. In this paper, we demonstrate that naively sharing posterior beliefs can give rise to the negative social dynamics of echo chambers and self-doubt. We propose an alternate belief sharing strategy which mitigates these issues.

Modeling Sustainable Resource Management using Active Inference

Jun 11, 2024

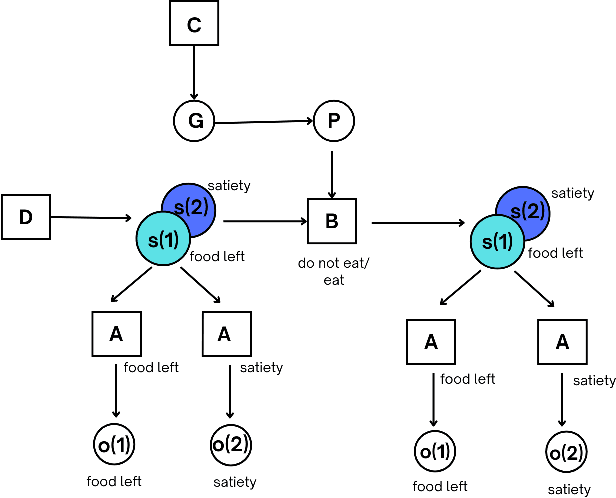

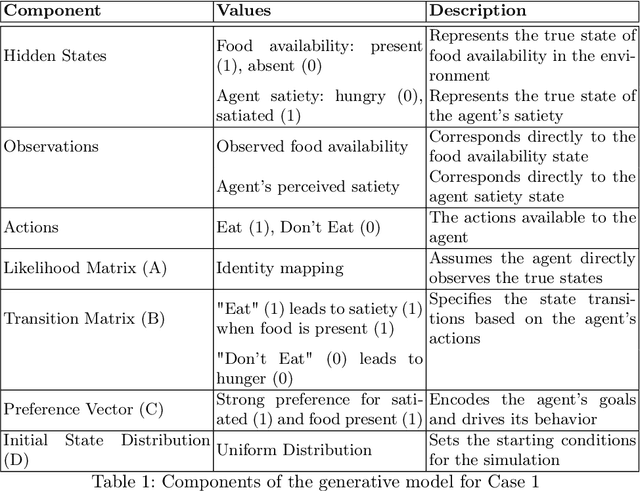

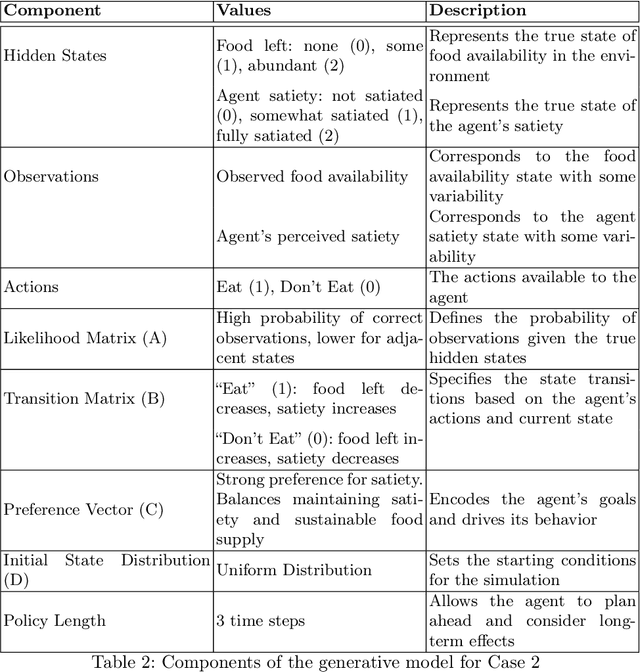

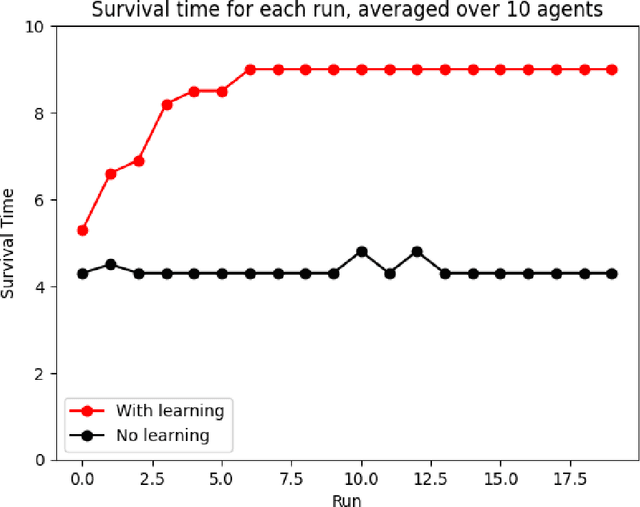

Abstract: Active inference helps us simulate adaptive behavior and decision-making in biological and artificial agents. Building on our previous work exploring the relationship between active inference, well-being, resilience, and sustainability, we present a computational model of an agent learning sustainable resource management strategies in both static and dynamic environments. The agent's behavior emerges from optimizing its own well-being, represented by prior preferences, subject to beliefs about environmental dynamics. In a static environment, the agent learns to consistently consume resources to satisfy its needs. In a dynamic environment where resources deplete and replenish based on the agent's actions, the agent adapts its behavior to balance immediate needs with long-term resource availability. This demonstrates how active inference can give rise to sustainable and resilient behaviors in the face of changing environmental conditions. We discuss the implications of our model, its limitations, and suggest future directions for integrating more complex agent-environment interactions. Our work highlights active inference's potential for understanding and shaping sustainable behaviors.

A Path Towards Legal Autonomy: An interoperable and explainable approach to extracting, transforming, loading and computing legal information using large language models, expert systems and Bayesian networks

Mar 27, 2024

Abstract: Legal autonomy - the lawful activity of artificial intelligence agents - can be achieved in one of two ways. It can be achieved either by imposing constraints on AI actors such as developers, deployers and users, and on AI resources such as data, or by imposing constraints on the range and scope of the impact that AI agents can have on the environment. The latter approach involves encoding extant rules concerning AI driven devices into the software of AI agents controlling those devices (e.g., encoding rules about limitations on zones of operations into the agent software of an autonomous drone device). This is a challenge since the effectivity of such an approach requires a method of extracting, loading, transforming and computing legal information that would be both explainable and legally interoperable, and that would enable AI agents to reason about the law. In this paper, we sketch a proof of principle for such a method using large language models (LLMs), expert legal systems known as legal decision paths, and Bayesian networks. We then show how the proposed method could be applied to extant regulation in matters of autonomous cars, such as the California Vehicle Code.

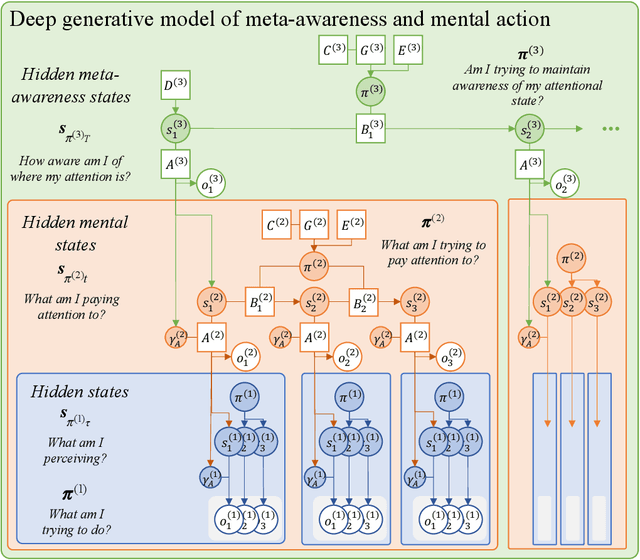

Designing explainable artificial intelligence with active inference: A framework for transparent introspection and decision-making

Jun 06, 2023

Abstract: This paper investigates the prospect of developing human-interpretable, explainable artificial intelligence (AI) systems based on active inference and the free energy principle. We first provide a brief overview of active inference, and in particular, of how it applies to the modeling of decision-making, introspection, as well as the generation of overt and covert actions. We then discuss how active inference can be leveraged to design explainable AI systems, namely, by allowing us to model core features of "introspective" processes and by generating useful, human-interpretable models of the processes involved in decision-making. We propose an architecture for explainable AI systems using active inference. This architecture foregrounds the role of an explicit hierarchical generative model, the operation of which enables the AI system to track and explain the factors that contribute to its own decisions, and whose structure is designed to be interpretable and auditable by human users. We outline how this architecture can integrate diverse sources of information to make informed decisions in an auditable manner, mimicking or reproducing aspects of human-like consciousness and introspection. Finally, we discuss the implications of our findings for future research in AI, and the potential ethical considerations of developing AI systems with (the appearance of) introspective capabilities.

Designing Ecosystems of Intelligence from First Principles

Dec 02, 2022

Abstract: This white paper lays out a vision of research and development in the field of artificial intelligence for the next decade (and beyond). Its denouement is a cyber-physical ecosystem of natural and synthetic sense-making, in which humans are integral participants -- what we call "shared intelligence". This vision is premised on active inference, a formulation of adaptive behavior that can be read as a physics of intelligence, and which inherits from the physics of self-organization. In this context, we understand intelligence as the capacity to accumulate evidence for a generative model of one's sensed world -- also known as self-evidencing. Formally, this corresponds to maximizing (Bayesian) model evidence, via belief updating over several scales: i.e., inference, learning, and model selection. Operationally, this self-evidencing can be realized via (variational) message passing or belief propagation on a factor graph. Crucially, active inference foregrounds an existential imperative of intelligent systems; namely, curiosity or the resolution of uncertainty. This same imperative underwrites belief sharing in ensembles of agents, in which certain aspects (i.e., factors) of each agent's generative world model provide a common ground or frame of reference. Active inference plays a foundational role in this ecology of belief sharing -- leading to a formal account of collective intelligence that rests on shared narratives and goals. We also consider the kinds of communication protocols that must be developed to enable such an ecosystem of intelligences and motivate the development of a shared hyper-spatial modeling language and transaction protocol, as a first -- and key -- step towards such an ecology.

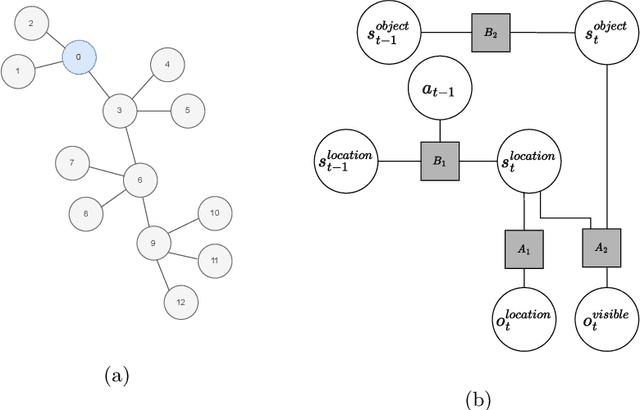

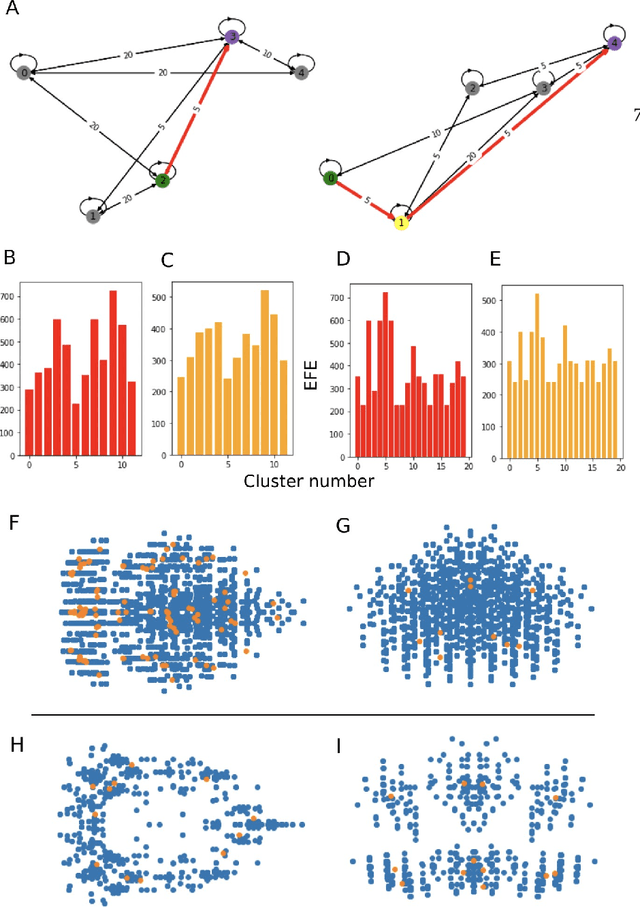

Efficient search of active inference policy spaces using k-means

Sep 07, 2022

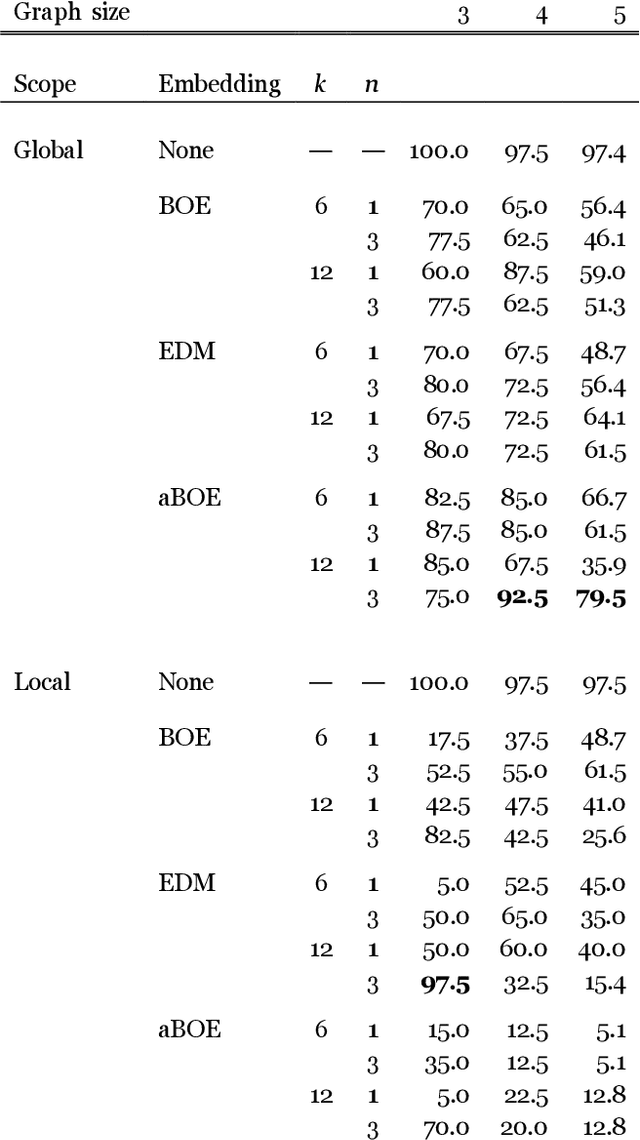

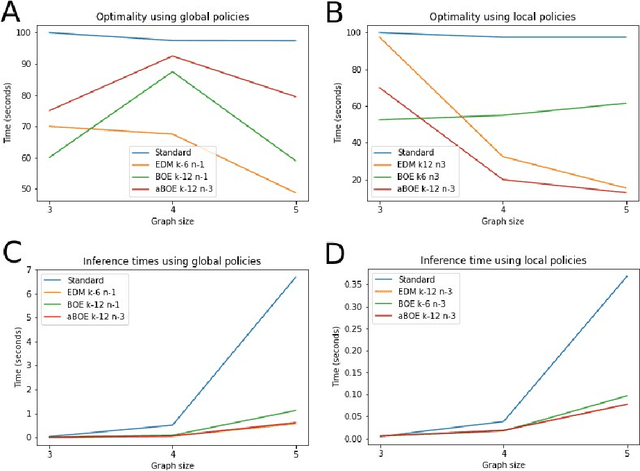

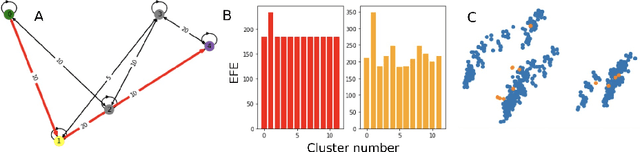

Abstract: We develop an approach to policy selection in active inference that allows us to efficiently search large policy spaces by mapping each policy to its embedding in a vector space. We sample the expected free energy of representative points in the space, then perform a more thorough policy search around the most promising point in this initial sample. We consider various approaches to creating the policy embedding space, and propose using k-means clustering to select representative points. We apply our technique to a goal-oriented graph-traversal problem, for which naive policy selection is intractable for even moderately large graphs.
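The two-stage search described in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: policy embeddings are taken as given (the abstract does not specify how they are built), the expected free energy is a caller-supplied function `efe` of the policy index, the "more thorough search" is an exhaustive pass over the best representative's cluster, and k-means uses a deterministic farthest-first initialisation chosen here for reproducibility. All names are hypothetical.

```python
import numpy as np

def kmeans(points, k, iters=20):
    """Plain Lloyd's k-means with deterministic farthest-first initialisation."""
    points = np.asarray(points, dtype=float)
    centers = [points[0]]
    for _ in range(1, k):
        # Distance from every point to its nearest already-chosen center.
        nearest = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[nearest.argmax()])
    centers = np.array(centers)
    for _ in range(iters):
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = points[labels == j]
            if len(members):             # keep the old center if a cluster empties
                centers[j] = members.mean(axis=0)
    dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return centers, dists.argmin(axis=1)

def select_policy(embeddings, efe, k):
    """Score one representative per cluster, then search the best cluster fully."""
    embeddings = np.asarray(embeddings, dtype=float)
    centers, labels = kmeans(embeddings, k)
    representatives = {}
    for j in range(k):
        idx = np.flatnonzero(labels == j)
        if idx.size:
            # Representative = the actual policy closest to the centroid.
            d = np.linalg.norm(embeddings[idx] - centers[j], axis=1)
            representatives[j] = int(idx[d.argmin()])
    best_cluster = min(representatives, key=lambda j: efe(representatives[j]))
    candidates = np.flatnonzero(labels == best_cluster)
    return int(min(candidates, key=efe))

# Hypothetical toy problem: nine policies embedded in three well-separated groups.
toy_embeddings = [[0, 0], [0, 1], [1, 0],
                  [10, 10], [10, 11], [11, 10],
                  [20, 0], [20, 1], [21, 0]]
toy_efe = [5.0, 6.0, 7.0, 3.0, 1.0, 2.0, 9.0, 8.0, 4.0]   # lower is better
chosen = select_policy(toy_embeddings, lambda i: toy_efe[i], k=3)
```

In this toy case the search evaluates three representatives plus the three policies in the best cluster, instead of all nine policies. The result is only as good as the embedding: if policies with low expected free energy are not close to a low-scoring representative, the search can miss them.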
