Karl Friston

Active inference and artificial reasoning

Dec 24, 2025

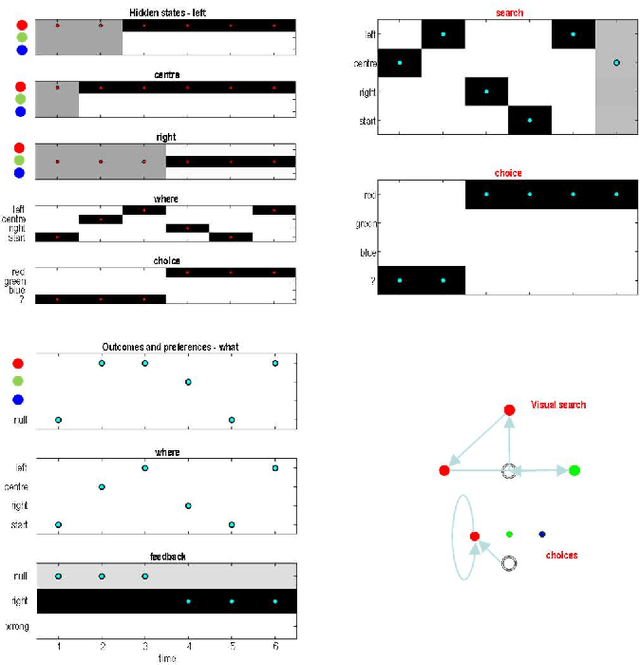

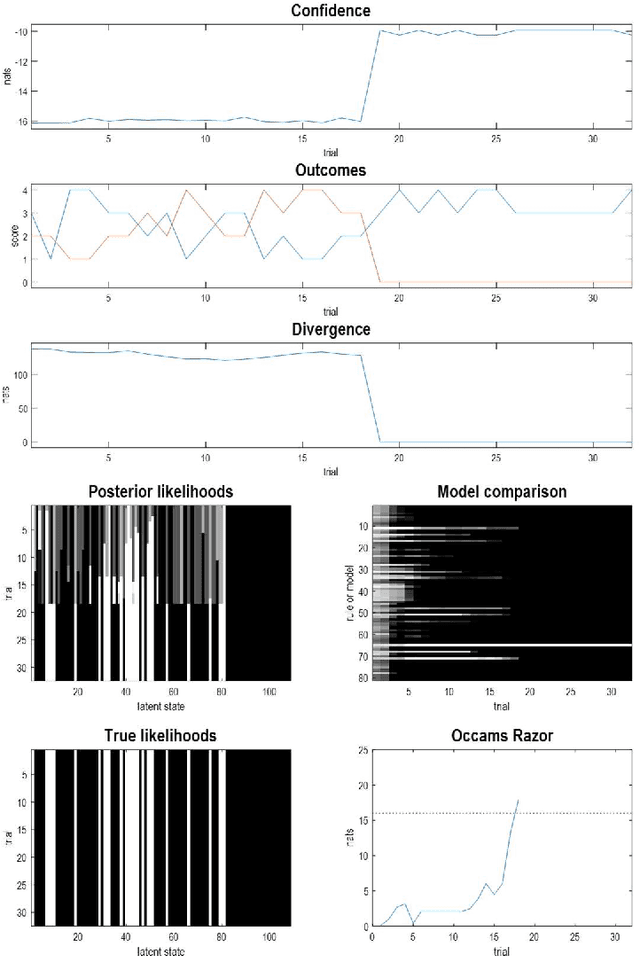

Abstract: This technical note considers the sampling of outcomes that provide the greatest amount of information about the structure of underlying world models. This generalisation furnishes a principled approach to structure learning under a plausible set of generative models or hypotheses. In active inference, policies - i.e., combinations of actions - are selected based on their expected free energy, which comprises expected information gain and value. Information gain corresponds to the KL divergence between predictive posteriors with, and without, the consequences of action. Posteriors over models can be evaluated quickly and efficiently using Bayesian Model Reduction, based upon accumulated posterior beliefs about model parameters. The ensuing information gain can then be used to select actions that disambiguate among alternative models, in the spirit of optimal experimental design. We illustrate this kind of active selection or reasoning using partially observed discrete models; namely, a 'three-ball' paradigm used previously to describe artificial insight and 'aha moments' via (synthetic) introspection or sleep. We focus on the sample efficiency afforded by seeking outcomes that resolve the greatest uncertainty about the world model, under which outcomes are generated.
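The idea of choosing actions that disambiguate among alternative models can be illustrated with a minimal sketch. This is not the paper's implementation: it uses the textbook expected information gain from Bayesian experimental design (the mutual information between model and outcome), and the function name, the two-model prior and the two candidate actions are all hypothetical values chosen for illustration.

```python
import numpy as np

def expected_information_gain(prior, likelihood):
    """Mutual information between model and outcome for one action.

    prior:      shape (M,), beliefs over M candidate models (assumed input).
    likelihood: shape (M, O), p(outcome | model) under the action (assumed input).
    """
    prior = np.asarray(prior, dtype=float)
    likelihood = np.asarray(likelihood, dtype=float)
    predictive = prior @ likelihood          # p(outcome), marginalised over models
    joint = prior[:, None] * likelihood      # p(model, outcome)
    with np.errstate(divide="ignore", invalid="ignore"):
        # Terms with zero joint probability contribute nothing to the sum.
        log_ratio = np.where(joint > 0, np.log(likelihood / predictive[None, :]), 0.0)
    return float(np.sum(joint * log_ratio))

# Hypothetical example: two equally plausible models, two candidate actions.
prior = [0.5, 0.5]
actions = {
    "uninformative": [[0.5, 0.5], [0.5, 0.5]],  # both models predict the same outcomes
    "diagnostic":    [[1.0, 0.0], [0.0, 1.0]],  # models predict different outcomes
}
gains = {name: expected_information_gain(prior, lik) for name, lik in actions.items()}
best_action = max(gains, key=gains.get)
```

In this toy case the action whose outcomes differ between the two models carries the larger expected information gain, so it is the one selected.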

EcoNet: Multiagent Planning and Control Of Household Energy Resources Using Active Inference

Dec 14, 2025

Abstract: Advances in automated systems afford new opportunities for intelligent management of energy at household, local area, and utility scales. Home Energy Management Systems (HEMS) can play a role by optimizing the schedule and use of household energy devices and resources. One challenge is that the goals of a household can be complex and conflicting. For example, a household might wish to reduce energy costs and grid-associated greenhouse gas emissions, yet keep room temperatures comfortable. Another challenge is that an intelligent HEMS agent must make decisions under uncertainty. An agent must plan actions into the future, but weather and solar generation forecasts, for example, provide inherently uncertain estimates of future conditions. This paper introduces EcoNet, a Bayesian approach to household and neighborhood energy management that is based on active inference. The aim is to improve energy management and coordination, while accommodating uncertainties and taking into account potentially conditional and conflicting goals and preferences. Simulation results are presented and discussed.

AXIOM: Learning to Play Games in Minutes with Expanding Object-Centric Models

May 30, 2025

Abstract: Current deep reinforcement learning (DRL) approaches achieve state-of-the-art performance in various domains, but struggle with data efficiency compared to human learning, which leverages core priors about objects and their interactions. Active inference offers a principled framework for integrating sensory information with prior knowledge to learn a world model and quantify the uncertainty of its own beliefs and predictions. However, active inference models are usually crafted for a single task with bespoke knowledge, so they lack the domain flexibility typical of DRL approaches. To bridge this gap, we propose a novel architecture that integrates a minimal yet expressive set of core priors about object-centric dynamics and interactions to accelerate learning in low-data regimes. The resulting approach, which we call AXIOM, combines the usual data efficiency and interpretability of Bayesian approaches with the across-task generalization usually associated with DRL. AXIOM represents scenes as compositions of objects, whose dynamics are modeled as piecewise linear trajectories that capture sparse object-object interactions. The structure of the generative model is expanded online by growing and learning mixture models from single events and periodically refined through Bayesian model reduction to induce generalization. AXIOM masters various games within only 10,000 interaction steps, with both a small number of parameters compared to DRL, and without the computational expense of gradient-based optimization.

Self-orthogonalizing attractor neural networks emerging from the free energy principle

May 28, 2025

Abstract: Attractor dynamics are a hallmark of many complex systems, including the brain. Understanding how such self-organizing dynamics emerge from first principles is crucial for advancing our understanding of neuronal computations and the design of artificial intelligence systems. Here we formalize how attractor networks emerge from the free energy principle applied to a universal partitioning of random dynamical systems. Our approach obviates the need for explicitly imposed learning and inference rules and identifies emergent, but efficient and biologically plausible inference and learning dynamics for such self-organizing systems. These result in a collective, multi-level Bayesian active inference process. Attractors on the free energy landscape encode prior beliefs; inference integrates sensory data into posterior beliefs; and learning fine-tunes couplings to minimize long-term surprise. Analytically and via simulations, we establish that the proposed networks favor approximately orthogonalized attractor representations, a consequence of simultaneously optimizing predictive accuracy and model complexity. These attractors efficiently span the input subspace, enhancing generalization and the mutual information between hidden causes and observable effects. Furthermore, while random data presentation leads to symmetric and sparse couplings, sequential data fosters asymmetric couplings and non-equilibrium steady-state dynamics, offering a natural extension to conventional Boltzmann Machines. Our findings offer a unifying theory of self-organizing attractor networks, providing novel insights for AI and neuroscience.

Robust Decision-Making Via Free Energy Minimization

Mar 17, 2025

Abstract: Despite their groundbreaking performance, state-of-the-art autonomous agents can misbehave when training and environmental conditions become inconsistent, with minor mismatches leading to undesirable behaviors or even catastrophic failures. Robustness towards these training/environment ambiguities is a core requirement for intelligent agents and its fulfillment is a long-standing challenge when deploying agents in the real world. Here, departing from mainstream views seeking robustness through training, we introduce DR-FREE, a free energy model that installs this core property by design. It directly wires robustness into the agent decision-making mechanisms via free energy minimization. By combining a robust extension of the free energy principle with a novel resolution engine, DR-FREE returns a policy that is optimal-yet-robust against ambiguity. Moreover, for the first time, it reveals the mechanistic role of ambiguity on optimal decisions and requisite Bayesian belief updating. We evaluate DR-FREE on an experimental testbed involving real rovers navigating an ambiguous environment filled with obstacles. Across all the experiments, DR-FREE enables robots to successfully navigate towards their goal even when, in contrast, standard free energy minimizing agents that do not use DR-FREE fail. In short, DR-FREE can tackle scenarios that elude previous methods: this milestone may inspire both deployment in multi-agent settings and, at a perhaps deeper level, the quest for a biologically plausible explanation of how natural agents - with little or no training - survive in capricious environments.

Brain in the Dark: Design Principles for Neuromimetic Inference under the Free Energy Principle

Feb 13, 2025

Abstract: Deep learning has revolutionised artificial intelligence (AI) by enabling automatic feature extraction and function approximation from raw data. However, it faces challenges such as a lack of out-of-distribution generalisation, catastrophic forgetting and poor interpretability. In contrast, biological neural networks, such as those in the human brain, do not suffer from these issues, inspiring AI researchers to explore neuromimetic deep learning, which aims to replicate brain mechanisms within AI models. A foundational theory for this approach is the Free Energy Principle (FEP), which, despite its potential, is often considered too complex to understand and implement in AI as it requires an interdisciplinary understanding across a variety of fields. This paper seeks to demystify the FEP and provide a comprehensive framework for designing neuromimetic models with human-like perception capabilities. We present a roadmap for implementing these models and a PyTorch code repository for applying FEP in a predictive coding network.

From pixels to planning: scale-free active inference

Jul 27, 2024

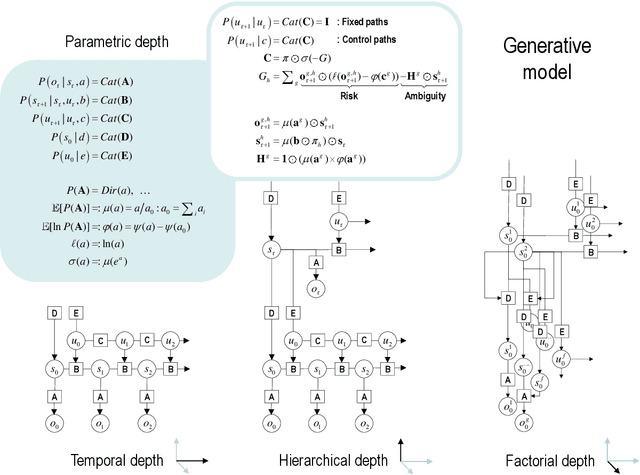

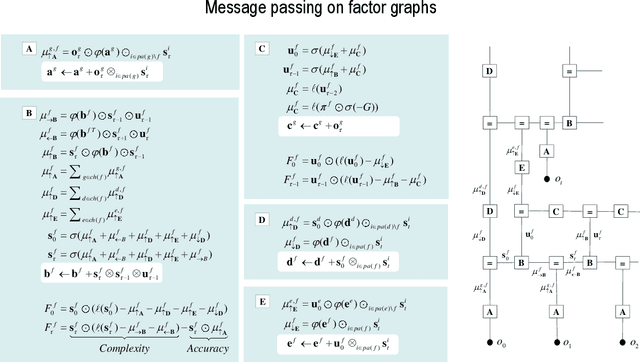

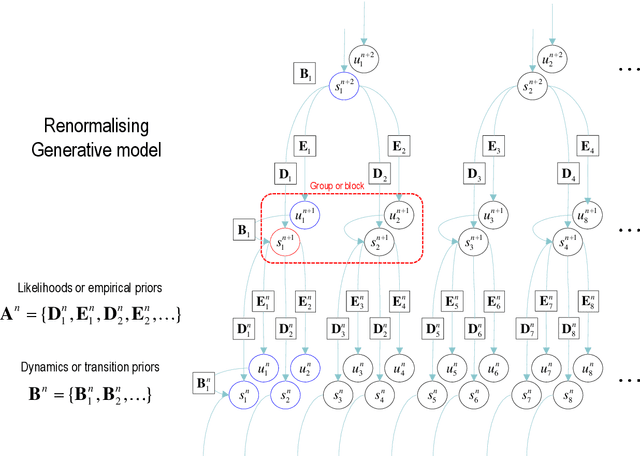

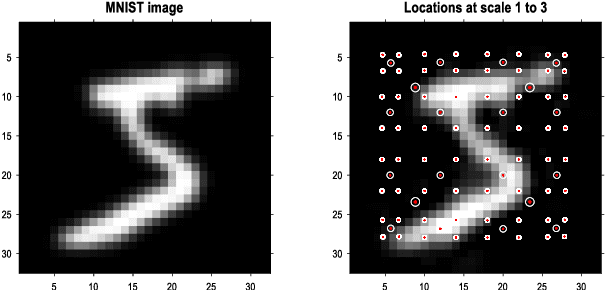

Abstract: This paper describes a discrete state-space model -- and accompanying methods -- for generative modelling. This model generalises partially observed Markov decision processes to include paths as latent variables, rendering it suitable for active inference and learning in a dynamic setting. Specifically, we consider deep or hierarchical forms using the renormalisation group. The ensuing renormalising generative models (RGM) can be regarded as discrete homologues of deep convolutional neural networks or continuous state-space models in generalised coordinates of motion. By construction, these scale-invariant models can be used to learn compositionality over space and time, furnishing models of paths or orbits; i.e., events of increasing temporal depth and itinerancy. This technical note illustrates the automatic discovery, learning and deployment of RGMs using a series of applications. We start with image classification and then consider the compression and generation of movies and music. Finally, we apply the same variational principles to the learning of Atari-like games.

Localising the Seizure Onset Zone from Single-Pulse Electrical Stimulation Responses with a Transformer

Mar 29, 2024

Abstract: Epilepsy is one of the most common neurological disorders, and many patients require surgical intervention when medication fails to control seizures. For effective surgical outcomes, precise localisation of the epileptogenic focus - often approximated through the Seizure Onset Zone (SOZ) - is critical yet remains a challenge. Active probing through electrical stimulation is already standard clinical practice for identifying epileptogenic areas. This paper advances the application of deep learning for SOZ localisation using Single Pulse Electrical Stimulation (SPES) responses. We achieve this by introducing Transformer models that incorporate cross-channel attention. We evaluate these models on held-out patient test sets to assess their generalisability to unseen patients and electrode placements. Our study makes three key contributions: Firstly, we implement an existing deep learning model to compare two SPES analysis paradigms - namely, divergent and convergent. These paradigms evaluate outward and inward effective connections, respectively. Our findings reveal a notable improvement in moving from a divergent (AUROC: 0.574) to a convergent approach (AUROC: 0.666), marking the first application of the latter in this context. Secondly, we demonstrate the efficacy of the Transformer models in handling heterogeneous electrode placements, increasing the AUROC to 0.730. Lastly, by incorporating inter-trial variability, we further refine the Transformer models, with an AUROC of 0.745, yielding more consistent predictions across patients. These advancements provide a deeper insight into SOZ localisation and represent a significant step in modelling patient-specific intracranial EEG electrode placements in SPES. Future work will explore integrating these models into clinical decision-making processes to bridge the gap between deep learning research and practical healthcare applications.

Brain-Inspired Computational Intelligence via Predictive Coding

Aug 15, 2023

Abstract: Artificial intelligence (AI) is rapidly becoming one of the key technologies of this century. The majority of results in AI thus far have been achieved using deep neural networks trained with the error backpropagation learning algorithm. However, the ubiquitous adoption of this approach has highlighted some important limitations such as substantial computational cost, difficulty in quantifying uncertainty, lack of robustness, unreliability, and biological implausibility. It is possible that addressing these limitations may require schemes that are inspired and guided by neuroscience theories. One such theory, called predictive coding (PC), has shown promising performance in machine intelligence tasks, exhibiting exciting properties that make it potentially valuable for the machine learning community: PC can model information processing in different brain areas, can be used in cognitive control and robotics, and has a solid mathematical grounding in variational inference, offering a powerful inversion scheme for a specific class of continuous-state generative models. With the hope of foregrounding research in this direction, we survey the literature that has contributed to this perspective, highlighting the many ways that PC might play a role in the future of machine learning and computational intelligence at large.

Hierarchical generative modelling for autonomous robots

Aug 15, 2023

Abstract: Humans can produce complex whole-body motions when interacting with their surroundings, by planning, executing and combining individual limb movements. We investigated this fundamental aspect of motor control in the setting of autonomous robotic operations. We approach this problem by hierarchical generative modelling equipped with multi-level planning, for autonomous task completion, that mimics the deep temporal architecture of human motor control. Here, temporal depth refers to the nested time scales at which successive levels of a forward or generative model unfold; for example, delivering an object requires a global plan to contextualise the fast coordination of multiple local movements of limbs. This separation of temporal scales also motivates robotics and control. Specifically, to achieve versatile sensorimotor control, it is advantageous to hierarchically structure the planning and low-level motor control of individual limbs. We use numerical and physical simulation to conduct experiments and to establish the efficacy of this formulation. Using a hierarchical generative model, we show how a humanoid robot can autonomously complete a complex task that necessitates a holistic use of locomotion, manipulation, and grasping. Specifically, we demonstrate the ability of a humanoid robot that can retrieve and transport a box, open and walk through a door to reach the destination, approach and kick a football, while showing robust performance in the presence of body damage and ground irregularities. Our findings demonstrated the effectiveness of using human-inspired motor control algorithms, and our method provides a viable hierarchical architecture for the autonomous completion of challenging goal-directed tasks.
