Mehran H. Bazargani

Brain in the Dark: Design Principles for Neuromimetic Inference under the Free Energy Principle

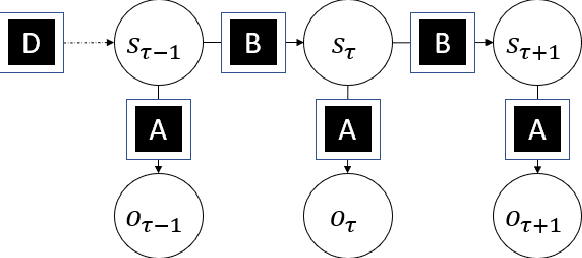

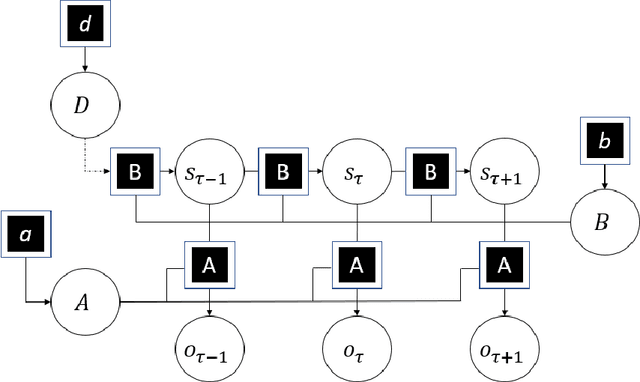

Feb 13, 2025

Abstract: Deep learning has revolutionised artificial intelligence (AI) by enabling automatic feature extraction and function approximation from raw data. However, it faces challenges such as a lack of out-of-distribution generalisation, catastrophic forgetting and poor interpretability. In contrast, biological neural networks, such as those in the human brain, do not suffer from these issues, inspiring AI researchers to explore neuromimetic deep learning, which aims to replicate brain mechanisms within AI models. A foundational theory for this approach is the Free Energy Principle (FEP), which, despite its potential, is often considered too complex to understand and implement in AI because it requires an interdisciplinary understanding across a variety of fields. This paper seeks to demystify the FEP and provide a comprehensive framework for designing neuromimetic models with human-like perception capabilities. We present a roadmap for implementing these models and a PyTorch code repository for applying the FEP in a predictive coding network.

Brain in the Dark: Design Principles for Neuro-mimetic Learning and Inference

Jul 14, 2023

Abstract: Even though the brain operates in pure darkness within the skull, it can infer the most likely causes of its sensory input. An approach to modelling this inference is to assume that the brain has a generative model of the world, which it can invert to infer the hidden causes behind its sensory stimuli, that is, perception. This assumption raises key questions: how to formulate the problem of designing brain-inspired generative models, how to invert them for the tasks of inference and learning, what is the appropriate loss function to be optimised, and, most importantly, what are the different choices of mean field approximation (MFA) and their implications for variational inference (VI).
