Inês Hipólito

Artificial Intelligence (AI) and the Relationship between Agency, Autonomy, and Moral Patiency

Apr 11, 2025

Abstract: The proliferation of Artificial Intelligence (AI) systems exhibiting complex and seemingly agentive behaviours necessitates a critical philosophical examination of their agency, autonomy, and moral status. In this paper we undertake a systematic analysis of the differences between basic, autonomous, and moral agency in artificial systems. We argue that while current AI systems are highly sophisticated, they lack genuine agency and autonomy because: they operate within rigid boundaries of pre-programmed objectives rather than exhibiting true goal-directed behaviour within their environment; they cannot authentically shape their engagement with the world; and they lack the critical self-reflection and autonomy competencies required for full autonomy. Nonetheless, we do not rule out the possibility of future systems that could achieve a limited form of artificial moral agency without consciousness through hybrid approaches to ethical decision-making. This leads us to suggest, by appealing to the necessity of consciousness for moral patiency, that such non-conscious AMAs might represent a case that challenges traditional assumptions about the necessary connection between moral agency and moral patiency.

Designing explainable artificial intelligence with active inference: A framework for transparent introspection and decision-making

Jun 06, 2023

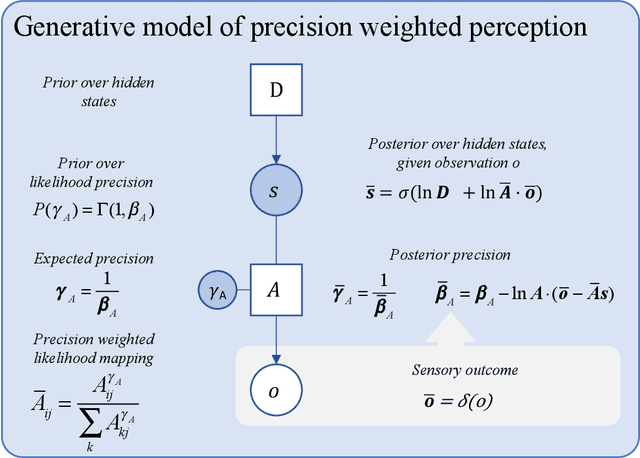

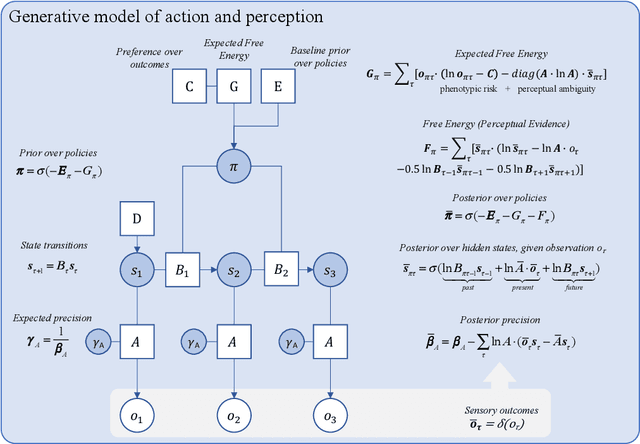

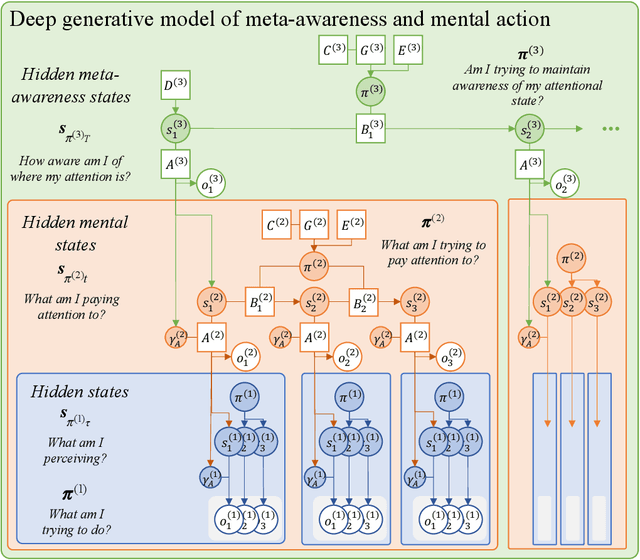

Abstract: This paper investigates the prospect of developing human-interpretable, explainable artificial intelligence (AI) systems based on active inference and the free energy principle. We first provide a brief overview of active inference, and in particular, of how it applies to the modeling of decision-making, introspection, as well as the generation of overt and covert actions. We then discuss how active inference can be leveraged to design explainable AI systems, namely, by allowing us to model core features of "introspective" processes and by generating useful, human-interpretable models of the processes involved in decision-making. We propose an architecture for explainable AI systems using active inference. This architecture foregrounds the role of an explicit hierarchical generative model, the operation of which enables the AI system to track and explain the factors that contribute to its own decisions, and whose structure is designed to be interpretable and auditable by human users. We outline how this architecture can integrate diverse sources of information to make informed decisions in an auditable manner, mimicking or reproducing aspects of human-like consciousness and introspection. Finally, we discuss the implications of our findings for future research in AI, and the potential ethical considerations of developing AI systems with (the appearance of) introspective capabilities.

Rejecting Cognitivism: Computational Phenomenology for Deep Learning

Feb 16, 2023

Abstract: We propose a non-representationalist framework for deep learning relying on a novel method: computational phenomenology, a dialogue between the first-person perspective (relying on phenomenology) and the mechanisms of computational models. We thereby reject the modern cognitivist interpretation of deep learning, according to which artificial neural networks encode representations of external entities. This interpretation mainly relies on neuro-representationalism, a position that combines a strong ontological commitment towards scientific theoretical entities and the idea that the brain operates on symbolic representations of these entities. We proceed as follows: after offering a review of cognitivism and neuro-representationalism in the field of deep learning, we first elaborate a phenomenological critique of these positions; we then sketch out computational phenomenology and distinguish it from existing alternatives; finally, we apply this new method to deep learning models trained on specific tasks, in order to formulate a conceptual framework of deep learning that allows one to think of artificial neural networks' mechanisms in terms of lived experience.
