Edmund R. Hunt

Practical Handling of Dynamic Environments in Decentralised Multi-Robot Patrol

Sep 16, 2025

Abstract:Persistent monitoring using robot teams is of interest in fields such as security, environmental monitoring, and disaster recovery. Performing such monitoring in a fully on-line decentralised fashion has significant potential advantages for robustness, adaptability, and scalability of monitoring solutions, including, in principle, the capacity to effectively adapt in real-time to a changing environment. We examine this through the lens of multi-robot patrol, in which teams of patrol robots must persistently minimise time between visits to points of interest, within environments where traversability of routes is highly dynamic. These dynamics must be observed by patrol agents and accounted for in a fully decentralised on-line manner. In this work, we present a new method of monitoring and adjusting for environment dynamics in a decentralised multi-robot patrol team. We demonstrate that our method significantly outperforms realistic baselines in highly dynamic scenarios, and also investigate dynamic scenarios in which explicitly accounting for environment dynamics may be unnecessary or impractical.

Lightweight Decentralized Neural Network-Based Strategies for Multi-Robot Patrolling

Dec 16, 2024

Abstract:The problem of decentralized multi-robot patrol has previously been approached primarily with hand-designed strategies for minimization of 'idleness' over the vertices of a graph-structured environment. Here we present two lightweight neural network-based strategies to tackle this problem, and show that they significantly outperform existing strategies in both idleness minimization and against an intelligent intruder model, as well as presenting an examination of robustness to communication failure. Our results also indicate important considerations for future strategy design.
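
For readers unfamiliar with the term, idleness of a vertex is commonly taken to be the time elapsed since a robot last visited it. The following is a minimal Python sketch of that metric under stated assumptions: discrete time steps, every vertex treated as visited at time 0, and hypothetical function names (`instantaneous_idleness`, `mean_graph_idleness`) that do not come from the paper. It illustrates the quantity being minimized, not the authors' strategies.

```python
from typing import Dict, Hashable, Iterable, List, Tuple


def instantaneous_idleness(last_visit: Dict[Hashable, int], now: int) -> Dict[Hashable, int]:
    """Idleness of each vertex: time elapsed since its most recent visit."""
    return {vertex: now - t for vertex, t in last_visit.items()}


def mean_graph_idleness(
    vertices: Iterable[Hashable],
    visits: List[Tuple[int, Hashable]],
    horizon: int,
) -> float:
    """Average idleness over all vertices and all time steps 1..horizon.

    Assumption: every vertex counts as visited at time 0.
    `visits` is a list of (time_step, vertex) pairs.
    """
    vertices = list(vertices)
    last_visit = {v: 0 for v in vertices}

    # Group visits by time step for quick lookup.
    visits_by_time: Dict[int, List[Hashable]] = {}
    for t, v in visits:
        visits_by_time.setdefault(t, []).append(v)

    total = 0
    for now in range(1, horizon + 1):
        # Apply visits first, so a vertex visited now has idleness 0.
        for v in visits_by_time.get(now, []):
            last_visit[v] = now
        total += sum(instantaneous_idleness(last_visit, now).values())

    return total / (horizon * len(vertices))


if __name__ == "__main__":
    score = mean_graph_idleness(["a", "b"], [(1, "a"), (2, "b"), (3, "a")], horizon=4)
    print(score)
```

A patrol strategy that lowers this average (or the worst-case value) keeps every vertex more recently observed.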

Express Yourself: Enabling large-scale public events involving multi-human-swarm interaction for social applications with MOSAIX

Nov 15, 2024

Abstract:Robot swarms have the potential to help groups of people with social tasks, given their ability to scale to large numbers of robots and users. Developing multi-human-swarm interaction is therefore crucial to support multiple people interacting with the swarm simultaneously - which is an area that is scarcely researched, unlike single-human, single-robot or single-human, multi-robot interaction. Moreover, most robots are still confined to laboratory settings. In this paper, we present our work with MOSAIX, a swarm of robot Tiles, that facilitated ideation at a science museum. 63 robots were used as a swarm of smart sticky notes, collecting input from the public and aggregating it based on themes, providing an evolving visualization tool that engaged visitors and fostered their participation. Our contribution lies in creating a large-scale (63 robots and 294 attendees) public event, with a completely decentralized swarm system in real-life settings. We also discuss learnings we obtained that might help future researchers create multi-human-swarm interaction with the public.

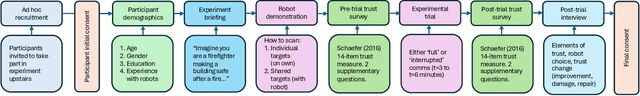

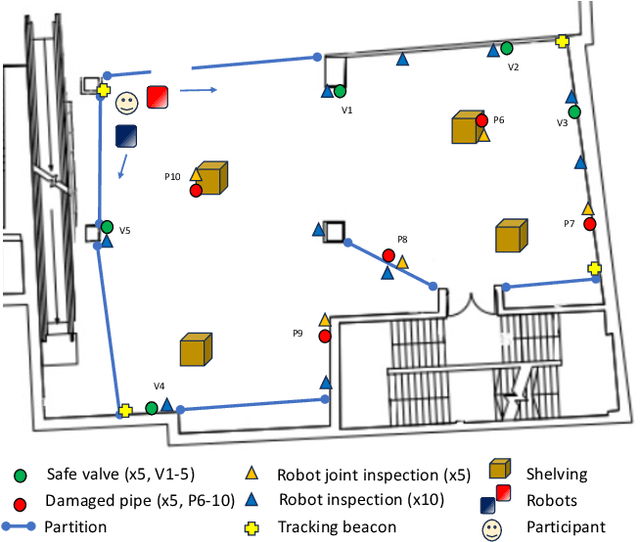

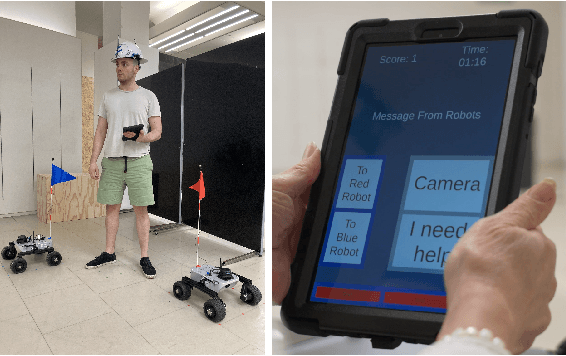

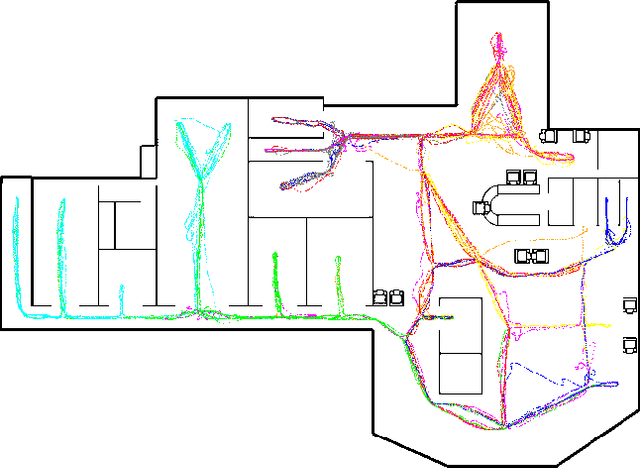

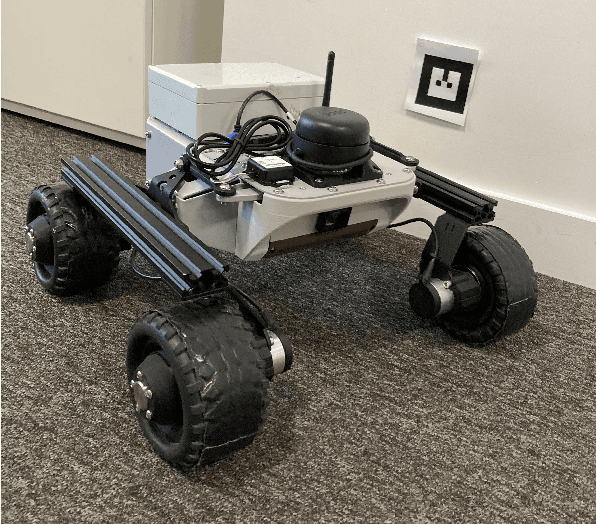

Co-Movement and Trust Development in Human-Robot Teams

Sep 30, 2024

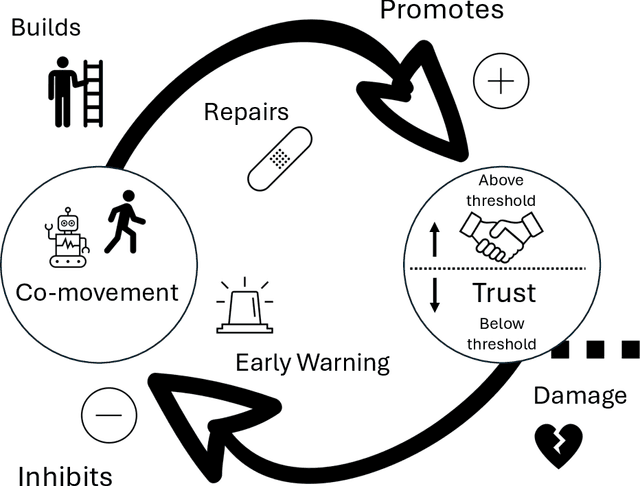

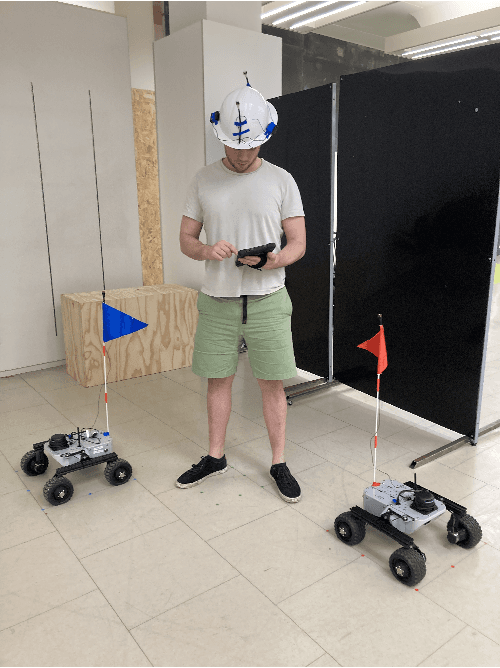

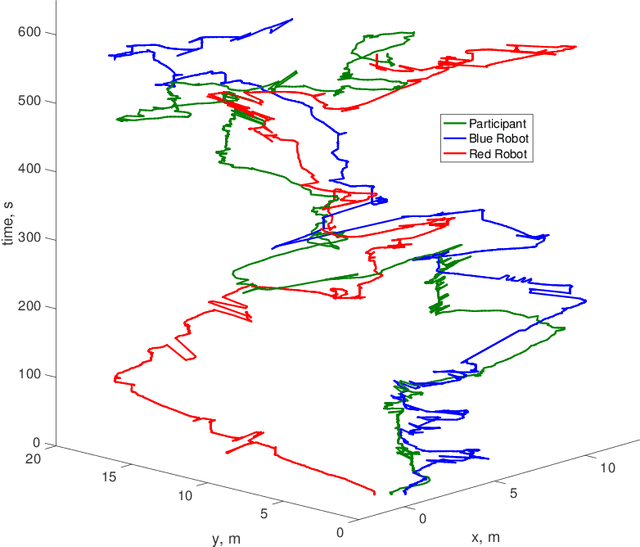

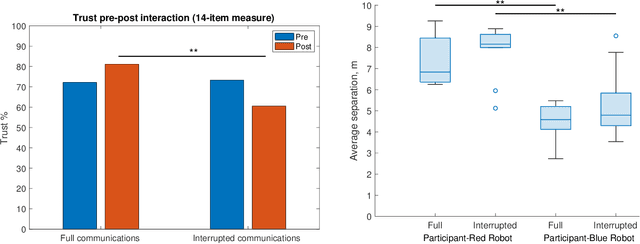

Abstract:For humans and robots to form an effective human-robot team (HRT) there must be sufficient trust between team members throughout a mission. We analyze data from an HRT experiment focused on trust dynamics in teams of one human and two robots, where trust was manipulated by robots becoming temporarily unresponsive. Whole-body movement tracking was achieved using ultrasound beacons, alongside communications and performance logs from a human-robot interface. We find evidence that synchronization between time series of human-robot movement, within a certain spatial proximity, is correlated with changes in self-reported trust. This suggests that the interplay of proxemics and kinesics, i.e. moving together through space, where implicit communication via coordination can occur, could play a role in building and maintaining trust in human-robot teams. Thus, quantitative indicators of coordination dynamics between team members could be used to predict trust over time and also provide early warning signals of the need for timely trust repair if trust is damaged. Hence, we aim to develop the metrology of trust in mobile human-robot teams.

Swift Trust in Mobile Ad Hoc Human-Robot Teams

Aug 18, 2024

Abstract:Integrating robots into teams of humans is anticipated to bring significant capability improvements for tasks such as searching potentially hazardous buildings. Trust between humans and robots is recognized as a key enabler for human-robot teaming (HRT) activity: if trust during a mission falls below sufficient levels for cooperative tasks to be completed, it could critically affect success. Changes in trust could be particularly problematic in teams that have formed on an ad hoc basis (as might be expected in emergency situations) where team members may not have previously worked together. In such ad hoc teams, a foundational level of 'swift trust' may be fragile and challenging to sustain in the face of inevitable setbacks. We present results of an experiment focused on understanding trust building, violation and repair processes in ad hoc teams (one human and two robots). Trust violation occurred through robots becoming unresponsive, with limited communication and feedback. We perform exploratory analysis of a variety of data, including communications and performance logs, trust surveys and post-experiment interviews, toward understanding how autonomous systems can be designed into interdependent ad hoc human-robot teams where swift trust can be sustained.

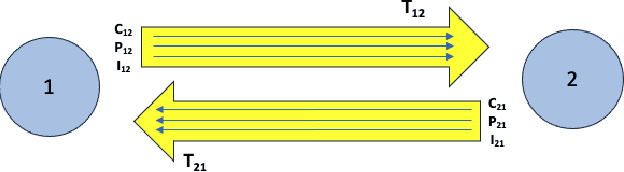

Multi-Robot Strategies for Communication-Constrained Exploration and Electrostatic Anomaly Characterization

May 01, 2024

Abstract:Exploration of extreme or remote environments such as Mars is often recognized as an opportunity for multi-robot systems. However, this poses challenges for maintaining robust inter-robot communication without preexisting infrastructure. It may be that robots can only share information when they are physically in close proximity with each other. At the same time, atmospheric phenomena such as dust devils are poorly understood and characterization of their electrostatic properties is of scientific interest. We perform a comparative analysis of two multi-robot communication strategies: a distributed approach, with pairwise intermittent rendezvous, and a centralized, fixed base station approach. We also introduce and evaluate the effectiveness of an algorithm designed to predict the location and strength of electrostatic anomalies, assuming robot proximity. Using an agent-based simulation, we assess the performance of these strategies in a 2D grid cell representation of a Martian environment. Results indicate that a decentralized rendezvous system consistently outperforms a fixed base station system in terms of exploration speed and in reducing the risk of data loss. We also find that inter-robot data sharing improves performance when trying to predict the location and strength of an electrostatic anomaly. These findings indicate the importance of appropriate communication strategies for efficient multi-robot science missions.

Collective Anomaly Perception During Multi-Robot Patrol: Constrained Interactions Can Promote Accurate Consensus

Dec 19, 2023

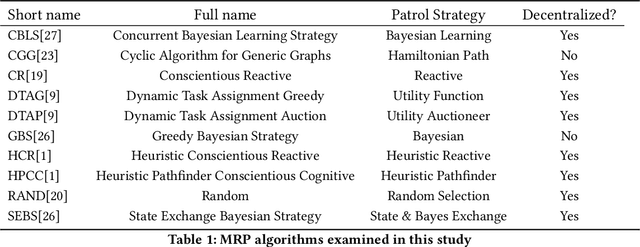

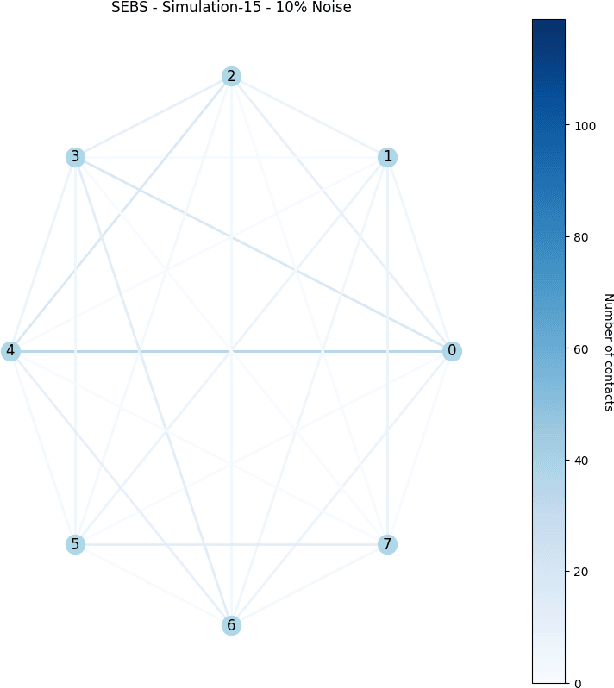

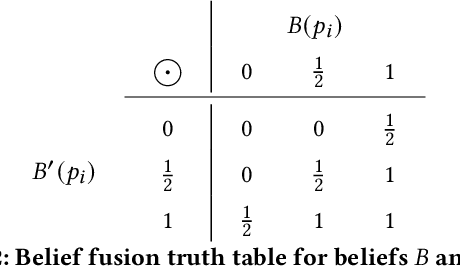

Abstract:An important real-world application of multi-robot systems is multi-robot patrolling (MRP), where robots must carry out the activity of going through an area at regular intervals. Motivations for MRP include the detection of anomalies that may represent security threats. While MRP algorithms show some maturity in development, a key potential advantage has been unexamined: the ability to exploit collective perception of detected anomalies to prioritize the location ordering of security checks. This is because noisy individual-level detection of an anomaly may be compensated for by group-level consensus formation regarding whether an anomaly is likely to be truly present. Here, we examine the performance of unmodified idleness-based patrolling algorithms when given the additional objective of reaching an environmental perception consensus via local pairwise communication and a quorum threshold. We find that generally, MRP algorithms that promote physical mixing of robots, as measured by a higher connectivity of their emergent communication network, reach consensus more quickly. However, when there is noise present in anomaly detection, a more moderate (constrained) level of connectivity is preferable because it reduces the spread of false positive detections, as measured by a group-level F-score. These findings can inform user choice of MRP algorithm and future algorithm development.
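
The two quantities named in this abstract, a quorum threshold and an F-score, can be illustrated with a short Python sketch. It assumes a simple reading of each: a robot accepts an anomaly once the fraction of positive opinions it has collected reaches the threshold, and the F-score is the standard F1 measure (harmonic mean of precision and recall). The function names and the exact quorum rule are hypothetical, and the paper's group-level F-score may be defined differently.

```python
from typing import Sequence


def quorum_reached(opinions: Sequence[bool], threshold: float) -> bool:
    """True if the share of positive opinions meets the quorum threshold.

    Assumption: `opinions` holds the anomaly beliefs a robot has gathered
    from pairwise encounters; an empty list never reaches quorum.
    """
    if not opinions:
        return False
    return sum(opinions) / len(opinions) >= threshold


def f_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Standard F1 score: 2*TP / (2*TP + FP + FN), or 0.0 if undefined."""
    denominator = 2 * true_positives + false_positives + false_negatives
    if denominator == 0:
        return 0.0
    return 2 * true_positives / denominator


if __name__ == "__main__":
    print(quorum_reached([True, True, False], threshold=0.6))
    print(f_score(true_positives=3, false_positives=1, false_negatives=1))
```

Under this reading, false positive detections that spread through a highly connected network raise the false-positive count and so lower the F-score, which is consistent with the abstract's finding that moderate connectivity can be preferable under noise.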

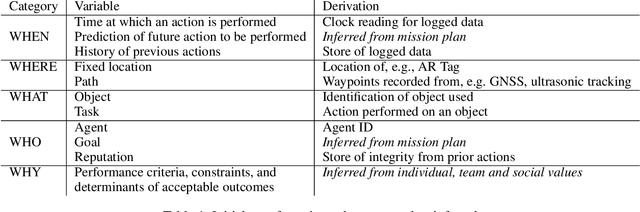

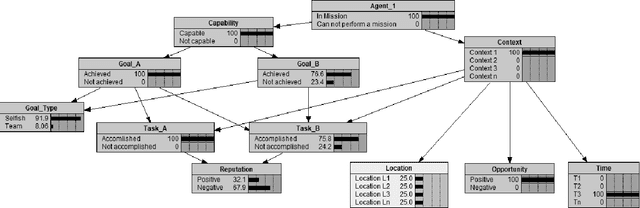

Steps Towards Satisficing Distributed Dynamic Team Trust

Sep 11, 2023

Abstract:Defining and measuring trust in dynamic, multiagent teams is important in a range of contexts, particularly in defense and security domains. Team members should be trusted to work towards agreed goals and in accordance with shared values. In this paper, our concern is with the definition of goals and values such that it is possible to define 'trust' in a way that is interpretable, and hence usable, by both humans and robots. We argue that the outcome of team activity can be considered in terms of 'goal', 'individual/team values', and 'legal principles'. We question whether alignment is possible at the level of 'individual/team values', or only at the 'goal' and 'legal principles' levels. We argue for a set of metrics to define trust in human-robot teams that are interpretable by human or robot team members, and consider an experiment that could demonstrate the notion of 'satisficing trust' over the course of a simulated mission.
