Sally Maynard

Steps Towards Satisficing Distributed Dynamic Team Trust

Sep 11, 2023

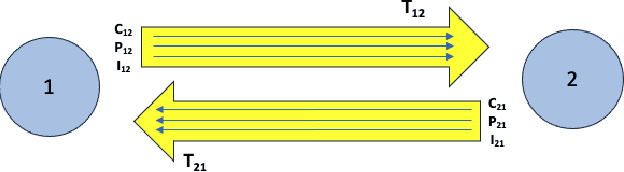

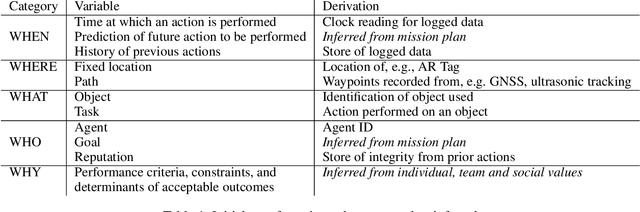

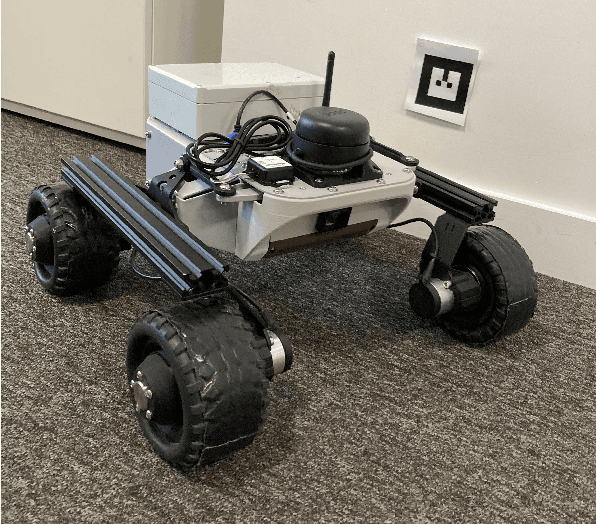

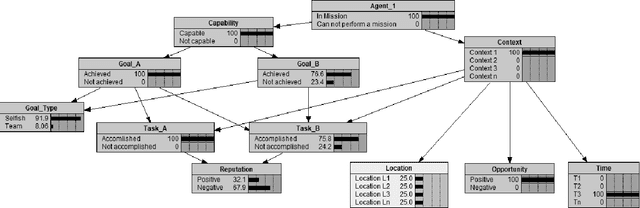

Abstract:Defining and measuring trust in dynamic, multiagent teams is important in a range of contexts, particularly in defense and security domains. Team members should be trusted to work towards agreed goals and in accordance with shared values. In this paper, our concern is with the definition of goals and values such that it is possible to define 'trust' in a way that is interpretable, and hence usable, by both humans and robots. We argue that the outcome of team activity can be considered in terms of 'goal', 'individual/team values', and 'legal principles'. We question whether alignment is possible at the level of 'individual/team values', or only at the 'goal' and 'legal principles' levels. We argue for a set of metrics to define trust in human-robot teams that are interpretable by human or robot team members, and consider an experiment that could demonstrate the notion of 'satisficing trust' over the course of a simulated mission.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge