Sanja Milivojevic

Co-Movement and Trust Development in Human-Robot Teams

Sep 30, 2024

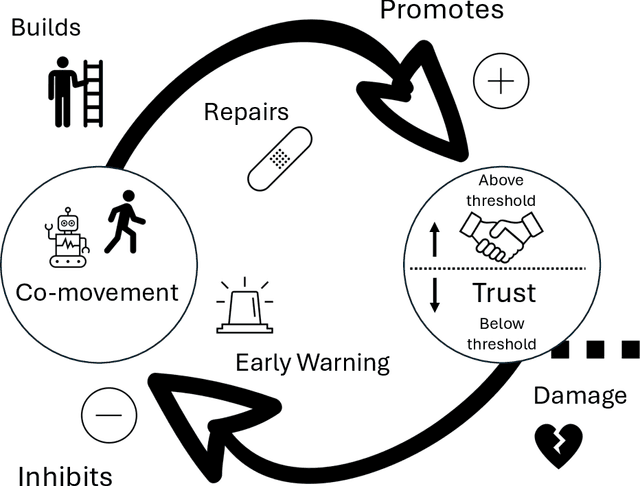

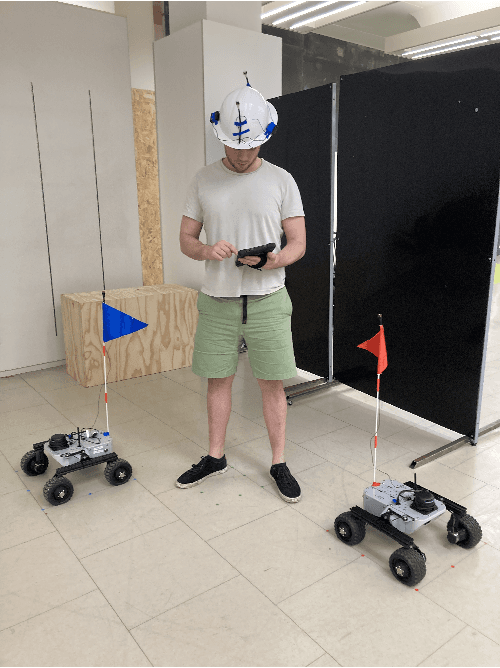

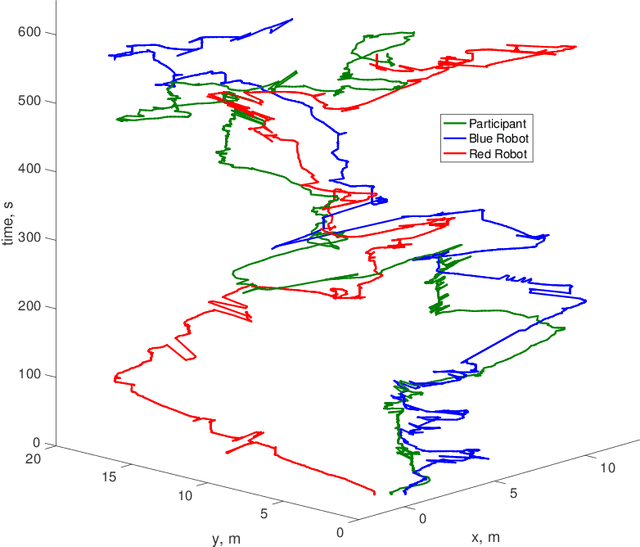

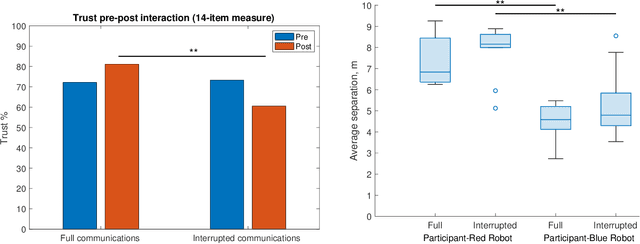

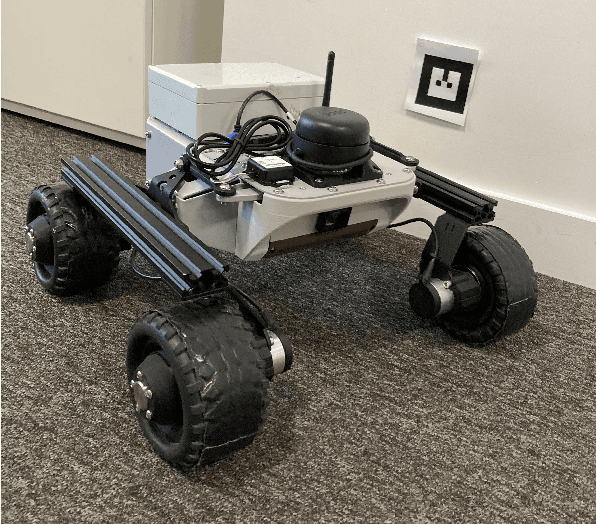

Abstract: For humans and robots to form an effective human-robot team (HRT), there must be sufficient trust between team members throughout a mission. We analyze data from an HRT experiment focused on trust dynamics in teams of one human and two robots, where trust was manipulated by robots becoming temporarily unresponsive. Whole-body movement tracking was achieved using ultrasound beacons, alongside communications and performance logs from a human-robot interface. We find evidence that synchronization between time series of human-robot movement, within a certain spatial proximity, is correlated with changes in self-reported trust. This suggests that the interplay of proxemics and kinesics, i.e., moving together through space, where implicit communication via coordination can occur, could play a role in building and maintaining trust in human-robot teams. Thus, quantitative indicators of coordination dynamics between team members could be used to predict trust over time and also provide early warning signals of the need for timely trust repair if trust is damaged. Hence, we aim to develop the metrology of trust in mobile human-robot teams.

Swift Trust in Mobile Ad Hoc Human-Robot Teams

Aug 18, 2024

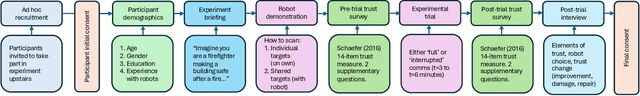

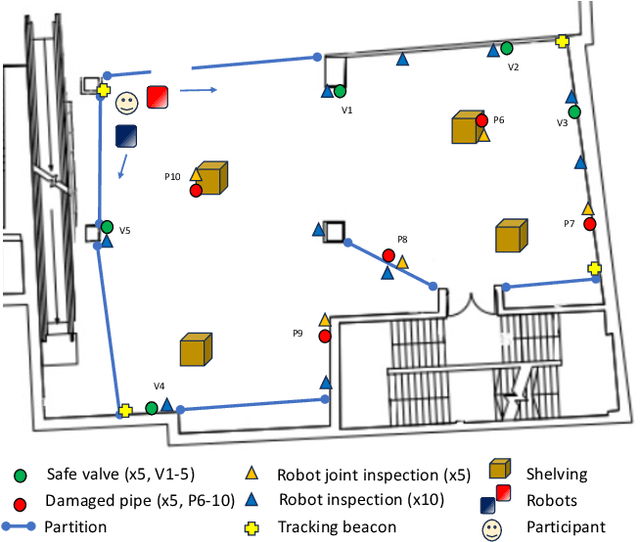

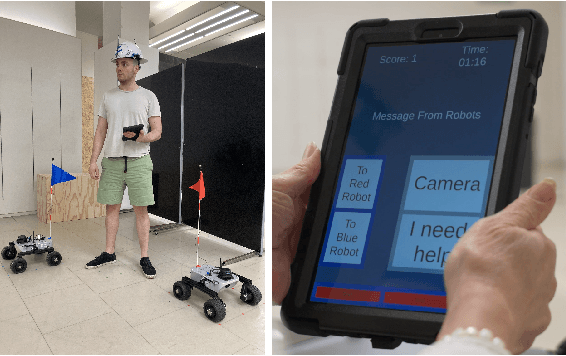

Abstract: Integrating robots into teams of humans is anticipated to bring significant capability improvements for tasks such as searching potentially hazardous buildings. Trust between humans and robots is recognized as a key enabler for human-robot teaming (HRT) activity: if trust during a mission falls below sufficient levels for cooperative tasks to be completed, it could critically affect success. Changes in trust could be particularly problematic in teams that have formed on an ad hoc basis (as might be expected in emergency situations) where team members may not have previously worked together. In such ad hoc teams, a foundational level of 'swift trust' may be fragile and challenging to sustain in the face of inevitable setbacks. We present results of an experiment focused on understanding trust building, violation and repair processes in ad hoc teams (one human and two robots). Trust violation occurred through robots becoming unresponsive, with limited communication and feedback. We perform exploratory analysis of a variety of data, including communications and performance logs, trust surveys and post-experiment interviews, toward understanding how autonomous systems can be designed into interdependent ad hoc human-robot teams where swift trust can be sustained.

Steps Towards Satisficing Distributed Dynamic Team Trust

Sep 11, 2023

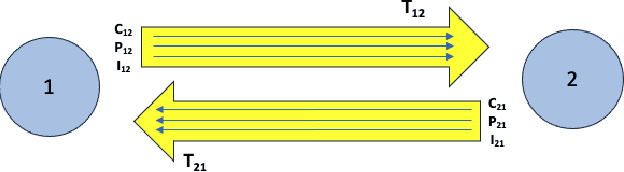

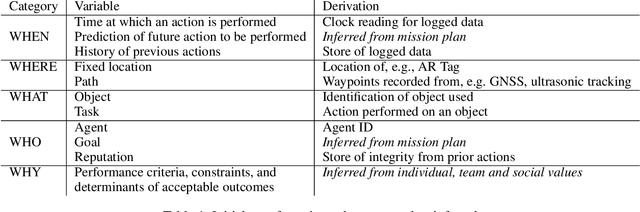

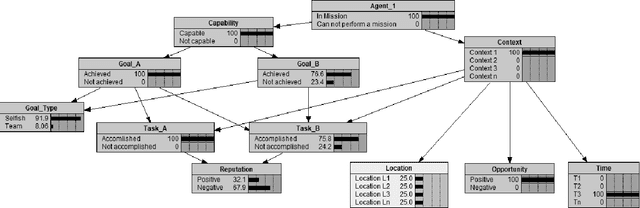

Abstract: Defining and measuring trust in dynamic, multiagent teams is important in a range of contexts, particularly in defense and security domains. Team members should be trusted to work towards agreed goals and in accordance with shared values. In this paper, our concern is with the definition of goals and values such that it is possible to define 'trust' in a way that is interpretable, and hence usable, by both humans and robots. We argue that the outcome of team activity can be considered in terms of 'goal', 'individual/team values', and 'legal principles'. We question whether alignment is possible at the level of 'individual/team values', or only at the 'goal' and 'legal principles' levels. We argue for a set of metrics to define trust in human-robot teams that are interpretable by human or robot team members, and consider an experiment that could demonstrate the notion of 'satisficing trust' over the course of a simulated mission.
