Nicola Webb

A Simulated real-world upper-body Exoskeleton Accident and Investigation

Nov 21, 2024

Abstract: This paper describes the enactment of a simulated (mock) accident involving an upper-body exoskeleton and its investigation. The accident scenario is enacted by role-playing volunteers, one of whom is wearing the exoskeleton. Following the mock accident, investigators (also volunteers) interview both the subject of the accident and relevant witnesses. The investigators then consider the witness testimony alongside robot data logged by the ethical black box, in order to address three key questions: what happened, why did it happen, and what changes can be made to prevent the accident from happening again? This simulated accident scenario is one of a series we have run as part of the RoboTIPS project, with the overall aim of developing and testing both processes and technologies to support social robot accident investigation.

Co-Movement and Trust Development in Human-Robot Teams

Sep 30, 2024

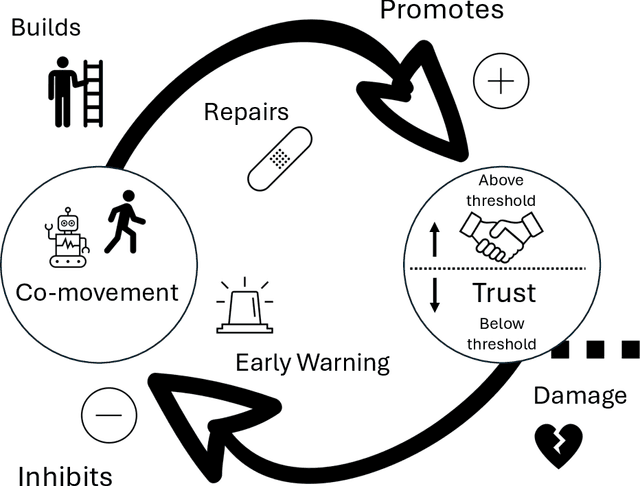

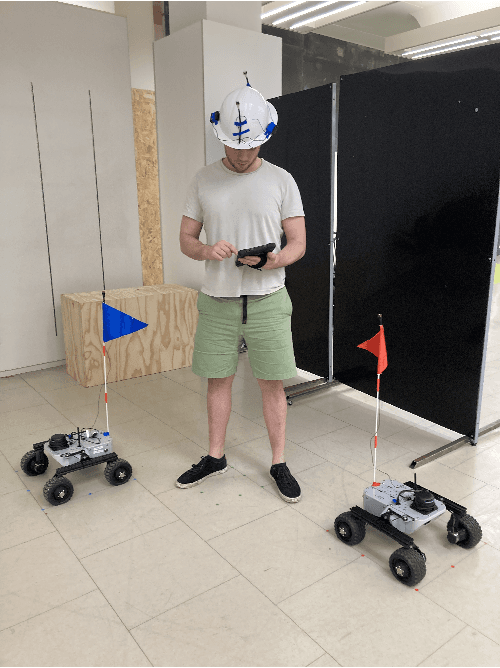

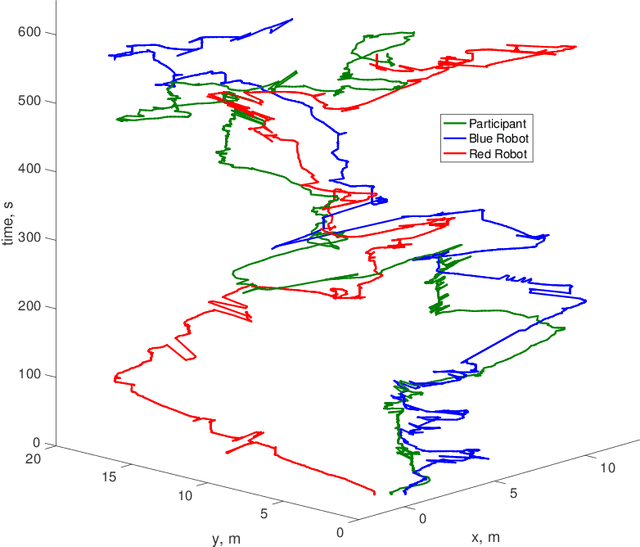

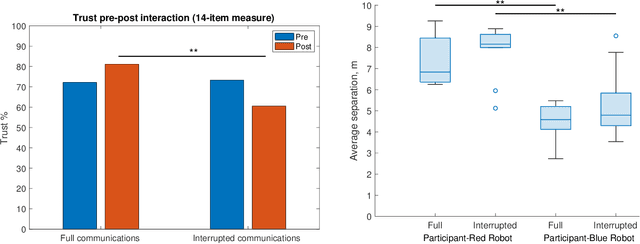

Abstract: For humans and robots to form an effective human-robot team (HRT) there must be sufficient trust between team members throughout a mission. We analyze data from an HRT experiment focused on trust dynamics in teams of one human and two robots, where trust was manipulated by robots becoming temporarily unresponsive. Whole-body movement tracking was achieved using ultrasound beacons, alongside communications and performance logs from a human-robot interface. We find evidence that synchronization between time series of human-robot movement, within a certain spatial proximity, is correlated with changes in self-reported trust. This suggests that the interplay of proxemics and kinesics, i.e. moving together through space, where implicit communication via coordination can occur, could play a role in building and maintaining trust in human-robot teams. Thus, quantitative indicators of coordination dynamics between team members could be used to predict trust over time and also provide early warning signals of the need for timely trust repair if trust is damaged. Hence, we aim to develop the metrology of trust in mobile human-robot teams.

Swift Trust in Mobile Ad Hoc Human-Robot Teams

Aug 18, 2024

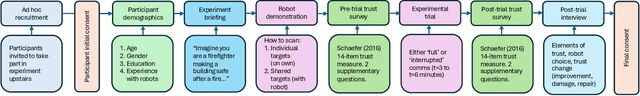

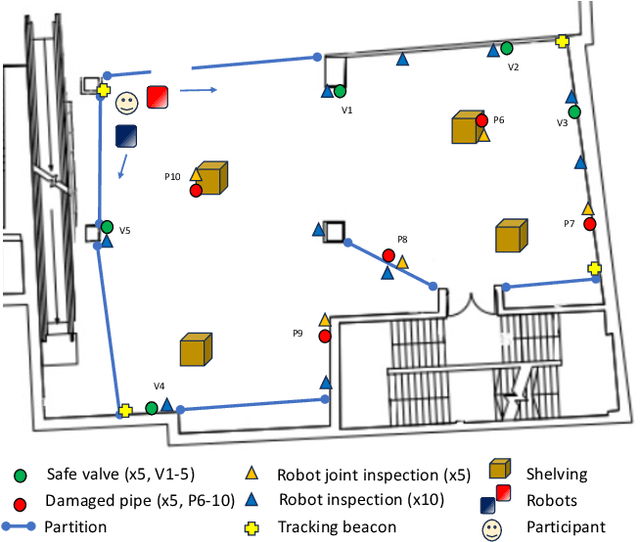

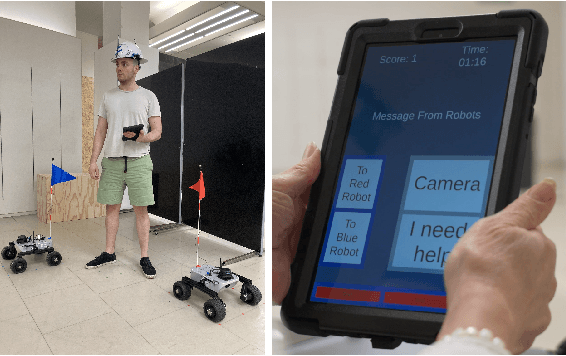

Abstract: Integrating robots into teams of humans is anticipated to bring significant capability improvements for tasks such as searching potentially hazardous buildings. Trust between humans and robots is recognized as a key enabler for human-robot teaming (HRT) activity: if trust during a mission falls below sufficient levels for cooperative tasks to be completed, it could critically affect success. Changes in trust could be particularly problematic in teams that have formed on an ad hoc basis (as might be expected in emergency situations) where team members may not have previously worked together. In such ad hoc teams, a foundational level of 'swift trust' may be fragile and challenging to sustain in the face of inevitable setbacks. We present results of an experiment focused on understanding trust building, violation and repair processes in ad hoc teams (one human and two robots). Trust violation occurred through robots becoming unresponsive, with limited communication and feedback. We perform exploratory analysis of a variety of data, including communications and performance logs, trust surveys and post-experiment interviews, toward understanding how autonomous systems can be designed into interdependent ad hoc human-robot teams where swift trust can be sustained.
