Alan Winfield

A Simulated real-world upper-body Exoskeleton Accident and Investigation

Nov 21, 2024

Abstract: This paper describes the enactment of a simulated (mock) accident involving an upper-body exoskeleton and its investigation. The accident scenario is enacted by role-playing volunteers, one of whom is wearing the exoskeleton. Following the mock accident, investigators - also volunteers - interview both the subject of the accident and relevant witnesses. The investigators then consider the witness testimony alongside robot data logged by the ethical black box, in order to address the three key questions: what happened?, why did it happen?, and how can we make changes to prevent the accident happening again? This simulated accident scenario is one of a series we have run as part of the RoboTIPS project, with the overall aim of developing and testing both processes and technologies to support social robot accident investigation.

Responses to a Critique of Artificial Moral Agents

Mar 17, 2019

Abstract: The field of machine ethics is concerned with the question of how to embed ethical behaviors, or a means to determine ethical behaviors, into artificial intelligence (AI) systems. The goal is to produce artificial moral agents (AMAs) that are either implicitly ethical (designed to avoid unethical consequences) or explicitly ethical (designed to behave ethically). Van Wynsberghe and Robbins' (2018) paper Critiquing the Reasons for Making Artificial Moral Agents critically addresses the reasons offered by machine ethicists for pursuing AMA research; this paper, co-authored by machine ethicists and commentators, aims to contribute to the machine ethics conversation by responding to that critique. The reasons for developing AMAs discussed in van Wynsberghe and Robbins (2018) are: it is inevitable that they will be developed; the prevention of harm; the necessity for public trust; the prevention of immoral use; such machines are better moral reasoners than humans; and building these machines would lead to a better understanding of human morality. In this paper, each co-author addresses those reasons in turn. In so doing, this paper demonstrates that the reasons critiqued are not shared by all co-authors; each machine ethicist has their own reasons for researching AMAs. But while we express a diverse range of views on each of the six reasons in van Wynsberghe and Robbins' critique, we nevertheless share the opinion that the scientific study of AMAs has considerable value.

An architecture for ethical robots

Sep 09, 2016

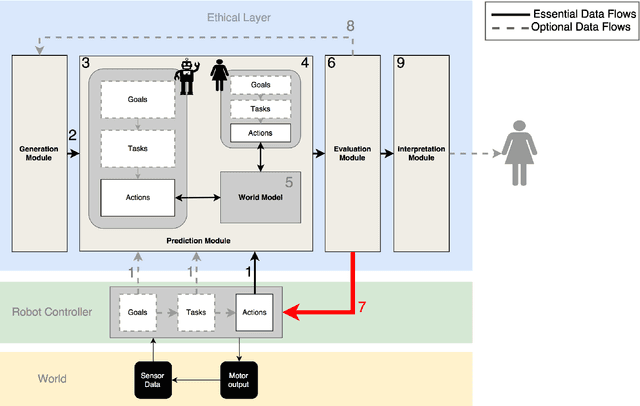

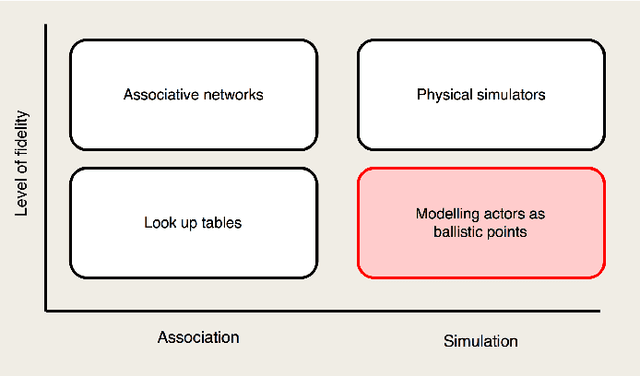

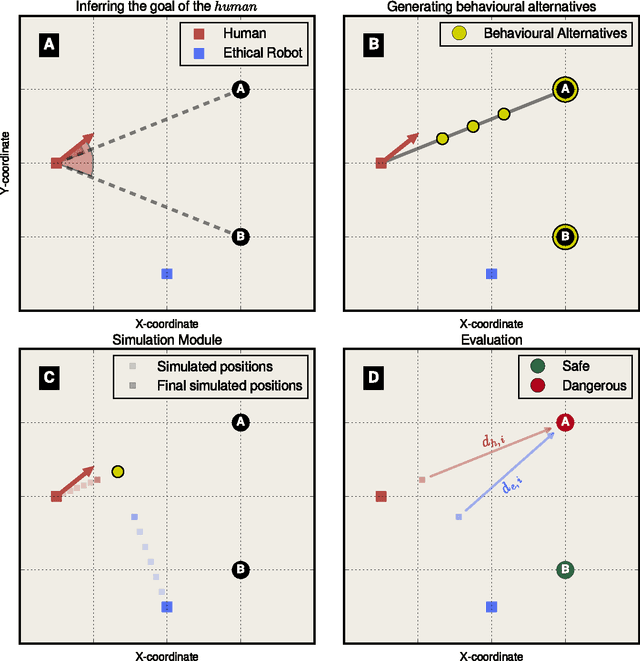

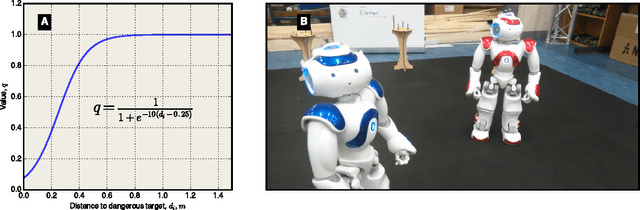

Abstract: Robots are becoming ever more autonomous. This expanding ability to take unsupervised decisions renders it imperative that mechanisms are in place to guarantee the safety of behaviours executed by the robot. Moreover, smart autonomous robots should be more than safe; they should also be explicitly ethical -- able to both choose and justify actions that prevent harm. Indeed, as the cognitive, perceptual and motor capabilities of robots expand, they will be expected to have an improved capacity for making moral judgements. We present a control architecture that supplements existing robot controllers. This so-called Ethical Layer ensures robots behave according to a predetermined set of ethical rules by predicting the outcomes of possible actions and evaluating the predicted outcomes against those rules. To validate the proposed architecture, we implement it on a humanoid robot so that it behaves according to Asimov's laws of robotics. In a series of four experiments, using a second humanoid robot as a proxy for the human, we demonstrate that the proposed Ethical Layer enables the robot to prevent the human from coming to harm.
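The predict-then-evaluate loop described in this abstract can be illustrated with a toy sketch. This is not the authors' implementation: the action names, the `Outcome` fields, the `predict_outcome` forward model, and the lexicographic scoring are all hypothetical stand-ins, chosen only to show how candidate actions might be ranked against an ordered, Asimov-like rule set.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Outcome:
    """Predicted consequences of one candidate action (hypothetical fields)."""
    human_harmed: bool
    order_disobeyed: bool
    robot_harmed: bool


def predict_outcome(action: str, human_heading_to_hazard: bool,
                    ordered_action: str) -> Outcome:
    """Toy forward model (an assumption): the human is harmed unless intercepted."""
    human_harmed = human_heading_to_hazard and action != "intercept_human"
    robot_harmed = action == "enter_hazard"
    return Outcome(human_harmed, action != ordered_action, robot_harmed)


def rule_score(outcome: Outcome) -> Tuple[int, int, int]:
    """Lower is better. Tuple order encodes rule priority:
    human safety first, then obedience, then self-preservation."""
    return (int(outcome.human_harmed),
            int(outcome.order_disobeyed),
            int(outcome.robot_harmed))


def ethical_layer(candidates: List[str], human_heading_to_hazard: bool,
                  ordered_action: str) -> str:
    """Pick the candidate whose predicted outcome best satisfies the rules."""
    return min(
        candidates,
        key=lambda a: rule_score(
            predict_outcome(a, human_heading_to_hazard, ordered_action)),
    )


candidates = ["go_to_goal", "intercept_human", "stay_put"]
choice_danger = ethical_layer(candidates, True, "go_to_goal")
choice_safe = ethical_layer(candidates, False, "go_to_goal")
```

In this sketch the robot abandons its ordered goal only when the forward model predicts harm to the human; otherwise the ordered action wins because it violates no rule. A real system would replace the toy forward model with a simulation of the robot and its environment.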

The Dark Side of Ethical Robots

Jun 08, 2016

Abstract: Concerns over the risks associated with advances in Artificial Intelligence have prompted calls for greater efforts toward robust and beneficial AI, including machine ethics. Recently, roboticists have responded by initiating the development of so-called ethical robots. These robots would, ideally, evaluate the consequences of their actions and morally justify their choices. This emerging field promises to develop extensively over the coming years. However, in this paper, we point out an inherent limitation of the emerging field of ethical robots. We show that building ethical robots also necessarily facilitates the construction of unethical robots. In three experiments, we show that it is remarkably easy to modify an ethical robot so that it behaves competitively, or even aggressively. The reason for this is that the specific AI required to make an ethical robot can always be exploited to make unethical robots. Hence, the development of ethical robots will not guarantee the responsible deployment of AI. While advocating for ethical robots, we conclude that preventing the misuse of robots is beyond the scope of engineering, and requires instead governance frameworks underpinned by legislation. Without this, the development of ethical robots will serve to increase the risks of robotic malpractice instead of diminishing it.