Dieter Vanderelst

Rapid Learning of Spatial Representations for Goal-Directed Navigation Based on a Novel Model of Hippocampal Place Fields

Jun 07, 2022

Abstract: The discovery of place cells and other spatially modulated neurons in the hippocampal complex of rodents has been crucial to elucidating the neural basis of spatial cognition. More recently, the replay of neural sequences encoding previously experienced trajectories has been observed during consummatory behavior, potentially with implications for quick memory consolidation and behavioral planning. Several promising models for robotic navigation and reinforcement learning have been proposed based on these and previous findings. Most of these models, however, use carefully engineered neural networks and are tested in simple environments. In this paper, we develop a self-organized model incorporating place cells and replay, and demonstrate its utility for rapid one-shot learning in non-trivial environments with obstacles.

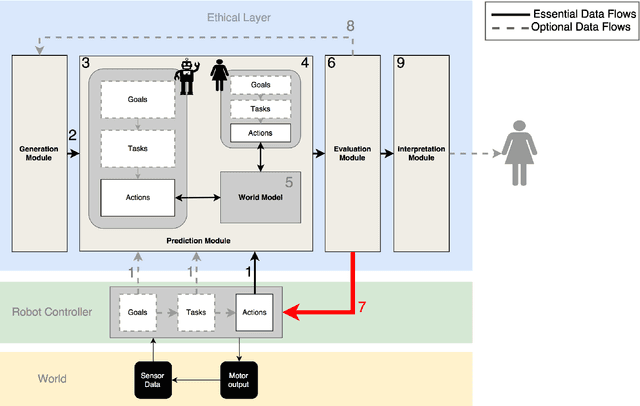

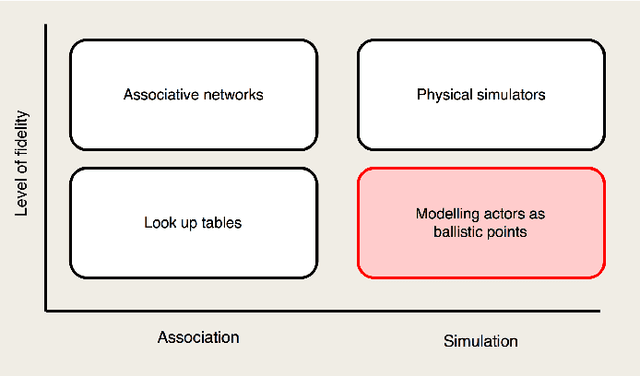

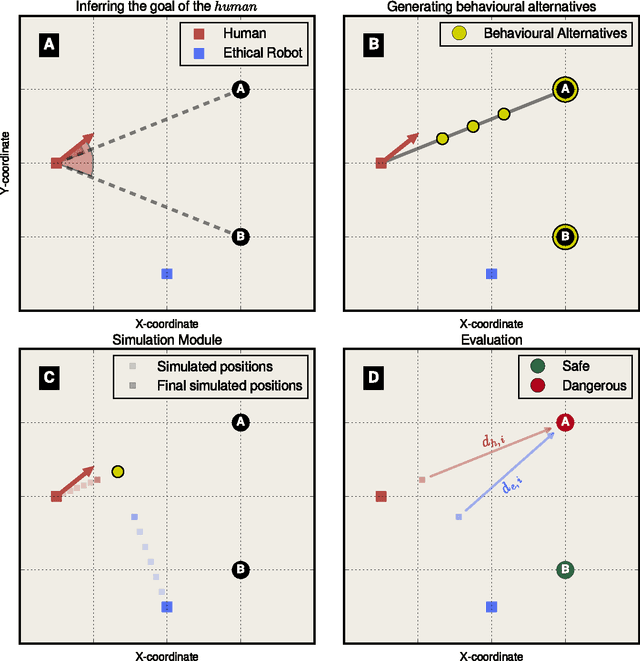

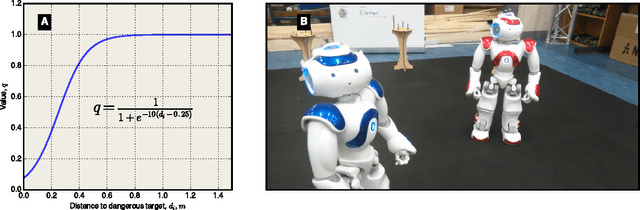

An architecture for ethical robots

Sep 09, 2016

Abstract: Robots are becoming ever more autonomous. This expanding ability to take unsupervised decisions renders it imperative that mechanisms are in place to guarantee the safety of behaviours executed by the robot. Moreover, smart autonomous robots should be more than safe; they should also be explicitly ethical -- able to both choose and justify actions that prevent harm. Indeed, as the cognitive, perceptual and motor capabilities of robots expand, they will be expected to have an improved capacity for making moral judgements. We present a control architecture that supplements existing robot controllers. This so-called Ethical Layer ensures robots behave according to a predetermined set of ethical rules by predicting the outcomes of possible actions and evaluating the predicted outcomes against those rules. To validate the proposed architecture, we implement it on a humanoid robot so that it behaves according to Asimov's laws of robotics. In a series of four experiments, using a second humanoid robot as a proxy for the human, we demonstrate that the proposed Ethical Layer enables the robot to prevent the human from coming to harm.

The Dark Side of Ethical Robots

Jun 08, 2016

Abstract: Concerns over the risks associated with advances in Artificial Intelligence have prompted calls for greater efforts toward robust and beneficial AI, including machine ethics. Recently, roboticists have responded by initiating the development of so-called ethical robots. These robots would, ideally, evaluate the consequences of their actions and morally justify their choices. This emerging field promises to develop extensively over the coming years. However, in this paper, we point out an inherent limitation of the emerging field of ethical robots. We show that building ethical robots also necessarily facilitates the construction of unethical robots. In three experiments, we show that it is remarkably easy to modify an ethical robot so that it behaves competitively, or even aggressively. The reason for this is that the specific AI required to make an ethical robot can always be exploited to make unethical robots. Hence, the development of ethical robots will not guarantee the responsible deployment of AI. While advocating for ethical robots, we conclude that preventing the misuse of robots is beyond the scope of engineering, and requires instead governance frameworks underpinned by legislation. Without this, the development of ethical robots will serve to increase the risks of robotic malpractice instead of diminishing them.
