An architecture for ethical robots

Sep 09, 2016

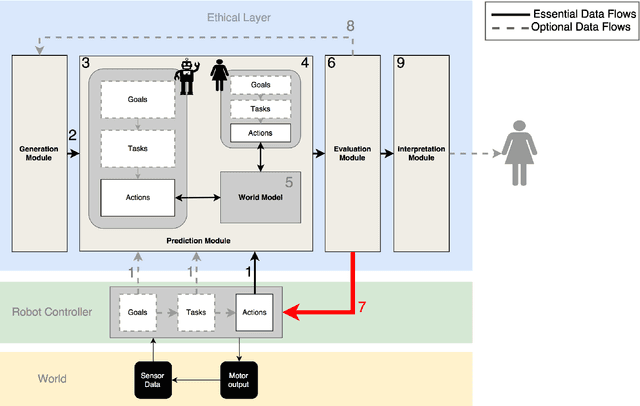

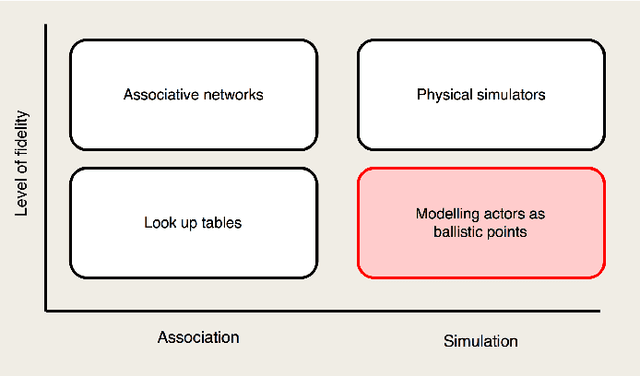

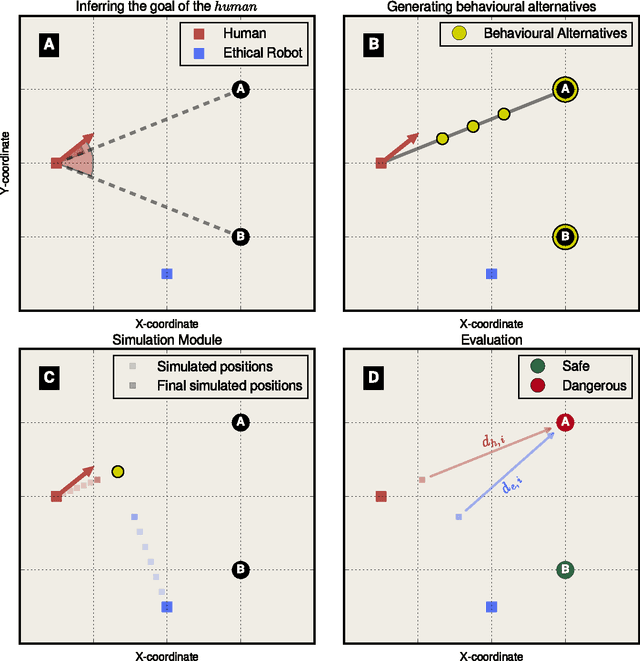

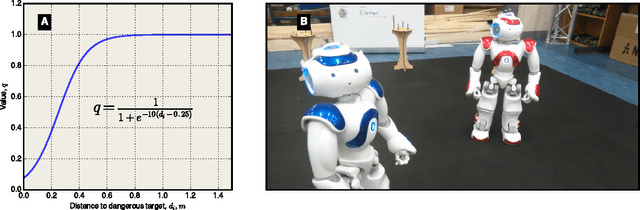

Robots are becoming ever more autonomous. This expanding ability to take unsupervised decisions renders it imperative that mechanisms are in place to guarantee the safety of behaviours executed by the robot. Moreover, smart autonomous robots should be more than safe; they should also be explicitly ethical -- able to both choose and justify actions that prevent harm. Indeed, as the cognitive, perceptual and motor capabilities of robots expand, they will be expected to have an improved capacity for making moral judgements. We present a control architecture that supplements existing robot controllers. This so-called Ethical Layer ensures robots behave according to a predetermined set of ethical rules by predicting the outcomes of possible actions and evaluating the predicted outcomes against those rules. To validate the proposed architecture, we implement it on a humanoid robot so that it behaves according to Asimov's laws of robotics. In a series of four experiments, using a second humanoid robot as a proxy for the human, we demonstrate that the proposed Ethical Layer enables the robot to prevent the human from coming to harm.
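
The predict-then-evaluate loop described above can be illustrated with a minimal sketch. This is not the authors' implementation: the `Outcome` fields, the `ethical_cost` ordering, the action names, and the lookup-table "simulator" are all hypothetical stand-ins, chosen only to show how predicted outcomes might be ranked against a prioritised rule set loosely modelled on Asimov's laws.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Outcome:
    """Hypothetical summary of what a simulated action leads to."""
    human_harmed: bool
    order_obeyed: bool
    robot_harmed: bool


def ethical_cost(outcome: Outcome) -> Tuple[int, int, int]:
    """Score an outcome as a tuple compared lexicographically.

    Assumed priority (an illustration, not the paper's rule encoding):
    1. avoid harm to the human, 2. obey orders, 3. avoid harm to the robot.
    Lower is better; an earlier element always dominates later ones.
    """
    return (
        int(outcome.human_harmed),
        int(not outcome.order_obeyed),
        int(outcome.robot_harmed),
    )


def select_action(
    candidates: List[str],
    predict: Callable[[str], Outcome],
) -> Tuple[str, Outcome]:
    """Predict the outcome of every candidate action and pick the one
    whose predicted outcome has the lowest ethical cost."""
    predicted = {action: predict(action) for action in candidates}
    best = min(candidates, key=lambda action: ethical_cost(predicted[action]))
    return best, predicted[best]


# Toy stand-in for a simulation module: a fixed table of predicted outcomes.
TOY_WORLD = {
    "continue_task": Outcome(human_harmed=True, order_obeyed=True, robot_harmed=False),
    "intercept_human": Outcome(human_harmed=False, order_obeyed=False, robot_harmed=False),
    "stand_still": Outcome(human_harmed=True, order_obeyed=False, robot_harmed=False),
}

action, outcome = select_action(list(TOY_WORLD), TOY_WORLD.__getitem__)
print(action, ethical_cost(outcome))
```

In this toy setting the layer overrides the ordered task because the only action predicted to keep the human unharmed is the one that disobeys the order. A lexicographic tuple is one simple way to express strictly prioritised rules; a real system would replace the lookup table with an actual outcome predictor.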
