Leonard Bruns

Visible Structure Retrieval for Lightweight Image-Based Relocalisation

Nov 16, 2025

Abstract:Accurate camera pose estimation from an image observation in a previously mapped environment is commonly done through structure-based methods: by finding correspondences between 2D keypoints on the image and 3D structure points in the map. In order to make this correspondence search tractable in large scenes, existing pipelines either rely on search heuristics, or perform image retrieval to reduce the search space by comparing the current image to a database of past observations. However, these approaches result in elaborate pipelines or storage requirements that grow with the number of past observations. In this work, we propose a new paradigm for making structure-based relocalisation tractable. Instead of relying on image retrieval or search heuristics, we learn a direct mapping from image observations to the visible scene structure in a compact neural network. Given a query image, a forward pass through our novel visible structure retrieval network allows obtaining the subset of 3D structure points in the map that the image views, thus reducing the search space of 2D-3D correspondences. We show that our proposed method enables performing localisation with an accuracy comparable to the state of the art, while requiring a lower computational and storage footprint.

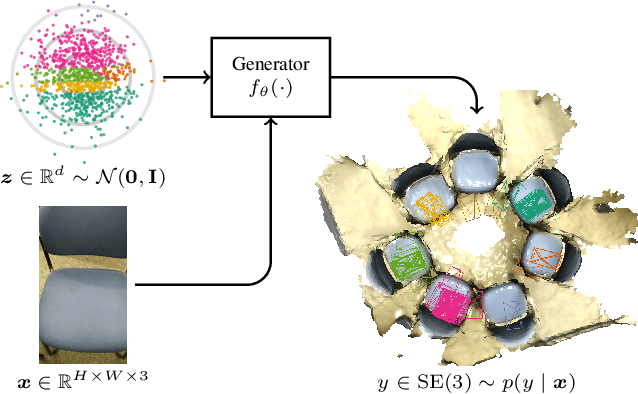

Conditional Variational Autoencoders for Probabilistic Pose Regression

Oct 07, 2024

Abstract:Robots rely on visual relocalization to estimate their pose from camera images when they lose track. One of the challenges in visual relocalization is repetitive structures in the operation environment of the robot. This calls for probabilistic methods that support multiple hypotheses for the robot's pose. We propose such a probabilistic method to predict the posterior distribution of camera poses given an observed image. Our proposed training strategy results in a generative model of camera poses given an image, which can be used to draw samples from the pose posterior distribution. Our method is streamlined and well-founded in theory and outperforms existing methods on localization in the presence of ambiguities.

Neural Graph Mapping for Dense SLAM with Efficient Loop Closure

May 06, 2024

Abstract:Existing neural field-based SLAM methods typically employ a single monolithic field as their scene representation. This prevents efficient incorporation of loop closure constraints and limits scalability. To address these shortcomings, we propose a neural mapping framework which anchors lightweight neural fields to the pose graph of a sparse visual SLAM system. Our approach shows the ability to integrate large-scale loop closures, while limiting necessary reintegration. Furthermore, we verify the scalability of our approach by demonstrating successful building-scale mapping taking multiple loop closures into account during the optimization, and show that our method outperforms existing state-of-the-art approaches on large scenes in terms of quality and runtime. Our code is available at https://kth-rpl.github.io/neural_graph_mapping/.

Transitional Grid Maps: Efficient Analytical Inference of Dynamic Environments under Limited Sensing

Jan 12, 2024

Abstract:Autonomous agents rely on sensor data to construct representations of their environment, essential for predicting future events and planning their own actions. However, sensor measurements suffer from limited range, occlusions, and sensor noise. These challenges become more evident in dynamic environments, where efficiently inferring the state of the environment based on sensor readings from different times is still an open problem. This work focuses on inferring the state of the dynamic part of the environment, i.e., where dynamic objects might be, based on previous observations and constraints on their dynamics. We formalize the problem and introduce Transitional Grid Maps (TGMs), an efficient analytical solution. TGMs are based on a set of novel assumptions that hold in many practical scenarios. They significantly reduce the complexity of the problem, enabling continuous prediction and updating of the entire dynamic map based on the known static map (see Fig. 1), differentiating them from other alternatives. We compare our approach with a state-of-the-art particle filter, obtaining more prudent predictions in occluded scenarios and on-par results on unoccluded tracking.

RGB-D-Based Categorical Object Pose and Shape Estimation: Methods, Datasets, and Evaluation

Jan 19, 2023

Abstract:Recently, various methods for 6D pose and shape estimation of objects at a per-category level have been proposed. This work provides an overview of the field in terms of methods, datasets, and evaluation protocols. First, an overview of existing works and their commonalities and differences is provided. Second, we take a critical look at the predominant evaluation protocol, including metrics and datasets. Based on the findings, we propose a new set of metrics, contribute new annotations for the Redwood dataset, and evaluate state-of-the-art methods in a fair comparison. The results indicate that existing methods do not generalize well to unconstrained orientations and are actually heavily biased towards objects being upright. We provide an easy-to-use evaluation toolbox with well-defined metrics, methods, and dataset interfaces, which allows evaluation and comparison with various state-of-the-art approaches (https://github.com/roym899/pose_and_shape_evaluation).

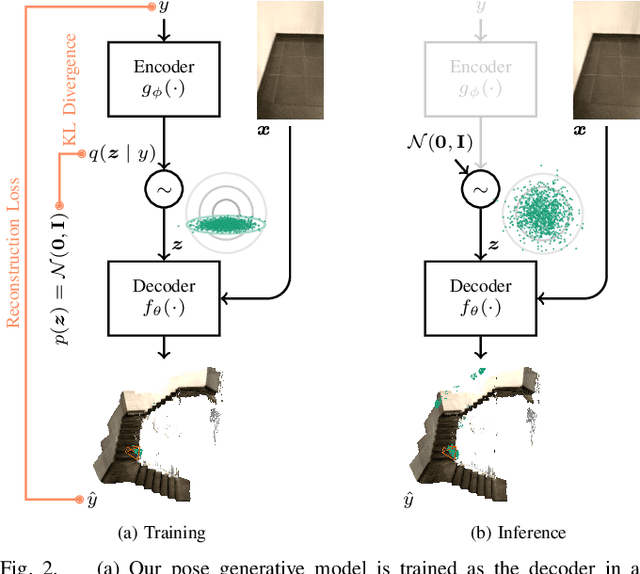

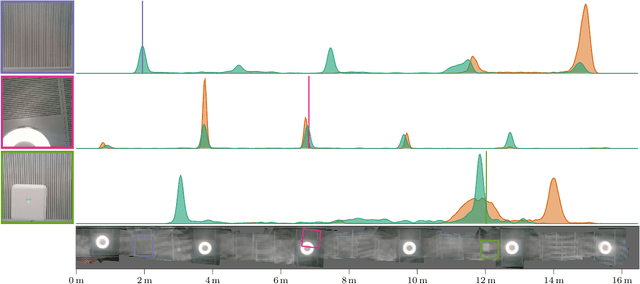

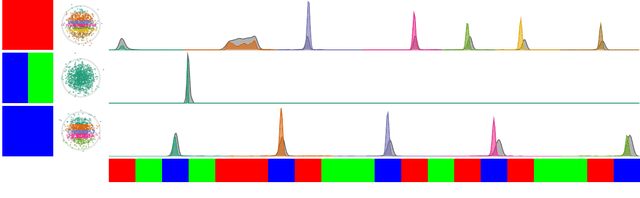

A Probabilistic Framework for Visual Localization in Ambiguous Scenes

Jan 05, 2023

Abstract:Visual localization allows autonomous robots to relocalize when losing track of their pose by matching their current observation with past ones. However, ambiguous scenes pose a challenge for such systems, as repetitive structures can be viewed from many distinct, equally likely camera poses, which means it is not sufficient to produce a single best pose hypothesis. In this work, we propose a probabilistic framework that for a given image predicts the arbitrarily shaped posterior distribution of its camera pose. We do this via a novel formulation of camera pose regression using variational inference, which allows sampling from the predicted distribution. Our method outperforms existing methods on localization in ambiguous scenes. Code and data will be released at https://github.com/efreidun/vapor.

SDFEst: Categorical Pose and Shape Estimation of Objects from RGB-D using Signed Distance Fields

Jul 11, 2022

Abstract:Rich geometric understanding of the world is an important component of many robotic applications such as planning and manipulation. In this paper, we present a modular pipeline for pose and shape estimation of objects from RGB-D images given their category. The core of our method is a generative shape model, which we integrate with a novel initialization network and a differentiable renderer to enable 6D pose and shape estimation from a single or multiple views. We investigate the use of discretized signed distance fields as an efficient shape representation for fast analysis-by-synthesis optimization. Our modular framework enables multi-view optimization and extensibility. We demonstrate the benefits of our approach over state-of-the-art methods in several experiments on both synthetic and real data. We open-source our approach at https://github.com/roym899/sdfest.

SDF-based RGB-D Camera Tracking in Neural Scene Representations

May 04, 2022

Abstract:We consider the problem of tracking the 6D pose of a moving RGB-D camera in a neural scene representation. Several such representations have recently emerged, and we investigate their suitability for the task of camera tracking. In particular, we propose to track an RGB-D camera using a signed distance field-based representation and show that, compared to density-based representations, tracking can be sped up, which enables more robust and accurate pose estimates when computation time is limited.

On the Evaluation of RGB-D-based Categorical Pose and Shape Estimation

Feb 21, 2022

Abstract:Recently, various methods for 6D pose and shape estimation of objects have been proposed. Typically, these methods evaluate their pose estimation in terms of average precision, and reconstruction quality with chamfer distance. In this work, we take a critical look at this predominant evaluation protocol, including metrics and datasets. We propose a new set of metrics, contribute new annotations for the Redwood dataset, and evaluate state-of-the-art methods in a fair comparison. We find that existing methods do not generalize well to unconstrained orientations, and are actually heavily biased towards objects being upright. We contribute an easy-to-use evaluation toolbox with well-defined metrics, method and dataset interfaces, which readily allows evaluation and comparison with various state-of-the-art approaches (see https://github.com/roym899/pose_and_shape_evaluation).

Dispertio: Optimal Sampling for Safe Deterministic Sampling-Based Motion Planning

Sep 30, 2019

Abstract:A key challenge in robotics is the efficient generation of optimal robot motion with safety guarantees in cluttered environments. Recently, deterministic optimal sampling-based motion planners have been shown to achieve good performance towards this end, in particular in terms of planning efficiency, final solution cost, quality guarantees as well as non-probabilistic completeness. Yet their application is still limited to relatively simple systems (i.e., linear, holonomic, Euclidean state spaces). In this work, we extend this technique to the class of symmetric and optimal driftless systems by presenting Dispertio, an offline dispersion optimization technique for computing sampling sets, aware of differential constraints, for sampling-based robot motion planning. We prove that the approach, when combined with PRM*, is deterministically complete and retains asymptotic optimality. Furthermore, in our experiments we show that the proposed deterministic sampling technique outperforms several baselines and alternative methods in terms of planning efficiency and solution cost.
