José Manuel Gaspar Sánchez

Transitional Grid Maps: Efficient Analytical Inference of Dynamic Environments under Limited Sensing

Jan 12, 2024

Abstract: Autonomous agents rely on sensor data to construct representations of their environment, essential for predicting future events and planning their own actions. However, sensor measurements suffer from limited range, occlusions, and sensor noise. These challenges become more evident in dynamic environments, where efficiently inferring the state of the environment based on sensor readings from different times is still an open problem. This work focuses on inferring the state of the dynamic part of the environment, i.e., where dynamic objects might be, based on previous observations and constraints on their dynamics. We formalize the problem and introduce Transitional Grid Maps (TGMs), an efficient analytical solution. TGMs are based on a set of novel assumptions that hold in many practical scenarios. They significantly reduce the complexity of the problem, enabling continuous prediction and updating of the entire dynamic map based on the known static map (see Fig. 1), differentiating them from other alternatives. We compare our approach with a state-of-the-art particle filter, obtaining more prudent predictions in occluded scenarios and on-par results on unoccluded tracking.

Overcoming the Fear of the Dark: Occlusion-Aware Model-Predictive Planning for Automated Vehicles Using Risk Fields

Sep 27, 2023

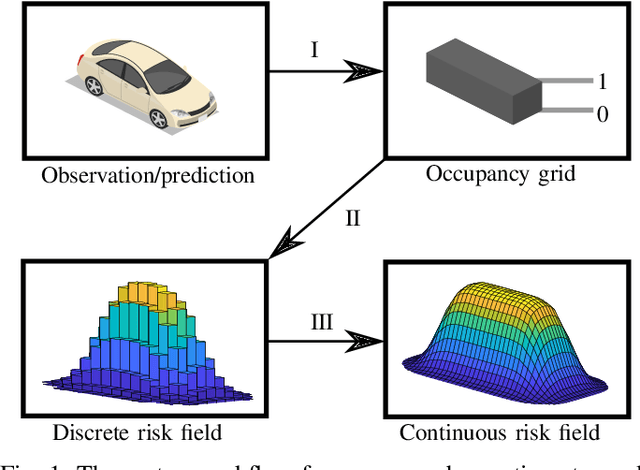

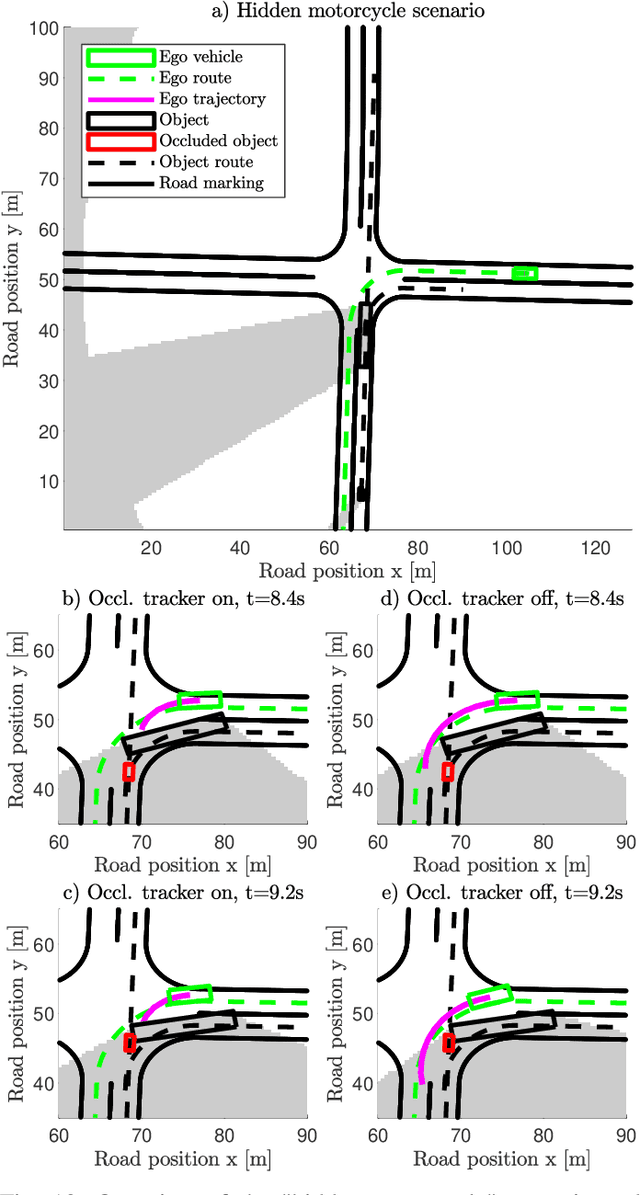

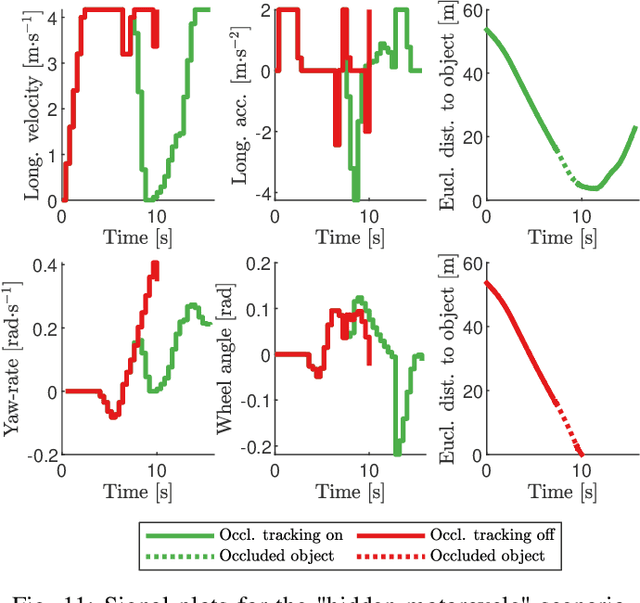

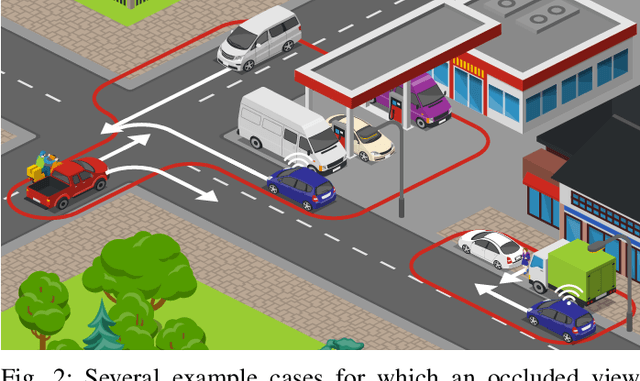

Abstract: As vehicle automation advances, motion planning algorithms face escalating challenges in achieving safe and efficient navigation. Existing Advanced Driver Assistance Systems (ADAS) primarily focus on basic tasks, leaving unexpected scenarios for human intervention, which can be error-prone. State-of-the-art motion planning approaches for higher levels of automation are primarily oriented toward the use of risk or anti-collision constraints, using over-approximations of the shapes and sizes of other road users to prevent collisions. These methods, however, suffer from conservative behavior and the risk of infeasibility in high-risk initial conditions. In contrast, our work introduces a novel multi-objective trajectory generation approach. We propose an innovative method for constructing risk fields that accommodates diverse entity shapes and sizes, which allows us to also account for the presence of potentially occluded objects. This methodology is integrated into an occlusion-aware trajectory generator, enabling dynamic and safe maneuvering through intricate environments while anticipating (potentially hidden) road users and traveling along the infrastructure toward a specific goal. Through theoretical underpinnings and simulations, we validate the effectiveness of our approach. This paper bridges crucial gaps in motion planning for automated vehicles, offering a pathway toward safer and more adaptable autonomous navigation in complex urban contexts.

Finding Critical Scenarios for Automated Driving Systems: A Systematic Literature Review

Oct 16, 2021

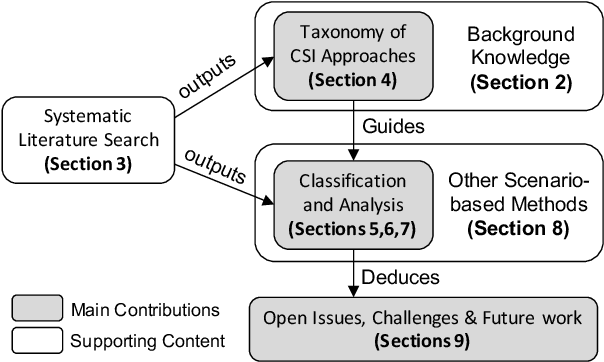

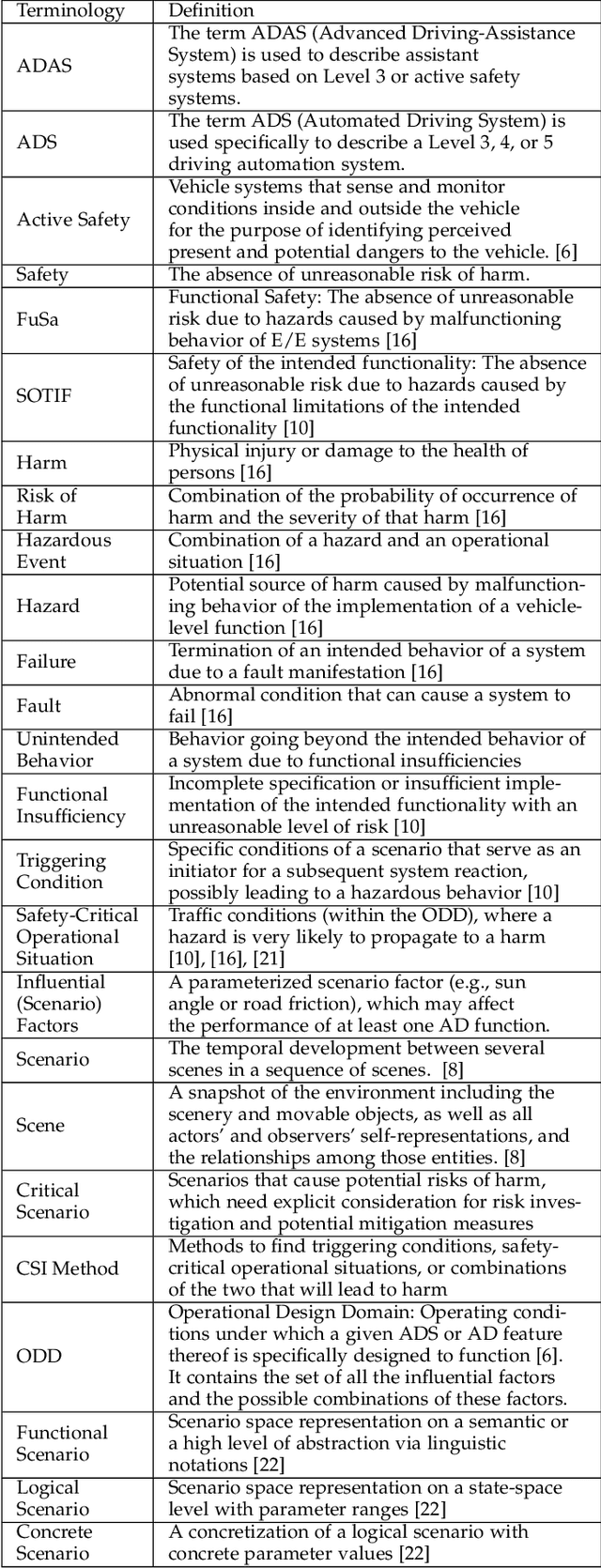

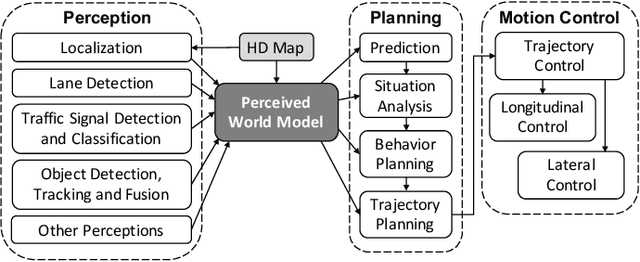

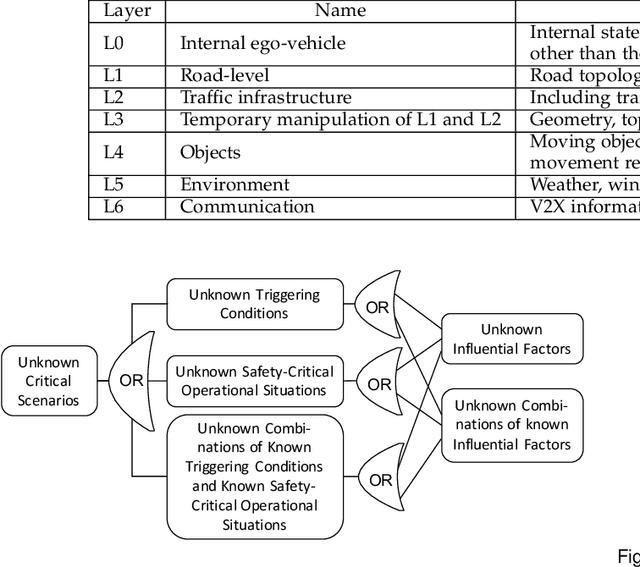

Abstract: Scenario-based approaches have been receiving a huge amount of attention in research and engineering of automated driving systems. Due to the complexity and uncertainty of the driving environment, and the complexity of the driving task itself, the number of possible driving scenarios that an ADS or ADAS may encounter is virtually infinite. Therefore, it is essential to be able to reason about the identification of scenarios, and in particular critical ones that may impose unacceptable risk if not considered. Critical scenarios are particularly important to support design, verification and validation efforts, and as a basis for a safety case. In this paper, we present the results of a systematic literature review in the context of autonomous driving. The main contributions are: (i) introducing a comprehensive taxonomy for critical scenario identification methods; (ii) giving an overview of the state-of-the-art research based on the taxonomy encompassing 86 papers between 2017 and 2020; and (iii) identifying open issues and directions for further research. The provided taxonomy comprises three main perspectives encompassing the problem definition (the why), the solution (the methods to derive scenarios), and the assessment of the established scenarios. In addition, we discuss open research issues considering the perspectives of coverage, practicability, and scenario space explosion.
