Waqas Ali

An Improved Fault Diagnosis Strategy for Induction Motors Using Weighted Probability Ensemble Deep Learning

Dec 24, 2024

Abstract: Early detection of faults in induction motors (IMs) is crucial for ensuring uninterrupted operation in industrial settings. Among the fault types encountered in induction motors, bearing, rotor, and stator faults are the most prevalent. This paper introduces a Weighted Probability Ensemble Deep Learning (WPEDL) methodology tailored for diagnosing induction motor faults from high-dimensional vibration and current data. The Short-Time Fourier Transform (STFT) is employed to extract features from both vibration and current signals. The performance of the WPEDL fault diagnosis method is compared against conventional deep learning models, demonstrating the superior efficacy of the proposed system. The multi-class fault diagnosis system based on WPEDL achieves high accuracies across the fault types: 99.05% for bearing faults (vibration signal), 99.10% and 99.50% for rotor faults (current and vibration signals), and 99.60% and 99.52% for stator faults (current and vibration signals), respectively. To evaluate the robustness of the multi-class classification decisions, tests were conducted on a combined dataset of 52,000 STFT images encompassing all three faults. The proposed model outperforms the other models, achieving an accuracy of 98.89%. The findings underscore the effectiveness and reliability of the WPEDL approach for early-stage fault diagnosis in IMs, offering promising insights for enhancing industrial operational efficiency and reliability.
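The abstract does not spell out how the ensemble combines its members, so the following is only a minimal sketch of one common reading of "weighted probability ensemble": each base model emits class probabilities, and the ensemble takes a normalized weighted average before picking the most likely class. The function names, the three-class example, and the weight values are all hypothetical and are not taken from the paper.

```python
from typing import List, Sequence


def weighted_probability_ensemble(
    model_probs: Sequence[Sequence[float]],
    weights: Sequence[float],
) -> List[float]:
    """Fuse per-model class probabilities with a normalized weighted average."""
    if len(model_probs) != len(weights):
        raise ValueError("need exactly one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    n_classes = len(model_probs[0])
    fused = [0.0] * n_classes
    for probs, weight in zip(model_probs, weights):
        if len(probs) != n_classes:
            raise ValueError("all models must score the same classes")
        for c, p in enumerate(probs):
            # Dividing by the total keeps the fused vector a valid distribution.
            fused[c] += (weight / total) * p
    return fused


def predict_class(fused: Sequence[float]) -> int:
    """Return the index of the class with the highest fused probability."""
    return max(range(len(fused)), key=lambda c: fused[c])


# Hypothetical softmax outputs of three base models for one STFT image,
# over three illustrative classes (e.g. bearing, rotor, stator).
probs = [
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.8, 0.1],
]
# Assumed weights, e.g. proportional to each model's validation accuracy.
weights = [0.5, 0.3, 0.2]

fused = weighted_probability_ensemble(probs, weights)
predicted = predict_class(fused)
```

In this illustration the first model alone would pick class 0, but the fused distribution favors class 1 because the other two models agree on it with enough combined weight.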

Leveraging Deep Learning with Multi-Head Attention for Accurate Extraction of Medicine from Handwritten Prescriptions

Dec 24, 2024

Abstract: Extracting medication names from handwritten doctor prescriptions is challenging due to the wide variability in handwriting styles and prescription formats. This paper presents a robust method for extracting medicine names using a combination of Mask R-CNN and Transformer-based Optical Character Recognition (TrOCR) with Multi-Head Attention and Positional Embeddings. A novel dataset, featuring diverse handwritten prescriptions from various regions of Pakistan, was utilized to fine-tune the model on different handwriting styles. The Mask R-CNN model segments the prescription images to focus on the medicinal sections, while the TrOCR model, enhanced by Multi-Head Attention and Positional Embeddings, transcribes the isolated text. The transcribed text is then matched against a pre-existing database for accurate identification. The proposed approach achieved a character error rate (CER) of 1.4% on standard benchmarks, highlighting its potential as a reliable and efficient tool for automating medicine name extraction.
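For readers unfamiliar with the metric, character error rate is conventionally the character-level edit distance between the recognized text and the reference, divided by the reference length. The sketch below shows that standard definition in plain Python; it is not the paper's evaluation code, and the example strings are made up.

```python
def levenshtein(reference: str, hypothesis: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution_cost = 0 if ref_char == hyp_char else 1
            current.append(
                min(
                    previous[j] + 1,                      # deletion
                    current[j - 1] + 1,                   # insertion
                    previous[j - 1] + substitution_cost,  # match or substitution
                )
            )
        previous = current
    return previous[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER = edit distance / number of characters in the reference."""
    if not reference:
        raise ValueError("reference text must not be empty")
    return levenshtein(reference, hypothesis) / len(reference)


# Made-up example: one substituted character in a seven-character name.
cer = character_error_rate("panadol", "panadal")
```

A CER of 1.4% therefore corresponds to roughly one or two character edits per hundred reference characters.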

HD-maps as Prior Information for Globally Consistent Mapping in GPS-denied Environments

Jul 28, 2024

Abstract: In recent years, prior maps have become a mainstream tool in autonomous navigation. However, commonly available prior maps are still tailored to control-and-decision tasks, and the use of these maps for localization remains largely unexplored. To bridge this gap, we propose a lidar-based localization and mapping (LOAM) system that can exploit the HD-maps commonly available in autonomous driving scenarios. Specifically, we propose a technique to extract information from the drivable area and ground surface height components of the HD-maps to construct 4DOF pose priors. These pose priors are then integrated into the pose-graph optimization problem to create a globally consistent 3D map. Experiments show that our scheme can significantly improve the global consistency of the map compared to state-of-the-art lidar-only approaches, making it a useful technology for enhancing the system's robustness, especially in GPS-denied environments. Moreover, our work serves as a first step towards long-term navigation of robots in familiar environments by updating a map. In autonomous driving, this could enable updating the HD-maps without sourcing a new one from a third-party company, which is expensive and introduces delays between a change in the world and the updated map.

A life-long SLAM approach using adaptable local maps based on rasterized LIDAR images

Jul 15, 2021

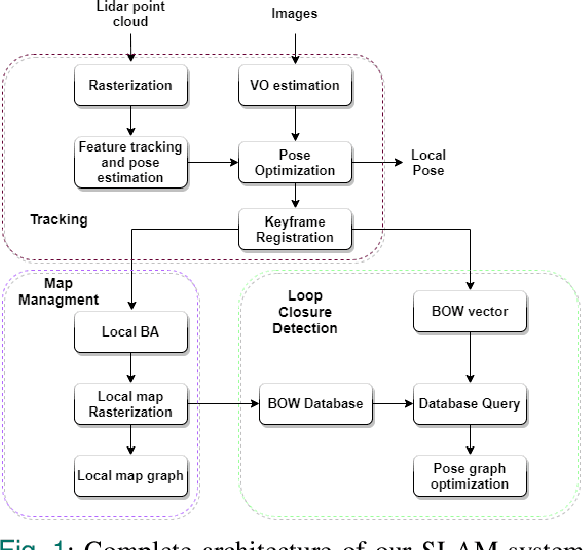

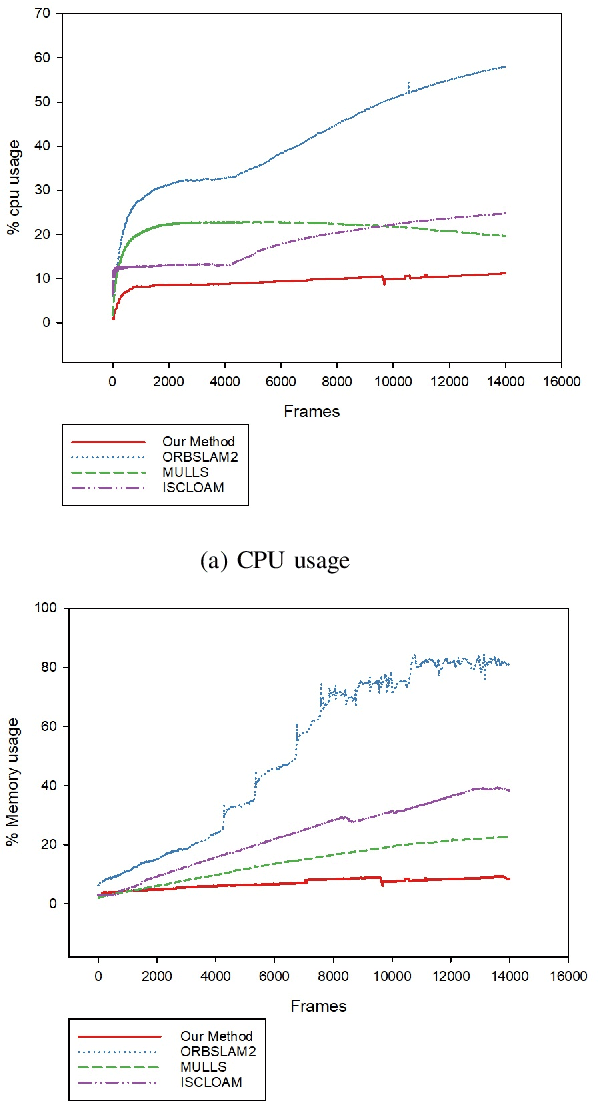

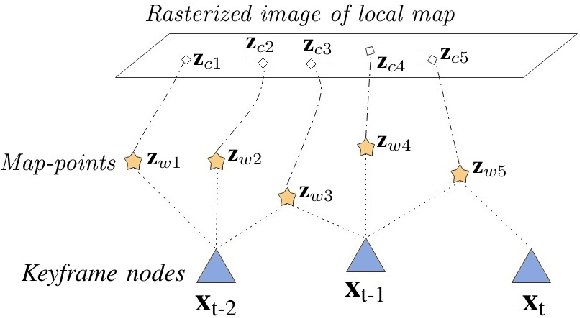

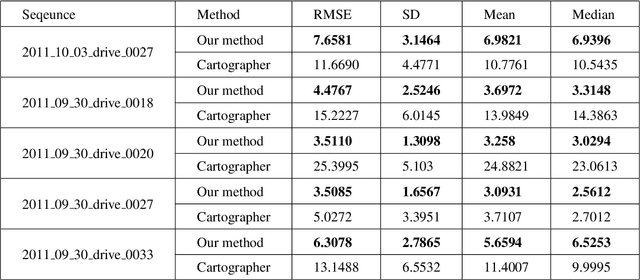

Abstract: Most real-time autonomous robot applications require a robot to traverse a dynamic space for a long time. In some cases, a robot needs to work in the same environment repeatedly. Such applications give rise to the problem of life-long SLAM. Life-long SLAM presents two main challenges: tracking should not fail in a dynamic environment, and the mapping strategy must be robust and efficient. The system should update maps with new information while also keeping track of older observations. However, mapping over a long time can increase computational requirements. In this paper, we propose a solution to the problem of life-long SLAM. We represent the global map as a set of rasterized images of local maps, along with a map management system responsible for updating local maps and keeping track of older values. We also present an efficient approach that uses the bag of visual words method for loop closure detection and relocalization. We evaluate the performance of our system on the KITTI dataset and an indoor dataset. Our loop closure system reported recall and precision above 90 percent. The computational cost of our system is much lower than that of state-of-the-art methods, and it remains low even for long-term operation.

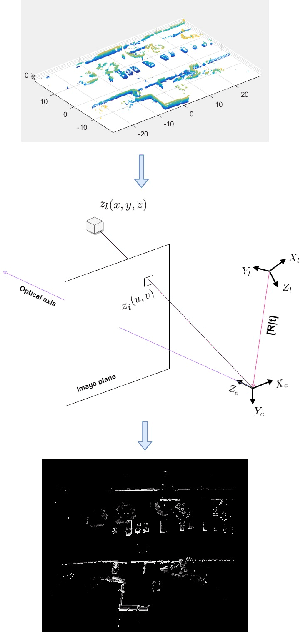

6-DOF Feature based LIDAR SLAM using ORB Features from Rasterized Images of 3D LIDAR Point Cloud

Mar 19, 2021

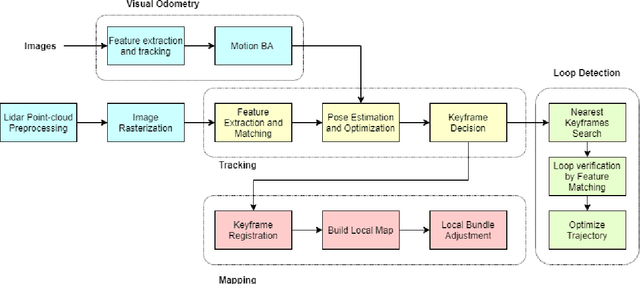

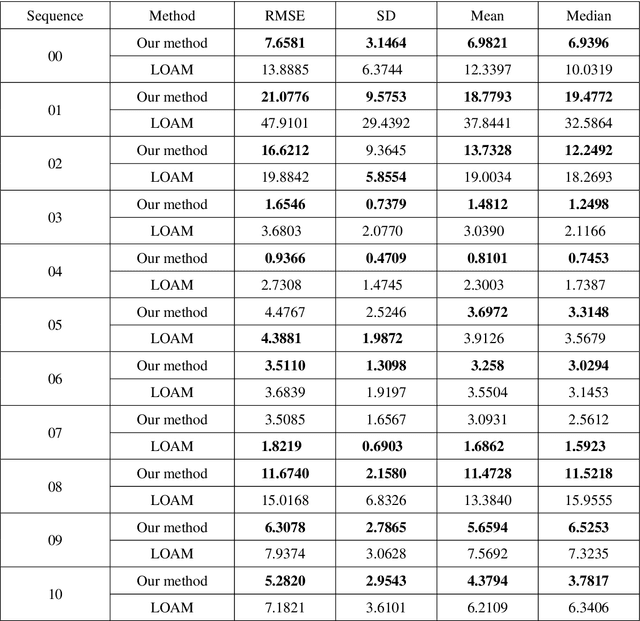

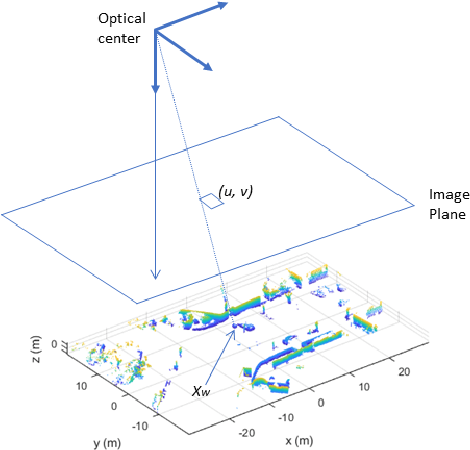

Abstract: An accurate and computationally efficient SLAM algorithm is vital for modern autonomous vehicles. To make the algorithm lightweight, most SLAM systems rely on feature detection from images for vision SLAM or from point clouds for laser-based methods. Feature detection in a 3D point cloud is a computationally challenging task. In this paper, we propose a feature detection method that projects a 3D point cloud to form an image and applies a vision-based feature detection technique. The proposed method gives repeatable and stable features in a variety of environments. Based on such features, we build a 6-DOF SLAM system consisting of tracking, mapping, and loop closure threads. For loop detection, we employ a two-step approach: nearest key-frame detection, followed by loop candidate verification through matching features extracted from rasterized LIDAR images. Furthermore, we utilize a key-frame structure to achieve a lightweight SLAM system. The proposed system is evaluated on the KITTI dataset and the University of Michigan Ford Campus dataset. Through experimental results, we show that the algorithm presented in this paper can substantially reduce the computational cost of feature detection from the point cloud, and of the whole SLAM system, while giving accurate results.
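The abstract does not state which projection is used, so the following is only an illustrative sketch of the general idea of turning a point cloud into an image: a top-down rasterization in which each grid cell stores the maximum height of the points falling into it. The function name, the 20 m extent, and the 0.5 m cell size are assumptions chosen for the example. An image feature detector such as ORB could then run on the resulting grid once it is scaled to a grayscale image.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, sensor at the origin


def rasterize_top_down(
    points: Iterable[Point],
    extent: float = 20.0,     # assumed half-width of the square area, in metres
    resolution: float = 0.5,  # assumed cell size, in metres per pixel
) -> List[List[float]]:
    """Project points onto the x-y plane, keeping the maximum height per cell."""
    size = int(round(2 * extent / resolution))
    # Empty cells stay at 0.0, so heights at or below zero are not recorded.
    image = [[0.0] * size for _ in range(size)]
    for x, y, z in points:
        # floor (not int truncation) so points just outside the negative
        # border are rejected instead of being folded into row or column 0.
        col = math.floor((x + extent) / resolution)
        row = math.floor((y + extent) / resolution)
        if 0 <= row < size and 0 <= col < size:
            image[row][col] = max(image[row][col], z)
    return image


# Made-up points: two share a cell, one lies near a corner, one is out of range.
cloud = [
    (0.0, 0.0, 1.5),
    (0.1, 0.1, 2.0),
    (-19.9, -19.9, 0.7),
    (25.0, 0.0, 3.0),
]
image = rasterize_top_down(cloud)
occupied_cells = sum(1 for row in image for value in row if value > 0.0)
```

Working on a fixed-size 2D grid is what makes the feature detection cost independent of the raw point count, which is consistent with the efficiency argument in the abstract.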
