Usman Ali

Shanghai Jiao Tong University

Test-Time Adaptation for Anomaly Segmentation via Topology-Aware Optimal Transport Chaining

Jan 28, 2026

Abstract:Deep topological data analysis (TDA) offers a principled framework for capturing structural invariants such as connectivity and cycles that persist across scales, making it a natural fit for anomaly segmentation (AS). Unlike threshold-based binarisation, which produces brittle masks under distribution shift, TDA allows anomalies to be characterised as disruptions to global structure rather than local fluctuations. We introduce TopoOT, a topology-aware optimal transport (OT) framework that integrates multi-filtration persistence diagrams (PDs) with test-time adaptation (TTA). Our key innovation is Optimal Transport Chaining, which sequentially aligns PDs across thresholds and filtrations, yielding geodesic stability scores that identify features consistently preserved across scales. These stability-aware pseudo-labels supervise a lightweight head trained online with OT-consistency and contrastive objectives, ensuring robust adaptation under domain shift. Across standard 2D and 3D anomaly detection benchmarks, TopoOT achieves state-of-the-art performance, outperforming the most competitive methods by up to +24.1% mean F1 on 2D datasets and +10.2% on 3D AS benchmarks.

Hybrid Firefly Algorithm and Sperm Swarm Optimization Algorithm using Newton-Raphson Method (HFASSON) and its application in CR-VANET

Feb 03, 2025

Abstract:This paper proposes a new hybrid algorithm, combining FA, SSO, and the N-R method to accelerate convergence towards global optima, named the Hybrid Firefly Algorithm and Sperm Swarm Optimization with Newton-Raphson (HFASSON). The performance of HFASSON is evaluated using 23 benchmark functions from the CEC 2017 suite, tested in 30, 50, and 100 dimensions. A statistical comparison is performed to assess the effectiveness of HFASSON against FA, SSO, HFASSO, and five hybrid algorithms: Water Cycle Moth Flame Optimization (WCMFO), Hybrid Particle Swarm Optimization and Genetic Algorithm (HPSOGA), Hybrid Sperm Swarm Optimization and Gravitational Search Algorithm (HSSOGSA), Grey Wolf and Cuckoo Search Algorithm (GWOCS), and Hybrid Firefly Genetic Algorithm (FAGA). Results from the Friedman rank test show the superior performance of HFASSON. Additionally, HFASSON is applied to Cognitive Radio Vehicular Ad-hoc Networks (CR-VANET), outperforming basic CR-VANET in spectrum utilization. These findings demonstrate HFASSON's efficiency in wireless network applications.
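
The abstract does not say how the Newton-Raphson (N-R) step is wired into the firefly and sperm-swarm updates, so the following is only a minimal sketch of the generic N-R iteration itself, `x <- x - g(x) / g'(x)`. The function name `newton_raphson` and its parameters are hypothetical and are not taken from the paper. Applying it to the derivative of an objective is one plausible way such a step could refine a candidate solution.

```python
def newton_raphson(g, g_prime, x0, tol=1e-10, max_iter=50):
    """Find a root of g near x0 with the update x <- x - g(x) / g'(x).

    Illustrative helper only; not the HFASSON implementation.
    """
    x = x0
    for _ in range(max_iter):
        slope = g_prime(x)
        if slope == 0:
            # A flat slope makes the update undefined, so stop here.
            break
        step = g(x) / slope
        x -= step
        if abs(step) < tol:
            break
    return x


# Refining a candidate minimiser of f(x) = (x - 3)^2 by finding the root
# of its derivative g(x) = 2 (x - 3), whose own derivative is 2.
refined = newton_raphson(lambda x: 2 * (x - 3), lambda x: 2.0, x0=10.0)
```

For a quadratic objective the derivative is linear, so a single update already lands on the stationary point; for general objectives the iteration only converges locally.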

A Multimodal Lightweight Approach to Fault Diagnosis of Induction Motors in High-Dimensional Dataset

Jan 07, 2025

Abstract:An accurate AI-based diagnostic system for induction motors (IMs) holds the potential to enhance proactive maintenance, mitigating unplanned downtime and curbing overall maintenance costs within an industrial environment. Among the prevalent faults in IMs, a Broken Rotor Bar (BRB) fault is frequently encountered. Researchers have proposed various fault diagnosis approaches using signal processing (SP), machine learning (ML), deep learning (DL), and hybrid architectures for BRB faults. One limitation in the existing literature is that these architectures are trained on relatively small datasets, which risks overfitting when such systems are deployed in industrial environments. This paper addresses this limitation by training on large-scale BRB fault data using a transfer-learning-based lightweight DL model, ShuffleNetV2, to diagnose one, two, three, and four BRB faults from current and vibration signal data. Spectral images for training and testing are generated using a Short-Time Fourier Transform (STFT). The dataset comprises 57,500 images, with 47,500 used for training and 10,000 for testing. The ShuffleNetV2 model exhibited superior performance, accurately classifying 98.856% of spectral images at a lower computational cost. To further enhance the visualization of harmonic sidebands resulting from broken bars, the Fast Fourier Transform (FFT) is applied to current and vibration data. The paper also reports the training and testing times for each model, contributing to a comprehensive understanding of the proposed fault diagnosis methodology. The findings provide valuable insights into the performance and efficiency of different ML and DL models, offering a foundation for the development of robust fault diagnosis systems for induction motors in industrial settings.
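
To make the STFT step concrete, here is a minimal pure-Python sketch of how a magnitude spectrogram can be computed from a one-dimensional signal. It is not the authors' pipeline: the function name `stft_magnitude`, the Hann window, and the frame and hop sizes are all assumptions chosen for illustration, and a real system would more likely use an FFT library.

```python
import cmath
import math


def stft_magnitude(signal, frame_len, hop):
    """Return one magnitude spectrum (bins 0..frame_len//2) per frame.

    Illustrative sketch: Hann-windowed frames, each transformed with a
    direct DFT. Slow, but dependency-free.
    """
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len)
              for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(frame_len)]
        spectrum = []
        for k in range(frame_len // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            spectrum.append(abs(acc))
        frames.append(spectrum)
    return frames


# A sinusoid that completes exactly 4 cycles per 32-sample frame.
tone = [math.sin(2 * math.pi * 4 * n / 32) for n in range(128)]
spectrogram = stft_magnitude(tone, frame_len=32, hop=16)
peak_bin = max(range(len(spectrogram[0])), key=spectrogram[0].__getitem__)
```

Each row of `spectrogram` corresponds to one time frame; stacking the rows and mapping magnitudes to pixel intensities yields the kind of spectral image a CNN classifier can consume.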

An Improved Fault Diagnosis Strategy for Induction Motors Using Weighted Probability Ensemble Deep Learning

Dec 24, 2024

Abstract:Early detection of faults in induction motors is crucial for ensuring uninterrupted operations in industrial settings. Among the various fault types encountered in induction motors, bearing, rotor, and stator faults are the most prevalent. This paper introduces a Weighted Probability Ensemble Deep Learning (WPEDL) methodology, tailored for effectively diagnosing induction motor faults using high-dimensional data extracted from vibration and current features. The Short-Time Fourier Transform (STFT) is employed to extract features from both vibration and current signals. The performance of the WPEDL fault diagnosis method is compared against conventional deep learning models, demonstrating the superior efficacy of the proposed system. The multi-class fault diagnosis system based on WPEDL achieves high accuracies across different fault types: 99.05% for bearing faults (vibration signal), 99.10% and 99.50% for rotor faults (current and vibration signals, respectively), and 99.60% and 99.52% for stator faults (current and vibration signals, respectively). To evaluate the robustness of our multi-class classification decisions, tests have been conducted on a combined dataset of 52,000 STFT images encompassing all three faults. Our proposed model outperforms other models, achieving an accuracy of 98.89%. The findings underscore the effectiveness and reliability of the WPEDL approach for early-stage fault diagnosis in IMs, offering promising insights for enhancing industrial operational efficiency and reliability.
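
The abstract does not specify how WPEDL derives its weights, so the sketch below shows only the general idea of a weighted probability ensemble: each model outputs class probabilities, the vectors are averaged with normalised weights, and the class with the highest fused probability wins. The function names, the example probabilities, and the weights are all hypothetical.

```python
def weighted_probability_ensemble(prob_vectors, weights):
    """Fuse per-model class probabilities with a normalised weighted average.

    Illustrative sketch; the paper's actual weighting scheme is not
    described in the abstract.
    """
    if not prob_vectors or len(prob_vectors) != len(weights):
        raise ValueError("need one weight per probability vector")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    n_classes = len(prob_vectors[0])
    fused = [0.0] * n_classes
    for probs, weight in zip(prob_vectors, weights):
        if len(probs) != n_classes:
            raise ValueError("all models must predict the same classes")
        for c, p in enumerate(probs):
            fused[c] += (weight / total) * p
    return fused


def predict_class(prob_vectors, weights):
    """Return the index of the class with the highest fused probability."""
    fused = weighted_probability_ensemble(prob_vectors, weights)
    return max(range(len(fused)), key=fused.__getitem__)


# Three hypothetical models voting over three hypothetical fault classes.
model_probs = [[0.6, 0.3, 0.1], [0.2, 0.7, 0.1], [0.3, 0.6, 0.1]]
model_weights = [0.2, 0.5, 0.3]
fused_probs = weighted_probability_ensemble(model_probs, model_weights)
winner = predict_class(model_probs, model_weights)
```

One common choice, assumed here rather than confirmed by the source, is to set each weight in proportion to the corresponding model's validation accuracy.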

Leveraging Deep Learning with Multi-Head Attention for Accurate Extraction of Medicine from Handwritten Prescriptions

Dec 24, 2024

Abstract:Extracting medication names from handwritten doctor prescriptions is challenging due to the wide variability in handwriting styles and prescription formats. This paper presents a robust method for extracting medicine names using a combination of Mask R-CNN and Transformer-based Optical Character Recognition (TrOCR) with Multi-Head Attention and Positional Embeddings. A novel dataset, featuring diverse handwritten prescriptions from various regions of Pakistan, was utilized to fine-tune the model on different handwriting styles. The Mask R-CNN model segments the prescription images to focus on the medicinal sections, while the TrOCR model, enhanced by Multi-Head Attention and Positional Embeddings, transcribes the isolated text. The transcribed text is then matched against a pre-existing database for accurate identification. The proposed approach achieved a character error rate (CER) of 1.4% on standard benchmarks, highlighting its potential as a reliable and efficient tool for automating medicine name extraction.
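
For readers unfamiliar with the metric, the character error rate is conventionally the Levenshtein edit distance between the recognised text and the reference text, divided by the reference length. The sketch below follows that standard definition; the helper names and the example strings are illustrative and do not come from the paper's evaluation code.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of character insertions, deletions and substitutions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # delete a reference char
                            curr[j - 1] + 1,      # insert a hypothesis char
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """Edit distance normalised by the reference length."""
    if not ref:
        raise ValueError("reference text must be non-empty")
    return levenshtein(ref, hyp) / len(ref)


# One wrong character in an 11-character medicine name.
cer = character_error_rate("paracetamol", "paracetamal")
```

Because the distance is normalised by the reference length, the CER can exceed 1.0 when the hypothesis contains many spurious characters.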

Towards Fault Diagnosis in Induction Motor using Fractional Fourier Transform

Dec 24, 2024

Abstract:A method for detecting current-signature faults using the Fractional Fourier Transform (FrFT) has been developed. The method has been applied to the real-time steady-state current of an inverter-fed high-power induction motor for fault determination. The method calculates the relative norm error to find the threshold value that separates healthy from unhealthy induction motors at different operating frequencies. The experimental results demonstrate that the total harmonic distortion of an unhealthy motor is much larger than that of a healthy motor, that the relative norm error of the different healthy induction motors is less than 0.3, and that the relative norm error of the unhealthy induction motor is greater than 0.5. The developed method can function as a simple operator-assisted tool for determining induction motor faults in real time.
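
The thresholding logic can be sketched as follows. The abstract does not define the relative norm error precisely, so this example assumes the usual form, the Euclidean norm of the difference between a measured vector and a reference vector divided by the norm of the reference. Only the 0.3 and 0.5 values come from the abstract; the function names, the reference vector, and the "undetermined" band between the two values are assumptions.

```python
import math


def relative_norm_error(reference, measured):
    """||measured - reference|| / ||reference|| using the Euclidean norm.

    Assumed definition; the abstract does not spell out the formula.
    """
    if len(reference) != len(measured):
        raise ValueError("vectors must have the same length")
    ref_norm = math.sqrt(sum(r * r for r in reference))
    if ref_norm == 0:
        raise ValueError("reference vector must be non-zero")
    diff_norm = math.sqrt(sum((m - r) ** 2
                              for r, m in zip(reference, measured)))
    return diff_norm / ref_norm


def classify_motor(error, healthy_below=0.3, faulty_above=0.5):
    """Map an error value to a label using the ranges reported in the abstract."""
    if error < healthy_below:
        return "healthy"
    if error > faulty_above:
        return "faulty"
    return "undetermined"  # hypothetical label for the unreported gap


# Hypothetical transform magnitudes for a reference and two measurements.
reference = [1.0, 0.0, 0.0]
label_a = classify_motor(relative_norm_error(reference, [1.0, 0.1, 0.0]))
label_b = classify_motor(relative_norm_error(reference, [1.0, 0.6, 0.0]))
```

In the described method the vectors would presumably be FrFT outputs of the steady-state current at a given operating frequency, but that pairing is an interpretation of the abstract rather than a confirmed detail.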

Gated-Attention Feature-Fusion Based Framework for Poverty Prediction

Nov 29, 2024

Abstract:This research paper addresses the significant challenge of accurately estimating poverty levels using deep learning, particularly in developing regions where traditional methods like household surveys are often costly, infrequent, and quickly become outdated. To address these issues, we propose a state-of-the-art Convolutional Neural Network (CNN) architecture, extending the ResNet50 model by incorporating a Gated-Attention Feature-Fusion Module (GAFM). Our architecture is designed to improve the model's ability to capture and combine both global and local features from satellite images, leading to more accurate poverty estimates. The model achieves an R² score of 75%, significantly outperforming existing leading methods in poverty mapping. This improvement is due to the model's capacity to focus on and refine the most relevant features while filtering out unnecessary data, which makes it a powerful tool for remote sensing and poverty estimation.

Locally-Focused Face Representation for Sketch-to-Image Generation Using Noise-Induced Refinement

Nov 28, 2024

Abstract:This paper presents a novel deep-learning framework that significantly enhances the transformation of rudimentary face sketches into high-fidelity colour images. Employing a Convolutional Block Attention-based Auto-encoder Network (CA2N), our approach effectively captures and enhances critical facial features through a block attention mechanism within an encoder-decoder architecture. Subsequently, the framework utilises a noise-induced conditional Generative Adversarial Network (cGAN) process that allows the system to maintain high performance even on domains unseen during training. These enhancements lead to considerable improvements in image realism and fidelity, with our model outperforming the best competing method by FID margins of 17, 23, and 38 on the CelebAMask-HQ, CUHK, and CUFSF datasets, respectively. The model sets a new state-of-the-art in sketch-to-image generation, can generalize across sketch types, and offers a robust solution for applications such as criminal identification in law enforcement.

F-3DGS: Factorized Coordinates and Representations for 3D Gaussian Splatting

May 28, 2024

Abstract:The neural radiance field (NeRF) has made significant strides in representing 3D scenes and synthesizing novel views. Despite its advancements, the high computational costs of NeRF have posed challenges for its deployment in resource-constrained environments and real-time applications. As an alternative to NeRF-like neural rendering methods, 3D Gaussian Splatting (3DGS) offers rapid rendering speeds while maintaining excellent image quality. However, as it represents objects and scenes using a myriad of Gaussians, it requires substantial storage to achieve high-quality representation. To mitigate the storage overhead, we propose Factorized 3D Gaussian Splatting (F-3DGS), a novel approach that drastically reduces storage requirements while preserving image quality. Inspired by classical matrix and tensor factorization techniques, our method represents and approximates dense clusters of Gaussians with significantly fewer Gaussians through efficient factorization. We aim to efficiently represent dense 3D Gaussians by approximating them with a limited amount of information for each axis and their combinations. This method allows us to encode a substantially large number of Gaussians along with their essential attributes -- such as color, scale, and rotation -- necessary for rendering using a relatively small number of elements. Extensive experimental results demonstrate that F-3DGS achieves a significant reduction in storage costs while maintaining comparable quality in rendered images.
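
A toy example may help show why per-axis factorization saves storage. It is a loose illustration of the idea in the abstract, not the F-3DGS representation itself: here a dense grid of Gaussian centres is regenerated from three short per-axis coordinate lists, so only the lists need to be stored. All function names and values are hypothetical, and attributes such as color, scale, and rotation are ignored.

```python
from itertools import product


def expand_positions(xs, ys, zs):
    """Rebuild every (x, y, z) centre from the per-axis coordinate lists."""
    return list(product(xs, ys, zs))


def scalars_factorized(xs, ys, zs):
    """Scalars stored when only the per-axis lists are kept."""
    return len(xs) + len(ys) + len(zs)


def scalars_dense(xs, ys, zs):
    """Scalars stored when every centre keeps its own three coordinates."""
    return 3 * len(xs) * len(ys) * len(zs)


# Four hypothetical coordinates per axis describe 64 Gaussian centres.
axis = [0.0, 0.25, 0.5, 0.75]
centres = expand_positions(axis, axis, axis)
compact = scalars_factorized(axis, axis, axis)
dense = scalars_dense(axis, axis, axis)
```

In this toy setting storage grows with the sum of the axis lengths rather than their product, which is the kind of saving classical matrix and tensor factorizations exploit.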

Sharp-NeRF: Grid-based Fast Deblurring Neural Radiance Fields Using Sharpness Prior

Jan 01, 2024

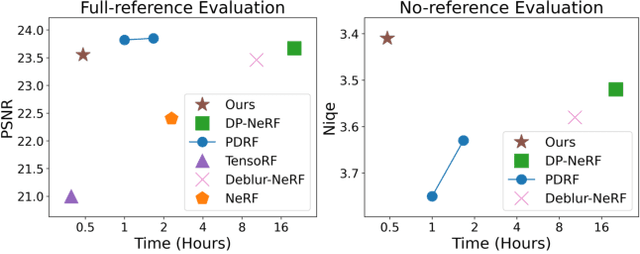

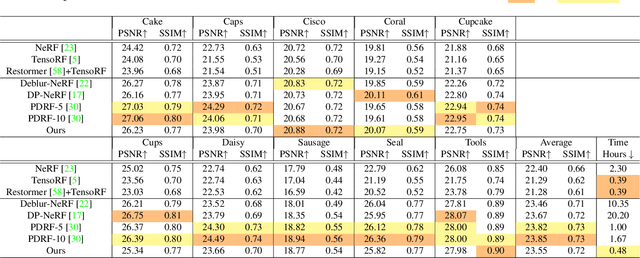

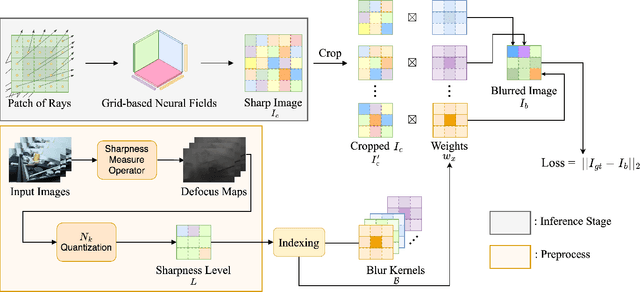

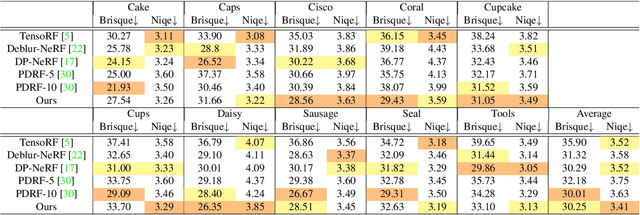

Abstract:Neural Radiance Fields (NeRF) have shown remarkable performance in neural rendering-based novel view synthesis. However, NeRF suffers from severe visual quality degradation when the input images have been captured under imperfect conditions, such as poor illumination, defocus blurring, and lens aberrations. Defocus blur in particular is common in images captured with ordinary cameras. Although a few recent studies have proposed methods that render sharp, high-quality images, they still face key challenges. In particular, those methods employ a Multi-Layer Perceptron (MLP) based NeRF, which requires tremendous computational time. To overcome these shortcomings, this paper proposes Sharp-NeRF, a grid-based NeRF that renders clean and sharp images from blurry input images within half an hour of training. To do so, we use several grid-based kernels to accurately model the sharpness/blurriness of the scene. The sharpness level of the pixels is computed to learn the spatially varying blur kernels. We have conducted experiments on benchmarks consisting of blurry images and have evaluated full-reference and non-reference metrics. The qualitative and quantitative results show that our approach renders sharp novel views with vivid colors and fine details, and that it trains considerably faster than previous works. Our project page is available at https://benhenryl.github.io/SharpNeRF/
