Thien-Minh Nguyen

PERAL: Perception-Aware Motion Control for Passive LiDAR Excitation in Spherical Robots

Sep 18, 2025

Abstract:Autonomous mobile robots increasingly rely on LiDAR-IMU odometry for navigation and mapping, yet horizontally mounted LiDARs such as the MID360 capture few near-ground returns, limiting terrain awareness and degrading performance in feature-scarce environments. Prior solutions - static tilt, active rotation, or high-density sensors - either sacrifice horizontal perception or incur added actuators, cost, and power. We introduce PERAL, a perception-aware motion control framework for spherical robots that achieves passive LiDAR excitation without dedicated hardware. By modeling the coupling between internal differential-drive actuation and sensor attitude, PERAL superimposes bounded, non-periodic oscillations onto nominal goal- or trajectory-tracking commands, enriching vertical scan diversity while preserving navigation accuracy. Implemented on a compact spherical robot, PERAL is validated across laboratory, corridor, and tactical environments. Experiments demonstrate up to 96 percent map completeness, a 27 percent reduction in trajectory tracking error, and robust near-ground human detection, all at lower weight, power, and cost compared with static tilt, active rotation, and fixed horizontal baselines. The design and code will be open-sourced upon acceptance.
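To make the idea of "bounded, non-periodic oscillations" superimposed on a nominal command concrete, here is a minimal sketch. It is not the PERAL controller: the function names, the amplitude, and the choice of two sinusoids with an irrational frequency ratio are all assumptions made for illustration, and the abstract does not say how the oscillation is actually generated.

```python
import math

def excitation(t, amplitude=0.2, base_freq_hz=0.5):
    """Hypothetical bounded, non-periodic offset.

    Two sinusoids whose frequency ratio is irrational (sqrt(2)) never
    repeat exactly, and averaging them keeps |offset| <= amplitude.
    """
    f1 = base_freq_hz
    f2 = base_freq_hz * math.sqrt(2.0)
    raw = 0.5 * (math.sin(2.0 * math.pi * f1 * t) + math.sin(2.0 * math.pi * f2 * t))
    return amplitude * raw

def superimpose(nominal_command, t, amplitude=0.2):
    """Add the excitation offset on top of a nominal tracking command."""
    return nominal_command + excitation(t, amplitude)

if __name__ == "__main__":
    # Print a few perturbed commands around a nominal value of 1.0.
    for k in range(5):
        t = 0.25 * k
        print(f"t={t:.2f}  command={superimpose(1.0, t):.4f}")
```

Because the offset is bounded by `amplitude`, the perturbed command stays within a known band around the nominal one, which is the property a tracking controller would need in order to keep its error small.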

Aerial Target Encirclement and Interception with Noisy Range Observations

Aug 11, 2025

Abstract:This paper proposes a strategy to encircle and intercept a non-cooperative aerial point-mass moving target by leveraging noisy range measurements for state estimation. In this approach, the guardians actively ensure the observability of the target by using an anti-synchronization (AS), 3D "vibrating string" trajectory, which enables rapid position and velocity estimation based on the Kalman filter. Additionally, a novel anti-target controller is designed for the guardians to enable adaptive transitions from encircling a protected target to encircling, intercepting, and neutralizing a hostile target, taking into consideration the input constraints of the guardians. Based on the guaranteed uniform observability, the exponentially bounded stability of the state estimation error and the convergence of the encirclement error are rigorously analyzed. Simulation results and real-world UAV experiments are presented to further validate the effectiveness of the system design.

EGS-SLAM: RGB-D Gaussian Splatting SLAM with Events

Aug 09, 2025

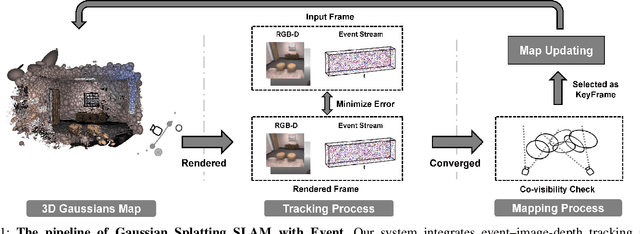

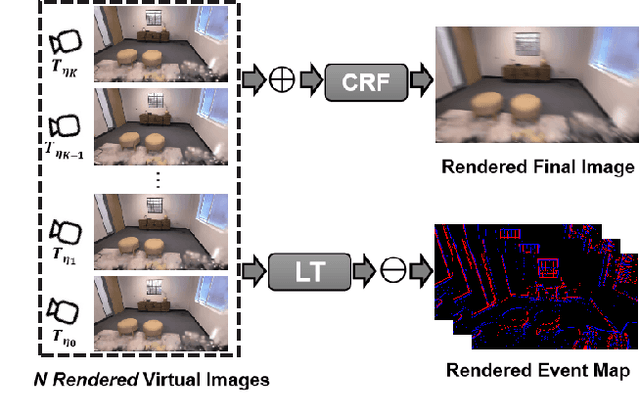

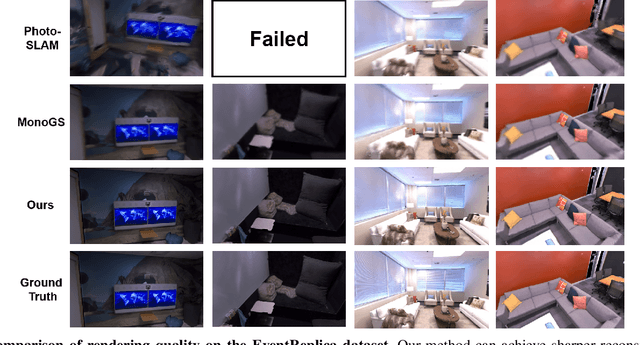

Abstract:Gaussian Splatting SLAM (GS-SLAM) offers a notable improvement over traditional SLAM methods, enabling photorealistic 3D reconstruction that conventional approaches often struggle to achieve. However, existing GS-SLAM systems perform poorly under persistent and severe motion blur commonly encountered in real-world scenarios, leading to significantly degraded tracking accuracy and compromised 3D reconstruction quality. To address this limitation, we propose EGS-SLAM, a novel GS-SLAM framework that fuses event data with RGB-D inputs to simultaneously reduce motion blur in images and compensate for the sparse and discrete nature of event streams, enabling robust tracking and high-fidelity 3D Gaussian Splatting reconstruction. Specifically, our system explicitly models the camera's continuous trajectory during exposure, supporting event- and blur-aware tracking and mapping on a unified 3D Gaussian Splatting scene. Furthermore, we introduce a learnable camera response function to align the dynamic ranges of events and images, along with a no-event loss to suppress ringing artifacts during reconstruction. We validate our approach on a new dataset comprising synthetic and real-world sequences with significant motion blur. Extensive experimental results demonstrate that EGS-SLAM consistently outperforms existing GS-SLAM systems in both trajectory accuracy and photorealistic 3D Gaussian Splatting reconstruction. The source code will be available at https://github.com/Chensiyu00/EGS-SLAM.

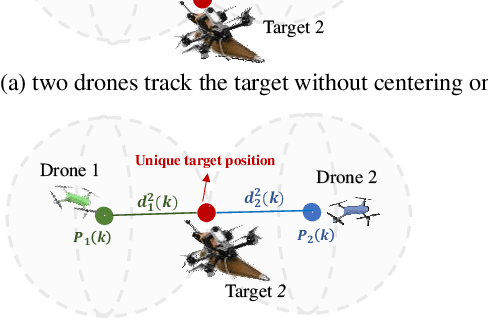

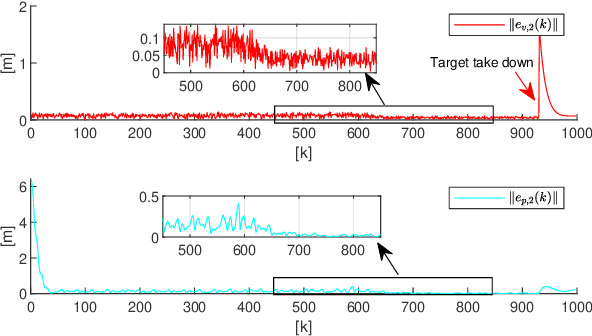

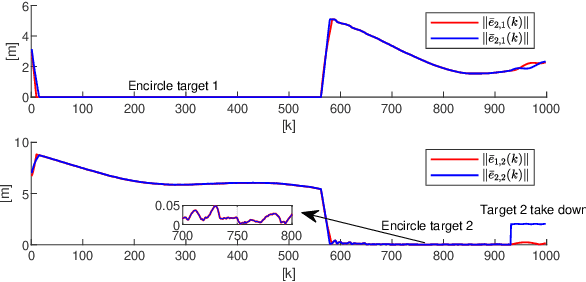

Autonomous 3D Moving Target Encirclement and Interception with Range measurement

Jun 16, 2025

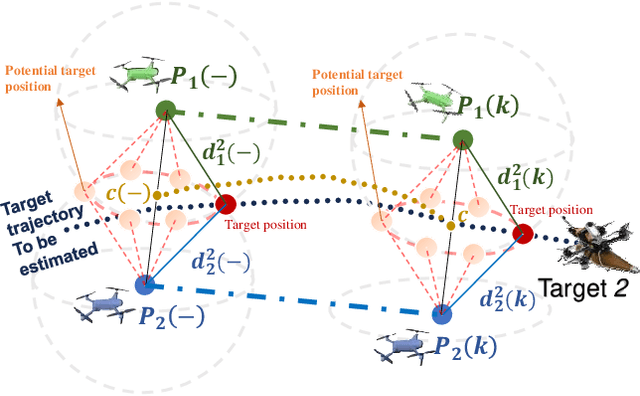

Abstract:Commercial UAVs are an emerging security threat as they are capable of carrying hazardous payloads or disrupting air traffic. To counter UAVs, we introduce an autonomous 3D target encirclement and interception strategy. Unlike traditional ground-guided systems, this strategy employs autonomous drones to track and engage non-cooperative hostile UAVs, which is effective in non-line-of-sight conditions, GPS denial, and radar jamming, where conventional detection and neutralization from ground guidance fail. Using two noisy real-time distances measured by drones, guardian drones estimate the relative position from their own to the target using observation and velocity compensation methods, based on anti-synchronization (AS) and an X-Y circular motion combined with vertical jitter. An encirclement control mechanism is proposed to enable UAVs to adaptively transition from encircling and protecting a target to encircling and monitoring a hostile target. Upon breaching a warning threshold, the UAVs may even employ a suicide attack to neutralize the hostile target. We validate this strategy through real-world UAV experiments and simulated analysis in MATLAB, demonstrating its effectiveness in detecting, encircling, and intercepting hostile drones. More details: https://youtu.be/5eHW56lPVto.

Tire Wear Aware Trajectory Tracking Control for Multi-axle Swerve-drive Autonomous Mobile Robots

Jun 05, 2025

Abstract:Multi-axle Swerve-drive Autonomous Mobile Robots (MS-AGVs) equipped with independently steerable wheels are commonly used for high-payload transportation. In this work, we present a novel model predictive control (MPC) method for MS-AGV trajectory tracking that incorporates tire wear minimization into the objective function. To speed up the problem-solving process, we propose a hierarchical controller design and simplify the dynamic model by integrating the *magic formula tire model* and a *simplified tire wear model*. In experiments, the proposed method can be solved by simulated annealing in real time on a normal personal computer, and incorporating tire wear into the objective function reduces tire wear by 19.19% while maintaining tracking accuracy in curve-tracking experiments. In a more challenging scenario, where the desired trajectory is offset by 60 degrees from the vehicle's heading, the reduction in tire wear increases to 65.20% compared to the kinematic model without tire wear optimization.
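For readers unfamiliar with the magic formula tire model mentioned above, the sketch below evaluates its standard normalized form, F = D sin(C arctan(Bx - E(Bx - arctan(Bx)))), where x is the slip quantity. The coefficient values are illustrative placeholders, not the ones used in the paper, and the paper's simplified tire wear model is not reproduced here because the abstract does not specify it.

```python
import math

def magic_formula(slip, B=10.0, C=1.9, D=1.0, E=0.97):
    """Normalized tire force from the magic formula.

    B: stiffness factor, C: shape factor, D: peak value, E: curvature factor.
    The default coefficients are placeholders chosen for illustration only.
    """
    bx = B * slip
    return D * math.sin(C * math.atan(bx - E * (bx - math.atan(bx))))

if __name__ == "__main__":
    # Force rises with slip, peaks, then falls off slightly.
    for slip in (0.0, 0.05, 0.1, 0.2, 0.5):
        print(f"slip={slip:.2f}  force={magic_formula(slip):.4f}")
```

The curve is odd-symmetric in slip and bounded by the peak value D, which makes it convenient to embed in an optimization objective.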

Cooperative Aerial Robot Inspection Challenge: A Benchmark for Heterogeneous Multi-UAV Planning and Lessons Learned

Jan 14, 2025

Abstract:We propose the Cooperative Aerial Robot Inspection Challenge (CARIC), a simulation-based benchmark for motion planning algorithms in heterogeneous multi-UAV systems. CARIC features UAV teams with complementary sensors, realistic constraints, and evaluation metrics prioritizing inspection quality and efficiency. It offers a ready-to-use perception-control software stack and diverse scenarios to support the development and evaluation of task allocation and motion planning algorithms. Competitions using CARIC were held at IEEE CDC 2023 and the IROS 2024 Workshop on Multi-Robot Perception and Navigation, attracting innovative solutions from research teams worldwide. This paper examines the top three teams from CDC 2023, analyzing their exploration, inspection, and task allocation strategies while drawing insights into their performance across scenarios. The results highlight the task's complexity and suggest promising directions for future research in cooperative multi-UAV systems.

Large-Scale UWB Anchor Calibration and One-Shot Localization Using Gaussian Process

Dec 22, 2024

Abstract:Ultra-wideband (UWB) is gaining popularity with devices like AirTags for precise home item localization but faces significant challenges when scaled to large environments like seaports. The main challenges are calibration and localization in obstructed conditions, which are common in logistics environments. Traditional calibration methods, dependent on line-of-sight (LoS), are slow, costly, and unreliable in seaports and warehouses, making large-scale localization a significant pain point in the industry. To overcome these challenges, we propose a UWB-LiDAR fusion-based calibration and one-shot localization framework. Our method uses Gaussian Processes to estimate anchor positions from continuous-time LiDAR Inertial Odometry with sampled UWB ranges. This approach ensures accurate and reliable calibration with just one round of sampling in large-scale areas, i.e., 600 x 450 square meters. Because of the LoS issues, UWB-only localization can be problematic, even when anchor positions are known. We demonstrate that by applying a UWB-range filter, the search range for LiDAR loop closure descriptors is significantly reduced, improving both accuracy and speed. This concept can be applied to other loop closure detection methods, enabling cost-effective localization in large-scale warehouses and seaports. It significantly improves precision in challenging environments where UWB-only and LiDAR-Inertial methods fall short, as shown in the video https://youtu.be/oY8jQKdM7lU. We will open-source our datasets and calibration codes for community use.
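One way to picture how a UWB-range filter can shrink the loop closure search is sketched below. This is an assumption about the general idea, not the paper's implementation: the function name, the data layout, and the fixed tolerance are hypothetical. Only map keyframes whose distances to the calibrated anchors agree with the currently measured ranges are kept as descriptor-matching candidates.

```python
import math

def range_consistent_candidates(keyframes, anchors, measured_ranges, tol=2.0):
    """Return keyframe ids whose geometry agrees with the measured UWB ranges.

    keyframes:       {keyframe_id: (x, y, z)} positions stored in the map
    anchors:         {anchor_id: (x, y, z)} calibrated anchor positions
    measured_ranges: {anchor_id: range_in_meters} current UWB measurements
    tol:             hypothetical tolerance in meters on the range residual
    """
    candidates = []
    for kf_id, position in keyframes.items():
        consistent = all(
            abs(math.dist(position, anchors[anchor_id]) - rng) <= tol
            for anchor_id, rng in measured_ranges.items()
        )
        if consistent:
            candidates.append(kf_id)
    return candidates

if __name__ == "__main__":
    keyframes = {0: (0.0, 0.0, 0.0), 1: (100.0, 0.0, 0.0)}
    anchors = {"a": (10.0, 0.0, 0.0)}
    # Only keyframe 0 lies roughly 10.5 m from anchor "a".
    print(range_consistent_candidates(keyframes, anchors, {"a": 10.5}))
```

Descriptor matching would then run only on the returned ids rather than on the whole map; a real system would also have to handle non-line-of-sight range outliers, which this sketch ignores.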

GPTR: Gaussian Process Trajectory Representation for Continuous-Time Motion Estimation

Oct 31, 2024

Abstract:Continuous-time trajectory representation has gained significant popularity in recent years, as it offers an elegant formulation that allows the fusion of a larger number of sensors and sensing modalities, overcoming limitations of traditional discrete-time frameworks. To bolster the adoption of the continuous-time paradigm, we propose a so-called Gaussian Process Trajectory Representation (GPTR) framework for continuous-time motion estimation (CTME) tasks. Our approach stands out by employing a third-order random jerk model, featuring closed-form expressions for both rotational and translational state derivatives. This model provides smooth, continuous trajectory representations that are crucial for precise estimation of complex motion. To support the wider robotics and computer vision communities, we have made the source code for GPTR available as a light-weight header-only library. This format was chosen for its ease of integration, allowing developers to incorporate GPTR into existing systems without needing extensive code modifications. Moreover, we also provide a set of optimization examples with LiDAR, camera, IMU, UWB factors, and closed-form analytical Jacobians under the proposed GP framework. Our experiments demonstrate the efficacy and efficiency of GP-based trajectory representation in various motion estimation tasks, and the examples can serve as the prototype to help researchers quickly develop future applications such as batch optimization, calibration, sensor fusion, trajectory planning, etc., with continuous-time trajectory representation. Our project is accessible at https://github.com/brytsknguyen/gptr.

Robust Loop Closure by Textual Cues in Challenging Environments

Oct 21, 2024

Abstract:Loop closure is an important task in robot navigation. However, existing methods mostly rely on some implicit or heuristic features of the environment, which can still fail to work in common environments such as corridors, tunnels, and warehouses. Indeed, navigating in such featureless, degenerative, and repetitive (FDR) environments would also pose a significant challenge even for humans, but explicit text cues in the surroundings often provide the best assistance. This inspires us to propose a multi-modal loop closure method based on explicit human-readable textual cues in FDR environments. Specifically, our approach first extracts scene text entities based on Optical Character Recognition (OCR), then creates a local map of text cues based on accurate LiDAR odometry, and finally identifies loop closure events by a graph-theoretic scheme. Experimental results demonstrate that this approach has superior performance over existing methods that rely solely on visual and LiDAR sensors. To benefit the community, we release the source code and datasets at https://github.com/TongxingJin/TXTLCD.

Graph Optimality-Aware Stochastic LiDAR Bundle Adjustment with Progressive Spatial Smoothing

Oct 18, 2024

Abstract:Large-scale LiDAR Bundle Adjustment (LBA) for refining sensor orientation and point cloud accuracy simultaneously is a fundamental task in photogrammetry and robotics, particularly as low-cost 3D sensors are increasingly used for 3D mapping in complex scenes. Unlike pose-graph-based methods that rely solely on pairwise relationships between LiDAR frames, LBA leverages raw LiDAR correspondences to achieve more precise results, especially when initial pose estimates are unreliable for low-cost sensors. However, existing LBA methods face challenges such as simplistic planar correspondences, extensive observations, and dense normal matrices in the least-squares problem, which limit robustness, efficiency, and scalability. To address these issues, we propose a Graph Optimality-aware Stochastic Optimization scheme with Progressive Spatial Smoothing, namely PSS-GOSO, to achieve *robust*, *efficient*, and *scalable* LBA. The Progressive Spatial Smoothing (PSS) module extracts *robust* LiDAR feature association exploiting the prior structure information obtained by the polynomial smooth kernel. The Graph Optimality-aware Stochastic Optimization (GOSO) module first sparsifies the graph according to optimality for an *efficient* optimization. GOSO then utilizes stochastic clustering and graph marginalization to solve the large-scale state estimation problem for a *scalable* LBA. We validate PSS-GOSO across diverse scenes captured by various platforms, demonstrating its superior performance compared to existing methods.