Savvas Papaioannou

Adaptive Monitoring of Stochastic Fire Front Processes via Information-seeking Predictive Control

Jan 16, 2026

Abstract:We consider the problem of adaptively monitoring a wildfire front using a mobile agent (e.g., a drone), whose trajectory determines where sensor data is collected and thus influences the accuracy of fire propagation estimation. This is a challenging problem, as the stochastic nature of wildfire evolution requires the seamless integration of sensing, estimation, and control, often treated separately in existing methods. State-of-the-art methods either impose linear-Gaussian assumptions to establish optimality or rely on approximations and heuristics, often without providing explicit performance guarantees. To address these limitations, we formulate the fire front monitoring task as a stochastic optimal control problem that integrates sensing, estimation, and control. We derive an optimal recursive Bayesian estimator for a class of stochastic nonlinear elliptical-growth fire front models. Subsequently, we transform the resulting nonlinear stochastic control problem into a finite-horizon Markov decision process and design an information-seeking predictive control law obtained via a lower confidence bound-based adaptive search algorithm with asymptotic convergence to the optimal policy.

VLM-RRT: Vision Language Model Guided RRT Search for Autonomous UAV Navigation

May 29, 2025

Abstract:Path planning is a fundamental capability of autonomous Unmanned Aerial Vehicles (UAVs), enabling them to efficiently navigate toward a target region or explore complex environments while avoiding obstacles. Traditional path-planning methods, such as Rapidly-exploring Random Trees (RRT), have proven effective but often encounter significant challenges. These include high search space complexity, suboptimal path quality, and slow convergence, issues that are particularly problematic in high-stakes applications like disaster response, where rapid and efficient planning is critical. To address these limitations and enhance path-planning efficiency, we propose Vision Language Model RRT (VLM-RRT), a hybrid approach that integrates the pattern recognition capabilities of Vision Language Models (VLMs) with the path-planning strengths of RRT. By leveraging VLMs to provide initial directional guidance based on environmental snapshots, our method biases sampling toward regions more likely to contain feasible paths, significantly improving sampling efficiency and path quality. Extensive quantitative and qualitative experiments with various state-of-the-art VLMs demonstrate the effectiveness of this proposed approach.

Rolling Horizon Coverage Control with Collaborative Autonomous Agents

Apr 08, 2025

Abstract:This work proposes a coverage controller that enables an aerial team of distributed autonomous agents to collaboratively generate non-myopic coverage plans over a rolling finite horizon, aiming to cover specific points on the surface area of a 3D object of interest. The collaborative coverage problem, formulated as a distributed model predictive control problem, optimizes the agents' motion and camera control inputs, while considering inter-agent constraints aimed at reducing work redundancy. The proposed coverage controller integrates constraints based on light-path propagation techniques to predict the parts of the object's surface that are visible with regard to the agents' future anticipated states. This work also demonstrates how complex, non-linear visibility assessment constraints can be converted into logical expressions that are embedded as binary constraints into a mixed-integer optimization framework. The proposed approach has been demonstrated through simulations and practical applications for inspecting buildings with unmanned aerial vehicles (UAVs).

Jointly-optimized Trajectory Generation and Camera Control for 3D Coverage Planning

Apr 08, 2025

Abstract:This work proposes a jointly optimized trajectory generation and camera control approach, enabling an autonomous agent, such as an unmanned aerial vehicle (UAV) operating in 3D environments, to plan and execute coverage trajectories that maximally cover the surface area of a 3D object of interest. Specifically, the UAV's kinematic and camera control inputs are jointly optimized over a rolling planning horizon to achieve complete 3D coverage of the object. The proposed controller incorporates ray-tracing into the planning process to simulate the propagation of light rays, thereby determining the visible parts of the object through the UAV's camera. This integration enables the generation of precise look-ahead coverage trajectories. The coverage planning problem is formulated as a rolling finite-horizon optimal control problem and solved using mixed-integer programming techniques. Extensive real-world and synthetic experiments validate the performance of the proposed approach.

Cooperative Aerial Robot Inspection Challenge: A Benchmark for Heterogeneous Multi-UAV Planning and Lessons Learned

Jan 14, 2025

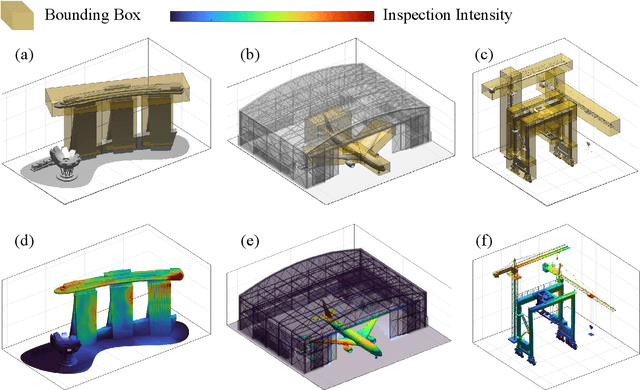

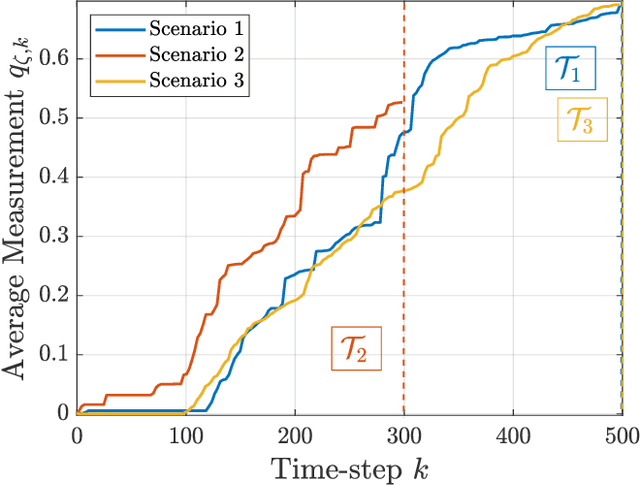

Abstract:We propose the Cooperative Aerial Robot Inspection Challenge (CARIC), a simulation-based benchmark for motion planning algorithms in heterogeneous multi-UAV systems. CARIC features UAV teams with complementary sensors, realistic constraints, and evaluation metrics prioritizing inspection quality and efficiency. It offers a ready-to-use perception-control software stack and diverse scenarios to support the development and evaluation of task allocation and motion planning algorithms. Competitions using CARIC were held at IEEE CDC 2023 and the IROS 2024 Workshop on Multi-Robot Perception and Navigation, attracting innovative solutions from research teams worldwide. This paper examines the top three teams from CDC 2023, analyzing their exploration, inspection, and task allocation strategies while drawing insights into their performance across scenarios. The results highlight the task's complexity and suggest promising directions for future research in cooperative multi-UAV systems.

Automated Real-Time Inspection in Indoor and Outdoor 3D Environments with Cooperative Aerial Robots

Apr 18, 2024

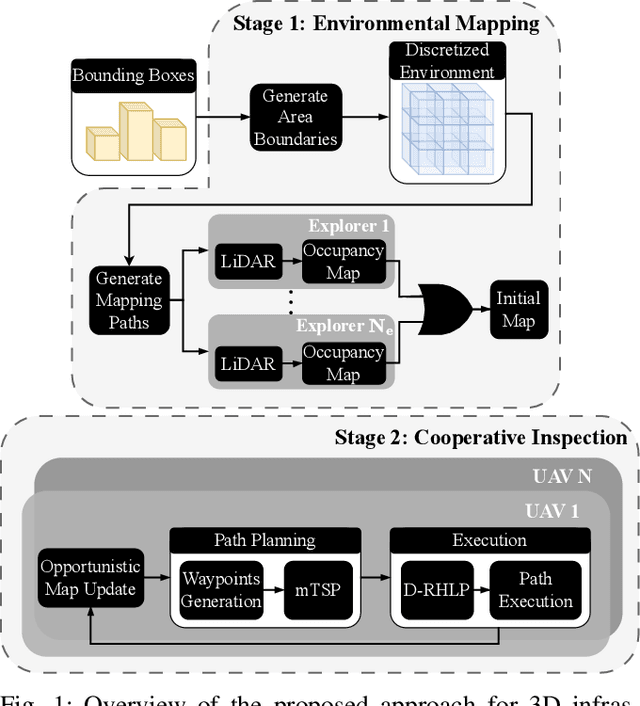

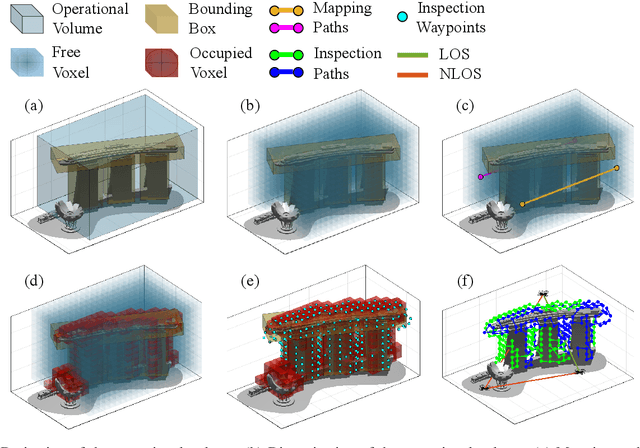

Abstract:This work introduces a cooperative inspection system designed to efficiently control and coordinate a team of distributed heterogeneous UAV agents for the inspection of 3D structures in cluttered, unknown spaces. Our proposed approach employs a two-stage methodology. First, it leverages the complementary sensing capabilities of the robots to cooperatively map the unknown environment. It then generates optimized, collision-free inspection paths, thereby ensuring comprehensive coverage of the structure's surface area. The effectiveness of our system is demonstrated through qualitative and quantitative results from extensive Gazebo-based simulations that closely replicate real-world inspection scenarios, highlighting its ability to thoroughly inspect real-world-like 3D structures.

Synergising Human-like Responses and Machine Intelligence for Planning in Disaster Response

Apr 15, 2024

Abstract:In the rapidly changing environments of disaster response, planning and decision-making for autonomous agents involve complex and interdependent choices. Although recent advancements have improved traditional artificial intelligence (AI) approaches, they often struggle in such settings, particularly when applied to agents operating outside their well-defined training parameters. To address these challenges, we propose an attention-based cognitive architecture inspired by Dual Process Theory (DPT). This framework integrates, in an online fashion, rapid yet heuristic (human-like) responses (System 1) with the slow but optimized planning capabilities of machine intelligence (System 2). We illustrate how a supervisory controller can dynamically determine, in real time, which system to engage in order to optimize mission objectives, by assessing their performance across a number of distinct attributes. Evaluated for trajectory planning in dynamic environments, our framework demonstrates that this synergistic integration effectively manages complex tasks by optimizing multiple mission objectives.

Cooperative Receding Horizon 3D Coverage Control with a Team of Networked Aerial Agents

Jan 28, 2024

Abstract:This work proposes a receding horizon coverage control approach which allows multiple autonomous aerial agents to work cooperatively in order to cover the total surface area of a 3D object of interest. The cooperative coverage problem, which is posed in this work as an optimal control problem, jointly optimizes the agents' kinematic and camera control inputs, while considering coupling constraints amongst the team of agents which aim at minimizing the duplication of work. To generate look-ahead coverage trajectories over a finite planning horizon, the proposed approach integrates visibility constraints into the coverage controller in order to determine the visible part of the object with respect to the agents' future states. In particular, we show how non-linear and non-convex visibility determination constraints can be transformed into logical constraints which can easily be embedded into a mixed integer optimization program.

Unscented Optimal Control for 3D Coverage Planning with an Autonomous UAV Agent

Jun 30, 2023

Abstract:We propose a novel probabilistically robust controller for the guidance of an unmanned aerial vehicle (UAV) in coverage planning missions, which can simultaneously optimize both the UAV's motion and camera control inputs for the 3D coverage of a given object of interest. Specifically, the coverage planning problem is formulated in this work as an optimal control problem with logical constraints to enable the UAV agent to jointly: a) select a series of discrete camera field-of-view states which satisfy a set of coverage constraints, and b) optimize its motion control inputs according to a specified mission objective. We show how this hybrid optimal control problem can be solved with standard optimization tools by converting the logical expressions in the constraints into equality/inequality constraints involving only continuous variables. Finally, probabilistic robustness is achieved by integrating the unscented transformation into the proposed controller, thus enabling the design of robust open-loop coverage plans which take into account the future posterior distribution of the UAV's state inside the planning horizon.

UAV-based Receding Horizon Control for 3D Inspection Planning

Apr 20, 2023

Abstract:Unmanned aerial vehicles (UAVs) are now used for a wide range of tasks, including infrastructure inspection, automated monitoring, and coverage. This paper investigates the problem of 3D inspection planning with an autonomous UAV agent which is subject to dynamical and sensing constraints. We propose a receding horizon 3D inspection planning control approach for generating optimal trajectories which enable an autonomous UAV agent to inspect a finite number of feature-points scattered on the surface of a cuboid-like structure of interest. The inspection planning problem is formulated as a constrained open-loop optimal control problem and is solved using mixed integer programming (MIP) optimization. Quantitative and qualitative evaluation demonstrates the effectiveness of the proposed approach.
