Shenghai Yuan

Accurate Calibration and Robust LiDAR-Inertial Odometry for Spinning Actuated LiDAR Systems

Jan 24, 2026

Abstract: Accurate calibration and robust localization are fundamental for downstream tasks in spinning actuated LiDAR applications. Existing methods, however, require the extrinsic parameters to be parameterized separately for each mounting configuration, which limits their generalizability. Additionally, a spinning actuated LiDAR inevitably scans featureless regions, which complicates the balance between scanning coverage and localization robustness. To address these challenges, this letter presents LM-Calibr, a targetless LiDAR-motor calibration method based on the Denavit-Hartenberg convention, and EVA-LIO, an environment-adaptive LiDAR-inertial odometry. LM-Calibr supports calibration of LiDAR-motor systems with various mounting configurations, and extensive experiments demonstrate its accuracy and convergence across different scenarios, mounting angles, and initial values. EVA-LIO adaptively selects downsampling rates and map resolutions according to the spatial scale of the environment. This adaptivity allows the actuator to operate at maximum speed, improving scanning completeness while maintaining robust localization even when the LiDAR briefly scans featureless areas. The source code and hardware design are available on GitHub: https://github.com/zijiechenrobotics/lm_calibr. The video is available at https://youtu.be/cZyyrkmeoSk.
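
LM-Calibr describes the LiDAR-motor chain with the Denavit-Hartenberg convention, so any mounting configuration becomes a set of four DH parameters per link rather than a bespoke extrinsic model. The abstract does not give the exact parameterization, so the following is only a minimal sketch assuming the classic DH convention and a hypothetical two-link chain (base to motor, motor to LiDAR); the link tuples stand in for the values a calibration would estimate and are not taken from the paper.

```python
import math


def dh_transform(theta, d, a, alpha):
    """Classic DH link transform Rot_z(theta) Trans_z(d) Trans_x(a) Rot_x(alpha), as a 4x4 nested list."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(A, B):
    """Multiply two 4x4 homogeneous transforms."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(T, p):
    """Apply a homogeneous transform to a 3D point."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


def lidar_point_to_base(p_lidar, motor_angle, link_motor, link_lidar):
    """Map a LiDAR point into the base frame for a given motor encoder angle.

    link_motor and link_lidar are hypothetical (theta_offset, d, a, alpha) tuples; only the
    first joint rotates with the motor, the second link is a fixed mounting transform.
    """
    th1, d1, a1, al1 = link_motor
    th2, d2, a2, al2 = link_lidar
    T = matmul4(dh_transform(motor_angle + th1, d1, a1, al1), dh_transform(th2, d2, a2, al2))
    return transform_point(T, p_lidar)
```

For example, with every DH parameter set to zero and the motor at 90 degrees, the LiDAR point (1, 0, 0) lands at approximately (0, 1, 0) in the base frame; a tilted mount only changes the `alpha` or offset values, not the code.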

Focal Guidance: Unlocking Controllability from Semantic-Weak Layers in Video Diffusion Models

Jan 12, 2026

Abstract: The task of Image-to-Video (I2V) generation aims to synthesize a video from a reference image and a text prompt. This requires diffusion models to reconcile high-frequency visual constraints and low-frequency textual guidance during the denoising process. However, while existing I2V models prioritize visual consistency, how to effectively couple this dual guidance to ensure strong adherence to the text prompt remains underexplored. In this work, we observe that in Diffusion Transformer (DiT)-based I2V models, certain intermediate layers exhibit weak semantic responses (termed Semantic-Weak Layers), as indicated by a measurable drop in text-visual similarity. We attribute this to a phenomenon called Condition Isolation, in which attention to visual features becomes partially detached from text guidance and relies excessively on learned visual priors. To address this, we propose Focal Guidance (FG), which enhances controllability from Semantic-Weak Layers. FG comprises two mechanisms: (1) Fine-grained Semantic Guidance (FSG) leverages CLIP to identify key regions in the reference frame and uses them as anchors to guide Semantic-Weak Layers; (2) Attention Cache transfers attention maps from semantically responsive layers to Semantic-Weak Layers, injecting explicit semantic signals and alleviating their over-reliance on the model's learned visual priors, thereby improving adherence to textual instructions. To further validate our approach and address the lack of evaluation in this direction, we introduce a benchmark for assessing instruction following in I2V models. On this benchmark, Focal Guidance demonstrates its effectiveness and generalizability, raising the total score of Wan2.1-I2V to 0.7250 (+3.97%) and boosting the MMDiT-based HunyuanVideo-I2V to 0.5571 (+7.44%).
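
The abstract identifies Semantic-Weak Layers by a measurable drop in text-visual similarity and describes Attention Cache as transferring attention maps from semantically responsive layers to those weak layers. A minimal sketch of how the two steps could fit together follows; the median-based detection rule (`drop_ratio`), the choice of the nearest responsive layer as the source, and the linear blend weight (`mix`) are all hypothetical and not taken from the paper.

```python
from statistics import median


def find_semantic_weak_layers(similarity, drop_ratio=0.8):
    """Return indices of layers whose text-visual similarity falls below drop_ratio times
    the median over all layers (a hypothetical detection rule)."""
    threshold = drop_ratio * median(similarity)
    return [i for i, s in enumerate(similarity) if s < threshold]


def apply_attention_cache(attn_maps, weak_layers, mix=0.5):
    """Blend each weak layer's attention map with the cached map of the nearest responsive layer.

    attn_maps[i] is a row-stochastic matrix (nested lists: query rows, key columns). A convex
    blend of two row-stochastic matrices stays row-stochastic, so no renormalization is needed.
    """
    weak = set(weak_layers)
    responsive = [i for i in range(len(attn_maps)) if i not in weak]
    out = [[row[:] for row in m] for m in attn_maps]  # copy so the inputs stay untouched
    if not responsive:
        return out
    for i in weak_layers:
        src = min(responsive, key=lambda j: abs(j - i))  # ties go to the shallower layer
        out[i] = [
            [(1.0 - mix) * w + mix * c for w, c in zip(row_w, row_c)]
            for row_w, row_c in zip(attn_maps[i], attn_maps[src])
        ]
    return out
```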

ReCCur: A Recursive Corner-Case Curation Framework for Robust Vision-Language Understanding in Open and Edge Scenarios

Jan 06, 2026

Abstract: Corner cases are rare or extreme scenarios that drive real-world failures, but they are difficult to curate at scale: web data are noisy, labels are brittle, and edge deployments preclude large-scale retraining. We present ReCCur (Recursive Corner-Case Curation), a low-compute framework that converts noisy web imagery into auditable fine-grained labels via a multi-agent recursive pipeline. First, large-scale data acquisition and filtering expands a domain vocabulary with a vision-language model (VLM), crawls the web, and enforces tri-modal (image, description, keyword) consistency with light human spot checks to yield refined candidates. Next, mixture-of-experts knowledge distillation uses complementary encoders (e.g., CLIP, DINOv2, BEiT) for kNN voting with dual-confidence activation and uncertainty sampling, converging to a high-precision set. Finally, region-evidence VLM adversarial labeling pairs a proposer (multi-granularity regions and semantic cues) with a validator (global and local chained consistency) to produce explainable labels and close the loop. On realistic corner-case scenarios (e.g., flooded-car inspection), ReCCur runs on consumer-grade GPUs, steadily improves purity and separability, and requires minimal human supervision, providing a practical substrate for downstream training and evaluation under resource constraints. Code and dataset will be released.
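
The distillation stage pools kNN votes from several complementary encoders. A minimal sketch of that voting step follows, assuming "dual confidence" means two gates (vote agreement and mean neighbour similarity) that must both pass; the thresholds, the encoder names used as dictionary keys, and the precomputed embedding banks are hypothetical stand-ins, not ReCCur's actual design.

```python
import math
from collections import Counter


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def knn_vote(query_by_encoder, bank_by_encoder, labels, k=3, vote_thresh=0.6, sim_thresh=0.5):
    """Pool the k nearest labelled neighbours from every encoder's embedding space and vote.

    query_by_encoder / bank_by_encoder map an encoder name to the query embedding and to the
    embeddings of the labelled bank (same order as labels). Returns (label, accepted); accepted
    is False when either confidence gate fails, flagging the sample for uncertainty sampling.
    """
    votes = Counter()
    sims = []
    for name, q in query_by_encoder.items():
        scored = sorted(
            ((cosine(q, e), labels[i]) for i, e in enumerate(bank_by_encoder[name])),
            reverse=True,
        )[:k]
        for s, lab in scored:
            votes[lab] += 1
            sims.append(s)
    label, count = votes.most_common(1)[0]
    vote_conf = count / sum(votes.values())  # agreement across encoders and neighbours
    sim_conf = sum(sims) / len(sims)  # how close the retrieved neighbours actually are
    return label, vote_conf >= vote_thresh and sim_conf >= sim_thresh
```

Requiring both gates means a query whose neighbours agree but are all far away (an out-of-distribution image) is still routed to review rather than silently labelled.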

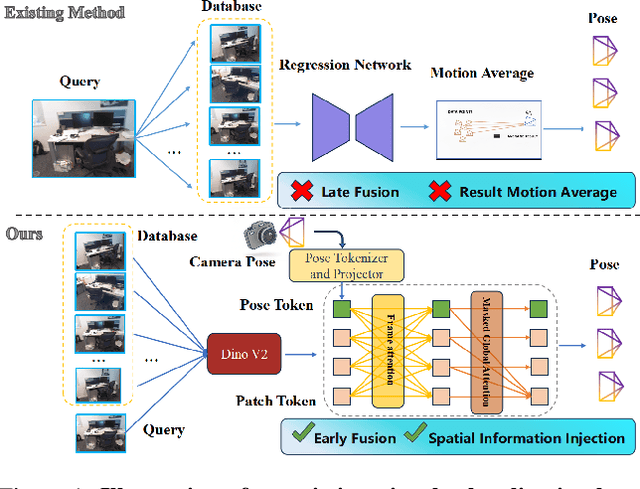

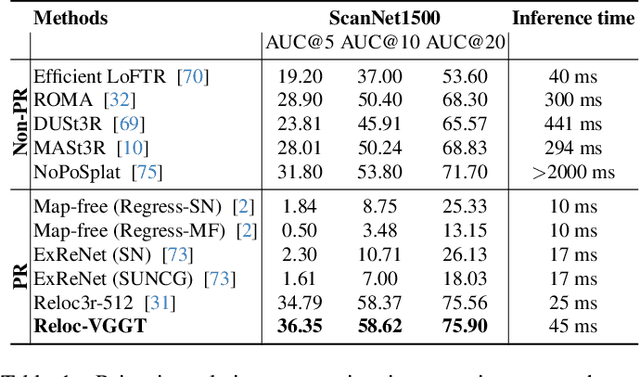

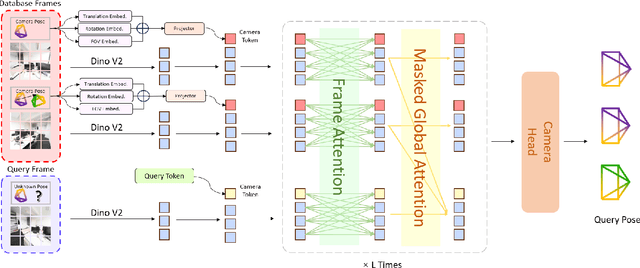

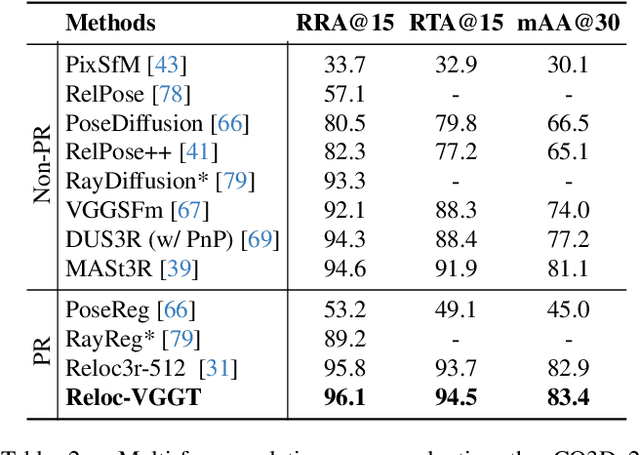

Reloc-VGGT: Visual Re-localization with Geometry Grounded Transformer

Dec 26, 2025

Abstract: Visual localization has traditionally been formulated as a pair-wise pose regression problem. Existing approaches mainly estimate relative poses between two images and employ a late-fusion strategy to obtain absolute pose estimates. However, late-stage motion averaging is often insufficient for effectively integrating spatial information, and its accuracy degrades in complex environments. In this paper, we present the first visual localization framework that performs multi-view spatial integration through an early-fusion mechanism, enabling robust operation in both structured and unstructured environments. Our framework is built upon the VGGT backbone, which encodes multi-view 3D geometry, and we introduce a pose tokenizer and projection module to more effectively exploit spatial relationships from multiple database views. Furthermore, we propose a novel sparse mask attention strategy that reduces computational cost by avoiding the quadratic complexity of global attention, thereby enabling real-time performance at scale. Trained on approximately eight million posed image pairs, Reloc-VGGT demonstrates strong accuracy and remarkable generalization ability. Extensive experiments across diverse public datasets consistently validate the effectiveness and efficiency of our approach, delivering high-quality camera pose estimates in real time while maintaining robustness to unseen environments. Our code and models will be publicly released upon acceptance at https://github.com/dtc111111/Reloc-VGGT.
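
The sparse mask attention avoids the quadratic cost of global attention over all views. The abstract does not state the sparsity pattern, so the sketch below assumes a hypothetical one: the query image (view 0) attends to every view, while each database view attends only to itself and to the query view. Under that assumption, with V views of T tokens each, the number of scored pairs grows as (3V - 2)T² instead of V²T², i.e. linearly in the number of database views.

```python
def sparse_view_mask(num_views, tokens_per_view):
    """Boolean attention mask: mask[i][j] is True if token i may attend to token j.

    Hypothetical pattern: view 0 holds the query image, whose tokens attend to every view;
    tokens of each database view attend only to their own view and to the query view.
    """
    n = num_views * tokens_per_view
    view = [i // tokens_per_view for i in range(n)]
    return [[view[i] == 0 or view[j] == 0 or view[i] == view[j] for j in range(n)] for i in range(n)]


def attended_pairs(mask):
    """Number of query-key pairs the attention actually scores."""
    return sum(sum(row) for row in mask)
```

For instance, with V = 10 views of T = 16 tokens, this mask scores 7168 pairs, compared with 25600 for dense global attention over the same 160 tokens.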

STARC: See-Through-Wall Augmented Reality Framework for Human-Robot Collaboration in Emergency Response

Sep 19, 2025

Abstract: In emergency response missions, first responders must navigate cluttered indoor environments where occlusions block direct line-of-sight, concealing both life-threatening hazards and victims in need of rescue. We present STARC, a see-through AR framework for human-robot collaboration that fuses mobile-robot mapping with responder-mounted LiDAR sensing. A ground robot running LiDAR-inertial odometry performs large-area exploration and 3D human detection, while a helmet- or handheld-mounted LiDAR on the responder is registered to the robot's global map via relative pose estimation. This cross-LiDAR alignment enables consistent first-person projection of detected humans and their point clouds into the responder's view, rendered in AR with low latency. By providing real-time visualization of hidden occupants and hazards, STARC enhances situational awareness and reduces operator risk. Experiments in simulation, lab setups, and tactical field trials confirm robust pose alignment, reliable detections, and stable overlays, underscoring the potential of our system for fire-fighting, disaster relief, and other safety-critical operations. Code and design will be open-sourced upon acceptance.
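
Once the responder's sensor is registered to the robot's global map, a detection stored in map coordinates can be re-expressed in the responder's frame and projected onto the AR display. A minimal sketch follows, assuming the registration yields the display pose (R, t) in the map frame, a z-forward pinhole display model, and hypothetical intrinsics; the helper `pose_rot_z` exists only to build test poses.

```python
import math


def pose_rot_z(angle, t):
    """Hypothetical helper: a pose with rotation about the z axis only, returned as (R, t)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]], list(t)


def map_to_view(p_map, R, t):
    """Express a map-frame point in the responder's view frame: p_view = R^T (p_map - t),
    where (R, t) is the view's pose in the robot's global map."""
    d = [p_map[k] - t[k] for k in range(3)]
    return [sum(R[k][i] * d[k] for k in range(3)) for i in range(3)]


def project_pinhole(p_view, fx, fy, cx, cy):
    """Pinhole projection to pixel coordinates, assuming a z-forward view frame;
    returns None for points behind the viewer."""
    x, y, z = p_view
    if z <= 0.0:
        return None
    return fx * x / z + cx, fy * y / z + cy
```

Applying R^T rather than inverting a full 4x4 matrix relies on R being orthonormal, which holds for any rigid-body pose estimate.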

PERAL: Perception-Aware Motion Control for Passive LiDAR Excitation in Spherical Robots

Sep 18, 2025

Abstract: Autonomous mobile robots increasingly rely on LiDAR-IMU odometry for navigation and mapping, yet horizontally mounted LiDARs such as the MID360 capture few near-ground returns, limiting terrain awareness and degrading performance in feature-scarce environments. Prior solutions (static tilt, active rotation, or high-density sensors) either sacrifice horizontal perception or incur added actuators, cost, and power. We introduce PERAL, a perception-aware motion control framework for spherical robots that achieves passive LiDAR excitation without dedicated hardware. By modeling the coupling between internal differential-drive actuation and sensor attitude, PERAL superimposes bounded, non-periodic oscillations onto nominal goal- or trajectory-tracking commands, enriching vertical scan diversity while preserving navigation accuracy. Implemented on a compact spherical robot, PERAL is validated across laboratory, corridor, and tactical environments. Experiments demonstrate up to 96 percent map completeness, a 27 percent reduction in trajectory tracking error, and robust near-ground human detection, all at lower weight, power, and cost than static tilt, active rotation, and fixed horizontal baselines. The design and code will be open-sourced upon acceptance.
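
PERAL superimposes bounded, non-periodic oscillations on the nominal tracking command. The abstract does not specify the oscillation generator or the actuation-attitude coupling model, so the sketch below swaps in a simple stand-in: a quasi-periodic sum of two sinusoids with an irrational frequency ratio, clipped to a hard bound. The amplitude, limit, and frequencies are hypothetical.

```python
import math


def excited_command(t, nominal, amp=0.2, limit=0.25, f1=0.7, f2=0.7 * math.sqrt(2.0)):
    """Nominal command plus a bounded, non-periodic excitation at time t (seconds).

    Two sinusoids whose frequency ratio is irrational never realign (in exact arithmetic),
    so their sum is quasi-periodic rather than periodic. The sum's magnitude is at most amp,
    and the clip enforces a hard bound on the deviation even if amp is set above limit.
    """
    osc = 0.5 * amp * (math.sin(2.0 * math.pi * f1 * t) + math.sin(2.0 * math.pi * f2 * t + 1.0))
    osc = max(-limit, min(limit, osc))
    return nominal + osc
```

Keeping the excitation additive and bounded means the tracking controller still sees the nominal command on average, which is the property that lets vertical scan diversity coexist with navigation accuracy.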

Energy-Constrained Navigation for Planetary Rovers under Hybrid RTG-Solar Power

Sep 18, 2025

Abstract: Future planetary exploration rovers must operate for extended durations on hybrid power inputs that combine steady radioisotope thermoelectric generator (RTG) output with variable solar photovoltaic (PV) availability. While energy-aware planning has been studied for aerial and underwater robots under battery limits, few works on ground rovers explicitly model power flow or enforce instantaneous power constraints. Classical terrain-aware planners emphasize slope or traversability, and trajectory optimization methods typically focus on geometric smoothness and dynamic feasibility, neglecting energy feasibility. We present an energy-constrained trajectory planning framework that explicitly integrates physics-based models of translational, rotational, and resistive power with baseline subsystem loads under hybrid RTG-solar input. By incorporating both cumulative energy budgets and instantaneous power constraints into SE(2)-based polynomial trajectory optimization, the method produces trajectories that are simultaneously smooth, dynamically feasible, and power-compliant. Simulation results on lunar-like terrain show that our planner generates trajectories with peak power within 0.55 percent of the prescribed limit, while existing methods exceed the limit by over 17 percent. This demonstrates a principled and practical approach to energy-aware autonomy for long-duration planetary missions.
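
The planner combines four power terms (translational, rotational, resistive, baseline) with two constraint types (instantaneous and cumulative). The sketch below only checks a sampled trajectory against both constraints; it does not perform the SE(2) polynomial optimization. The textbook forms m·a·v, I·α·ω, and μ·m·g·|v|, the zero-regeneration clamp, and the interpretation of the energy budget as a battery covering draw beyond RTG plus PV supply are all assumptions, not the paper's stated models.

```python
G_MOON = 1.62  # lunar surface gravity, m/s^2


def power_demand(v, a, omega, alpha, mass, inertia, mu, p_base, g=G_MOON):
    """Instantaneous power draw (W) of a ground rover, summing four assumed terms:
    translational m*a*v, rotational I*alpha*omega, rolling resistance mu*m*g*|v|,
    and a constant baseline subsystem load. Regeneration is ignored, so negative
    inertial terms are clamped to zero."""
    p_trans = max(0.0, mass * a * v)
    p_rot = max(0.0, inertia * alpha * omega)
    p_res = mu * mass * g * abs(v)
    return p_trans + p_rot + p_res + p_base


def check_trajectory(samples, dt, p_max, p_rtg, solar, battery_j, **robot):
    """Check a sampled trajectory against both constraint types.

    samples: (v, a, omega, alpha) tuples every dt seconds; solar: PV power (W) per sample.
    Returns (peak_ok, energy_ok, peak): peak_ok enforces P(t) <= p_max at every sample;
    energy_ok requires the energy drawn beyond the RTG + PV supply to fit battery_j (J).
    """
    draws = [power_demand(*s, **robot) for s in samples]
    peak = max(draws)
    deficit_j = sum(max(0.0, p - (p_rtg + pv)) * dt for p, pv in zip(draws, solar))
    return peak <= p_max, deficit_j <= battery_j, peak
```

Separating the two checks mirrors the abstract's point: a trajectory can fit the cumulative budget while still violating the instantaneous limit, or the reverse.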

DVDP: An End-to-End Policy for Mobile Robot Visual Docking with RGB-D Perception

Sep 16, 2025

Abstract: Automatic docking has long been a significant challenge in mobile robotics. Compared with other automatic docking methods, visual docking methods offer higher precision and lower deployment costs, making them an efficient and promising choice for this task. However, visual docking methods impose strict requirements on the robot's initial position at the start of the docking process. To overcome the limitations of current vision-based methods, we propose an end-to-end visual docking method named DVDP (direct visual docking policy). This approach requires only a binocular RGB-D camera mounted on the mobile robot and directly outputs the robot's docking path, achieving end-to-end automatic docking. Furthermore, we collected a large-scale visual automatic docking dataset for mobile robots, combining virtual environments built on the Unity 3D platform with real mobile robot setups. We also developed a series of evaluation metrics to quantify the performance of end-to-end visual docking methods. Extensive experiments, including benchmarks against leading perception backbones adapted into our framework, demonstrate that our method achieves superior performance. Finally, real-world deployment on the SCOUT Mini confirmed DVDP's efficacy, with our model generating smooth, feasible docking trajectories that satisfy physical constraints and reach the target pose.
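
The abstract mentions a series of evaluation metrics without defining them. As an illustration only, the sketch computes two common docking measures, final position error and wrapped heading error between planar (x, y, yaw) poses, plus a success rate; the tolerances are hypothetical and these are not necessarily the metrics DVDP reports.

```python
import math


def docking_errors(final_pose, target_pose):
    """Position error (m) and absolute heading error (rad, wrapped to [0, pi])
    between two (x, y, yaw) poses."""
    dx = final_pose[0] - target_pose[0]
    dy = final_pose[1] - target_pose[1]
    dyaw = final_pose[2] - target_pose[2]
    yaw_err = abs(math.atan2(math.sin(dyaw), math.cos(dyaw)))  # wrap so +pi and -pi agree
    return math.hypot(dx, dy), yaw_err


def success_rate(trials, pos_tol=0.05, yaw_tol=math.radians(3.0)):
    """Fraction of (final_pose, target_pose) trials within both hypothetical tolerances."""
    hits = 0
    for final, target in trials:
        pos_err, yaw_err = docking_errors(final, target)
        if pos_err <= pos_tol and yaw_err <= yaw_tol:
            hits += 1
    return hits / len(trials)
```

Wrapping the heading difference matters near ±π: a final yaw of 3.1 rad against a target of -3.1 rad is an error of about 0.083 rad, not 6.2 rad.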

AEOS: Active Environment-aware Optimal Scanning Control for UAV LiDAR-Inertial Odometry in Complex Scenes

Sep 11, 2025

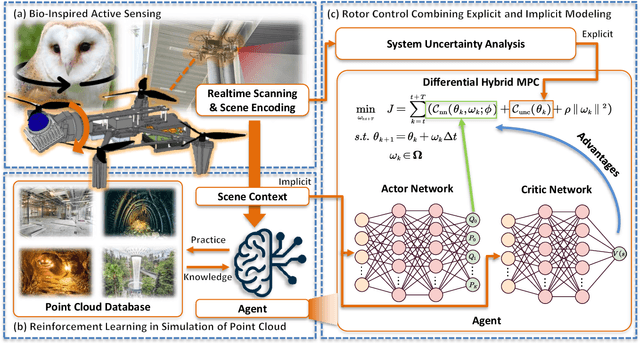

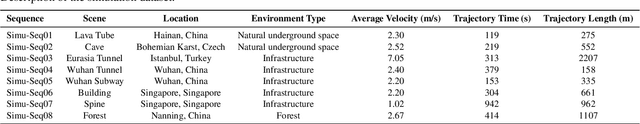

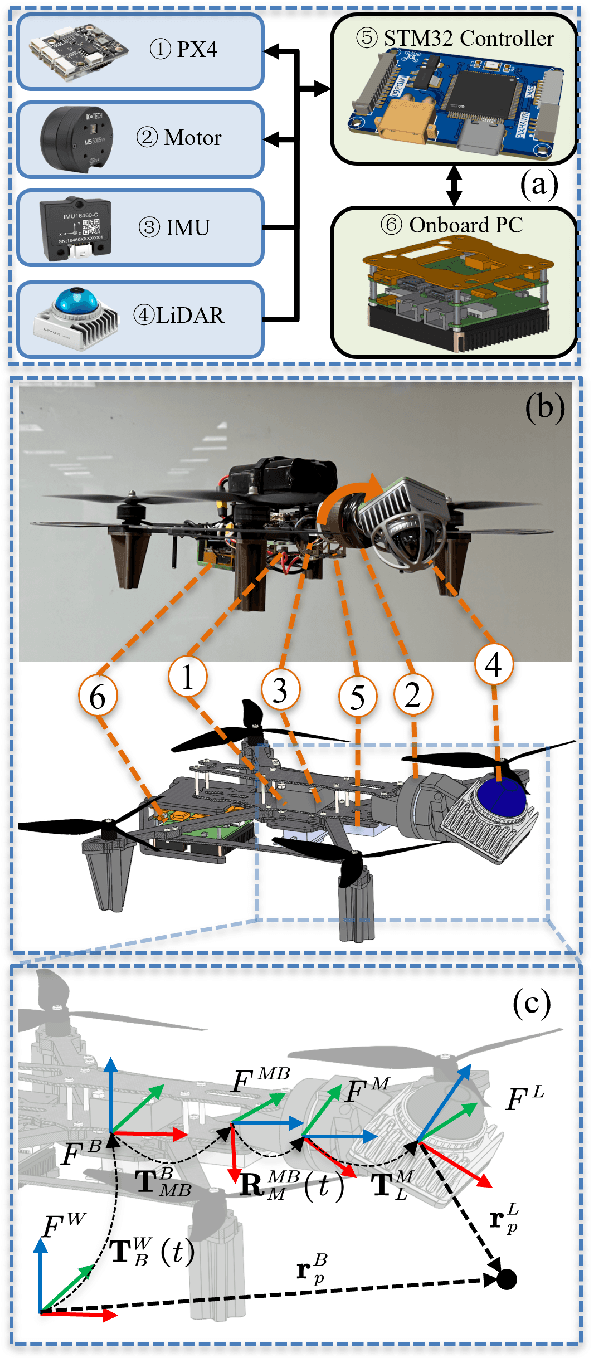

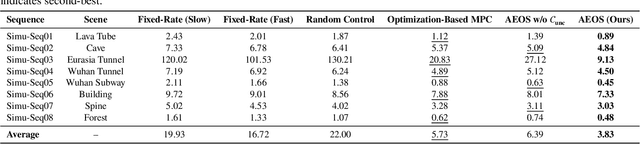

Abstract: LiDAR-based 3D perception and localization on unmanned aerial vehicles (UAVs) are fundamentally limited by the narrow field of view (FoV) of compact LiDAR sensors and the payload constraints that preclude multi-sensor configurations. Traditional motorized scanning systems with fixed-speed rotations lack scene awareness and task-level adaptability, leading to degraded odometry and mapping performance in complex, occluded environments. Inspired by the active sensing behavior of owls, we propose AEOS (Active Environment-aware Optimal Scanning), a biologically inspired and computationally efficient framework for adaptive LiDAR control in UAV-based LiDAR-Inertial Odometry (LIO). AEOS combines model predictive control (MPC) and reinforcement learning (RL) in a hybrid architecture: an analytical uncertainty model predicts future pose observability for exploitation, while a lightweight neural network learns an implicit cost map from panoramic depth representations to guide exploration. To support scalable training and generalization, we develop a point cloud-based simulation environment with real-world LiDAR maps across diverse scenes, enabling sim-to-real transfer. Extensive experiments in both simulation and real-world environments demonstrate that AEOS significantly improves odometry accuracy compared to fixed-rate, optimization-only, and fully learned baselines, while maintaining real-time performance under onboard computational constraints. The project page can be found at https://kafeiyin00.github.io/AEOS/.
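
AEOS scores candidate scan directions by predicted pose observability (exploitation) plus a learned exploration cost. The abstract does not give the uncertainty model, so the sketch below swaps in the classic point-to-plane degeneracy measure, restricted to planar translation for brevity: the smallest eigenvalue of H = Σ n nᵀ over surface normals predicted to be visible. The `exploration_value` dictionary is a hypothetical stand-in for the neural cost map, and the selection is greedy over one step rather than an MPC horizon.

```python
import math


def min_eig_2x2(a, b, c):
    """Smallest eigenvalue of the symmetric matrix [[a, b], [b, c]]."""
    return 0.5 * (a + c) - math.sqrt(0.25 * (a - c) ** 2 + b * b)


def translational_observability(normals):
    """Point-to-plane information for planar translation, H = sum of n n^T over unit normals.
    A small minimum eigenvalue means some direction is poorly constrained (e.g. a corridor)."""
    a = sum(nx * nx for nx, ny in normals)
    b = sum(nx * ny for nx, ny in normals)
    c = sum(ny * ny for nx, ny in normals)
    return min_eig_2x2(a, b, c)


def choose_scan_angle(candidates, exploration_value, weight=0.5):
    """candidates: {angle: normals predicted visible at that angle}; exploration_value:
    {angle: score}. Returns the angle maximizing observability + weight * exploration."""
    return max(
        candidates,
        key=lambda ang: translational_observability(candidates[ang])
        + weight * exploration_value.get(ang, 0.0),
    )
```

In a corridor, every visible normal points across the hallway, so H has a zero eigenvalue along the corridor axis; a scan direction that also sees an end wall raises the minimum eigenvalue and is preferred unless its exploration score is much lower.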

SEER-VAR: Semantic Egocentric Environment Reasoner for Vehicle Augmented Reality

Aug 24, 2025

Abstract: We present SEER-VAR, a novel framework for egocentric vehicle-based augmented reality (AR) that unifies semantic decomposition, Context-Aware SLAM Branches (CASB), and LLM-driven recommendation. Unlike existing systems that assume static or single-view settings, SEER-VAR dynamically separates cabin and road scenes via depth-guided vision-language grounding. Two SLAM branches track egocentric motion in each context, while a GPT-based module generates context-aware overlays such as dashboard cues and hazard alerts. To support evaluation, we introduce EgoSLAM-Drive, a real-world dataset featuring synchronized egocentric views, 6DoF ground-truth poses, and AR annotations across diverse driving scenarios. Experiments demonstrate that SEER-VAR achieves robust spatial alignment and perceptually coherent AR rendering across varied environments. As one of the first works to explore LLM-based AR recommendation in egocentric driving, we address the lack of comparable systems through structured prompting and detailed user studies. Results show that SEER-VAR enhances perceived scene understanding, overlay relevance, and driver ease, providing an effective foundation for future research in this direction. Code and dataset will be made open source.
