Jiyao Wang

CleanUpBench: Embodied Sweeping and Grasping Benchmark

Aug 07, 2025

Abstract:Embodied AI benchmarks have advanced navigation, manipulation, and reasoning, but most target complex humanoid agents or large-scale simulations that are far from real-world deployment. In contrast, mobile cleaning robots with dual-mode capabilities, such as sweeping and grasping, are rapidly emerging as realistic and commercially viable platforms. However, no benchmark currently exists that systematically evaluates these agents in structured, multi-target cleaning tasks, revealing a critical gap between academic research and real-world applications. We introduce CleanUpBench, a reproducible and extensible benchmark for evaluating embodied agents in realistic indoor cleaning scenarios. Built on NVIDIA Isaac Sim, CleanUpBench simulates a mobile service robot equipped with a sweeping mechanism and a six-degree-of-freedom robotic arm, enabling interaction with heterogeneous objects. The benchmark includes manually designed environments and one procedurally generated layout to assess generalization, along with a comprehensive evaluation suite covering task completion, spatial efficiency, motion quality, and control performance. To support comparative studies, we provide baseline agents based on heuristic strategies and map-based planning. CleanUpBench bridges the gap between low-level skill evaluation and full-scene testing, offering a scalable testbed for grounded, embodied intelligence in everyday settings.

Not Only Consistency: Enhance Test-Time Adaptation with Spatio-temporal Inconsistency for Remote Physiological Measurement

Jul 10, 2025

Abstract:Remote photoplethysmography (rPPG) has emerged as a promising non-invasive method for monitoring physiological signals using a camera. Although various domain adaptation and generalization methods have been proposed to improve the adaptability of deep learning-based rPPG models in unseen deployment environments, considerations such as privacy and real-time adaptation restrict their use in real-world deployment. We therefore propose a novel fully Test-Time Adaptation (TTA) strategy tailored for rPPG tasks. Specifically, based on prior knowledge in physiology and our own observations, we find that rPPG signals exhibit not only spatio-temporal consistency in the frequency domain but also significant inconsistency in the time domain. By leveraging both the consistency and inconsistency priors, we introduce an expert knowledge-based self-supervised Consistency-inConsistency-integration (CiCi) framework to enhance model adaptation during inference. In addition, our approach incorporates a gradient dynamic control mechanism to mitigate potential conflicts between the priors, ensuring stable adaptation across instances. In extensive experiments on five diverse datasets under the TTA protocol, our method consistently outperforms existing techniques, achieving state-of-the-art performance in real-time self-supervised adaptation without accessing source data. The code will be released later.

Sage Deer: A Super-Aligned Driving Generalist Is Your Copilot

May 15, 2025

Abstract:The intelligent driving cockpit, an important part of intelligent driving, needs to match different users' comfort, interaction, and safety needs. This paper aims to build a Super-Aligned and GEneralist DRiving agent, SAGE DeeR. Sage Deer achieves three highlights: (1) Super alignment: It reacts differently according to different people's preferences and biases. (2) Generalist: It can understand multi-view and multi-mode inputs to reason about the user's physiological indicators, facial emotions, hand movements, body movements, driving scenarios, and behavioral decisions. (3) Self-Eliciting: It can elicit implicit thought chains in the language space to further increase its generalist and super-aligned abilities. In addition, we collected multiple datasets and built a large-scale benchmark. This benchmark measures the deer's perceptual decision-making ability and the accuracy of its super alignment.

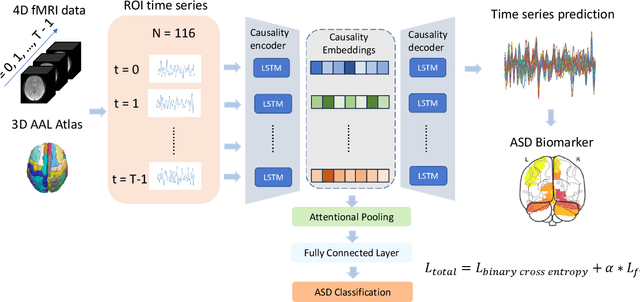

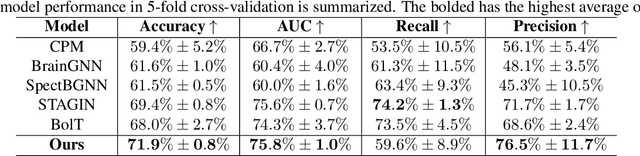

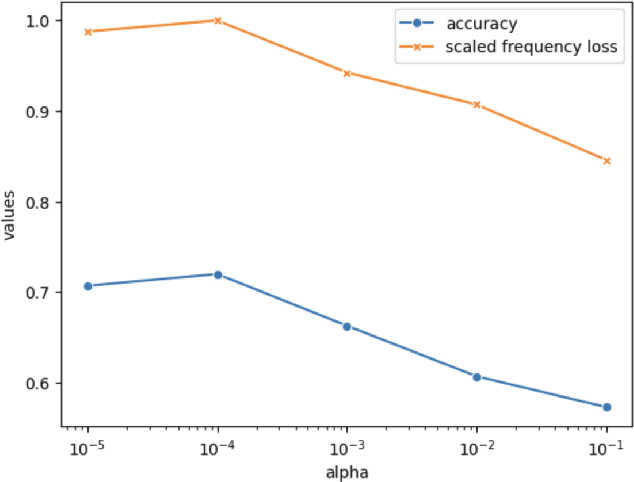

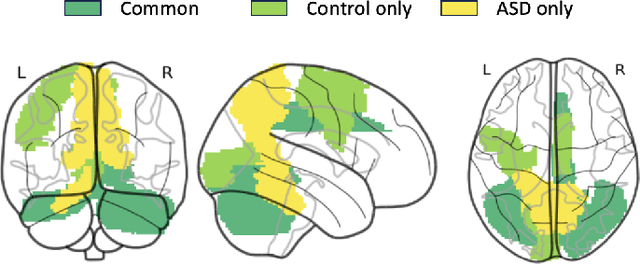

Causal Modeling of fMRI Time-series for Interpretable Autism Spectrum Disorder Classification

Feb 21, 2025

Abstract:Autism spectrum disorder (ASD) is a neurological and developmental disorder that affects social and communicative behaviors. It emerges in early life and is generally associated with lifelong disabilities. Thus, accurate and early diagnosis could improve treatment outcomes for those with ASD. Functional magnetic resonance imaging (fMRI) is a useful tool that measures changes in brain signaling to facilitate our understanding of ASD. Much effort is being made to identify ASD biomarkers using various connectome-based machine learning and deep learning classifiers. However, correlation-based models cannot capture the non-linear interactions between brain regions. To solve this problem, we introduce a causality-inspired deep learning model that uses time-series information from fMRI and captures causality among regions of interest (ROIs) useful for ASD classification. The model is compared with other baseline and state-of-the-art models using 5-fold cross-validation on the ABIDE dataset. We filtered the dataset by choosing all the images with mean FD less than 15mm to ensure data quality. Our proposed model achieved the highest average classification accuracy of 71.9% and an average AUC of 75.8%. Moreover, the inter-ROI causality interpretation of the model suggests that the left precuneus, right precuneus, and cerebellum rank among the top 10 ROIs in inter-ROI causality in the ASD population. In contrast, these ROIs are not ranked in the top 10 in the control population. We have validated our findings against the literature and found that abnormalities in these ROIs are often associated with ASD.

STAHGNet: Modeling Hybrid-grained Heterogenous Dependency Efficiently for Traffic Prediction

Dec 23, 2024

Abstract:Traffic flow prediction (TFP) plays a critical role in the intelligent transportation system, and it is also a challenging task because of the underlying complex spatio-temporal patterns and heterogeneities evolving across time. However, most existing works concentrate solely on capturing spatio-temporal dependency or extracting implicit similarity graphs, and ignore the hybrid-granularity evolution in their modeling process. In this paper, we propose a novel data-driven end-to-end framework, named Spatio-Temporal Aware Hybrid Graph Network (STAHGNet), to couple the hybrid-grained heterogeneous correlations in series simultaneously through an elaborately designed Hybrid Graph Attention Module (HGAT) and a Coarse-granularity Temporal Graph (CTG) generator. Furthermore, an automotive feature engineering with domain knowledge and a random neighbor sampling strategy are utilized to improve efficiency and reduce computational complexity. MAE, RMSE, and MAPE are used as evaluation metrics. Tested on four real-life datasets, our proposal outperforms eight classical baselines and four state-of-the-art (SOTA) methods (e.g., MAE 14.82 on PeMSD3; MAE 18.92 on PeMSD4). In addition, extensive experiments and visualizations verify the effectiveness of each component in STAHGNet. In terms of computational cost, STAHGNet saves at least four times the space compared to the previous SOTA models. The proposed model will be beneficial for more efficient TFP as well as intelligent transport system construction.
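
As a reading aid for the three evaluation metrics named in this abstract, the following is a minimal sketch of their standard textbook definitions. It is not taken from the STAHGNet code; the function names, the `eps` threshold for skipping zero targets in MAPE, and the sample values are illustrative assumptions.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average magnitude of the prediction errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: penalizes large errors more heavily than MAE."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


def mape(y_true, y_pred, eps=1e-8):
    """Mean absolute percentage error, in percent.

    Assumption: targets with magnitude <= eps are skipped to avoid division
    by zero; papers differ in how they handle zero-flow readings.
    """
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if abs(t) > eps]
    return 100.0 * sum(abs((t - p) / t) for t, p in pairs) / len(pairs)


# Hypothetical traffic-flow readings (vehicles per interval) and predictions.
flow_true = [100.0, 200.0, 400.0]
flow_pred = [110.0, 190.0, 380.0]

print(f"MAE:  {mae(flow_true, flow_pred):.2f}")
print(f"RMSE: {rmse(flow_true, flow_pred):.2f}")
print(f"MAPE: {mape(flow_true, flow_pred):.2f}%")
```

MAE and RMSE are in the same units as the flow values, while MAPE is scale-free, which is one reason traffic prediction papers commonly report all three together.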

Efficient Mixture-of-Expert for Video-based Driver State and Physiological Multi-task Estimation in Conditional Autonomous Driving

Oct 28, 2024

Abstract:Road safety remains a critical challenge worldwide, with approximately 1.35 million fatalities annually attributed to traffic accidents, often due to human error. As we advance towards higher levels of vehicle automation, challenges still exist, as driving with automation can cognitively over-demand drivers if they engage in non-driving-related tasks (NDRTs), or lead to drowsiness if driving is the sole task. This creates an urgent need for an effective Driver Monitoring System (DMS) that can evaluate cognitive load and drowsiness in SAE Level-2/3 autonomous driving contexts. In this study, we propose a novel multi-task DMS, termed VDMoE, which leverages RGB video input to monitor driver states non-invasively. By utilizing key facial features to minimize computational load and integrating remote photoplethysmography (rPPG) for physiological insights, our approach enhances detection accuracy while maintaining efficiency. Additionally, we optimize the Mixture-of-Experts (MoE) framework to accommodate multi-modal inputs and improve performance across different tasks. A novel prior-inclusive regularization method is introduced to align model outputs with statistical priors, thus accelerating convergence and mitigating overfitting risks. We validate our method on a newly created dataset (MCDD), which comprises RGB video and physiological indicators from 42 participants, and on two public datasets. Our findings demonstrate the effectiveness of VDMoE in monitoring driver states, contributing to safer autonomous driving systems. The code and data will be released.

STNAGNN: Spatiotemporal Node Attention Graph Neural Network for Task-based fMRI Analysis

Jun 17, 2024

Abstract:Task-based fMRI uses actions or stimuli to trigger task-specific brain responses and measures them using BOLD contrast. Despite the significant task-induced spatiotemporal brain activation fluctuations, most studies on task-based fMRI ignore the task context information aligned with fMRI and consider task-based fMRI a coherent sequence. In this paper, we show that using the task structures as data-driven guidance is effective for spatiotemporal analysis. We propose STNAGNN, a GNN-based spatiotemporal architecture, and validate its performance in an autism classification task. The trained model is also interpreted for identifying autism-related spatiotemporal brain biomarkers.

PhysMLE: Generalizable and Priors-Inclusive Multi-task Remote Physiological Measurement

May 10, 2024

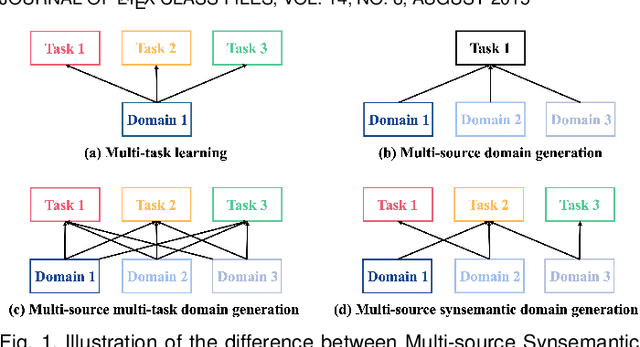

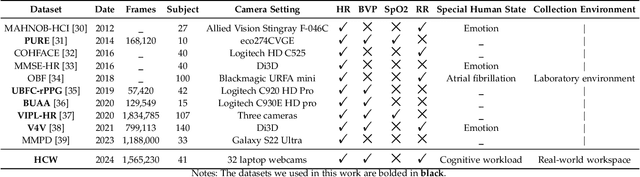

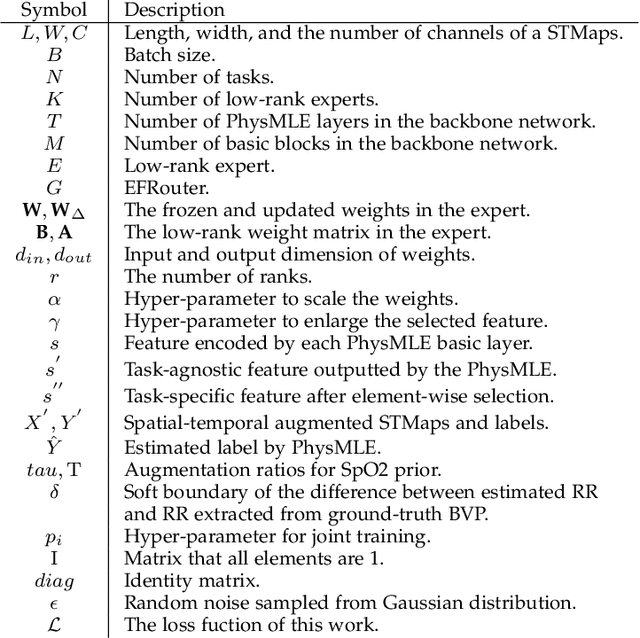

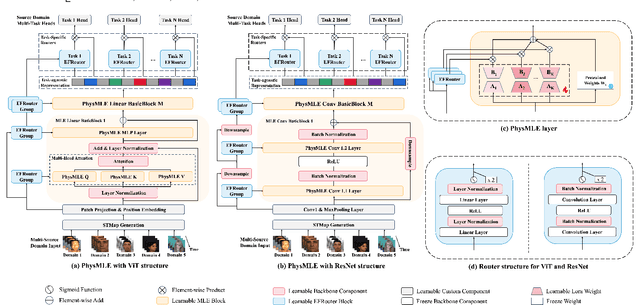

Abstract:Remote photoplethysmography (rPPG) has been widely applied to measure heart rate from face videos. To increase the generalizability of the algorithms, domain generalization (DG) has attracted increasing attention in rPPG. However, when rPPG is extended to simultaneously measure more vital signs (e.g., respiration and blood oxygen saturation), achieving generalizability brings new challenges. Although partial features shared among different physiological signals can benefit multi-task learning, the sparse and imbalanced target label space causes a seesaw effect in task-specific feature learning. To resolve this problem, we designed an end-to-end Mixture of Low-rank Experts for multi-task remote Physiological measurement (PhysMLE), which is based on multiple low-rank experts with a novel router mechanism, thereby enabling the model to adeptly handle both specifications and correlations within tasks. Additionally, we introduced prior knowledge from physiology among tasks to overcome the imbalance of the label space in real-world multi-task physiological measurement. For fair and comprehensive evaluation, this paper proposes a large-scale multi-task generalization benchmark, named the Multi-Source Synsemantic Domain Generalization (MSSDG) protocol. Extensive experiments under MSSDG and intra-dataset settings show the effectiveness and efficiency of PhysMLE. In addition, a new dataset was collected and made publicly available to meet the needs of the MSSDG.

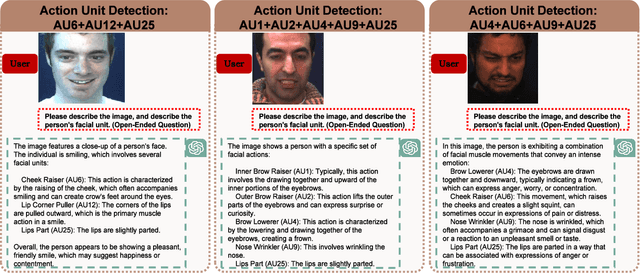

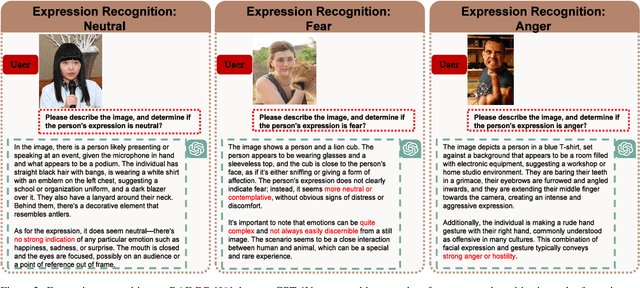

GPT as Psychologist? Preliminary Evaluations for GPT-4V on Visual Affective Computing

Mar 09, 2024

Abstract:Multimodal language models (MLMs) are designed to process and integrate information from multiple sources, such as text, speech, images, and videos. Despite their success in language understanding, it is critical to evaluate their performance on downstream tasks for better human-centric applications. This paper assesses the application of MLMs across 5 crucial abilities for affective computing, spanning visual affective tasks and reasoning tasks. The results show that GPT4 has high accuracy in facial action unit recognition and micro-expression detection, while its general facial expression recognition performance is not accurate. We also highlight the challenges of achieving fine-grained micro-expression recognition and the potential for further study, and we demonstrate the versatility and potential of GPT4 for handling advanced tasks in emotion recognition and related fields by integrating it with task-related agents for more complex tasks, such as heart rate estimation through signal processing. In conclusion, this paper provides valuable insights into the potential applications and challenges of MLMs in human-centric computing. Interesting samples are available at https://github.com/LuPaoPao/GPT4Affectivity.

Learning Sequential Information in Task-based fMRI for Synthetic Data Augmentation

Aug 29, 2023

Abstract:Insufficiency of training data is a persistent issue in medical image analysis, especially for task-based functional magnetic resonance images (fMRI) with spatio-temporal imaging data acquired using specific cognitive tasks. In this paper, we propose an approach for generating synthetic fMRI sequences that can then be used to create augmented training datasets in downstream learning tasks. To synthesize high-resolution task-specific fMRI, we adapt the $\alpha$-GAN structure, leveraging advantages of both GAN and variational autoencoder models, and propose different alternatives in aggregating temporal information. The synthetic images are evaluated from multiple perspectives including visualizations and an autism spectrum disorder (ASD) classification task. The results show that the synthetic task-based fMRI can provide effective data augmentation in learning the ASD classification task.
