Peizheng Li

Latency-aware Human-in-the-Loop Reinforcement Learning for Semantic Communications

Feb 17, 2026

Abstract:Semantic communication promises task-aligned transmission but must reconcile semantic fidelity with stringent latency guarantees in immersive and safety-critical services. This paper introduces a time-constrained human-in-the-loop reinforcement learning (TC-HITL-RL) framework that embeds human feedback, semantic utility, and latency control within a semantic-aware Open radio access network (RAN) architecture. We formulate semantic adaptation driven by human feedback as a constrained Markov decision process (CMDP) whose state captures semantic quality, human preferences, queue slack, and channel dynamics, and solve it via a primal-dual proximal policy optimization algorithm with action shielding and latency-aware reward shaping. The resulting policy preserves PPO-level semantic rewards while tightening the variability of both air-interface and near-real-time RAN intelligent controller processing budgets. Simulations over point-to-multipoint links with heterogeneous deadlines show that TC-HITL-RL consistently meets per-user timing constraints, outperforms baseline schedulers in reward, and stabilizes resource consumption, providing a practical blueprint for latency-aware semantic adaptation.

A Multi-Year Urban Streetlight Imagery Dataset for Visual Monitoring and Spatio-Temporal Drift Detection

Dec 13, 2025

Abstract:We present a large-scale, longitudinal visual dataset of urban streetlights captured by 22 fixed-angle cameras deployed across Bristol, U.K., from 2021 to 2025. The dataset contains over 526,000 images, collected hourly under diverse lighting, weather, and seasonal conditions. Each image is accompanied by rich metadata, including timestamps, GPS coordinates, and device identifiers. This unique real-world dataset enables detailed investigation of visual drift, anomaly detection, and MLOps strategies in smart city deployments. To promote secondary analysis, we additionally provide a self-supervised framework based on convolutional variational autoencoders (CNN-VAEs). Models are trained separately for each camera node and for day/night image sets. We define two per-sample drift metrics: relative centroid drift, capturing latent space deviation from a baseline quarter, and relative reconstruction error, measuring normalized image-domain degradation. This dataset provides a realistic, fine-grained benchmark for evaluating long-term model stability, drift-aware learning, and deployment-ready vision systems. The images and structured metadata are publicly released in JPEG and CSV formats, supporting reproducibility and downstream applications such as streetlight monitoring, weather inference, and urban scene understanding. The dataset can be found at https://doi.org/10.5281/zenodo.17781192 and https://doi.org/10.5281/zenodo.17859120.

SpaceDrive: Infusing Spatial Awareness into VLM-based Autonomous Driving

Dec 11, 2025

Abstract:End-to-end autonomous driving methods built on vision language models (VLMs) have undergone rapid development driven by their universal visual understanding and strong reasoning capabilities obtained from large-scale pretraining. However, we find that current VLMs struggle to understand fine-grained 3D spatial relationships, which is a fundamental requirement for systems interacting with the physical world. To address this issue, we propose SpaceDrive, a spatial-aware VLM-based driving framework that treats spatial information as explicit positional encodings (PEs) instead of textual digit tokens, enabling joint reasoning over semantic and spatial representations. SpaceDrive applies a universal positional encoder to all 3D coordinates derived from multi-view depth estimation, historical ego-states, and text prompts. These 3D PEs are first superimposed to augment the corresponding 2D visual tokens. Meanwhile, they serve as a task-agnostic coordinate representation, replacing the digit-wise numerical tokens as both inputs and outputs for the VLM. This mechanism enables the model to better index specific visual semantics in spatial reasoning and directly regress trajectory coordinates rather than generating them digit by digit, thereby enhancing planning accuracy. Extensive experiments validate that SpaceDrive achieves state-of-the-art open-loop performance on the nuScenes dataset and the second-best Driving Score of 78.02 on the Bench2Drive closed-loop benchmark among existing VLM-based methods.

SEER-VAR: Semantic Egocentric Environment Reasoner for Vehicle Augmented Reality

Aug 24, 2025

Abstract:We present SEER-VAR, a novel framework for egocentric vehicle-based augmented reality (AR) that unifies semantic decomposition, Context-Aware SLAM Branches (CASB), and LLM-driven recommendation. Unlike existing systems that assume static or single-view settings, SEER-VAR dynamically separates cabin and road scenes via depth-guided vision-language grounding. Two SLAM branches track egocentric motion in each context, while a GPT-based module generates context-aware overlays such as dashboard cues and hazard alerts. To support evaluation, we introduce EgoSLAM-Drive, a real-world dataset featuring synchronized egocentric views, 6DoF ground-truth poses, and AR annotations across diverse driving scenarios. Experiments demonstrate that SEER-VAR achieves robust spatial alignment and perceptually coherent AR rendering across varied environments. As one of the first to explore LLM-based AR recommendation in egocentric driving, we address the lack of comparable systems through structured prompting and detailed user studies. Results show that SEER-VAR enhances perceived scene understanding, overlay relevance, and driver ease, providing an effective foundation for future research in this direction. Code and dataset will be made open source.

Flying Base Stations for Offshore Wind Farm Monitoring and Control: Holistic Performance Evaluation and Optimization

Jul 10, 2025

Abstract:Ensuring reliable and low-latency communication in offshore wind farms is critical for efficient monitoring and control, yet remains challenging due to the harsh environment and lack of infrastructure. This paper investigates a flying base station (FBS) approach for wide-area monitoring and control in the UK Hornsea offshore wind farm project. By leveraging mobile, flexible FBS platforms in the remote and harsh offshore environment, the proposed system offers real-time connectivity for turbines without the need to deploy permanent infrastructure at sea. We develop a detailed and practical end-to-end latency model accounting for five key factors: flight duration, connection establishment, turbine state information upload, computational delay, and control transmission, to provide a holistic perspective often missing in prior studies. Furthermore, we combine trajectory planning, beamforming, and resource allocation into a multi-objective optimization framework for overall latency minimization, specifically designed for large-scale offshore wind farm deployments. Simulation results verify the effectiveness of our proposed method in minimizing latency and enhancing efficiency in FBS-assisted offshore monitoring across various power levels, while consistently outperforming baseline designs.

A Heuristic-Integrated DRL Approach for Phase Optimization in Large-Scale RISs

May 07, 2025

Abstract:Optimizing discrete phase shifts in large-scale reconfigurable intelligent surfaces (RISs) is challenging due to the non-convex and non-linear nature of the problem. In this letter, we propose a heuristic-integrated deep reinforcement learning (DRL) framework that (1) leverages accumulated actions over multiple steps in the double deep Q-network (DDQN) for RIS column-wise control and (2) integrates a greedy algorithm (GA) into each DRL step to refine the state via fine-grained, element-wise optimization of RIS configurations. By learning from GA-included states, the proposed approach effectively addresses RIS optimization within a small DRL action space, demonstrating its capability to optimize phase-shift configurations of large-scale RISs.

AGO: Adaptive Grounding for Open World 3D Occupancy Prediction

Apr 14, 2025

Abstract:Open-world 3D semantic occupancy prediction aims to generate a voxelized 3D representation from sensor inputs while recognizing both known and unknown objects. Transferring open-vocabulary knowledge from vision-language models (VLMs) offers a promising direction but remains challenging. Methods based on VLM-derived 2D pseudo-labels with traditional supervision are limited by a predefined label space and lack general prediction capabilities. Direct alignment with pretrained image embeddings, on the other hand, fails to achieve reliable performance because image and text representations in VLMs are often inconsistent. To address these challenges, we propose AGO, a novel 3D occupancy prediction framework with adaptive grounding to handle diverse open-world scenarios. AGO first encodes surrounding images and class prompts into 3D and text embeddings, respectively, leveraging similarity-based grounding training with 3D pseudo-labels. Additionally, a modality adapter maps 3D embeddings into a space aligned with VLM-derived image embeddings, reducing modality gaps. Experiments on Occ3D-nuScenes show that AGO improves unknown object prediction in zero-shot and few-shot transfer while achieving state-of-the-art closed-world self-supervised performance, surpassing prior methods by 4.09 mIoU.

TQD-Track: Temporal Query Denoising for 3D Multi-Object Tracking

Apr 04, 2025

Abstract:Query denoising has become a standard training strategy for DETR-based detectors by addressing the slow convergence issue. Beyond that, query denoising can increase the diversity of training samples for modeling complex scenarios, which is critical for Multi-Object Tracking (MOT), showing its potential in MOT applications. Existing approaches integrate query denoising within the tracking-by-attention paradigm. However, as the denoising process only happens within a single frame, it does not help the tracker learn temporal information. In addition, the attention mask in query denoising prevents information exchange between denoising and object queries, limiting its potential for improving association using self-attention. To address these issues, we propose TQD-Track, which introduces Temporal Query Denoising (TQD) tailored for MOT, enabling denoising queries to carry temporal information and instance-specific feature representations. We apply diverse noise types to denoising queries to simulate real-world challenges in MOT. We analyze our proposed TQD for different tracking paradigms and find that paradigms with an explicit learned data association module, e.g., tracking-by-detection or alternating detection and association, benefit from TQD by a larger margin. For these paradigms, we further design an association mask in the association module to ensure that the interaction between track and detection queries is consistent with inference. Extensive experiments on the nuScenes dataset demonstrate that our approach consistently enhances different tracking methods by only changing the training process, especially paradigms with an explicit association module.

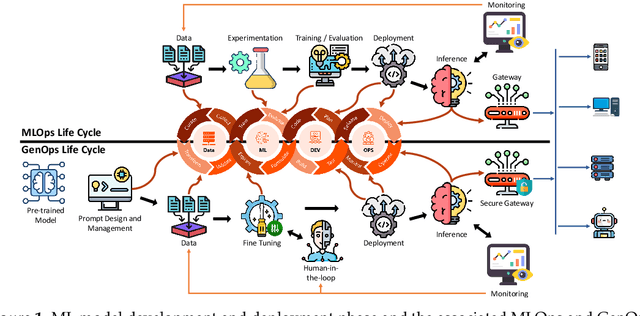

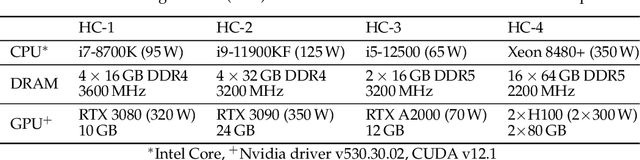

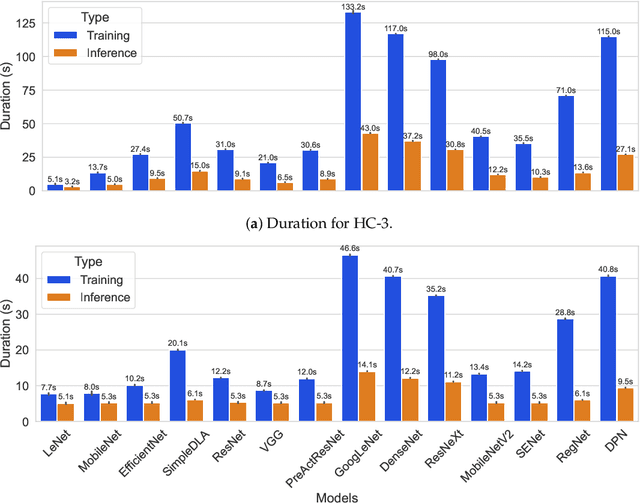

Green MLOps to Green GenOps: An Empirical Study of Energy Consumption in Discriminative and Generative AI Operations

Mar 31, 2025

Abstract:This study presents an empirical investigation into the energy consumption of Discriminative and Generative AI models within real-world MLOps pipelines. For Discriminative models, we examine various architectures and hyperparameters during training and inference and identify energy-efficient practices. For Generative AI, Large Language Models (LLMs) are assessed, focusing primarily on energy consumption across different model sizes and varying service requests. Our study employs software-based power measurements, ensuring ease of replication across diverse configurations, models, and datasets. We analyse multiple models and hardware setups to uncover correlations among various metrics, identifying key contributors to energy consumption. The results indicate that for Discriminative models, optimising architectures, hyperparameters, and hardware can significantly reduce energy consumption without sacrificing performance. For LLMs, energy efficiency depends on balancing model size, reasoning complexity, and request-handling capacity, as larger models do not necessarily consume more energy when utilisation remains low. This analysis provides practical guidelines for designing green and sustainable ML operations, emphasising energy consumption and carbon footprint reductions while maintaining performance. This paper can serve as a benchmark for accurately estimating total energy use across different types of AI models.

Task-Oriented Connectivity for Networked Robotics with Generative AI and Semantic Communications

Mar 09, 2025

Abstract:The convergence of robotics, advanced communication networks, and artificial intelligence (AI) holds the promise of transforming industries through fully automated and intelligent operations. In this work, we introduce a novel co-working framework for robots that unifies goal-oriented semantic communication (SemCom) with a Generative AI (GenAI)-agent under a semantic-aware network. SemCom prioritizes the exchange of meaningful information among robots and the network, thereby reducing overhead and latency. Meanwhile, the GenAI-agent leverages generative AI models to interpret high-level task instructions, allocate resources, and adapt to dynamic changes in both network and robotic environments. This agent-driven paradigm ushers in a new level of autonomy and intelligence, enabling complex tasks of networked robots to be conducted with minimal human intervention. We validate our approach through a multi-robot anomaly detection use-case simulation, where robots detect, compress, and transmit relevant information for classification. Simulation results confirm that SemCom significantly reduces data traffic while preserving critical semantic details, and the GenAI-agent ensures task coordination and network adaptation. This synergy provides a robust, efficient, and scalable solution for modern industrial environments.
