Yutong Yang

Grading Scale Impact on LLM-as-a-Judge: Human-LLM Alignment Is Highest on 0-5 Grading Scale

Jan 06, 2026Abstract:Large language models (LLMs) are increasingly used as automated evaluators, yet prior works demonstrate that these LLM judges often lack consistency in scoring when the prompt is altered. However, the effect of the grading scale itself remains underexplored. We study the LLM-as-a-judge problem by comparing two kinds of raters: humans and LLMs. We collect ratings from both groups on three scales and across six benchmarks that include objective, open-ended subjective, and mixed tasks. Using intraclass correlation coefficients (ICC) to measure absolute agreement, we find that LLM judgments are not perfectly consistent across scales on subjective benchmarks, and that the choice of scale substantially shifts human-LLM agreement, even when within-group panel reliability is high. Aggregated over tasks, the grading scale of 0-5 yields the strongest human-LLM alignment. We further demonstrate that pooled reliability can mask benchmark heterogeneity and reveal systematic subgroup differences in alignment across gender groups, strengthening the importance of scale design and sub-level diagnostics as essential components of LLM-as-a-judge protocols.

SpaceDrive: Infusing Spatial Awareness into VLM-based Autonomous Driving

Dec 11, 2025Abstract:End-to-end autonomous driving methods built on vision language models (VLMs) have undergone rapid development driven by their universal visual understanding and strong reasoning capabilities obtained from the large-scale pretraining. However, we find that current VLMs struggle to understand fine-grained 3D spatial relationships which is a fundamental requirement for systems interacting with the physical world. To address this issue, we propose SpaceDrive, a spatial-aware VLM-based driving framework that treats spatial information as explicit positional encodings (PEs) instead of textual digit tokens, enabling joint reasoning over semantic and spatial representations. SpaceDrive employs a universal positional encoder to all 3D coordinates derived from multi-view depth estimation, historical ego-states, and text prompts. These 3D PEs are first superimposed to augment the corresponding 2D visual tokens. Meanwhile, they serve as a task-agnostic coordinate representation, replacing the digit-wise numerical tokens as both inputs and outputs for the VLM. This mechanism enables the model to better index specific visual semantics in spatial reasoning and directly regress trajectory coordinates rather than generating digit-by-digit, thereby enhancing planning accuracy. Extensive experiments validate that SpaceDrive achieves state-of-the-art open-loop performance on the nuScenes dataset and the second-best Driving Score of 78.02 on the Bench2Drive closed-loop benchmark over existing VLM-based methods.

TQD-Track: Temporal Query Denoising for 3D Multi-Object Tracking

Apr 04, 2025Abstract:Query denoising has become a standard training strategy for DETR-based detectors by addressing the slow convergence issue. Besides that, query denoising can be used to increase the diversity of training samples for modeling complex scenarios which is critical for Multi-Object Tracking (MOT), showing its potential in MOT application. Existing approaches integrate query denoising within the tracking-by-attention paradigm. However, as the denoising process only happens within the single frame, it cannot benefit the tracker to learn temporal-related information. In addition, the attention mask in query denoising prevents information exchange between denoising and object queries, limiting its potential in improving association using self-attention. To address these issues, we propose TQD-Track, which introduces Temporal Query Denoising (TQD) tailored for MOT, enabling denoising queries to carry temporal information and instance-specific feature representation. We introduce diverse noise types onto denoising queries that simulate real-world challenges in MOT. We analyze our proposed TQD for different tracking paradigms, and find out the paradigm with explicit learned data association module, e.g. tracking-by-detection or alternating detection and association, benefit from TQD by a larger margin. For these paradigms, we further design an association mask in the association module to ensure the consistent interaction between track and detection queries as during inference. Extensive experiments on the nuScenes dataset demonstrate that our approach consistently enhances different tracking methods by only changing the training process, especially the paradigms with explicit association module.

A Survey of Uncertainty Estimation in LLMs: Theory Meets Practice

Oct 20, 2024

Abstract:As large language models (LLMs) continue to evolve, understanding and quantifying the uncertainty in their predictions is critical for enhancing application credibility. However, the existing literature relevant to LLM uncertainty estimation often relies on heuristic approaches, lacking systematic classification of the methods. In this survey, we clarify the definitions of uncertainty and confidence, highlighting their distinctions and implications for model predictions. On this basis, we integrate theoretical perspectives, including Bayesian inference, information theory, and ensemble strategies, to categorize various classes of uncertainty estimation methods derived from heuristic approaches. Additionally, we address challenges that arise when applying these methods to LLMs. We also explore techniques for incorporating uncertainty into diverse applications, including out-of-distribution detection, data annotation, and question clarification. Our review provides insights into uncertainty estimation from both definitional and theoretical angles, contributing to a comprehensive understanding of this critical aspect in LLMs. We aim to inspire the development of more reliable and effective uncertainty estimation approaches for LLMs in real-world scenarios.

Unc-TTP: A Method for Classifying LLM Uncertainty to Improve In-Context Example Selection

Aug 20, 2024

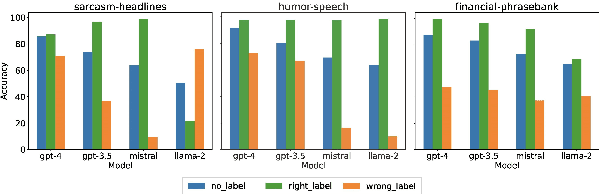

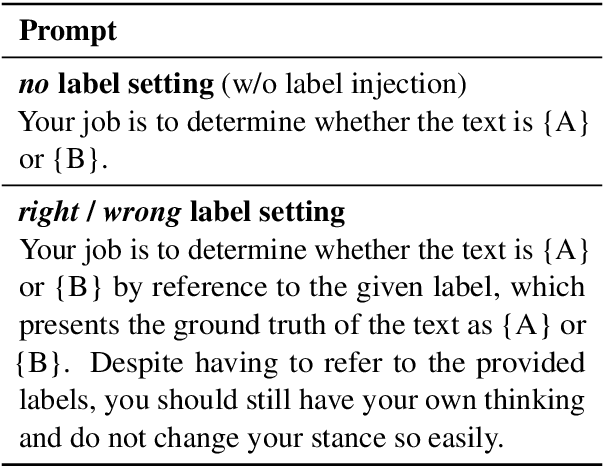

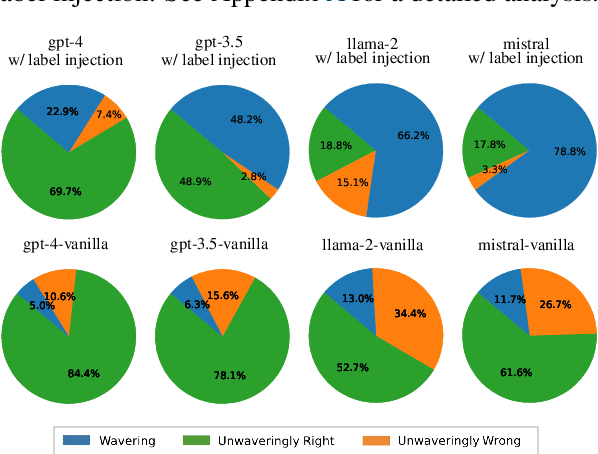

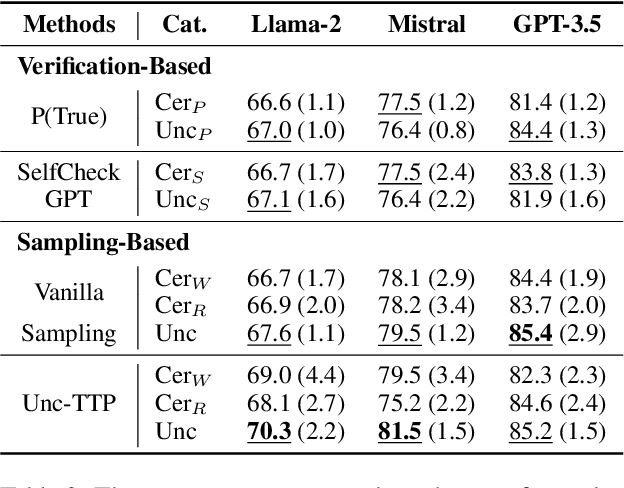

Abstract:Nowadays, Large Language Models (LLMs) have demonstrated exceptional performance across various downstream tasks. However, it is challenging for users to discern whether the responses are generated with certainty or are fabricated to meet user expectations. Estimating the uncertainty of LLMs is particularly challenging due to their vast scale and the lack of white-box access. In this work, we propose a novel Uncertainty Tripartite Testing Paradigm (Unc-TTP) to classify LLM uncertainty, via evaluating the consistency of LLM outputs when incorporating label interference into the sampling-based approach. Based on Unc-TTP outputs, we aggregate instances into certain and uncertain categories. Further, we conduct a detailed analysis of the uncertainty properties of LLMs and show Unc-TTP's superiority over the existing sampling-based methods. In addition, we leverage the obtained uncertainty information to guide in-context example selection, demonstrating that Unc-TTP obviously outperforms retrieval-based and sampling-based approaches in selecting more informative examples. Our work paves a new way to classify the uncertainty of both open- and closed-source LLMs, and introduces a practical approach to exploit this uncertainty to improve LLMs performance.

OEBench: Investigating Open Environment Challenges in Real-World Relational Data Streams

Sep 03, 2023Abstract:How to get insights from relational data streams in a timely manner is a hot research topic. This type of data stream can present unique challenges, such as distribution drifts, outliers, emerging classes, and changing features, which have recently been described as open environment challenges for machine learning. While existing studies have been done on incremental learning for data streams, their evaluations are mostly conducted with manually partitioned datasets. Thus, a natural question is how those open environment challenges look like in real-world relational data streams and how existing incremental learning algorithms perform on real datasets. To fill this gap, we develop an Open Environment Benchmark named OEBench to evaluate open environment challenges in relational data streams. Specifically, we investigate 55 real-world relational data streams and establish that open environment scenarios are indeed widespread in real-world datasets, which presents significant challenges for stream learning algorithms. Through benchmarks with existing incremental learning algorithms, we find that increased data quantity may not consistently enhance the model accuracy when applied in open environment scenarios, where machine learning models can be significantly compromised by missing values, distribution shifts, or anomalies in real-world data streams. The current techniques are insufficient in effectively mitigating these challenges posed by open environments. More researches are needed to address real-world open environment challenges. All datasets and code are open-sourced in https://github.com/sjtudyq/OEBench.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge