Qingwen Zhang

Attack-Resistant Uniform Fairness for Linear and Smooth Contextual Bandits

Feb 04, 2026

Abstract:Modern systems, such as digital platforms and service systems, increasingly rely on contextual bandits for online decision-making; however, their deployment can inadvertently create unfair exposure among arms, undermining long-term platform sustainability and supplier trust. This paper studies the contextual bandit problem under a uniform $(1-\delta)$-fairness constraint, and addresses its unique vulnerabilities to strategic manipulation. The fairness constraint ensures that preferential treatment is strictly justified by an arm's actual reward across all contexts and time horizons, using uniformity to prevent statistical loopholes. We develop novel algorithms that achieve (nearly) minimax-optimal regret for both linear and smooth reward functions, while maintaining strong $(1-\tilde{O}(1/T))$-fairness guarantees, and further characterize the theoretically inherent yet asymptotically marginal "price of fairness". However, we reveal that such merit-based fairness becomes uniquely susceptible to signal manipulation. We show that an adversary with a minimal $\tilde{O}(1)$ budget can not only degrade overall performance as in traditional attacks, but also selectively induce insidious fairness-specific failures while leaving conspicuous regret measures largely unaffected. To counter this, we design robust variants incorporating corruption-adaptive exploration and error-compensated thresholding. Our approach yields the first minimax-optimal regret bounds under $C$-budgeted attack while preserving $(1-\tilde{O}(1/T))$-fairness. Numerical experiments and a real-world case demonstrate that our algorithms sustain both fairness and efficiency.

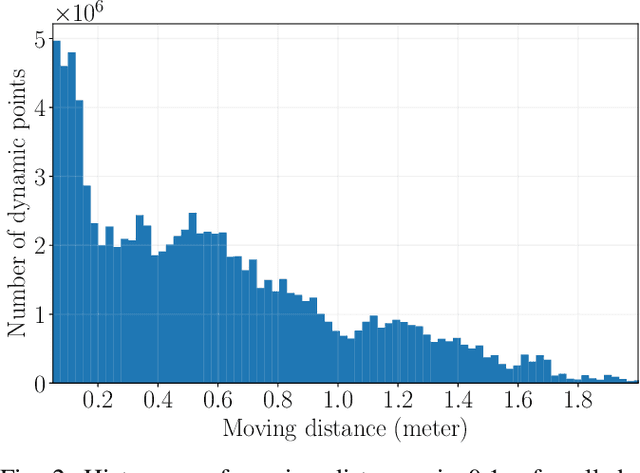

DeltaFlow: An Efficient Multi-frame Scene Flow Estimation Method

Aug 23, 2025

Abstract:Previous dominant methods for scene flow estimation focus mainly on input from two consecutive frames, neglecting valuable information in the temporal domain. While recent trends shift towards multi-frame reasoning, they suffer from rapidly escalating computational costs as the number of frames grows. To leverage temporal information more efficiently, we propose DeltaFlow ($\Delta$Flow), a lightweight 3D framework that captures motion cues via a $\Delta$ scheme, extracting temporal features with minimal computational cost, regardless of the number of frames. Additionally, scene flow estimation faces challenges such as imbalanced object class distributions and motion inconsistency. To tackle these issues, we introduce a Category-Balanced Loss to enhance learning across underrepresented classes and an Instance Consistency Loss to enforce coherent object motion, improving flow accuracy. Extensive evaluations on the Argoverse 2 and Waymo datasets show that $\Delta$Flow achieves state-of-the-art performance with up to 22% lower error and $2\times$ faster inference compared to the next-best multi-frame supervised method, while also demonstrating a strong cross-domain generalization ability. The code is open-sourced at https://github.com/Kin-Zhang/DeltaFlow along with trained model weights.

AGO: Adaptive Grounding for Open World 3D Occupancy Prediction

Apr 14, 2025

Abstract:Open-world 3D semantic occupancy prediction aims to generate a voxelized 3D representation from sensor inputs while recognizing both known and unknown objects. Transferring open-vocabulary knowledge from vision-language models (VLMs) offers a promising direction but remains challenging. However, methods based on VLM-derived 2D pseudo-labels with traditional supervision are limited by a predefined label space and lack general prediction capabilities. Direct alignment with pretrained image embeddings, on the other hand, fails to achieve reliable performance due to often inconsistent image and text representations in VLMs. To address these challenges, we propose AGO, a novel 3D occupancy prediction framework with adaptive grounding to handle diverse open-world scenarios. AGO first encodes surrounding images and class prompts into 3D and text embeddings, respectively, leveraging similarity-based grounding training with 3D pseudo-labels. Additionally, a modality adapter maps 3D embeddings into a space aligned with VLM-derived image embeddings, reducing modality gaps. Experiments on Occ3D-nuScenes show that AGO improves unknown object prediction in zero-shot and few-shot transfer while achieving state-of-the-art closed-world self-supervised performance, surpassing prior methods by 4.09 mIoU.

MambaFlow: A Novel and Flow-guided State Space Model for Scene Flow Estimation

Feb 24, 2025

Abstract:Scene flow estimation aims to predict 3D motion from consecutive point cloud frames, which is of great interest in the autonomous driving field. Existing methods face challenges such as insufficient spatio-temporal modeling and the inherent loss of fine-grained features during voxelization. However, the success of Mamba, a representative state space model (SSM) that enables global modeling with linear complexity, provides a promising solution. In this paper, we propose MambaFlow, a novel scene flow estimation network with a Mamba-based decoder. It enables deep interaction and coupling of spatio-temporal features using a well-designed backbone. Innovatively, we steer the global attention modeling of voxel-based features with point offset information using an efficient Mamba-based decoder, learning voxel-to-point patterns that are used to devoxelize shared voxel representations into point-wise features. To further enhance the model's generalization capabilities across diverse scenarios, we propose a novel scene-adaptive loss function that automatically adapts to different motion patterns. Extensive experiments on the Argoverse 2 benchmark demonstrate that MambaFlow achieves state-of-the-art performance with real-time inference speed among existing works, enabling accurate flow estimation in real-world urban scenarios. The code is available at https://github.com/SCNU-RISLAB/MambaFlow.

SSF: Sparse Long-Range Scene Flow for Autonomous Driving

Jan 29, 2025

Abstract:Scene flow enables an understanding of the motion characteristics of the environment in the 3D world. It gains particular significance at long range, where object-based perception methods might fail due to sparse observations far away. Although significant advancements have been made in scene flow pipelines to handle large-scale point clouds, a gap remains in scalability with respect to range. We attribute this limitation to the common design choice of using dense feature grids, which scale quadratically with range. In this paper, we propose Sparse Scene Flow (SSF), a general pipeline for long-range scene flow, adopting a sparse-convolution-based backbone for feature extraction. This approach introduces a new challenge: a mismatch in size and ordering of sparse feature maps between time-sequential point scans. To address this, we propose a sparse feature fusion scheme that augments the feature maps with virtual voxels at missing locations. Additionally, we propose a range-wise metric that implicitly gives greater importance to faraway points. Our method, SSF, achieves state-of-the-art results on the Argoverse 2 dataset, demonstrating strong performance in long-range scene flow estimation. Our code will be released at https://github.com/KTH-RPL/SSF.git.

SeFlow: A Self-Supervised Scene Flow Method in Autonomous Driving

Jul 01, 2024

Abstract:Scene flow estimation predicts the 3D motion at each point in successive LiDAR scans. This detailed, point-level information can help autonomous vehicles accurately predict and understand dynamic changes in their surroundings. Current state-of-the-art methods require annotated data to train scene flow networks, and the expense of labeling inherently limits their scalability. Self-supervised approaches can overcome the above limitations, yet face two principal challenges that hinder optimal performance: point distribution imbalance and disregard for object-level motion constraints. In this paper, we propose SeFlow, a self-supervised method that integrates efficient dynamic classification into a learning-based scene flow pipeline. We demonstrate that classifying static and dynamic points helps design targeted objective functions for different motion patterns. We also emphasize the importance of internal cluster consistency and correct object point association to refine the scene flow estimation, in particular on object details. Our real-time capable method achieves state-of-the-art performance on the self-supervised scene flow task on Argoverse 2 and Waymo datasets. The code is open-sourced at https://github.com/KTH-RPL/SeFlow along with trained model weights.

Hard Cases Detection in Motion Prediction by Vision-Language Foundation Models

May 31, 2024

Abstract:Addressing hard cases in autonomous driving, such as anomalous road users, extreme weather conditions, and complex traffic interactions, presents significant challenges. To ensure safety, it is crucial for autonomous driving systems to detect and manage these scenarios effectively. However, the rarity and high-risk nature of these cases demand extensive, diverse datasets for training robust models. Vision-Language Foundation Models (VLMs) have shown remarkable zero-shot capabilities owing to their training on extensive datasets. This work explores the potential of VLMs in detecting hard cases in autonomous driving. We demonstrate the capability of VLMs such as GPT-4v in detecting hard cases in traffic participant motion prediction on both agent and scenario levels. We introduce a feasible pipeline where VLMs, fed with sequential image frames and designed prompts, effectively identify challenging agents or scenarios, which are verified by existing prediction models. Moreover, by taking advantage of this detection of hard cases by VLMs, we further improve the training efficiency of the existing motion prediction pipeline by performing data selection for the training samples suggested by GPT. We show the effectiveness and feasibility of our pipeline incorporating VLMs with state-of-the-art methods on the nuScenes dataset. The code is accessible at https://github.com/KTH-RPL/Detect_VLM.

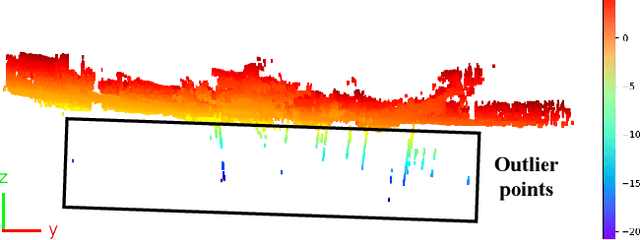

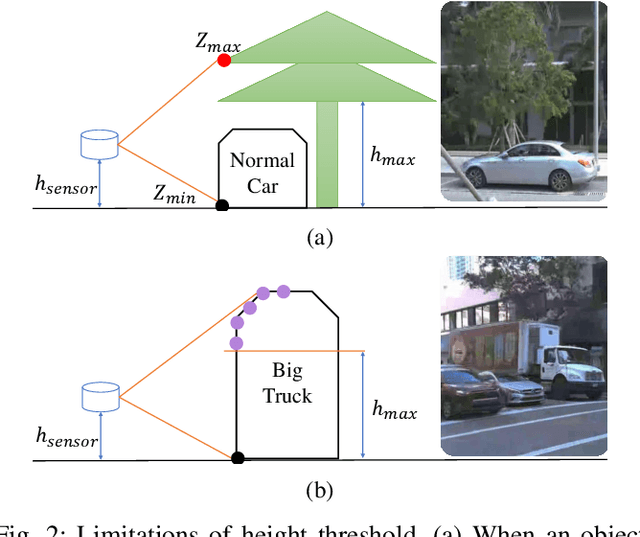

BeautyMap: Binary-Encoded Adaptable Ground Matrix for Dynamic Points Removal in Global Maps

May 12, 2024

Abstract:Global point clouds that correctly represent the static environment features can facilitate accurate localization and robust path planning. However, dynamic objects introduce undesired ghost tracks that are mixed up with the static environment. Existing dynamic removal methods normally fail to balance computational efficiency and accuracy. In response, we present BeautyMap to efficiently remove the dynamic points while retaining static features for high-fidelity global maps. Our approach utilizes a binary-encoded matrix to efficiently extract the environment features. With a bit-wise comparison between matrices of each frame and the corresponding map region, we can extract potential dynamic regions. Then we use coarse-to-fine hierarchical segmentation of the $z$-axis to handle terrain variations. The final static restoration module accounts for the range-visibility of each single scan and protects static points out of sight. Comparative experiments underscore BeautyMap's superior performance in both accuracy and efficiency against other dynamic points removal methods. The code is open-sourced at https://github.com/MKJia/BeautyMap.
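
The bit-wise comparison mentioned in the abstract can be illustrated with a minimal sketch. It is not the BeautyMap implementation: the function names `encode_column` and `potential_dynamic`, the choice of one integer bitmask per (x, y) cell, and the rule "occupied in the map but free in the scan" are all assumptions made for illustration, and the sketch ignores the hierarchical $z$-axis segmentation and the static restoration step.

```python
def encode_column(z_values, z_min, resolution, n_bits):
    """Pack the occupied height bins of one (x, y) cell into an integer bitmask.

    Hypothetical helper: bit i is set when some point falls into height bin i.
    """
    mask = 0
    for z in z_values:
        idx = int((z - z_min) // resolution)
        if 0 <= idx < n_bits:
            mask |= 1 << idx
    return mask


def potential_dynamic(map_matrix, scan_matrix):
    """Return, per cell, the bits occupied in the map but free in the scan.

    Assumed rule for this sketch only: such bins are candidates for dynamic
    points, to be confirmed or rejected by later processing.
    """
    return [
        [m & ~s for m, s in zip(map_row, scan_row)]
        for map_row, scan_row in zip(map_matrix, scan_matrix)
    ]


# One cell with points at three heights, 0.5 m bins, 4 bins per cell.
column = encode_column([0.1, 0.6, 1.7], z_min=0.0, resolution=0.5, n_bits=4)

# A 1 x 2 map region compared against the matching scan region.
candidates = potential_dynamic([[0b1011, 0b0001]], [[0b0011, 0b0001]])
```

Storing each cell as a single integer means the comparison for a whole height column costs one AND and one NOT, which is the kind of saving a binary encoding is meant to provide.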

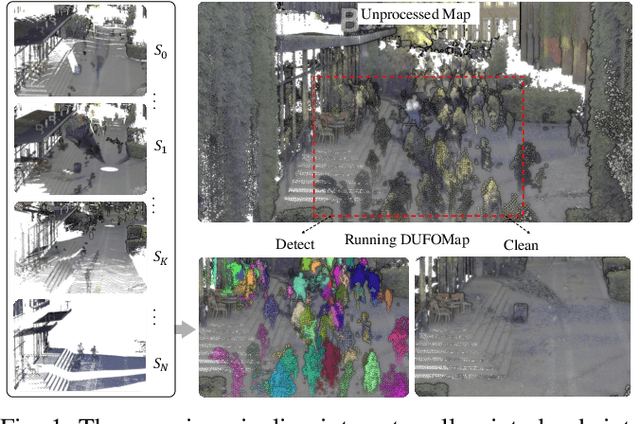

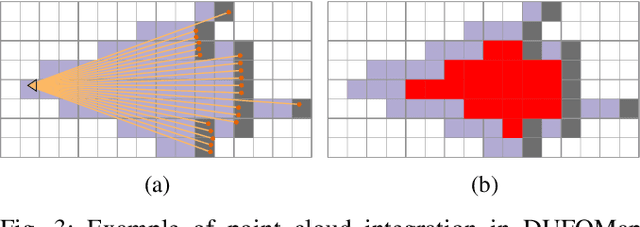

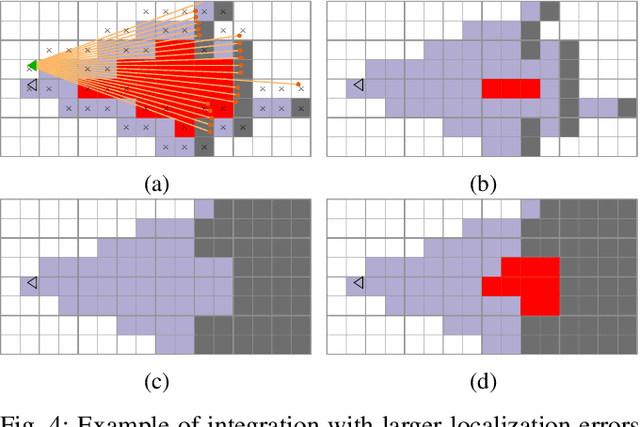

DUFOMap: Efficient Dynamic Awareness Mapping

Mar 03, 2024

Abstract:The dynamic nature of the real world is one of the main challenges in robotics. The first step in dealing with it is to detect which parts of the world are dynamic. A typical benchmark task is to create a map that contains only the static part of the world to support, for example, localization and planning. Current solutions are often applied in post-processing, where parameter tuning allows the user to adjust the setting for a specific dataset. In this paper, we propose DUFOMap, a novel dynamic awareness mapping framework designed for efficient online processing. Despite having the same parameter settings for all scenarios, it performs better than or on par with state-of-the-art methods. Ray casting is utilized to identify and classify fully observed empty regions. Since these regions have been observed empty, it follows that anything inside them at another time must be dynamic. Evaluation is carried out in various scenarios, including outdoor environments in KITTI and Argoverse 2, open areas on the KTH campus, and with different sensor types. DUFOMap outperforms the state of the art in terms of accuracy and computational efficiency. The source code, benchmarks, and links to the datasets utilized are provided. See https://kin-zhang.github.io/dufomap for more details.
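
The idea that a region observed empty by ray casting exposes dynamic points at other times can be sketched in 2D as follows. This is a simplified illustration under stated assumptions, not DUFOMap's algorithm: the helper names `cell_of`, `free_cells`, and `flag_dynamic` are hypothetical, rays are sampled at half-cell steps instead of being traversed exactly, and sensor noise and localization error are ignored.

```python
import math


def cell_of(point, res):
    """Grid cell index containing a 2D point."""
    return (math.floor(point[0] / res), math.floor(point[1] / res))


def free_cells(origin, hits, res):
    """Cells crossed by sensor rays before they reach their hit point.

    Assumption: sampling each ray at half-cell steps is accurate enough
    for this illustration.
    """
    free = set()
    for hit in hits:
        dx, dy = hit[0] - origin[0], hit[1] - origin[1]
        steps = int(math.hypot(dx, dy) / (0.5 * res))
        end = cell_of(hit, res)
        for i in range(steps):
            t = i / steps
            cell = cell_of((origin[0] + t * dx, origin[1] + t * dy), res)
            if cell != end:  # the cell holding the hit itself is occupied
                free.add(cell)
    return free


def flag_dynamic(points, free, res):
    """Mark points from another scan that fall inside observed-empty cells."""
    return [cell_of(p, res) in free for p in points]


# One ray from the sensor at (0.5, 0.5) to a wall at (4.5, 0.5), 1 m cells.
observed_empty = free_cells((0.5, 0.5), [(4.5, 0.5)], res=1.0)

# Points from a later scan: on the ray, at the wall, and off the ray.
flags = flag_dynamic([(2.5, 0.5), (4.5, 0.5), (2.5, 3.5)], observed_empty, res=1.0)
```

Only the first point is flagged: the wall cell was never observed empty, and the third point lies in a cell no ray has crossed, so nothing can be concluded about it.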

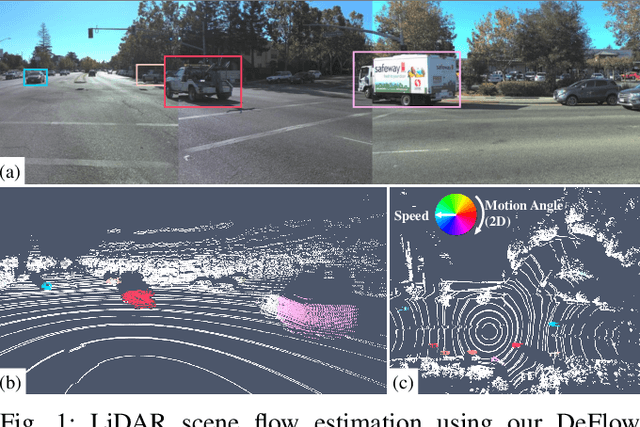

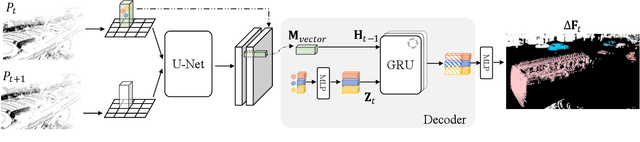

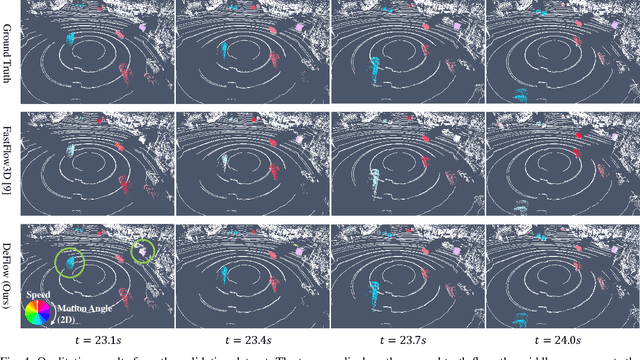

DeFlow: Decoder of Scene Flow Network in Autonomous Driving

Jan 29, 2024

Abstract:Scene flow estimation determines a scene's 3D motion field by predicting the motion of points in the scene, especially for aiding tasks in autonomous driving. Many networks with large-scale point clouds as input use voxelization to create a pseudo-image for real-time running. However, the voxelization process often results in the loss of point-specific features. This gives rise to a challenge in recovering those features for scene flow tasks. Our paper introduces DeFlow, which enables a transition from voxel-based features to point features using Gated Recurrent Unit (GRU) refinement. To further enhance scene flow estimation performance, we formulate a novel loss function that accounts for the data imbalance between static and dynamic points. Evaluations on the Argoverse 2 scene flow task reveal that DeFlow achieves state-of-the-art results on large-scale point cloud data, demonstrating that our network has better performance and efficiency compared to others. The code is open-sourced at https://github.com/KTH-RPL/deflow.
