Ruoyu Geng

AKF-LIO: LiDAR-Inertial Odometry with Gaussian Map by Adaptive Kalman Filter

Mar 10, 2025

Abstract:Existing LiDAR-Inertial Odometry (LIO) systems typically use sensor-specific or environment-dependent measurement covariances during state estimation, leading to laborious parameter tuning and suboptimal performance in challenging conditions (e.g., sensor degeneracy and noisy observations). Therefore, we propose an Adaptive Kalman Filter (AKF) framework that dynamically estimates time-varying noise covariances of LiDAR and Inertial Measurement Unit (IMU) measurements, enabling context-aware confidence weighting between sensors. During LiDAR degeneracy, the system prioritizes IMU data while suppressing contributions from unreliable inputs like moving objects or noisy point clouds. Furthermore, a compact Gaussian-based map representation is introduced to model environmental planarity and spatial noise. A correlated registration strategy ensures accurate plane normal estimation via pseudo-merge, even in unstructured environments like forests. Extensive experiments validate the robustness of the proposed system across diverse environments, including dynamic scenes and geometrically degraded scenarios. Our method achieves reliable localization results across all MARS-LVIG sequences and ranks 8th on the KITTI Odometry Benchmark. The code will be released at https://github.com/xpxie/AKF-LIO.git.

Real-Time Metric-Semantic Mapping for Autonomous Navigation in Outdoor Environments

Nov 30, 2024

Abstract:The creation of a metric-semantic map, which encodes human-prior knowledge, represents a high-level abstraction of environments. However, constructing such a map poses challenges related to the fusion of multi-modal sensor data, the attainment of real-time mapping performance, and the preservation of structural and semantic information consistency. In this paper, we introduce an online metric-semantic mapping system that utilizes LiDAR-Visual-Inertial sensing to generate a global metric-semantic mesh map of large-scale outdoor environments. Leveraging GPU acceleration, our mapping process achieves exceptional speed, with frame processing taking less than 7ms, regardless of scenario scale. Furthermore, we seamlessly integrate the resultant map into a real-world navigation system, enabling metric-semantic-based terrain assessment and autonomous point-to-point navigation within a campus environment. Through extensive experiments conducted on both publicly available and self-collected datasets comprising 24 sequences, we demonstrate the effectiveness of our mapping and navigation methodologies. Code has been publicly released: https://github.com/gogojjh/cobra

PALoc: Advancing SLAM Benchmarking with Prior-Assisted 6-DoF Trajectory Generation and Uncertainty Estimation

Feb 06, 2024

Abstract:Accurately generating ground truth (GT) trajectories is essential for Simultaneous Localization and Mapping (SLAM) evaluation, particularly under varying environmental conditions. This study introduces a systematic approach employing a prior map-assisted framework for generating dense six-degree-of-freedom (6-DoF) GT poses for the first time, enhancing the fidelity of both indoor and outdoor SLAM datasets. Our method excels in handling degenerate and stationary conditions frequently encountered in SLAM datasets, thereby increasing robustness and precision. A significant aspect of our approach is the detailed derivation of covariances within the factor graph, enabling an in-depth analysis of pose uncertainty propagation. This analysis crucially contributes to demonstrating specific pose uncertainties and enhancing trajectory reliability from both theoretical and empirical perspectives. Additionally, we provide an open-source toolbox (https://github.com/JokerJohn/Cloud_Map_Evaluation) for map evaluation criteria, facilitating the indirect assessment of overall trajectory precision. Experimental results show at least a 30% improvement in map accuracy and a 20% increase in direct trajectory accuracy compared to the Iterative Closest Point (ICP) \cite{sharp2002icp} algorithm across diverse campus environments, with substantially enhanced robustness. Our open-source solution (https://github.com/JokerJohn/PALoc), extensively applied in the FusionPortable \cite{Jiao2022Mar} dataset, is geared towards SLAM benchmark dataset augmentation and represents a significant advancement in SLAM evaluations.

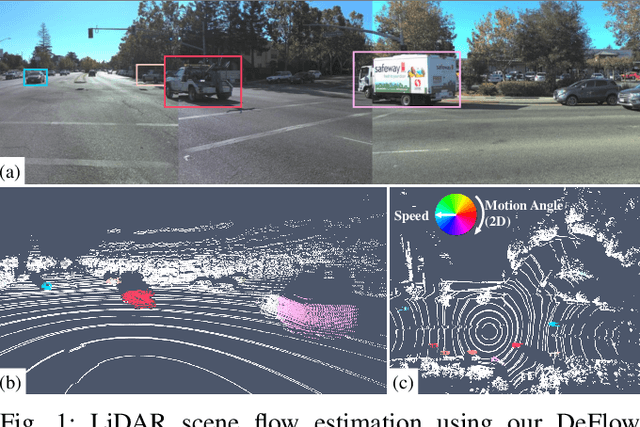

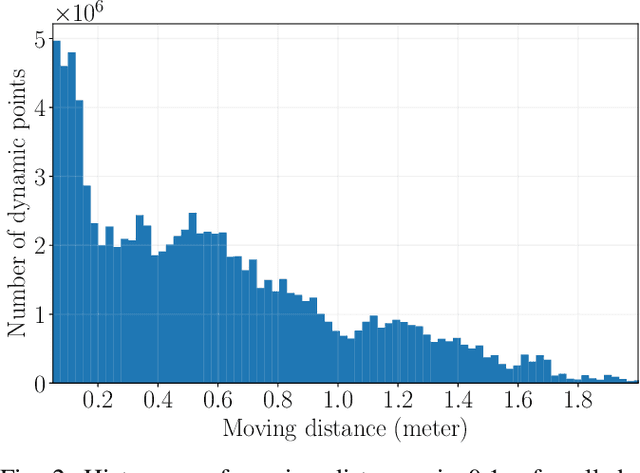

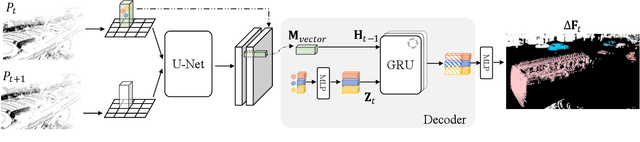

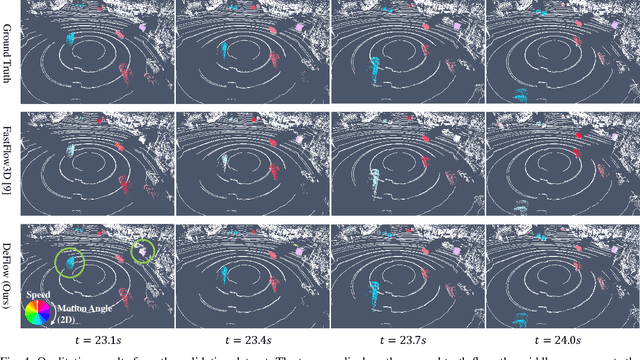

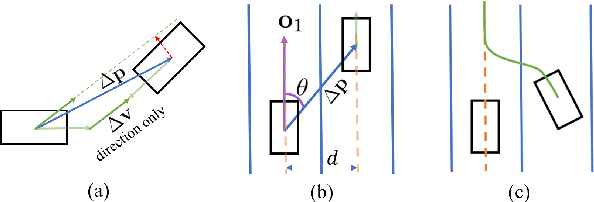

DeFlow: Decoder of Scene Flow Network in Autonomous Driving

Jan 29, 2024

Abstract:Scene flow estimation determines a scene's 3D motion field, by predicting the motion of points in the scene, especially for aiding tasks in autonomous driving. Many networks with large-scale point clouds as input use voxelization to create a pseudo-image for real-time running. However, the voxelization process often results in the loss of point-specific features. This gives rise to a challenge in recovering those features for scene flow tasks. Our paper introduces DeFlow which enables a transition from voxel-based features to point features using Gated Recurrent Unit (GRU) refinement. To further enhance scene flow estimation performance, we formulate a novel loss function that accounts for the data imbalance between static and dynamic points. Evaluations on the Argoverse 2 scene flow task reveal that DeFlow achieves state-of-the-art results on large-scale point cloud data, demonstrating that our network has better performance and efficiency compared to others. The code is open-sourced at https://github.com/KTH-RPL/deflow.

A Dynamic Points Removal Benchmark in Point Cloud Maps

Jul 14, 2023

Abstract:In the field of robotics, the point cloud has become an essential map representation. From the perspective of downstream tasks like localization and global path planning, points corresponding to dynamic objects will adversely affect their performance. Existing methods for removing dynamic points in point clouds often lack clarity in comparative evaluations and comprehensive analysis. Therefore, we propose an easy-to-extend unified benchmarking framework for evaluating techniques for removing dynamic points in maps. It includes refactored state-of-the-art methods and novel metrics to analyze the limitations of these approaches. This enables researchers to dive deep into the underlying reasons behind these limitations. The benchmark makes use of several datasets with different sensor types. All the code and datasets related to our study are publicly available for further development and utilization.

PALoc: Robust Prior-assisted Trajectory Generation for Benchmarking

May 22, 2023

Abstract:Evaluating simultaneous localization and mapping (SLAM) algorithms necessitates high-precision and dense ground truth (GT) trajectories. But obtaining desirable GT trajectories is sometimes challenging without GT tracking sensors. As an alternative, in this paper, we propose a novel prior-assisted SLAM system to generate a full six-degree-of-freedom (6-DOF) trajectory at around 10 Hz for benchmarking under the framework of the factor graph. Our degeneracy-aware map factor utilizes a prior point cloud map and LiDAR frame for point-to-plane optimization, simultaneously detecting degeneration cases to reduce drift and enhancing the consistency of pose estimation. Our system is seamlessly integrated with cutting-edge odometry via a loosely coupled scheme to generate high-rate and precise trajectories. Moreover, we propose a norm-constrained gravity factor for stationary cases, optimizing pose and gravity to boost performance. Extensive evaluations demonstrate our algorithm's superiority over existing SLAM or map-based methods in diverse scenarios in terms of precision, smoothness, and robustness. Our approach substantially advances reliable and accurate SLAM evaluation methods, fostering progress in robotics research.

* 4 pages, 6 figures

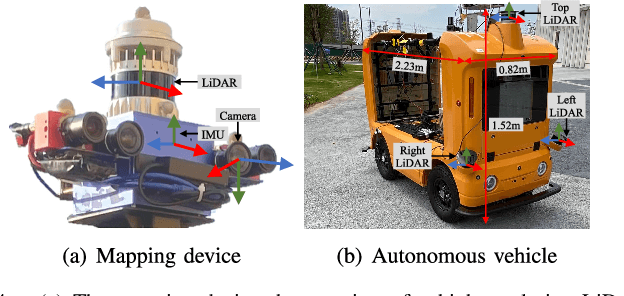

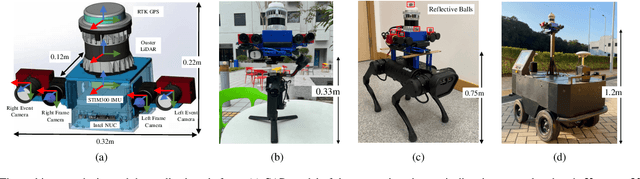

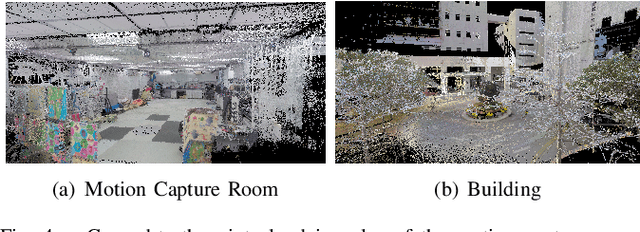

FusionPortable: A Multi-Sensor Campus-Scene Dataset for Evaluation of Localization and Mapping Accuracy on Diverse Platforms

Aug 25, 2022

Abstract:Combining multiple sensors enables a robot to maximize its perceptual awareness of environments and enhance its robustness to external disturbance, crucial to robotic navigation. This paper proposes the FusionPortable benchmark, a complete multi-sensor dataset with a diverse set of sequences for mobile robots. This paper presents three contributions. We first advance a portable and versatile multi-sensor suite that offers rich sensory measurements: 10Hz LiDAR point clouds, 20Hz stereo frame images, high-rate and asynchronous events from stereo event cameras, 200Hz inertial readings from an IMU, and 10Hz GPS signal. Sensors are already temporally synchronized in hardware. This device is lightweight, self-contained, and has plug-and-play support for mobile robots. Second, we construct a dataset by collecting 17 sequences that cover a variety of environments on the campus by exploiting multiple robot platforms for data collection. Some sequences are challenging to existing SLAM algorithms. Third, we provide ground truth for the decoupled localization and mapping performance evaluation. We additionally evaluate state-of-the-art SLAM approaches and identify their limitations. The dataset, consisting of raw sensor measurements, ground truth, calibration data, and evaluated algorithms, will be released: https://ram-lab.com/file/site/multi-sensor-dataset.

Real-time Neural Dense Elevation Mapping for Urban Terrain with Uncertainty Estimations

Aug 06, 2022

Abstract:Having good knowledge of terrain information is essential for improving the performance of various downstream tasks on complex terrains, especially for the locomotion and navigation of legged robots. We present a novel framework for neural urban terrain reconstruction with uncertainty estimations. It generates dense robot-centric elevation maps online from sparse LiDAR observations. We design a novel pre-processing and point features representation approach that ensures high robustness and computational efficiency when integrating multiple point cloud frames. A Bayesian-GAN model then recovers the detailed terrain structures while simultaneously providing the pixel-wise reconstruction uncertainty. We evaluate the proposed pipeline through extensive simulation and real-world experiments. It demonstrates efficient terrain reconstruction with high quality and real-time performance on a mobile platform, which further benefits the downstream tasks of legged robots. (See https://kin-zhang.github.io/ndem/ for more details.)

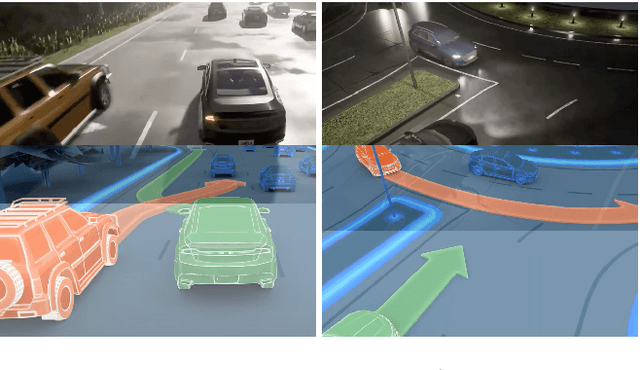

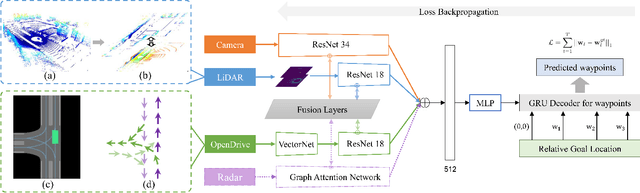

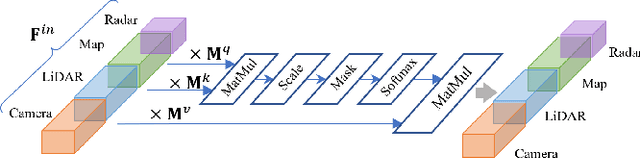

MMFN: Multi-Modal-Fusion-Net for End-to-End Driving

Jul 01, 2022

Abstract:Inspired by the fact that humans use diverse sensory organs to perceive the world, sensors with different modalities are deployed in end-to-end driving to obtain the global context of the 3D scene. In previous works, camera and LiDAR inputs are fused through transformers for better driving performance. These inputs are normally further interpreted as high-level map information to assist navigation tasks. Nevertheless, extracting useful information from the complex map input is challenging, for redundant information may mislead the agent and negatively affect driving performance. We propose a novel approach to efficiently extract features from vectorized High-Definition (HD) maps and utilize them in the end-to-end driving tasks. In addition, we design a new expert to further enhance the model performance by considering multi-road rules. Experimental results prove that both of the proposed improvements enable our agent to achieve superior performance compared with other methods.
