Ren Xin

NetRoller: Interfacing General and Specialized Models for End-to-End Autonomous Driving

Jun 17, 2025

Abstract:Integrating General Models (GMs), such as Large Language Models (LLMs), with Specialized Models (SMs) in autonomous driving tasks presents a promising approach to mitigating challenges in data diversity and model capacity of existing specialized driving models. However, this integration leads to problems of asynchronous systems, which arise from the distinct characteristics inherent in GMs and SMs. To tackle this challenge, we propose NetRoller, an adapter that incorporates a set of novel mechanisms to facilitate the seamless integration of GMs and specialized driving models. Specifically, our mechanisms for interfacing the asynchronous GMs and SMs are organized into three key stages. NetRoller first harvests semantically rich and computationally efficient representations from the reasoning processes of LLMs using an early stopping mechanism, which preserves critical insights on driving context while maintaining low overhead. It then applies learnable query embeddings, nonsensical embeddings, and positional layer embeddings to facilitate robust and efficient cross-modality translation. Finally, it employs computationally efficient Query Shift and Feature Shift mechanisms to enhance the performance of SMs through few-epoch fine-tuning. Based on the mechanisms formalized in these three stages, NetRoller enables specialized driving models to operate at their native frequencies while maintaining situational awareness of the GM. Experiments conducted on the nuScenes dataset demonstrate that integrating a GM through NetRoller significantly improves human similarity and safety in planning tasks, and it also achieves noticeable precision improvements in detection and mapping tasks for end-to-end autonomous driving. The code and models are available at https://github.com/Rex-sys-hk/NetRoller .

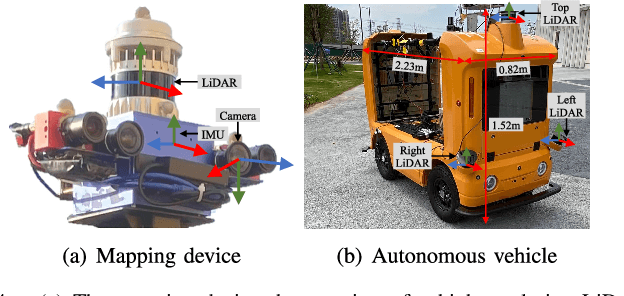

Real-Time Metric-Semantic Mapping for Autonomous Navigation in Outdoor Environments

Nov 30, 2024

Abstract:The creation of a metric-semantic map, which encodes human-prior knowledge, represents a high-level abstraction of environments. However, constructing such a map poses challenges related to the fusion of multi-modal sensor data, the attainment of real-time mapping performance, and the preservation of structural and semantic information consistency. In this paper, we introduce an online metric-semantic mapping system that utilizes LiDAR-Visual-Inertial sensing to generate a global metric-semantic mesh map of large-scale outdoor environments. Leveraging GPU acceleration, our mapping process achieves exceptional speed, with frame processing taking less than 7ms, regardless of scenario scale. Furthermore, we seamlessly integrate the resultant map into a real-world navigation system, enabling metric-semantic-based terrain assessment and autonomous point-to-point navigation within a campus environment. Through extensive experiments conducted on both publicly available and self-collected datasets comprising 24 sequences, we demonstrate the effectiveness of our mapping and navigation methodologies. Code has been publicly released: https://github.com/gogojjh/cobra

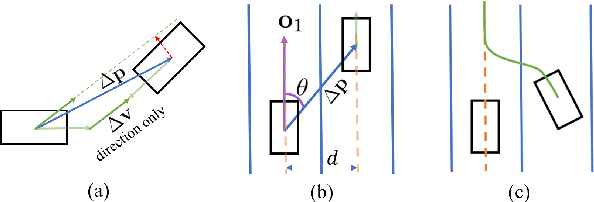

PlanScope: Learning to Plan Within Decision Scope Does Matter

Nov 01, 2024

Abstract:In the context of autonomous driving, learning-based methods have been promising for the development of planning modules. During the training process of planning modules, directly minimizing the discrepancy between expert-driving logs and planning output is widely deployed. In general, driving logs contain suddenly appearing obstacles or swiftly changing traffic signals, which typically necessitate swift and nuanced adjustments in driving maneuvers. Concurrently, future trajectories of the vehicles exhibit their long-term decisions, such as adhering to a reference lane or circumventing stationary obstacles. Due to the unpredictable influence of future events in driving logs, reasoning bias could be naturally introduced to learning-based planning modules, which leads to a possible degradation of driving performance. To address this issue, we identify the decisions and their corresponding time horizons, and characterize a so-called decision scope by retaining decisions within derivable horizons only, to mitigate the effect of irrational behaviors caused by unpredictable events. This framework employs wavelet-transformation-based log preprocessing with an effective loss computation approach, rendering the planning model only sensitive to valuable decisions at the current state. Since frequency domain characteristics are extracted in conjunction with time domain features by wavelets, decision information across various frequency bands within the corresponding time horizon can be suitably captured. Furthermore, to achieve valuable decision learning, this framework leverages a transformer-based decoder that incrementally generates the detailed profiles of future decisions over multiple steps. Our experiments demonstrate that our proposed method outperforms baselines in terms of driving scores with closed-loop evaluations on the nuPlan dataset.
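As a rough illustration of the wavelet-based log preprocessing idea described in this abstract, the sketch below decomposes a 1D logged profile with a plain Haar wavelet and zeroes the fine-scale detail coefficients that fall beyond a chosen fraction of the time axis. It is not the authors' implementation: the choice of the Haar wavelet, the helper names (`haar_decompose`, `haar_reconstruct`, `mask_details`), and the per-level `keep_fractions` are all assumptions made for this example.

```python
import math

SCALE = 1.0 / math.sqrt(2.0)  # orthonormal Haar scaling factor


def haar_decompose(signal, levels):
    """Return (coarsest approximation, details ordered finest to coarsest)."""
    if len(signal) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = list(signal), []
    for _ in range(levels):
        pairs = [(approx[i], approx[i + 1]) for i in range(0, len(approx), 2)]
        details.append([(a - b) * SCALE for a, b in pairs])  # local changes
        approx = [(a + b) * SCALE for a, b in pairs]          # local averages
    return approx, details


def haar_reconstruct(approx, details):
    """Invert haar_decompose, consuming details from coarsest to finest."""
    signal = list(approx)
    for detail in reversed(details):
        rebuilt = []
        for a, d in zip(signal, detail):
            rebuilt.extend([(a + d) * SCALE, (a - d) * SCALE])
        signal = rebuilt
    return signal


def mask_details(details, keep_fractions):
    """Zero detail coefficients beyond a per-level fraction of the time axis.

    keep_fractions is a hypothetical stand-in for a "decision scope": each
    level keeps only the coefficients in its leading (near-term) portion.
    """
    masked = []
    for detail, fraction in zip(details, keep_fractions):
        keep = int(len(detail) * fraction)
        masked.append(detail[:keep] + [0.0] * (len(detail) - keep))
    return masked


# Hypothetical logged speed profile (m/s) sampled at 8 future steps.
logged_speed = [5.0, 5.2, 5.1, 4.0, 2.0, 1.5, 3.0, 5.0]
approx, details = haar_decompose(logged_speed, levels=2)
# Keep fine detail only for the first half of the horizon, coarse detail everywhere.
training_target = haar_reconstruct(approx, mask_details(details, [0.5, 1.0]))
```

In this sketch the near-term samples of `training_target` match the log exactly, while the later samples retain only the coarser trend, which is one simple way to make a training target less sensitive to abrupt far-future events.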

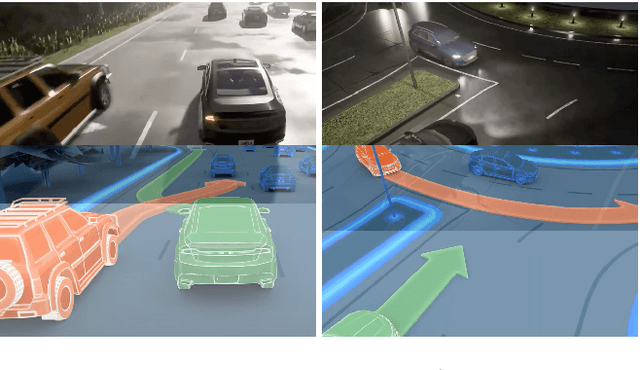

RiskMap: A Unified Driving Context Representation for Autonomous Motion Planning in Urban Driving Environment

Jun 06, 2024

Abstract:Planning is complicated by the combination of perception and map information, particularly when driving in heavy traffic. Developing an extendable and efficient representation that visualizes sensor noise and provides constraints to real-time planning tasks is desirable. We aim to develop an extendable map representation that offers a cost prior for planning tasks, simplifying the planning process in complex driving scenarios and visualizing sensor noise. In this paper, we illustrate a unified context representation empowered by a modern deep learning motion prediction model, representing statistical cognition of motion prediction for human beings. A sampling-based planner is adopted to train and compare the difference in risk map generation methods. The training tools and model structures are investigated, illustrating their efficiency in this task.

A Generic Trajectory Planning Method for Constrained All-Wheel-Steering Robots

Apr 15, 2024

Abstract:This paper presents a trajectory planning method for wheeled robots with fixed steering axes while the steering angle of each wheel is constrained. In the past, All-Wheel-Steering (AWS) robots, incorporating modes such as rotation-free translation maneuvers, in-situ rotational maneuvers, and proportional steering, exhibited inefficient performance due to time-consuming mode switches. This inefficiency arises from wheel rotation constraints and inter-wheel cooperation requirements. The direct application of a holonomic moving strategy can lead to significant slip angles or even structural failure. Additionally, the limited steering range of AWS wheeled robots exacerbates nonlinearity issues, thereby complicating control processes. To address these challenges, we developed a novel planning method termed Constrained AWS (C-AWS), which integrates second-order discrete search with predictive control techniques. Experimental results demonstrate that our method adeptly generates feasible and smooth trajectories for C-AWS while adhering to steering angle constraints.

Enhancing Campus Mobility: Achievements and Challenges of Autonomous Shuttle "Snow Lion"

Jan 17, 2024

Abstract:The rapid evolution of autonomous vehicles (AVs) has significantly influenced global transportation systems. In this context, we present "Snow Lion", an autonomous shuttle meticulously designed to revolutionize on-campus transportation, offering a safer and more efficient mobility solution for students, faculty, and visitors. The primary objective of this research is to enhance campus mobility by providing a reliable, efficient, and eco-friendly transportation solution that seamlessly integrates with existing infrastructure and meets the diverse needs of a university setting. To achieve this goal, we delve into the intricacies of the system design, encompassing sensing, perception, localization, planning, and control aspects. We evaluate the autonomous shuttle's performance in real-world scenarios, involving a 1146-kilometer road haul and the transportation of 442 passengers over a two-month period. These experiments demonstrate the effectiveness of our system and offer valuable insights into the intricate process of integrating an autonomous vehicle within campus shuttle operations. Furthermore, a thorough analysis of the lessons derived from this experience furnishes a valuable real-world case study, accompanied by recommendations for future research and development in the field of autonomous driving.

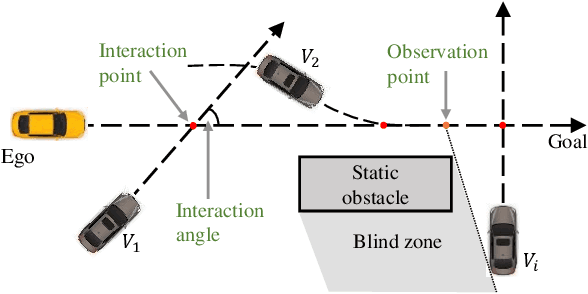

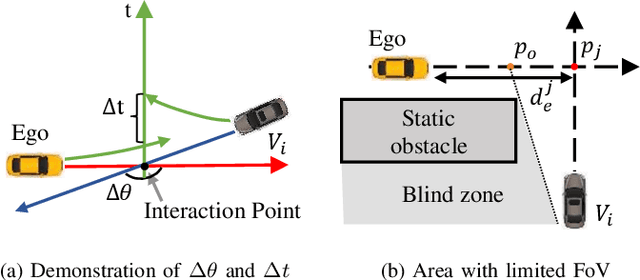

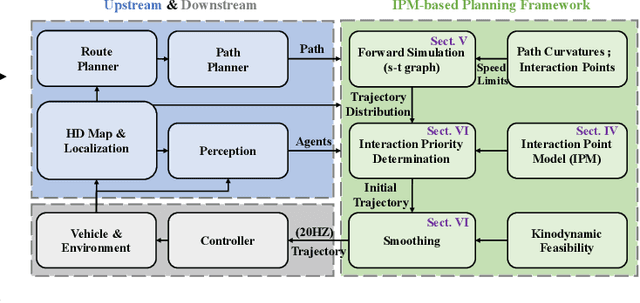

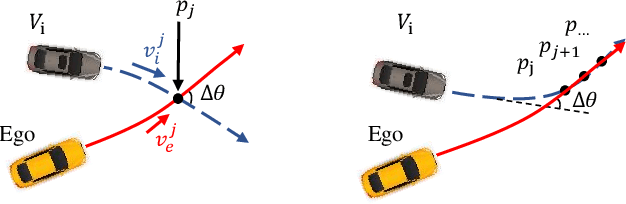

Efficient Speed Planning for Autonomous Driving in Dynamic Environment with Interaction Point Model

Sep 25, 2022

Abstract:Safely interacting with other traffic participants is one of the core requirements for autonomous driving, especially in intersections and occlusions. Most existing approaches are designed for particular scenarios and require significant human labor in parameter tuning to be applied to different situations. To solve this problem, we first propose a learning-based Interaction Point Model (IPM), which describes the interaction between agents with the protection time and interaction priority in a unified manner. We further integrate the proposed IPM into a novel planning framework, demonstrating its effectiveness and robustness through comprehensive simulations in highly dynamic environments.

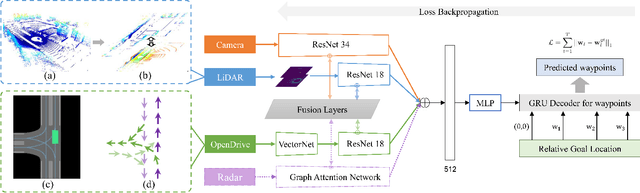

MMFN: Multi-Modal-Fusion-Net for End-to-End Driving

Jul 01, 2022

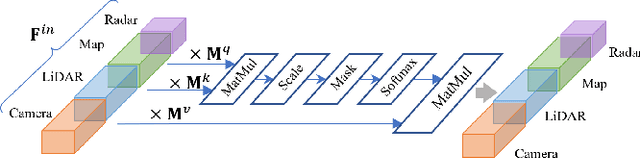

Abstract:Inspired by the fact that humans use diverse sensory organs to perceive the world, sensors with different modalities are deployed in end-to-end driving to obtain the global context of the 3D scene. In previous works, camera and LiDAR inputs are fused through transformers for better driving performance. These inputs are normally further interpreted as high-level map information to assist navigation tasks. Nevertheless, extracting useful information from the complex map input is challenging, for redundant information may mislead the agent and negatively affect driving performance. We propose a novel approach to efficiently extract features from vectorized High-Definition (HD) maps and utilize them in the end-to-end driving tasks. In addition, we design a new expert to further enhance the model performance by considering multi-road rules. Experimental results prove that both of the proposed improvements enable our agent to achieve superior performance compared with other methods.
