Yuanhang Li

MLV-Edit: Towards Consistent and Highly Efficient Editing for Minute-Level Videos

Feb 02, 2026

Abstract:We propose MLV-Edit, a training-free, flow-based framework that addresses the unique challenges of minute-level video editing. While existing techniques excel in short-form video manipulation, scaling them to long-duration videos remains challenging due to prohibitive computational overhead and the difficulty of maintaining global temporal consistency across thousands of frames. To address this, MLV-Edit employs a divide-and-conquer strategy for segment-wise editing, facilitated by two core modules: Velocity Blend rectifies motion inconsistencies at segment boundaries by aligning the flow fields of adjacent chunks, eliminating flickering and boundary artifacts commonly observed in fragmented video processing; and Attention Sink anchors local segment features to global reference frames, effectively suppressing cumulative structural drift. Extensive quantitative and qualitative experiments demonstrate that MLV-Edit consistently outperforms state-of-the-art methods in terms of temporal stability and semantic fidelity.
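The abstract does not spell out how Velocity Blend aligns the flow fields of adjacent chunks. As a rough illustration only, the sketch below assumes that neighbouring segments share a few overlapping frames and that their predicted velocities are cross-faded linearly across that overlap. The function name `blend_overlap`, the overlap assumption, the linear weights, and the use of one scalar per frame (instead of a full velocity tensor) are all hypothetical simplifications, not the paper's method.

```python
def blend_overlap(prev_tail, next_head):
    """Cross-fade per-frame velocity values over an assumed overlap region.

    prev_tail: velocities of the last k frames of the earlier segment
    next_head: velocities of the first k frames of the later segment
    Both lists are assumed to describe the same k video frames.
    """
    if len(prev_tail) != len(next_head):
        raise ValueError("overlap regions must have the same number of frames")
    k = len(prev_tail)
    blended = []
    for i, (v_prev, v_next) in enumerate(zip(prev_tail, next_head)):
        # The weight shifts gradually from the earlier segment to the later one.
        w = (i + 1) / (k + 1)
        blended.append((1.0 - w) * v_prev + w * v_next)
    return blended


print(blend_overlap([0.0, 0.0, 0.0], [4.0, 4.0, 4.0]))
```

A gradual weight avoids an abrupt switch between the two segments' predictions at the boundary, which is one plausible way to reduce the flicker the abstract mentions.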

IC-Effect: Precise and Efficient Video Effects Editing via In-Context Learning

Dec 17, 2025

Abstract:We propose IC-Effect, an instruction-guided, DiT-based framework for few-shot video VFX editing that synthesizes complex effects (e.g., flames, particles and cartoon characters) while strictly preserving spatial and temporal consistency. Video VFX editing is highly challenging because injected effects must blend seamlessly with the background, the background must remain entirely unchanged, and effect patterns must be learned efficiently from limited paired data. However, existing video editing models fail to satisfy these requirements. IC-Effect leverages the source video as clean contextual conditions, exploiting the contextual learning capability of DiT models to achieve precise background preservation and natural effect injection. A two-stage training strategy, consisting of general editing adaptation followed by effect-specific learning via Effect-LoRA, ensures strong instruction following and robust effect modeling. To further improve efficiency, we introduce spatiotemporal sparse tokenization, enabling high fidelity with substantially reduced computation. We also release a paired VFX editing dataset spanning 15 high-quality visual styles. Extensive experiments show that IC-Effect delivers high-quality, controllable, and temporally consistent VFX editing, opening new possibilities for video creation.

Real-Time Metric-Semantic Mapping for Autonomous Navigation in Outdoor Environments

Nov 30, 2024

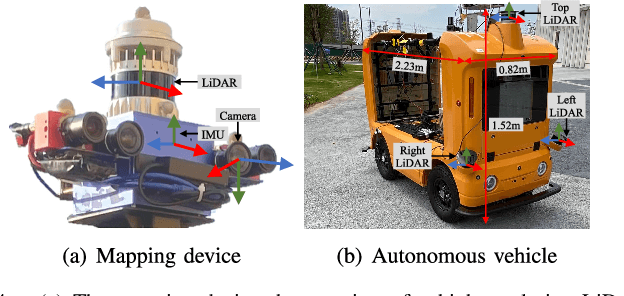

Abstract:The creation of a metric-semantic map, which encodes human-prior knowledge, represents a high-level abstraction of environments. However, constructing such a map poses challenges related to the fusion of multi-modal sensor data, the attainment of real-time mapping performance, and the preservation of structural and semantic information consistency. In this paper, we introduce an online metric-semantic mapping system that utilizes LiDAR-Visual-Inertial sensing to generate a global metric-semantic mesh map of large-scale outdoor environments. Leveraging GPU acceleration, our mapping process achieves exceptional speed, with frame processing taking less than 7ms, regardless of scenario scale. Furthermore, we seamlessly integrate the resultant map into a real-world navigation system, enabling metric-semantic-based terrain assessment and autonomous point-to-point navigation within a campus environment. Through extensive experiments conducted on both publicly available and self-collected datasets comprising 24 sequences, we demonstrate the effectiveness of our mapping and navigation methodologies. Code has been publicly released: https://github.com/gogojjh/cobra

MGCBS: An Optimal and Efficient Algorithm for Solving Multi-Goal Multi-Agent Path Finding Problem

Apr 30, 2024

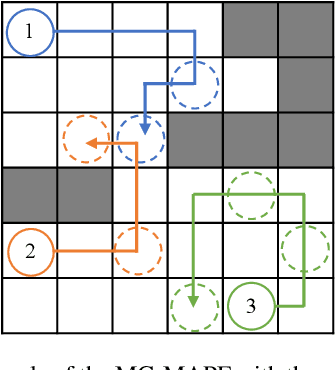

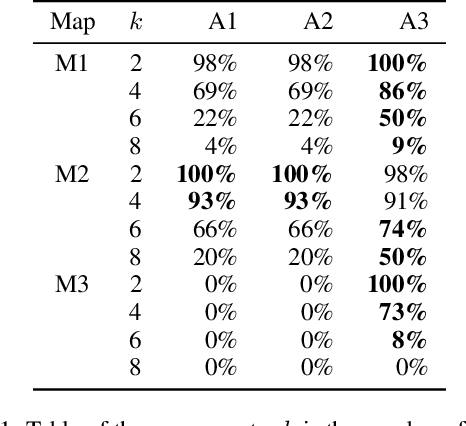

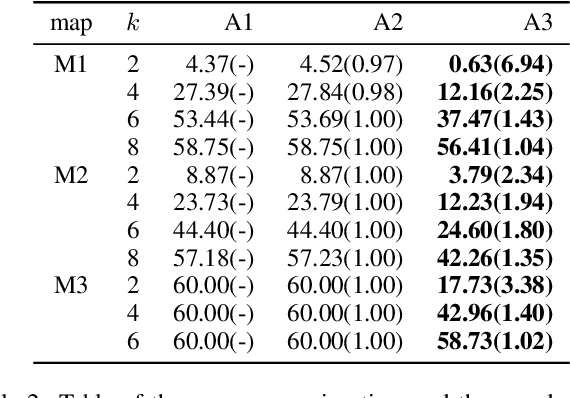

Abstract:With the expansion of the scale of robotics applications, the multi-goal multi-agent pathfinding (MG-MAPF) problem has begun to gain widespread attention. This problem requires each agent to visit multiple pre-assigned goal points at least once without conflict. Some previous methods have been proposed to solve the MG-MAPF problem based on Decoupling the goal Vertex visiting order search and the Single-agent pathfinding (DVS). However, this paper demonstrates that the methods based on DVS cannot always obtain the optimal solution. To obtain the optimal result, we propose the Multi-Goal Conflict-Based Search (MGCBS), which is based on Decoupling the goal Safe interval visiting order search and the Single-agent pathfinding (DSS). Additionally, we present the Time-Interval-Space Forest (TIS Forest) to enhance the efficiency of MGCBS by maintaining the shortest paths from any start point at any start time step to each safe interval at the goal points. The experiment demonstrates that our method can consistently obtain optimal results and execute up to 7 times faster than the state-of-the-art method in our evaluation.
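The safe intervals mentioned above come from safe interval path planning: a safe interval at a vertex is a maximal run of consecutive time steps during which no other agent occupies it. The sketch below computes such intervals for a single vertex. It is not the TIS Forest or any part of MGCBS; the function name `safe_intervals` and the finite `horizon` are assumptions made for illustration (in practice the last interval is usually treated as unbounded).

```python
def safe_intervals(blocked_steps, horizon):
    """Return maximal collision-free intervals (start, end), inclusive, in 0..horizon.

    blocked_steps: time steps at which another agent occupies the vertex
    horizon: last time step considered (an assumption of this sketch)
    """
    blocked = set(blocked_steps)
    intervals = []
    start = None
    for t in range(horizon + 1):
        if t in blocked:
            # A blocked step closes the interval that was open, if any.
            if start is not None:
                intervals.append((start, t - 1))
                start = None
        elif start is None:
            # First free step after a blocked one opens a new interval.
            start = t
    if start is not None:
        intervals.append((start, horizon))
    return intervals


print(safe_intervals([2, 3, 7], horizon=9))
```

Searching over intervals instead of individual time steps keeps the state space small, since an agent waiting inside one interval does not need a separate state per step.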

An Efficient Approach to the Online Multi-Agent Path Finding Problem by Using Sustainable Information

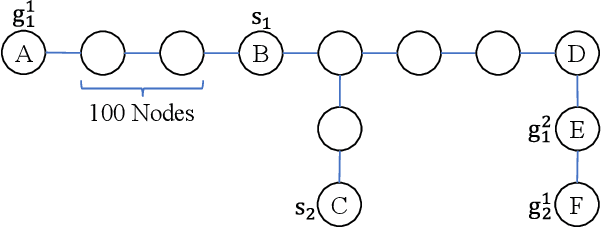

Jan 11, 2023

Abstract:Multi-agent path finding (MAPF) is the problem of moving agents to their goal vertices without collision. In the online MAPF problem, new agents may be added to the environment at any time, and the current agents have no information about future agents. The inability of existing online methods to reuse previous planning contexts results in redundant computation and reduces algorithm efficiency. Hence, we propose a three-level approach to solve online MAPF utilizing sustainable information, which can decrease its redundant calculations. The high-level solver, the Sustainable Replan algorithm (SR), manages the planning context and simulates the environment. The middle-level solver, the Sustainable Conflict-Based Search algorithm (SCBS), builds a conflict tree and maintains the planning context. The low-level solver, the Sustainable Reverse Safe Interval Path Planning algorithm (SRSIPP), is an efficient single-agent solver that uses previous planning context to reduce duplicate calculations. Experiments show that our proposed method significantly improves computational efficiency. In one of the test scenarios, our algorithm can be 1.48 times faster than SOTA on average under different agent number settings.
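Conflict-based search repeatedly checks a set of single-agent paths for collisions and branches on the first one found. The sketch below shows only that generic check for two agents, covering vertex conflicts (same vertex at the same time step) and edge conflicts (two agents swapping vertices). It is not SCBS itself; the function name `first_conflict` and the assumption that a finished agent waits at its last vertex are illustrative choices.

```python
def first_conflict(path_a, path_b):
    """Return the first vertex or edge conflict between two paths, or None.

    Each path is a list of vertices indexed by time step. An agent that has
    reached the end of its path is assumed to wait at its last vertex.
    """
    def at(path, t):
        return path[min(t, len(path) - 1)]

    for t in range(max(len(path_a), len(path_b))):
        # Vertex conflict: both agents occupy the same vertex at time t.
        if at(path_a, t) == at(path_b, t):
            return ("vertex", t, at(path_a, t))
        # Edge conflict: the agents swap vertices between t - 1 and t.
        if (t > 0
                and at(path_a, t - 1) == at(path_b, t)
                and at(path_a, t) == at(path_b, t - 1)):
            return ("edge", t, (at(path_a, t - 1), at(path_a, t)))
    return None


print(first_conflict(["A", "B", "C"], ["C", "B", "A"]))
print(first_conflict(["A", "B"], ["B", "A"]))
```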

Visual Subtitle Feature Enhanced Video Outline Generation

Sep 01, 2022

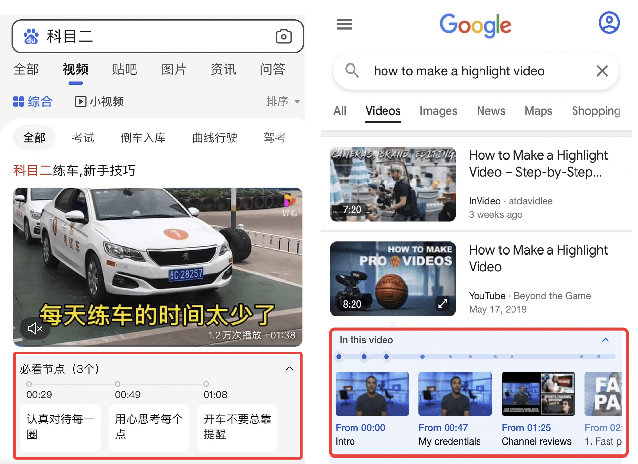

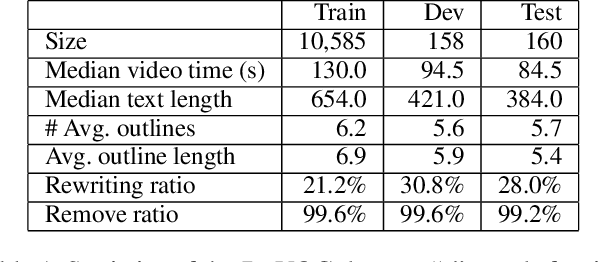

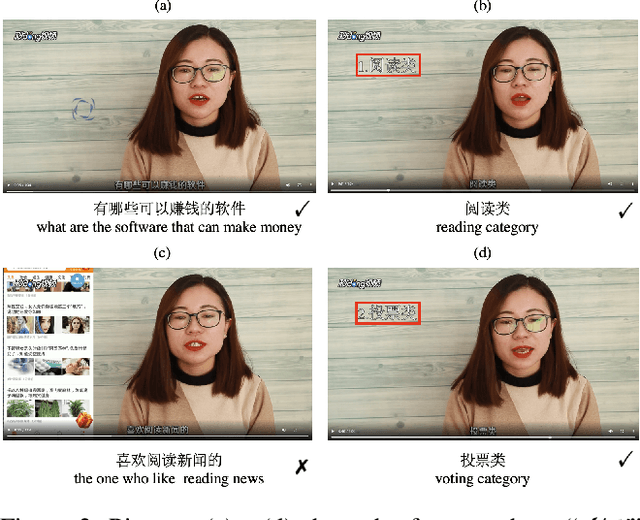

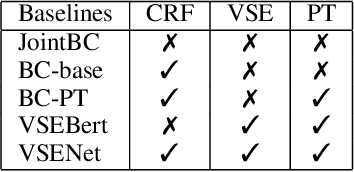

Abstract:With the rapidly increasing number of videos, there is a great demand for techniques that help people quickly navigate to the video segments they are interested in. However, current works on video understanding mainly focus on video content summarization, while little effort has been made to explore the structure of a video. Inspired by textual outline generation, we introduce a novel video understanding task, namely video outline generation (VOG). This task is defined to contain two sub-tasks: (1) segmenting the video according to the content structure and (2) generating a heading for each segment. To learn and evaluate VOG, we annotate a 10k+ dataset, called DuVOG. Specifically, we use OCR tools to recognize subtitles of videos. Then annotators are asked to divide subtitles into chapters and title each chapter. In videos, highlighted text tends to be the headline since it is more likely to attract attention. Therefore we propose a Visual Subtitle feature Enhanced video outline generation model (VSENet) which takes as input the textual subtitles together with their visual font sizes and positions. We consider the VOG task as a sequence tagging problem that extracts spans where the headings are located and then rewrites them to form the final outlines. Furthermore, based on the similarity between video outlines and textual outlines, we use a large number of articles with chapter headings to pretrain our model. Experiments on DuVOG show that our model largely outperforms other baseline methods, achieving an F1-score of 77.1 at the video segmentation level and a ROUGE-L_F0.5 of 85.0 at the headline generation level.
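Framing heading extraction as sequence tagging means that each subtitle token receives a label and headings are read off as contiguous labelled runs. The sketch below decodes such runs from a tag sequence. The B/I/O tag scheme and the function name `extract_heading_spans` are assumptions for illustration; the abstract does not state which tag set VSENet uses.

```python
def extract_heading_spans(tags):
    """Return (start, end) token index pairs, end exclusive, for each tagged run.

    tags: one label per token, where "B" begins a heading span, "I" continues
    it, and any other label is treated as outside a heading.
    """
    spans = []
    start = None
    for i, tag in enumerate(tags):
        if tag == "B":
            # A new B closes any span that is still open.
            if start is not None:
                spans.append((start, i))
            start = i
        elif tag == "I":
            # Tolerate an I without a preceding B by opening a span here.
            if start is None:
                start = i
        else:
            if start is not None:
                spans.append((start, i))
                start = None
    if start is not None:
        spans.append((start, len(tags)))
    return spans


print(extract_heading_spans(["O", "B", "I", "O", "B", "B", "I"]))
```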
