Hexiang Wei

Are We Ready for Planetary Exploration Robots? The TAIL-Plus Dataset for SLAM in Granular Environments

Apr 21, 2024

Abstract: To date, planetary surface exploration has depended on various mobile robot platforms. The autonomous navigation and decision-making of these mobile robots in complex terrains largely rely on their terrain-aware perception, localization and mapping capabilities. In this paper we release the TAIL-Plus dataset, a new challenging dataset in deformable granular environments for planetary exploration robots, which is an extension of our previous work, the TAIL (Terrain-Aware multI-modaL) dataset. We conducted field experiments on beaches, which are considered planetary surface analog environments for diverse sandy terrains. In the TAIL-Plus dataset, we provide more sequences with multiple loops and extend the scenes from day to night. Benefiting from the modular design of our sensor suite, we use both wheeled and quadruped robots for data collection. The sensors include a 3D LiDAR, three downward RGB-D cameras, a pair of global-shutter color cameras that can be used as a forward-looking stereo camera, an RTK-GPS device and an extra IMU. Our datasets are intended to help researchers develop multi-sensor simultaneous localization and mapping (SLAM) algorithms for robots in unstructured, deformable granular terrains. Our datasets and supplementary materials will be available at https://tailrobot.github.io/.

FusionPortableV2: A Unified Multi-Sensor Dataset for Generalized SLAM Across Diverse Platforms and Scalable Environments

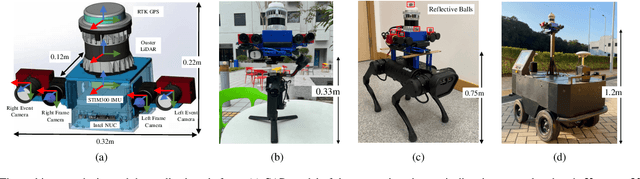

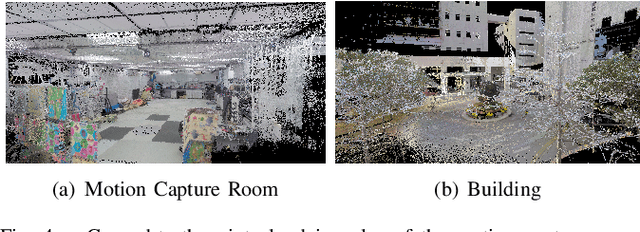

Apr 12, 2024

Abstract: Simultaneous Localization and Mapping (SLAM) technology has been widely applied in various robotic scenarios, from rescue operations to autonomous driving. However, the generalization of SLAM algorithms remains a significant challenge, as current datasets often lack scalability in terms of platforms and environments. To address this limitation, we present FusionPortableV2, a multi-sensor SLAM dataset featuring notable sensor diversity, varied motion patterns, and a wide range of environmental scenarios. Our dataset comprises 27 sequences, spanning over 2.5 hours and collected from four distinct platforms: a handheld suite, wheeled and legged robots, and vehicles. These sequences cover diverse settings, including buildings, campuses, and urban areas, with a total length of 38.7 km. Additionally, the dataset includes ground-truth (GT) trajectories and RGB point cloud maps covering approximately 0.3 km². To validate the utility of our dataset in advancing SLAM research, we assess several state-of-the-art (SOTA) SLAM algorithms. Furthermore, we demonstrate the dataset's broad applicability beyond traditional SLAM tasks by investigating its potential for monocular depth estimation. The complete dataset, including sensor data, GT, and calibration details, is accessible at https://fusionportable.github.io/dataset/fusionportable_v2.

TAIL: A Terrain-Aware Multi-Modal SLAM Dataset for Robot Locomotion in Deformable Granular Environments

Mar 25, 2024

Abstract: Terrain-aware perception holds the potential to improve the robustness and accuracy of autonomous robot navigation in the wild, thereby facilitating effective off-road traversals. However, the lack of multi-modal perception across various motion patterns hinders Simultaneous Localization and Mapping (SLAM) solutions, especially when confronting non-geometric hazards in demanding landscapes. In this paper, we first propose a Terrain-Aware multI-modaL (TAIL) dataset tailored to deformable and sandy terrains. It incorporates various types of robotic proprioception and distinct ground interactions for the unique challenges and benchmarking of multi-sensor fusion SLAM. The versatile sensor suite comprises stereo frame cameras, multiple ground-pointing RGB-D cameras, a rotating 3D LiDAR, an IMU, and an RTK device. This ensemble is hardware-synchronized, well-calibrated, and self-contained. Utilizing both wheeled and quadrupedal locomotion, we efficiently collect comprehensive sequences to capture rich unstructured scenarios. It spans the spectrum of scope, terrain interactions, scene changes, ground-level properties, and dynamic robot characteristics. We benchmark several state-of-the-art SLAM methods against ground truth and provide performance validations. Corresponding challenges and limitations are also reported. All associated resources are accessible upon request at https://tailrobot.github.io/.

PALoc: Advancing SLAM Benchmarking with Prior-Assisted 6-DoF Trajectory Generation and Uncertainty Estimation

Feb 06, 2024

Abstract: Accurately generating ground truth (GT) trajectories is essential for Simultaneous Localization and Mapping (SLAM) evaluation, particularly under varying environmental conditions. This study introduces a systematic approach employing a prior map-assisted framework for generating dense six-degree-of-freedom (6-DoF) GT poses for the first time, enhancing the fidelity of both indoor and outdoor SLAM datasets. Our method excels in handling degenerate and stationary conditions frequently encountered in SLAM datasets, thereby increasing robustness and precision. A significant aspect of our approach is the detailed derivation of covariances within the factor graph, enabling an in-depth analysis of pose uncertainty propagation. This analysis crucially contributes to demonstrating specific pose uncertainties and enhancing trajectory reliability from both theoretical and empirical perspectives. Additionally, we provide an open-source toolbox (https://github.com/JokerJohn/Cloud_Map_Evaluation) for map evaluation criteria, facilitating the indirect assessment of overall trajectory precision. Experimental results show at least a 30% improvement in map accuracy and a 20% increase in direct trajectory accuracy compared to the Iterative Closest Point (ICP) \cite{sharp2002icp} algorithm across diverse campus environments, with substantially enhanced robustness. Our open-source solution (https://github.com/JokerJohn/PALoc), extensively applied in the FusionPortable \cite{Jiao2022Mar} dataset, is geared towards SLAM benchmark dataset augmentation and represents a significant advancement in SLAM evaluations.

LCE-Calib: Automatic LiDAR-Frame/Event Camera Extrinsic Calibration With A Globally Optimal Solution

Mar 17, 2023

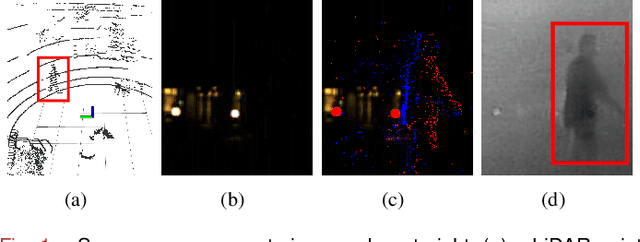

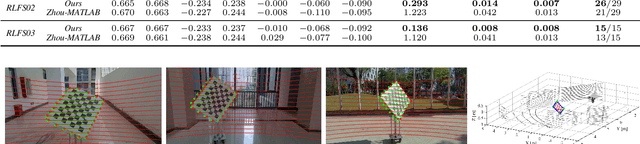

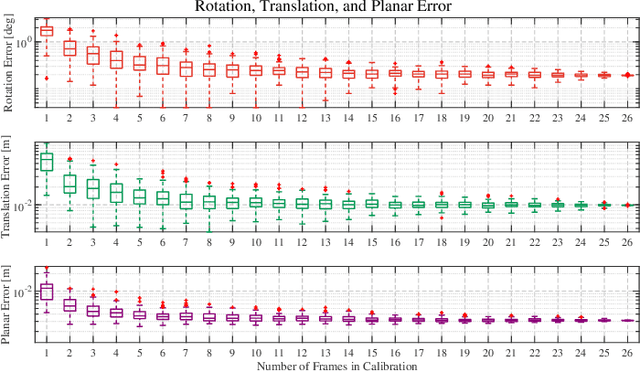

Abstract: The combination of LiDARs and cameras enables a mobile robot to perceive environments with multi-modal data, becoming a key factor in achieving robust perception. Traditional frame cameras are sensitive to changing illumination conditions, motivating us to introduce novel event cameras to make LiDAR-camera fusion more complete and robust. However, to jointly exploit these sensors, the challenging extrinsic calibration problem should be addressed. This paper proposes an automatic checkerboard-based approach to calibrate extrinsics between a LiDAR and a frame/event camera, where four contributions are presented. Firstly, we present an automatic feature extraction and checkerboard tracking method from LiDAR's point clouds. Secondly, we reconstruct realistic frame images from event streams, applying traditional corner detectors to event cameras. Thirdly, we propose an initialization-refinement procedure to estimate extrinsics using point-to-plane and point-to-line constraints in a coarse-to-fine manner. Fourthly, we introduce a unified and globally optimal solution to address two optimization problems in calibration. Our approach has been validated with extensive experiments on 19 simulated and real-world datasets and outperforms the state-of-the-art.

FusionPortable: A Multi-Sensor Campus-Scene Dataset for Evaluation of Localization and Mapping Accuracy on Diverse Platforms

Aug 25, 2022

Abstract: Combining multiple sensors enables a robot to maximize its perceptual awareness of environments and enhance its robustness to external disturbance, which is crucial to robotic navigation. This paper proposes the FusionPortable benchmark, a complete multi-sensor dataset with a diverse set of sequences for mobile robots. This paper presents three contributions. We first advance a portable and versatile multi-sensor suite that offers rich sensory measurements: 10Hz LiDAR point clouds, 20Hz stereo frame images, high-rate and asynchronous events from stereo event cameras, 200Hz inertial readings from an IMU, and 10Hz GPS signal. Sensors are already temporally synchronized in hardware. This device is lightweight, self-contained, and has plug-and-play support for mobile robots. Second, we construct a dataset by collecting 17 sequences that cover a variety of environments on the campus by exploiting multiple robot platforms for data collection. Some sequences are challenging to existing SLAM algorithms. Third, we provide ground truth for decoupled evaluation of localization and mapping performance. We additionally evaluate state-of-the-art SLAM approaches and identify their limitations. The dataset, consisting of raw sensor measurements, ground truth, calibration data, and evaluated algorithms, will be released: https://ram-lab.com/file/site/multi-sensor-dataset.
