Zhenzhong Jia

Ubiquitous Field Transportation Robots with Robust Wheel-Leg Transformable Modules

Oct 24, 2024

Abstract:This paper introduces two field transportation robots. Both robots are equipped with transformable wheel-leg modules, which can smoothly switch between operation modes and can work in various challenging terrains. SWhegPro, with six S-shaped legs, enables transporting loads in challenging uneven outdoor terrains. SWhegPro3, featuring four three-impeller wheels, has surprising stair-climbing performance in indoor scenarios. Different from ordinary gear-driven transformable mechanisms, the modular wheels we designed, driven by self-locking electric push rods, can switch modes accurately and stably under high loads, significantly improving the load capacity of the robot in leg mode. This study analyzes the robot's wheel-leg module operation when the terrain parameters change. Through the derivation of mathematical models and calculations based on simplified kinematic models, a method for optimizing the robot parameters and wheel-leg structure parameters is finally proposed. The design and control strategy are then verified through simulations and field experiments in various complex terrains, and the working performance of the two field transportation robots is calculated and analyzed by recording sensor data and proposing evaluation methods.

Are We Ready for Planetary Exploration Robots? The TAIL-Plus Dataset for SLAM in Granular Environments

Apr 21, 2024

Abstract:So far, planetary surface exploration depends on various mobile robot platforms. The autonomous navigation and decision-making of these mobile robots in complex terrains largely rely on their terrain-aware perception, localization and mapping capabilities. In this paper we release the TAIL-Plus dataset, a new challenging dataset in deformable granular environments for planetary exploration robots, which is an extension to our previous work, the TAIL (Terrain-Aware multI-modaL) dataset. We conducted field experiments on beaches that are considered planetary surface analog environments for diverse sandy terrains. In the TAIL-Plus dataset, we provide more sequences with multiple loops and expand the scene from day to night. Benefiting from the modular design of our sensor suite, we use both wheeled and quadruped robots for data collection. The sensors include a 3D LiDAR, three downward RGB-D cameras, a pair of global-shutter color cameras that can be used as a forward-looking stereo camera, an RTK-GPS device and an extra IMU. Our datasets are intended to help researchers develop multi-sensor simultaneous localization and mapping (SLAM) algorithms for robots in unstructured, deformable granular terrains. Our datasets and supplementary materials will be available at https://tailrobot.github.io/.

TAIL: A Terrain-Aware Multi-Modal SLAM Dataset for Robot Locomotion in Deformable Granular Environments

Mar 25, 2024

Abstract:Terrain-aware perception holds the potential to improve the robustness and accuracy of autonomous robot navigation in the wild, thereby facilitating effective off-road traversals. However, the lack of multi-modal perception across various motion patterns hinders the solutions of Simultaneous Localization And Mapping (SLAM), especially when confronting non-geometric hazards in demanding landscapes. In this paper, we first propose a Terrain-Aware multI-modaL (TAIL) dataset tailored to deformable and sandy terrains. It incorporates various types of robotic proprioception and distinct ground interactions for the unique challenges and benchmark of multi-sensor fusion SLAM. The versatile sensor suite comprises stereo frame cameras, multiple ground-pointing RGB-D cameras, a rotating 3D LiDAR, an IMU, and an RTK device. This ensemble is hardware-synchronized, well-calibrated, and self-contained. Utilizing both wheeled and quadrupedal locomotion, we efficiently collect comprehensive sequences to capture rich unstructured scenarios. It spans the spectrum of scope, terrain interactions, scene changes, ground-level properties, and dynamic robot characteristics. We benchmark several state-of-the-art SLAM methods against ground truth and provide performance validations. Corresponding challenges and limitations are also reported. All associated resources are accessible upon request at https://tailrobot.github.io/.

SWheg: A Wheel-Leg Transformable Robot With Minimalist Actuator Realization

Oct 27, 2022

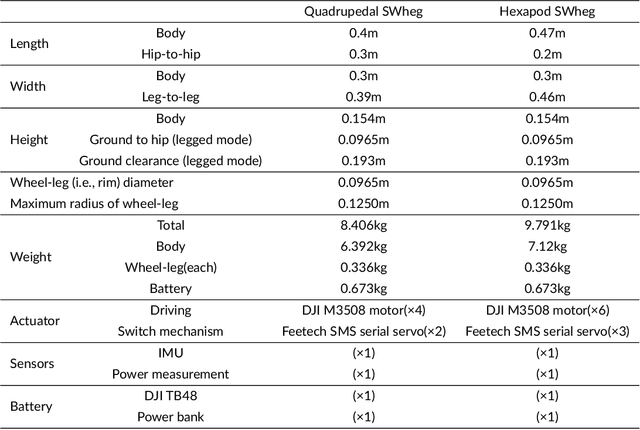

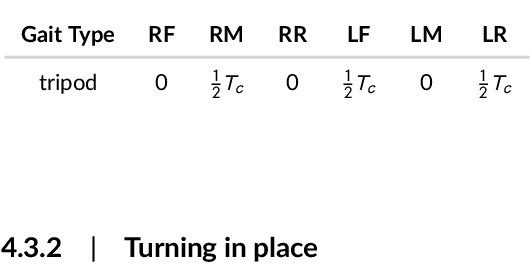

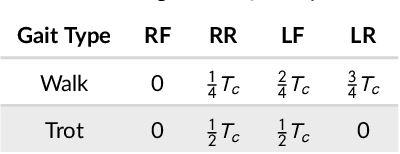

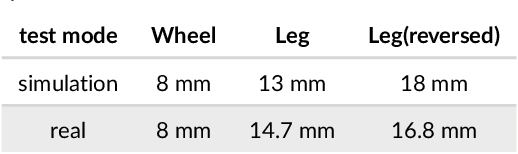

Abstract:This article presents the design, implementation, and performance evaluation of SWheg, a novel modular wheel-leg transformable robot family with minimalist actuator realization. SWheg takes advantage of both wheeled and legged locomotion by seamlessly integrating them on a single platform. In contrast to other designs that use multiple actuators, SWheg uses only one actuator to drive the transformation of all the wheel-leg modules in sync. This means an N-legged SWheg robot requires only N+1 actuators, which can significantly reduce the cost and malfunction rate of the platform. The tendon-driven wheel-leg transformation mechanism based on a four-bar linkage can perform fast morphology transitions between wheels and legs. We validated the design principle with two SWheg robots with four and six wheel-leg modules separately, namely Quadrupedal SWheg and Hexapod SWheg. The design process, mechatronics infrastructure, and the gait behavioral development of both platforms were discussed. The performance of the robot was evaluated in various scenarios, including driving and turning in wheeled mode, step crossing, irregular terrain passing, and stair climbing in legged mode. The comparison between these two platforms was also discussed.

Predict the Rover Mobility over Soft Terrain using Articulated Wheeled Bevameter

Feb 17, 2022

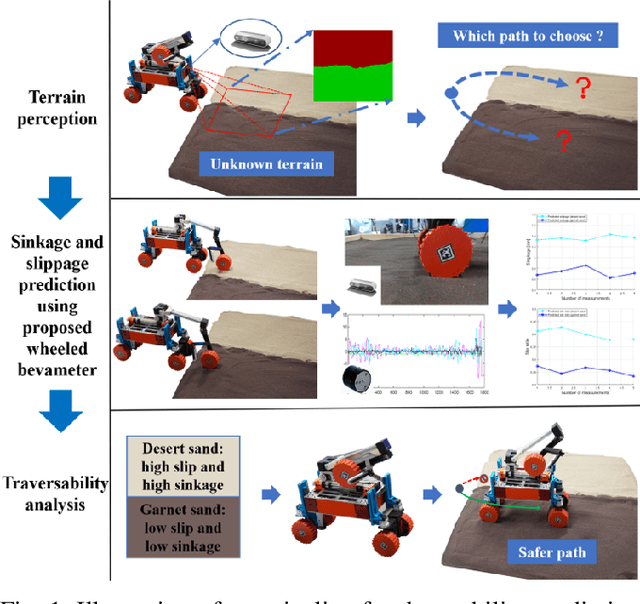

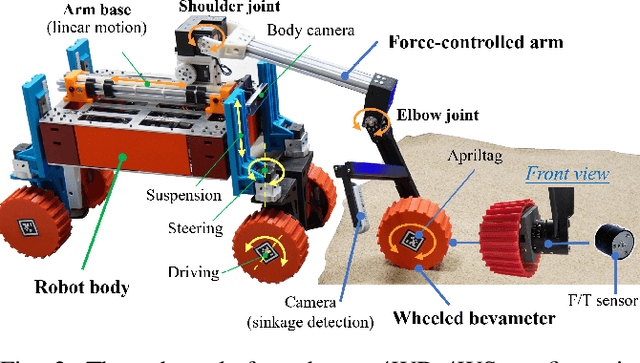

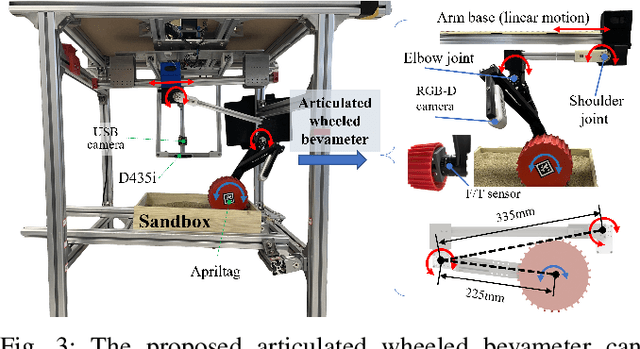

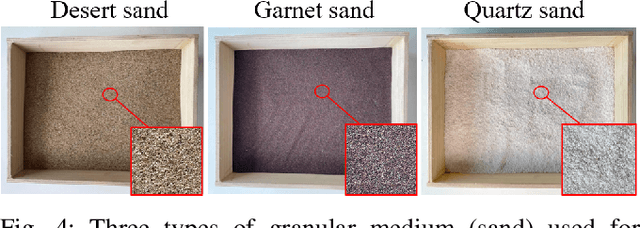

Abstract:Robot mobility is critical for mission success, especially in soft or deformable terrains, where the complex wheel-soil interaction mechanics often lead to excessive wheel slip and sinkage, eventually causing mission failure. To improve the success rate, online mobility prediction using vision, infrared imaging, or model-based stochastic methods has been used in the literature. This paper proposes an on-board mobility prediction approach using an articulated wheeled bevameter that consists of a force-controlled arm and an instrumented bevameter (with force and vision sensors) as its end-effector. The proposed bevameter, which emulates traditional terramechanics tests such as pressure-sinkage and shear experiments, can measure contact parameters ahead of the rover's body in real time, and predict the slip and sinkage of supporting wheels over the probed region. Based on the predicted mobility, the rover can select a safer path in order to avoid dangerous regions such as those covered with quicksand. Compared to the literature, our proposed method can avoid the complicated terramechanics modeling and time-consuming stochastic prediction; it can also mitigate the inaccuracy issues arising in non-contact vision-based methods. We also conduct multiple experiments to validate the proposed approach.
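For readers unfamiliar with the pressure-sinkage test mentioned in the abstract, the following is a minimal sketch of the classical Bekker relation, p = (k_c / b + k_phi) * z^n, which such tests are traditionally used to fit. It is a textbook model shown for orientation only, not the paper's prediction method; the function names and the soil parameter values are hypothetical.

```python
def bekker_pressure(z, b, k_c, k_phi, n):
    """Normal pressure (Pa) under a plate of width b (m) at sinkage z (m).

    k_c and k_phi are the cohesive and frictional moduli of the soil,
    and n is the sinkage exponent (classical Bekker model).
    """
    return (k_c / b + k_phi) * z ** n


def bekker_sinkage(p, b, k_c, k_phi, n):
    """Invert the Bekker relation: sinkage (m) at which the soil supports pressure p (Pa)."""
    return (p / (k_c / b + k_phi)) ** (1.0 / n)


if __name__ == "__main__":
    # Illustrative (hypothetical) soil parameters, not taken from the paper.
    params = dict(b=0.1, k_c=1.0e3, k_phi=1.5e6, n=1.1)
    z = bekker_sinkage(5.0e3, **params)
    print(f"sinkage at 5 kPa: {z * 1000:.2f} mm")
```

Fitting k_c, k_phi, and n normally requires plate tests at two or more widths, which is part of the modeling effort the abstract says the proposed approach avoids.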

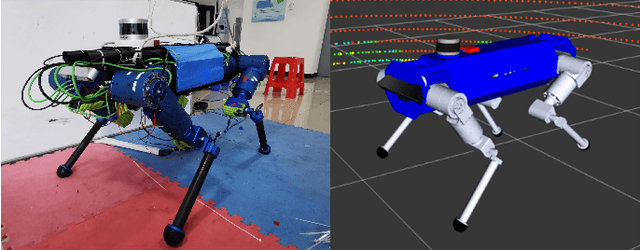

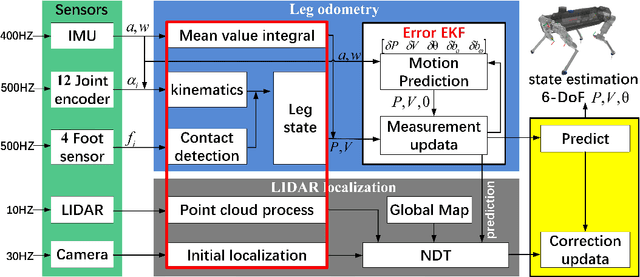

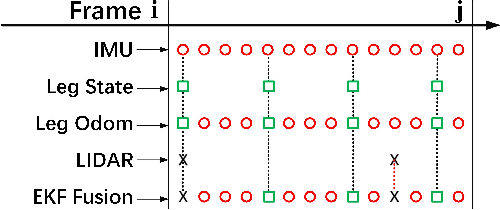

Multi-Sensor State Estimation Fusion on Quadruped Robot Locomotion

Jul 06, 2020

Abstract:In this paper, we present an effective state estimation algorithm that combines information from various sensors (inertial measurement unit, joint encoders, camera, and LIDAR) to balance their different frequencies and accuracies for the locomotion of our quadruped robot StarDog. Of specific interest is the approach to preprocessing a series of sensor measurements, which yields robust data and improves system performance in terms of time and accuracy. In the IMU-centric leg odometry, which fuses inertial and kinematic data, the detection of foot contact state and lateral slip is considered to further improve the accuracy of the robot's base-link position and velocity. Before using NDT registration to align the point clouds, we preprocess the data and obtain an initial localization in the map, as the registration requires. A modular filter is also proposed that fuses the leg odometry, based on the inertial-kinematic state estimator of the quadruped robot, with the LIDAR-based localization method. The algorithm has been tested on experimental data collected in an indoor building, with a motion capture system used to evaluate the performance. The experimental results demonstrate that low error and high frequency are feasible for our quadruped robot system.

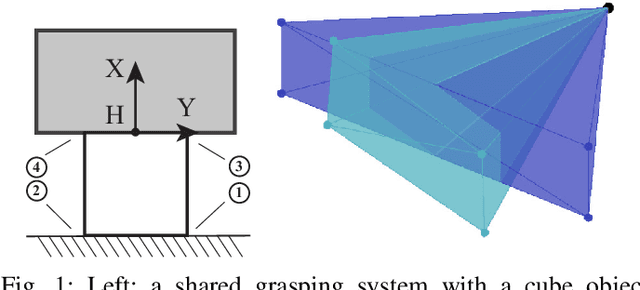

Manipulation with Shared Grasping

Jun 04, 2020

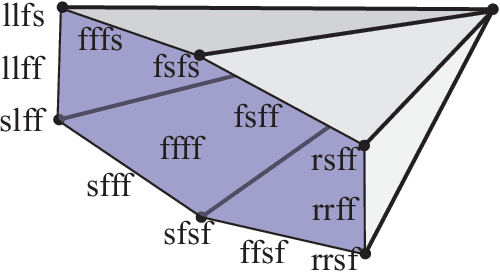

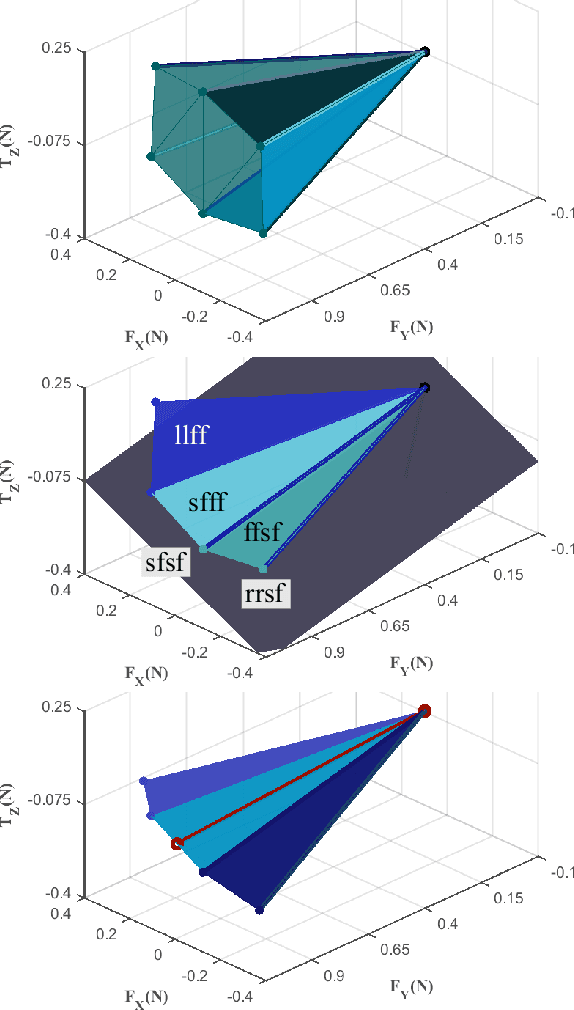

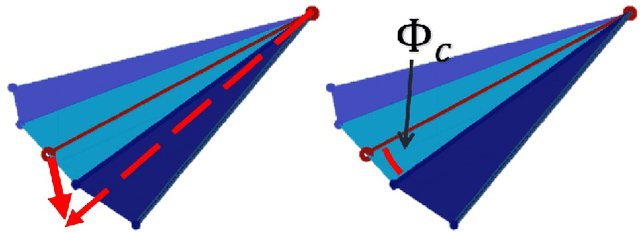

Abstract:A shared grasp is a grasp formed by contacts between the manipulated object and both the robot hand and the environment. By trading off hand contacts for environmental contacts, a shared grasp requires fewer contacts with the hand, and enables manipulation even when a full grasp is not possible. Previous research has used shared grasps for non-prehensile manipulation such as pivoting and tumbling. This paper treats the problem more generally, with methods to select the best shared grasp and robot actions for a desired object motion. The central issue is to evaluate the feasible contact modes: for each contact, whether that contact will remain active, and whether slip will occur. Robustness is important. When a contact mode fails, e.g., when a contact is lost, or when unintentional slip occurs, the operation will fail, and in some cases damage may occur. In this work, we enumerate all feasible contact modes, calculate corresponding controls, and select the most robust candidate. We can also optimize the contact geometry for robustness. This paper employs quasi-static analysis of planar rigid bodies with Coulomb friction to derive the algorithms and controls. Finally, we demonstrate the robustness of shared grasping and the use of our methods in representative experiments and examples. The video can be found at https://youtu.be/tyNhJvRYZNk
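As a rough illustration of what enumerating contact modes involves, the sketch below lists every combination of per-contact states for planar point contacts and checks whether a given contact force is consistent with a state under Coulomb friction. It only generates candidates; the feasibility filtering, control computation, and robustness ranking described in the abstract are not implemented. The state labels, function names, and the sign convention (tangential force on the object, positive to the right) are assumptions made for this example.

```python
from itertools import product

# Per-contact states for a planar point contact (labels are illustrative).
CONTACT_STATES = ("separate", "stick", "slide_left", "slide_right")


def enumerate_contact_modes(num_contacts):
    """Return every combination of per-contact states (4**num_contacts tuples)."""
    return list(product(CONTACT_STATES, repeat=num_contacts))


def coulomb_state_consistent(state, f_n, f_t, mu, tol=1e-9):
    """Check a contact force against a contact state under Coulomb friction.

    f_n is the normal force on the object (non-negative when pushing),
    f_t the tangential force on the object (assumed positive to the right),
    and mu the friction coefficient.
    """
    if state == "separate":
        # A broken contact transmits no force.
        return abs(f_n) <= tol and abs(f_t) <= tol
    if f_n < -tol:
        # Contacts can push but not pull.
        return False
    if state == "stick":
        # Force lies inside or on the friction cone.
        return abs(f_t) <= mu * f_n + tol
    # Sliding: force lies on the cone boundary and opposes the motion.
    expected = mu * f_n if state == "slide_left" else -mu * f_n
    return abs(f_t - expected) <= tol


if __name__ == "__main__":
    modes = enumerate_contact_modes(2)
    print(len(modes), "candidate modes for two contacts")
```

The candidate count grows as 4 to the power of the number of contacts, which is why pruning infeasible modes matters before any controls are computed.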

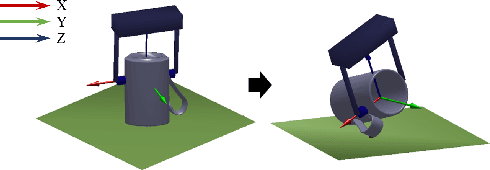

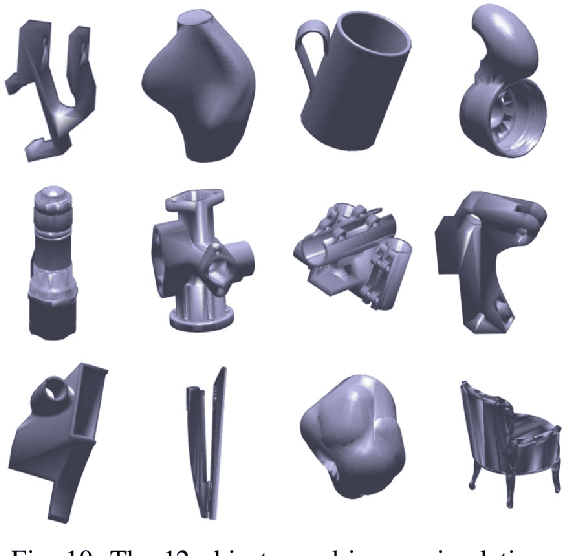

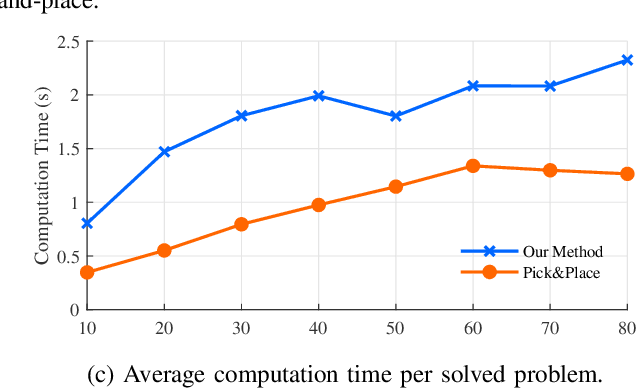

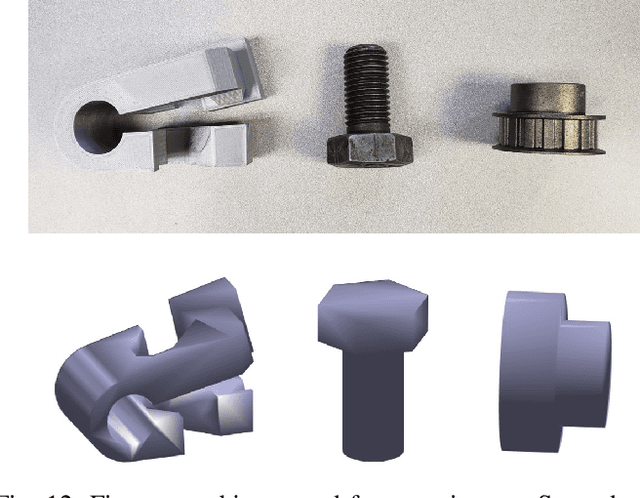

Reorienting Objects in 3D Space Using Pivoting

Dec 05, 2019

Abstract:We consider the problem of reorienting a rigid object with arbitrary known shape on a table using a two-finger pinch gripper. The reorienting problem is challenging because of its non-smoothness and high dimensionality. In this work, we focus on solving reorienting using pivoting, in which we allow the grasped object to rotate between fingers. Pivoting decouples the gripper rotation from the object motion, making it possible to reorient an object under strict robot workspace constraints. We provide a detailed mechanical analysis of the 3D pivoting motion on a table, which leads to simple geometric conditions for its stability. To solve reorienting problems, we introduce two motion primitives, pivot-on-support and roll-on-support, and provide an efficient hierarchical motion planning algorithm that uses the two motion primitives to solve for the gripper motions that reorient an object between arbitrary poses. To handle the uncertainties in modeling and perception, we make conservative plans that work in the worst case, and propose a robust control strategy for executing the motion plan. Finally, we discuss the mechanical requirements on the robot and provide a "two-phase" gripper design to implement both pivoting grasp and firm grasp. We demonstrate the effectiveness of our method in simulations and multiple experiments. Compared to traditional pick-and-place based methods, our algorithm can solve more reorienting problems while making and breaking fewer contacts.

Adversary A3C for Robust Reinforcement Learning

Dec 01, 2019

Abstract:Asynchronous Advantage Actor Critic (A3C) is an effective Reinforcement Learning (RL) algorithm for a wide range of tasks, such as Atari games and robot control. The agent learns policies and a value function through trial-and-error interactions with the environment until converging to an optimal policy. Robustness and stability are critical in RL; however, neural networks can be vulnerable to noise from unexpected sources and are not likely to withstand even very slight disturbances. We note that agents generated from mild environments using A3C are not able to handle challenging environments. Learning from adversarial examples, we propose an algorithm called Adversary Robust A3C (AR-A3C) to improve the agent's performance under noisy environments. In this algorithm, an adversarial agent is introduced to the learning process to make it more robust against adversarial disturbances, thereby making it more adaptive to noisy environments. Both simulations and real-world experiments are carried out to illustrate the stability of the proposed algorithm. The AR-A3C algorithm outperforms A3C in both clean and noisy environments.

Deep adaptive dynamic programming for nonaffine nonlinear optimal control problem with state constraints

Nov 26, 2019

Abstract:This paper presents a constrained deep adaptive dynamic programming (CDADP) algorithm to solve general nonlinear optimal control problems with known dynamics. Unlike previous ADP algorithms, it can directly deal with problems with state constraints. Both the policy and value function are approximated by deep neural networks (NNs), which directly map the system state to the action and the value function respectively, without needing hand-crafted basis functions. The proposed algorithm considers the state constraints by transforming the policy improvement process into a constrained optimization problem. Meanwhile, a trust region constraint is added to prevent excessive policy updates. We first linearize this constrained optimization problem locally into a quadratically-constrained quadratic programming problem, and then obtain the optimal update of the policy network parameters by solving its dual problem. We also propose a series of recovery rules to update the policy in case the primal problem is infeasible. In addition, parallel learners are employed to explore different state spaces, thereby stabilizing and accelerating learning. A vehicle control problem in a path-tracking task is used to demonstrate the effectiveness of the proposed method.
