Shengbo Eben Li

On the Equilibrium between Feasible Zone and Uncertain Model in Safe Exploration

Feb 04, 2026

Abstract: Ensuring the safety of environmental exploration is a critical problem in reinforcement learning (RL). While limiting exploration to a feasible zone has become widely accepted as a way to ensure safety, key questions remain unresolved: what is the maximum feasible zone achievable through exploration, and how can it be identified? This paper, for the first time, answers these questions by revealing that the goal of safe exploration is to find the equilibrium between the feasible zone and the environment model. This conclusion is based on the understanding that these two components are interdependent: a larger feasible zone leads to a more accurate environment model, and a more accurate model, in turn, enables exploring a larger zone. We propose the first equilibrium-oriented safe exploration framework called safe equilibrium exploration (SEE), which alternates between finding the maximum feasible zone and the least uncertain model. Using a graph formulation of the uncertain model, we prove that the uncertain model obtained by SEE is monotonically refined, the feasible zones monotonically expand, and both converge to the equilibrium of safe exploration. Experiments on classic control tasks show that our algorithm successfully expands the feasible zones with zero constraint violation, and achieves the equilibrium of safe exploration within a few iterations.

Equilibrium of Feasible Zone and Uncertain Model in Safe Exploration

Jan 31, 2026

Abstract: Ensuring the safety of environmental exploration is a critical problem in reinforcement learning (RL). While limiting exploration to a feasible zone has become widely accepted as a way to ensure safety, key questions remain unresolved: what is the maximum feasible zone achievable through exploration, and how can it be identified? This paper, for the first time, answers these questions by revealing that the goal of safe exploration is to find the equilibrium between the feasible zone and the environment model. This conclusion is based on the understanding that these two components are interdependent: a larger feasible zone leads to a more accurate environment model, and a more accurate model, in turn, enables exploring a larger zone. We propose the first equilibrium-oriented safe exploration framework called safe equilibrium exploration (SEE), which alternates between finding the maximum feasible zone and the least uncertain model. Using a graph formulation of the uncertain model, we prove that the uncertain model obtained by SEE is monotonically refined, the feasible zones monotonically expand, and both converge to the equilibrium of safe exploration. Experiments on classic control tasks show that our algorithm successfully expands the feasible zones with zero constraint violation, and achieves the equilibrium of safe exploration within a few iterations.

Exchange Policy Optimization Algorithm for Semi-Infinite Safe Reinforcement Learning

Nov 06, 2025

Abstract: Safe reinforcement learning (safe RL) aims to respect safety requirements while optimizing long-term performance. In many practical applications, however, the problem involves an infinite number of constraints, known as semi-infinite safe RL (SI-safe RL). Such constraints typically appear when safety conditions must be enforced across an entire continuous parameter space, such as ensuring adequate resource distribution at every spatial location. In this paper, we propose exchange policy optimization (EPO), an algorithmic framework that achieves optimal policy performance and deterministic bounded safety. EPO works by iteratively solving safe RL subproblems with finite constraint sets and adaptively adjusting the active set through constraint expansion and deletion. At each iteration, constraints with violations exceeding the predefined tolerance are added to refine the policy, while those with zero Lagrange multipliers are removed after the policy update. This exchange rule prevents uncontrolled growth of the working set and supports effective policy training. Our theoretical analysis demonstrates that, under mild assumptions, strategies trained via EPO achieve performance comparable to optimal solutions with global constraint violations strictly remaining within a prescribed bound.
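The exchange rule described in this abstract (add constraints violated beyond a tolerance, drop those with zero multipliers) can be illustrated on a toy problem. The sketch below is not the EPO algorithm and involves no RL: it assumes a scalar decision variable `theta`, a hypothetical objective `-(theta - 2)**2`, and a hypothetical constraint family `theta * y <= 1` indexed by `y` on a finite grid standing in for the continuous set [0, 1]. The names `solve_subproblem` and `exchange_loop` are invented for illustration.

```python
def solve_subproblem(working_set, target=2.0):
    """Maximize -(theta - target)**2 subject to theta * y <= 1 for y in working_set.

    Returns the optimum and one Lagrange multiplier per constraint
    (closed form, valid only because this toy problem is one-dimensional).
    """
    caps = [1.0 / y for y in working_set if y > 0]
    theta = min([target] + caps)
    multipliers = {}
    for y in working_set:
        binding = y > 0 and theta < target and abs(theta * y - 1.0) < 1e-9
        # Stationarity: 2 * (target - theta) = multiplier * y on the binding constraint.
        multipliers[y] = 2.0 * (target - theta) / y if binding else 0.0
    return theta, multipliers


def exchange_loop(grid, tol=1e-3, max_iters=50):
    """Alternate between solving a finite subproblem and exchanging constraints."""
    working = set()
    theta = None
    for _ in range(max_iters):
        theta, multipliers = solve_subproblem(working)
        # Deletion: drop constraints whose multiplier is zero after the update.
        working = {y for y in working if multipliers[y] > 0.0}
        # Expansion: add every constraint violated by more than the tolerance.
        violated = {y for y in grid if theta * y - 1.0 > tol}
        if not violated:
            break
        working |= violated
    return theta, working


grid = [i / 10 for i in range(11)]  # finite stand-in for the index set [0, 1]
theta_star, active = exchange_loop(grid)
print(theta_star, sorted(active))
```

In this toy run the first pass adds the five constraints violated at `theta = 2`, and the second pass keeps only `y = 1.0`, the one constraint with a nonzero multiplier, so the working set shrinks instead of growing.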

Distributional Soft Actor-Critic with Diffusion Policy

Jul 02, 2025

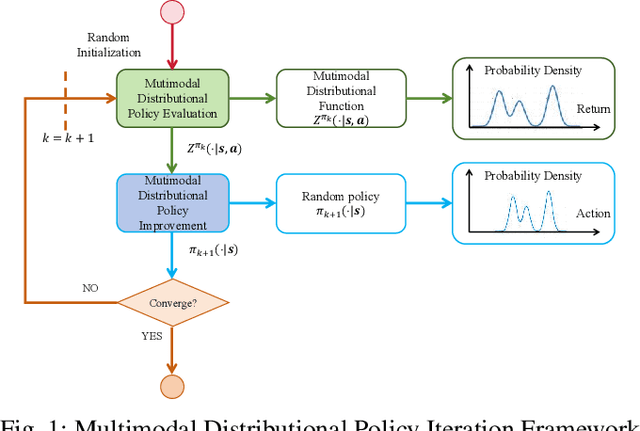

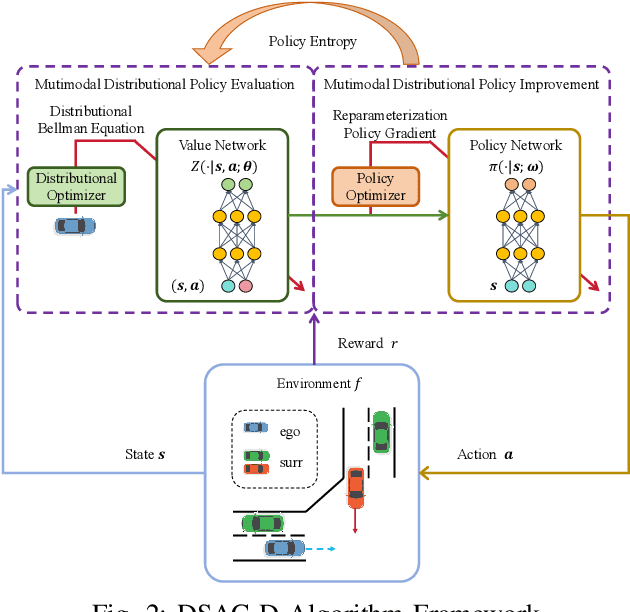

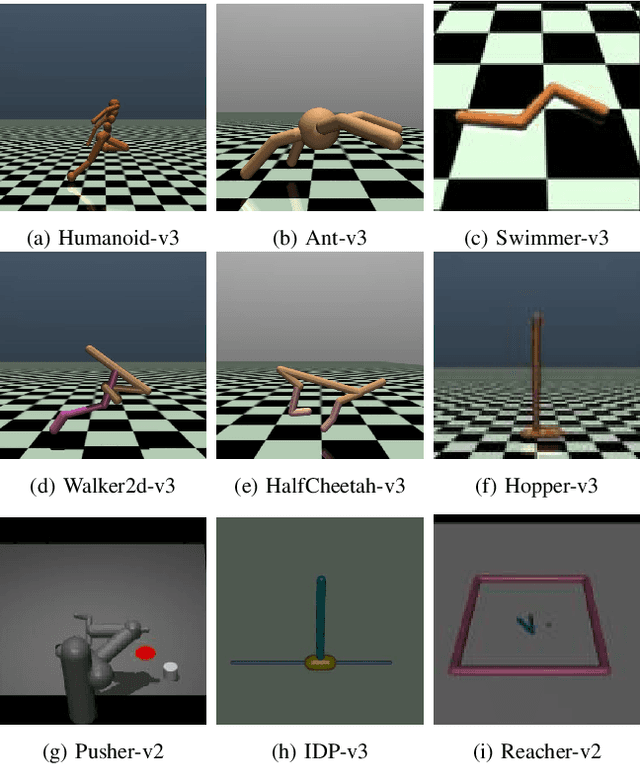

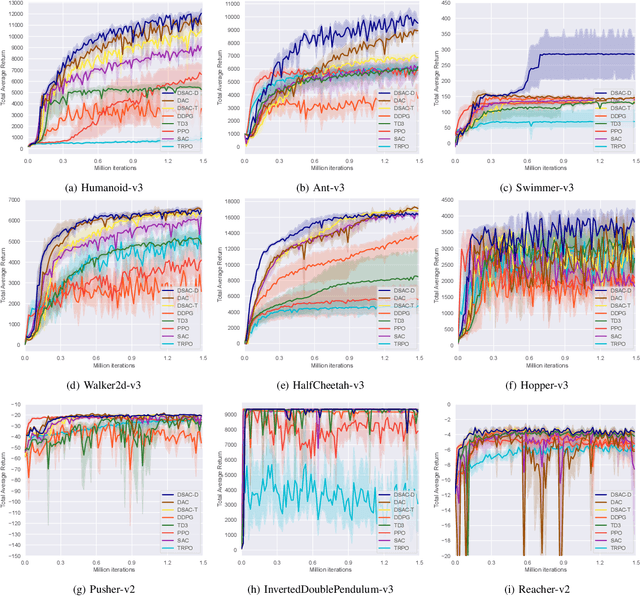

Abstract: Reinforcement learning has proven highly effective in handling complex control tasks. Traditional methods typically use unimodal distributions, such as Gaussian distributions, to model the output of value distributions. However, unimodal distributions often cause bias in value function estimation, leading to poor algorithm performance. This paper proposes a distributional reinforcement learning algorithm called DSAC-D (Distributional Soft Actor-Critic with Diffusion Policy) to address the challenges of estimation bias in value functions and of obtaining multimodal policy representations. A multimodal distributional policy iteration framework that can converge to the optimal policy is established by introducing policy entropy and a value distribution function. A diffusion value network that can accurately characterize multi-peak distributions is constructed by generating a set of reward samples through reverse sampling with a diffusion model. Based on this, a distributional reinforcement learning algorithm with dual diffusion of the value network and the policy network is derived. MuJoCo testing tasks demonstrate that the proposed algorithm not only learns multimodal policies, but also achieves state-of-the-art (SOTA) performance in all 9 control tasks, with significant suppression of estimation bias and a total average return improvement of over 10% compared to existing mainstream algorithms. Real-vehicle tests show that DSAC-D can accurately characterize the multimodal distribution of different driving styles, and that the diffusion policy network can characterize multimodal trajectories.

Jump-Start Reinforcement Learning with Self-Evolving Priors for Extreme Monopedal Locomotion

Jul 01, 2025

Abstract: Reinforcement learning (RL) has shown great potential in enabling quadruped robots to perform agile locomotion. However, directly training policies to simultaneously handle dual extreme challenges, i.e., extreme underactuation and extreme terrains, as in monopedal hopping tasks, remains highly challenging due to unstable early-stage interactions and unreliable reward feedback. To address this, we propose JumpER (jump-start reinforcement learning via self-evolving priors), an RL training framework that structures policy learning into multiple stages of increasing complexity. By dynamically generating self-evolving priors through iterative bootstrapping of previously learned policies, JumpER progressively refines and enhances guidance, thereby stabilizing exploration and policy optimization without relying on external expert priors or handcrafted reward shaping. Specifically, when integrated with a structured three-stage curriculum that incrementally evolves action modality, observation space, and task objective, JumpER enables quadruped robots to achieve robust monopedal hopping on unpredictable terrains for the first time. Remarkably, the resulting policy effectively handles challenging scenarios that traditional methods struggle to conquer, including wide gaps up to 60 cm, irregularly spaced stairs, and stepping stones with distances varying from 15 cm to 35 cm. JumpER thus provides a principled and scalable approach for addressing locomotion tasks under the dual challenges of extreme underactuation and extreme terrains.

Hierarchical Feature-level Reverse Propagation for Post-Training Neural Networks

Jun 08, 2025

Abstract: End-to-end autonomous driving has emerged as a dominant paradigm, yet its highly entangled black-box models pose significant challenges in terms of interpretability and safety assurance. To improve model transparency and training flexibility, this paper proposes a hierarchical and decoupled post-training framework tailored for pretrained neural networks. By reconstructing intermediate feature maps from ground-truth labels, surrogate supervisory signals are introduced at transitional layers to enable independent training of specific components, thereby avoiding the complexity and coupling of conventional end-to-end backpropagation and providing interpretable insights into networks' internal mechanisms. To the best of our knowledge, this is the first method to formalize feature-level reverse computation as well-posed optimization problems, which we rigorously reformulate as systems of linear equations or least squares problems. This establishes a novel and efficient training paradigm that extends gradient backpropagation to feature backpropagation. Extensive experiments on multiple standard image classification benchmarks demonstrate that the proposed method achieves superior generalization performance and computational efficiency compared to traditional training approaches, validating its effectiveness and potential.

Enhanced DACER Algorithm with High Diffusion Efficiency

May 29, 2025

Abstract: Due to their expressive capacity, diffusion models have shown great promise in offline RL and imitation learning. Diffusion Actor-Critic with Entropy Regulator (DACER) extended this capability to online RL by using the reverse diffusion process as a policy approximator, trained end-to-end with policy gradient methods, achieving strong performance. However, this comes at the cost of requiring many diffusion steps, which significantly hampers training efficiency, while directly reducing the steps leads to noticeable performance degradation. Critically, the lack of inference efficiency becomes a significant bottleneck for applying diffusion policies in real-time online RL settings. To improve training and inference efficiency while maintaining or even enhancing performance, we propose a Q-gradient field objective as an auxiliary optimization target to guide the denoising process at each diffusion step. Nonetheless, we observe that the independence of the Q-gradient field from the diffusion time step negatively impacts the performance of the diffusion policy. To address this, we introduce a temporal weighting mechanism that enables the model to efficiently eliminate large-scale noise in the early stages and refine actions in the later stages. Experimental results on MuJoCo benchmarks and several multimodal tasks demonstrate that the DACER2 algorithm achieves state-of-the-art performance in most MuJoCo control tasks with only five diffusion steps, while also exhibiting stronger multimodality compared to DACER.

NANO-SLAM: Natural Gradient Gaussian Approximation for Vehicle SLAM

Apr 27, 2025

Abstract: Accurate localization is a challenging task for autonomous vehicles, particularly in GPS-denied environments such as urban canyons and tunnels. In these scenarios, simultaneous localization and mapping (SLAM) offers a more robust alternative to GPS-based positioning, enabling vehicles to determine their position using onboard sensors and landmarks in the surrounding environment. Among various vehicle SLAM approaches, the Rao-Blackwellized particle filter (RBPF) stands out as one of the most widely adopted methods due to its efficient solution with logarithmic complexity relative to the map size. RBPF approximates the posterior distribution of the vehicle pose using a set of Monte Carlo particles through two main steps: sampling and importance weighting. The key to effective sampling lies in solving for a distribution that closely approximates the posterior, known as the sampling distribution, to accelerate convergence. Existing methods typically derive this distribution via linearization, which introduces significant approximation errors due to the inherent nonlinearity of the system. To address this limitation, we propose a novel vehicle SLAM method called Natural Gradient Gaussian Approximation (NANO)-SLAM, which avoids linearization errors by modeling the sampling distribution as the solution to an optimization problem over Gaussian parameters and solving it using natural gradient descent. This approach improves the accuracy of the sampling distribution and consequently enhances localization performance. Experimental results on the long-distance Sydney Victoria Park vehicle SLAM dataset show that NANO-SLAM achieves over 50% improvement in localization accuracy compared to the most widely used vehicle SLAM algorithms, with minimal additional computational cost.
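The two particle-filter steps named in this abstract, sampling and importance weighting, can be sketched for a one-dimensional pose. This is a minimal illustration under stated assumptions, not NANO-SLAM: it uses the motion model itself as the sampling distribution (the plain bootstrap choice, rather than a natural-gradient Gaussian approximation), assumes a direct Gaussian position observation, and omits the per-particle landmark maps that make a filter Rao-Blackwellized. The function `particle_step` and its noise parameters are hypothetical.

```python
import math
import random


def particle_step(particles, weights, control, observation, rng,
                  motion_std=0.1, obs_std=0.2):
    """One sampling + importance-weighting step for a 1-D pose."""
    # Sampling: draw each new pose from the motion model (bootstrap proposal).
    proposed = [p + control + rng.gauss(0.0, motion_std) for p in particles]
    # Importance weighting: with a bootstrap proposal, the weight update is
    # proportional to the observation likelihood of each proposed pose.
    unnormalized = [
        w * math.exp(-0.5 * ((observation - x) / obs_std) ** 2)
        for w, x in zip(weights, proposed)
    ]
    total = sum(unnormalized)
    new_weights = [u / total for u in unnormalized]
    return proposed, new_weights


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 200
particles = [0.0] * n
weights = [1.0 / n] * n
particles, weights = particle_step(particles, weights, control=1.0,
                                   observation=1.0, rng=rng)
estimate = sum(w * x for w, x in zip(weights, particles))
print(round(estimate, 3))
```

A proposal closer to the true posterior concentrates the weights on fewer wasted particles, which is the motivation the abstract gives for improving the sampling distribution.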

Minds on the Move: Decoding Trajectory Prediction in Autonomous Driving with Cognitive Insights

Feb 27, 2025

Abstract: In mixed autonomous driving environments, accurately predicting the future trajectories of surrounding vehicles is crucial for the safe operation of autonomous vehicles (AVs). In driving scenarios, a vehicle's trajectory is determined by the decision-making process of human drivers. However, existing models primarily focus on the inherent statistical patterns in the data, often neglecting the critical aspect of understanding the decision-making processes of human drivers. This oversight results in models that fail to capture the true intentions of human drivers, leading to suboptimal performance in long-term trajectory prediction. To address this limitation, we introduce a Cognitive-Informed Transformer (CITF) that incorporates a cognitive concept, Perceived Safety, to interpret drivers' decision-making mechanisms. Perceived Safety encapsulates the varying risk tolerances across drivers with different driving behaviors. Specifically, we develop a Perceived Safety-aware Module that includes a Quantitative Safety Assessment for measuring the subject risk levels within scenarios, and Driver Behavior Profiling for characterizing driver behaviors. Furthermore, we present a novel module, Leanformer, designed to capture social interactions among vehicles. CITF demonstrates significant performance improvements on three well-established datasets. In terms of long-term prediction, it surpasses existing benchmarks by 12.0% on the NGSIM, 28.2% on the HighD, and 20.8% on the MoCAD dataset. Additionally, its robustness in scenarios with limited or missing data is evident, surpassing most state-of-the-art (SOTA) baselines, and paving the way for real-world applications.

Diffusion-Based Planning for Autonomous Driving with Flexible Guidance

Jan 26, 2025

Abstract: Achieving human-like driving behaviors in complex open-world environments is a critical challenge in autonomous driving. Contemporary learning-based planning approaches such as imitation learning methods often struggle to balance competing objectives and lack safety assurance, due to limited adaptability and inadequacy in learning the complex multi-modal behaviors commonly exhibited in human planning, not to mention their strong reliance on fallback strategies with predefined rules. We propose a novel transformer-based Diffusion Planner for closed-loop planning, which can effectively model multi-modal driving behavior and ensure trajectory quality without any rule-based refinement. Our model supports joint modeling of both prediction and planning tasks under the same architecture, enabling cooperative behaviors between vehicles. Moreover, by learning the gradient of the trajectory score function and employing a flexible classifier guidance mechanism, Diffusion Planner effectively achieves safe and adaptable planning behaviors. Evaluations on the large-scale real-world autonomous planning benchmark nuPlan and our newly collected 200-hour delivery-vehicle driving dataset demonstrate that Diffusion Planner achieves state-of-the-art closed-loop performance with robust transferability in diverse driving styles.
