LCE-Calib: Automatic LiDAR-Frame/Event Camera Extrinsic Calibration With A Globally Optimal Solution

Paper and Code

Mar 17, 2023

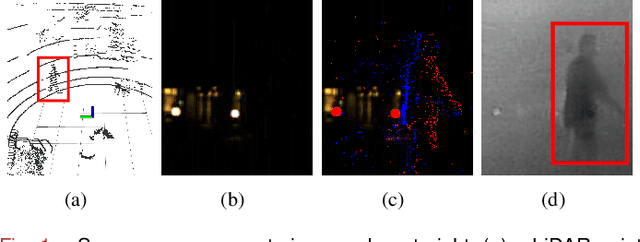

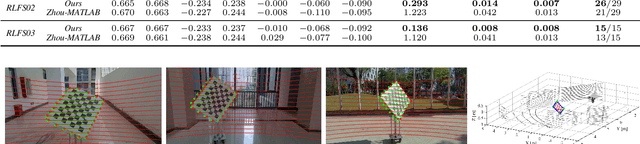

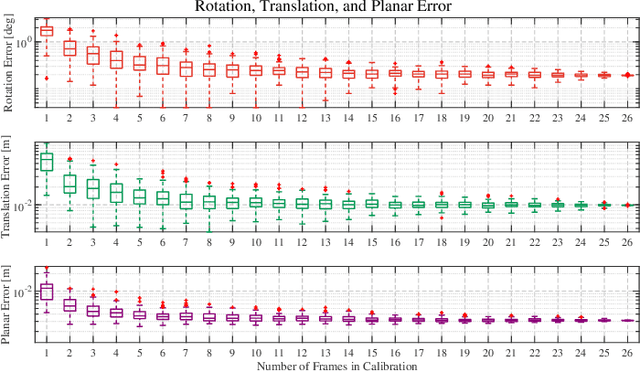

The combination of LiDARs and cameras enables a mobile robot to perceive environments with multi-modal data, which is a key factor in achieving robust perception. Traditional frame cameras are sensitive to changing illumination conditions, motivating us to introduce novel event cameras to make LiDAR-camera fusion more complete and robust. However, to jointly exploit these sensors, the challenging extrinsic calibration problem must first be addressed. This paper proposes an automatic checkerboard-based approach to calibrate the extrinsics between a LiDAR and a frame/event camera, and presents four contributions. First, we present an automatic method for feature extraction and checkerboard tracking from LiDAR point clouds. Second, we reconstruct realistic frame images from event streams, so that traditional corner detectors can be applied to event cameras. Third, we propose an initialization-refinement procedure that estimates the extrinsics using point-to-plane and point-to-line constraints in a coarse-to-fine manner. Fourth, we introduce a unified and globally optimal solution to the two optimization problems that arise in calibration. Our approach has been validated with extensive experiments on 19 simulated and real-world datasets and outperforms the state-of-the-art.
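To make the point-to-plane constraint mentioned above concrete, the following is a minimal sketch and not the authors' implementation. It assumes the checkerboard plane is known in the camera frame as `normal · x + offset = 0`, and that `R` and `t` are a candidate rotation and translation mapping LiDAR points into the camera frame. The function names and the plane parameterization are illustrative assumptions; the abstract does not specify them.

```python
from typing import List, Sequence

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]


def transform_point(R: Mat3, t: Vec3, p: Vec3) -> List[float]:
    """Map a LiDAR-frame point into the camera frame: q = R p + t."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


def point_to_plane_residuals(
    R: Mat3,
    t: Vec3,
    lidar_points: Sequence[Vec3],
    normal: Vec3,
    offset: float,
) -> List[float]:
    """Signed distance of each transformed LiDAR point to the plane.

    The plane is given in the camera frame as normal . x + offset = 0.
    The normal need not be unit length; it is normalized here.
    """
    norm = sum(c * c for c in normal) ** 0.5
    if norm == 0.0:
        raise ValueError("plane normal must be non-zero")
    residuals = []
    for p in lidar_points:
        q = transform_point(R, t, p)
        residuals.append((sum(n * c for n, c in zip(normal, q)) + offset) / norm)
    return residuals


# Hypothetical example: board points lie on z = 0 in the LiDAR frame,
# and the camera sees the board plane at z = 1.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
board_points = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0)]
good = point_to_plane_residuals(identity, [0.0, 0.0, 1.0], board_points, [0.0, 0.0, 2.0], -2.0)
bad = point_to_plane_residuals(identity, [0.0, 0.0, 1.5], board_points, [0.0, 0.0, 2.0], -2.0)
```

With the correct translation the residuals vanish, while a translation error along the plane normal shows up directly as a non-zero distance. A calibration routine would minimize the sum of squared residuals over `R` and `t` across many checkerboard poses; the point-to-line terms and the globally optimal solver described in the abstract are not sketched here.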
