Weixuan Zhang

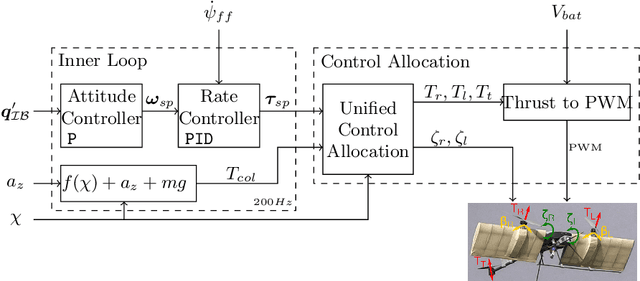

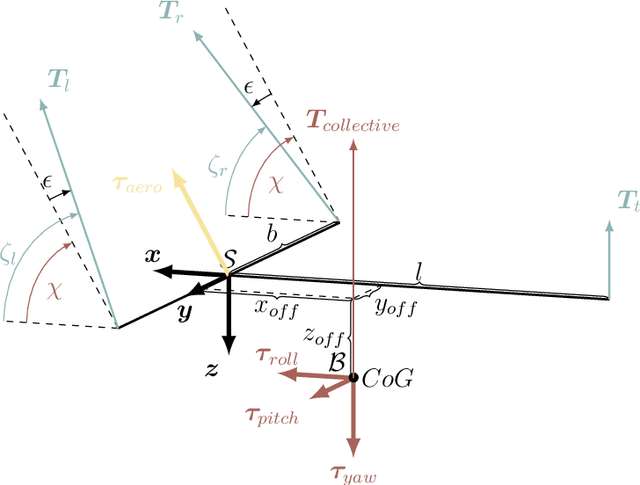

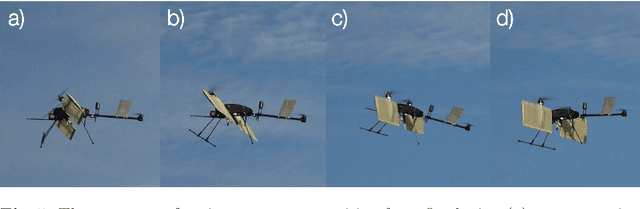

Soliro -- a hybrid dynamic tilt-wing aerial manipulator with minimal actuators

Dec 08, 2023

Abstract: The ability to come into contact with and manipulate physical objects with a flying robot enables many novel applications, such as contact inspection, painting, drilling, and sample collection. Generally, these aerial robots need more degrees of freedom than a standard quadrotor. While there is active research on over-actuated, omnidirectional MAVs and aerial manipulators, as well as on VTOL and hybrid platforms, the two concepts have not been combined. We address the problem of conceptualization, characterization, control, and testing of a 5DOF rotary-/fixed-wing hybrid, tilt-rotor, split tilt-wing, nearly omnidirectional aerial robot. We present an elegant solution that uses a minimal set of actuators and does not need any classical control surfaces or flaps. The concept is validated in a wind tunnel study and in multiple flights with forward and backward transitions. Fixed-wing flight speeds of up to 10 m/s were reached, with a power reduction of 30% compared to rotary-wing flight.

Grounding Description-Driven Dialogue State Trackers with Knowledge-Seeking Turns

Sep 23, 2023

Abstract: Schema-guided dialogue state trackers can generalise to new domains without further training, yet they are sensitive to the writing style of the schemata. Augmenting the training set with human or synthetic schema paraphrases improves the model robustness to these variations but can be either costly or difficult to control. We propose to circumvent these issues by grounding the state tracking model in knowledge-seeking turns collected from the dialogue corpus as well as the schema. Including these turns in prompts during finetuning and inference leads to marked improvements in model robustness, as demonstrated by large average joint goal accuracy and schema sensitivity improvements on SGD and SGD-X.

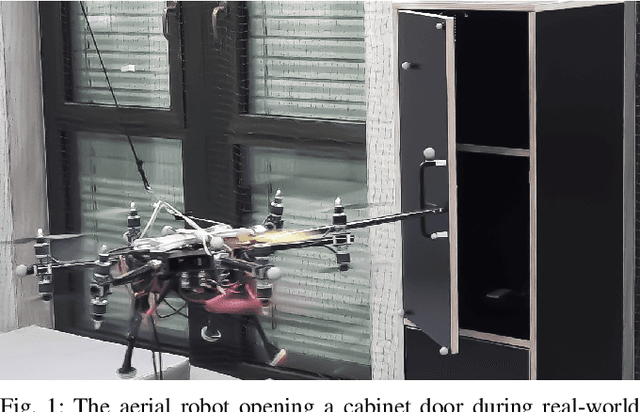

Learning to Open Doors with an Aerial Manipulator

Jul 28, 2023

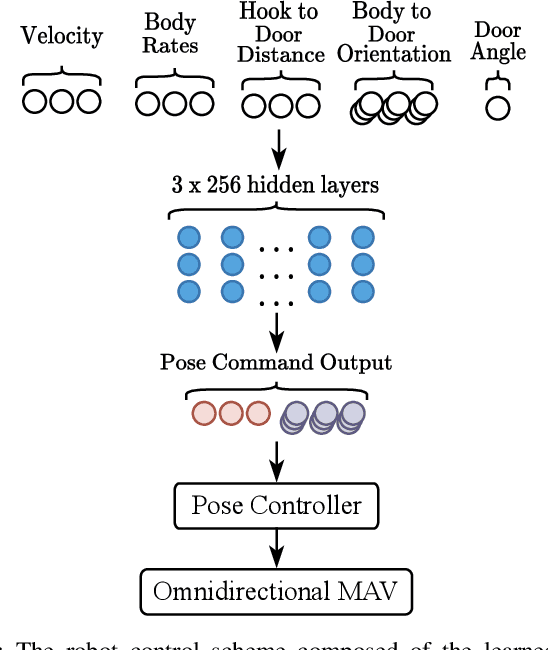

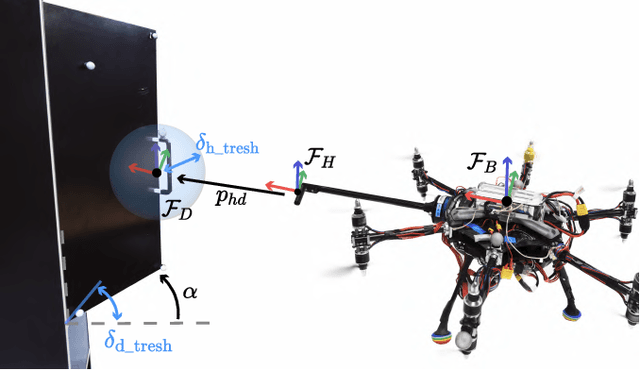

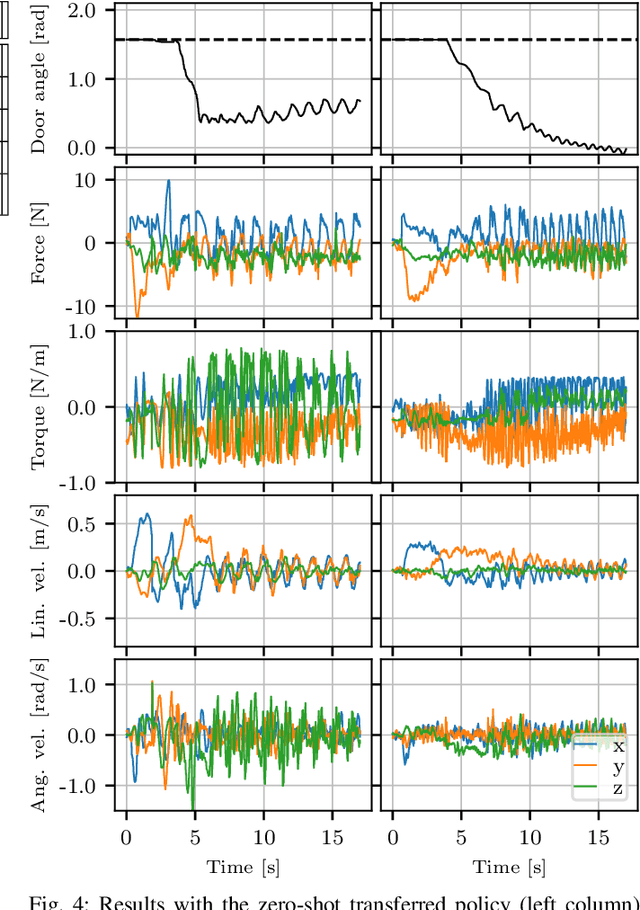

Abstract: The field of aerial manipulation has seen rapid advances, transitioning from push-and-slide tasks to interaction with articulated objects. So far, when more complex actions are performed, the motion trajectory is usually handcrafted or the result of online optimization methods such as Model Predictive Control (MPC) or Model Predictive Path Integral (MPPI) control. However, these methods rely on heuristics or model simplifications to run efficiently on onboard hardware and produce results in an acceptable amount of time. Moreover, they can be sensitive to disturbances and to differences between the real environment and its simulated counterpart. In this work, we propose a Reinforcement Learning (RL) approach to learn motion behaviors for a manipulation task while producing policies that are robust to disturbances and modeling errors. Specifically, we train a policy to perform a door-opening task with an Omnidirectional Micro Aerial Vehicle (OMAV). The policy is trained in a physics simulator, and experiments are presented both in simulation and running onboard the real platform, investigating the simulation-to-real-world transfer. We compare our method against a state-of-the-art MPPI solution, showing a considerable increase in robustness and speed.

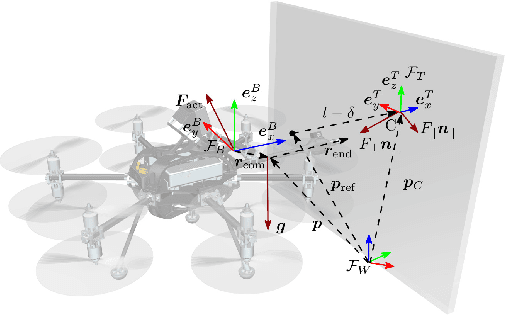

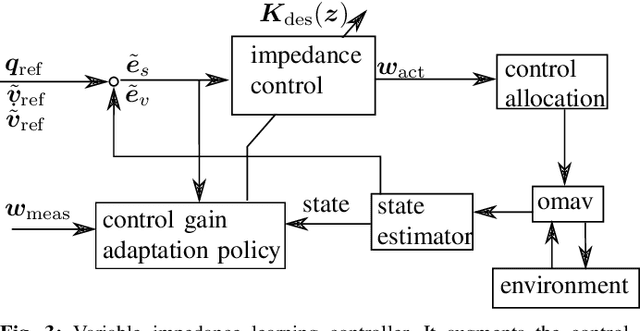

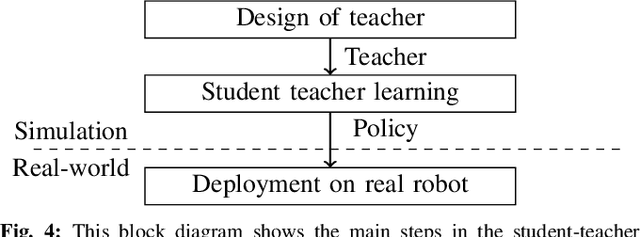

Learning Variable Impedance Control for Aerial Sliding on Uneven Heterogeneous Surfaces by Proprioceptive and Tactile Sensing

Jul 05, 2022

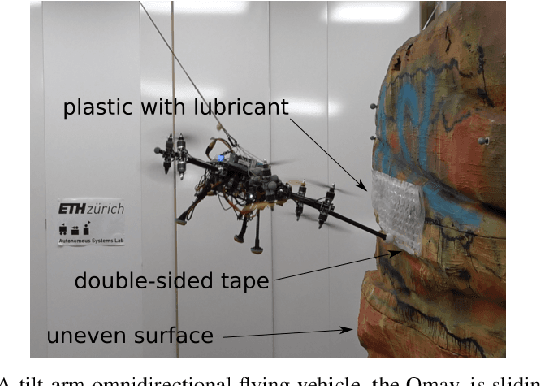

Abstract: The recent development of novel aerial vehicles capable of physically interacting with the environment leads to new applications such as contact-based inspection. These tasks require the robotic system to exchange forces with partially known environments, which may contain uncertainties including unknown spatially varying friction properties and discontinuous variations of the surface geometry. Finding a control strategy that is robust against these environmental uncertainties remains an open challenge. This paper presents a learning-based adaptive control strategy for aerial sliding tasks. In particular, the gains of a standard impedance controller are adjusted in real time by a policy based on the current control signals, proprioceptive measurements, and tactile sensing. This policy is trained in simulation with simplified actuator dynamics in a student-teacher learning setup. The real-world performance of the proposed approach is verified using a tilt-arm omnidirectional flying vehicle. The proposed controller structure combines data-driven and model-based control methods, enabling our approach to transfer directly and without adaptation from simulation to the real platform. Compared to fine-tuned state-of-the-art interaction control methods, we achieve reduced tracking error and improved disturbance rejection.

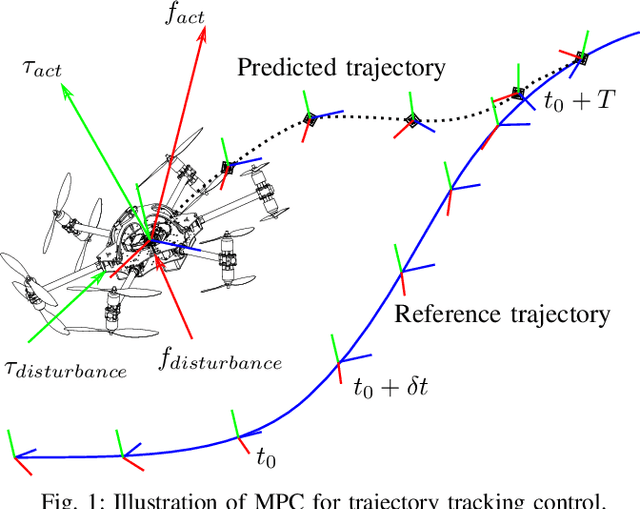

MPC with Learned Residual Dynamics with Application on Omnidirectional MAVs

Jul 04, 2022

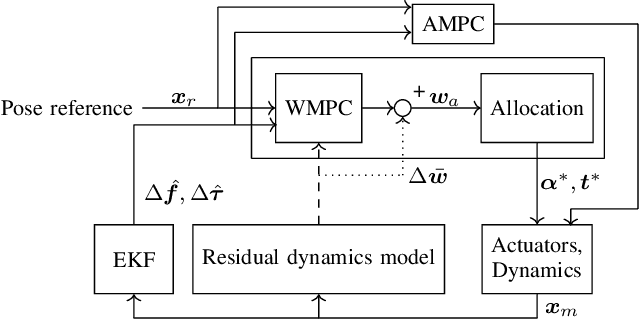

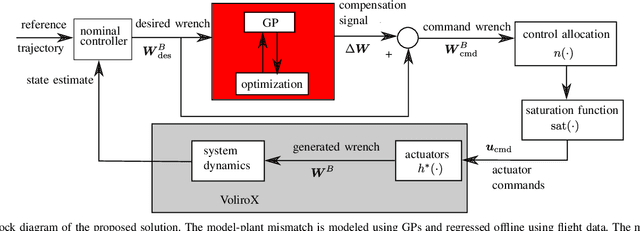

Abstract: The growing field of aerial manipulation often relies on fully actuated or omnidirectional micro aerial vehicles (OMAVs), which can apply arbitrary forces and torques while in contact with the environment. Control methods are usually based on model-free approaches, separating a high-level wrench controller from an actuator allocation. If necessary, disturbances are rejected by online disturbance observers. However, while general, this approach often produces sub-optimal control commands and cannot incorporate constraints given by the platform design. We present two model-based approaches to control OMAVs for the task of trajectory tracking while rejecting disturbances. The first one optimizes wrench commands and compensates for model errors with a model learned from experimental data. The second one optimizes low-level actuator commands, which allows it to exploit an allocation nullspace and to consider constraints given by the actuator hardware. The efficacy and real-time feasibility of both approaches are shown and evaluated in real-world experiments.

Active Model Learning using Informative Trajectories for Improved Closed-Loop Control on Real Robots

Jan 20, 2021

Abstract: Model-based controllers on real robots require accurate knowledge of the system dynamics to perform optimally. For complex dynamics, first-principles modeling is not sufficiently precise, and data-driven approaches can be leveraged to learn a statistical model from real experiments. However, efficient and effective data collection for such a data-driven system on real robots is still an open challenge. This paper introduces an optimization problem formulation to find an informative trajectory that allows for efficient data collection and model learning. We present a sampling-based method that computes an approximation of the trajectory that minimizes the prediction uncertainty of the dynamics model. This trajectory is then executed, collecting the data to update the learned model. In experiments, we demonstrate the capabilities of our proposed framework when applied to a complex omnidirectional flying vehicle with tiltable rotors. Using our informative trajectories results in models that outperform models obtained from non-informative trajectories by 13.3% with the same amount of training data. Furthermore, we show that the model learned from informative trajectories generalizes better than the one learned from non-informative trajectories, achieving better tracking performance on different tasks.

Learning dynamics for improving control of overactuated flying systems

Jun 23, 2020

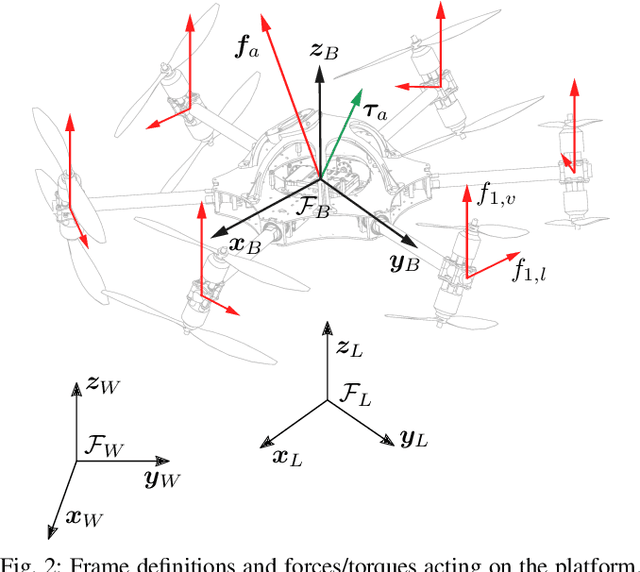

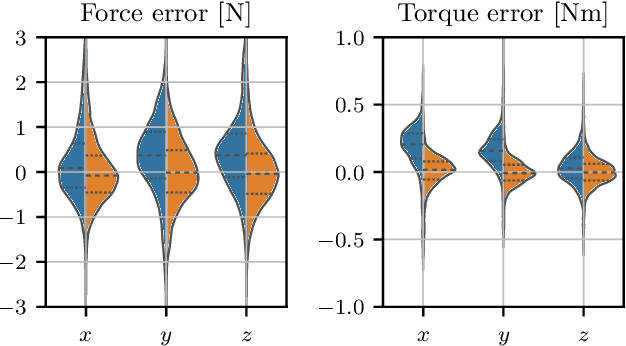

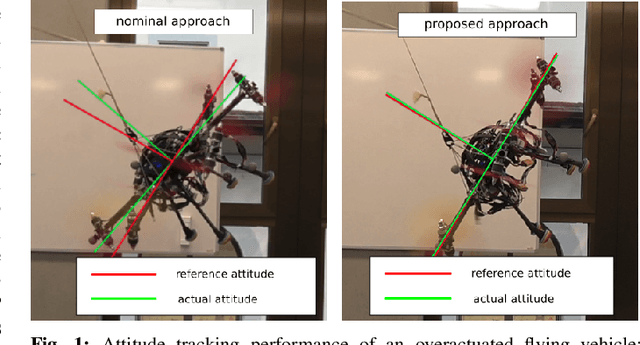

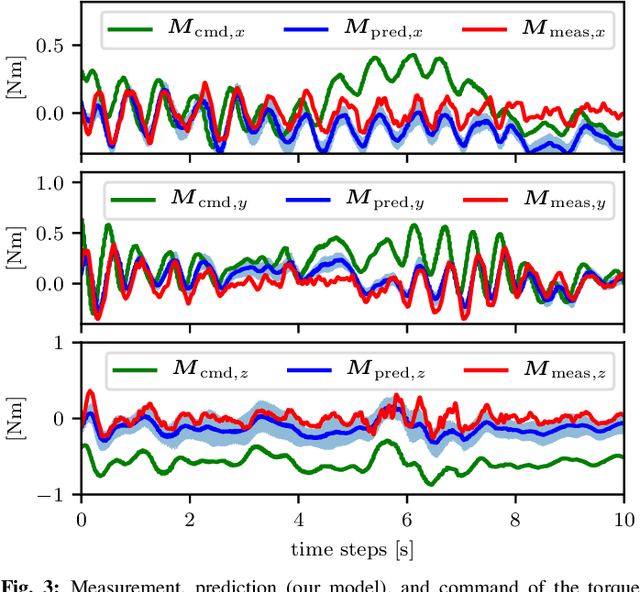

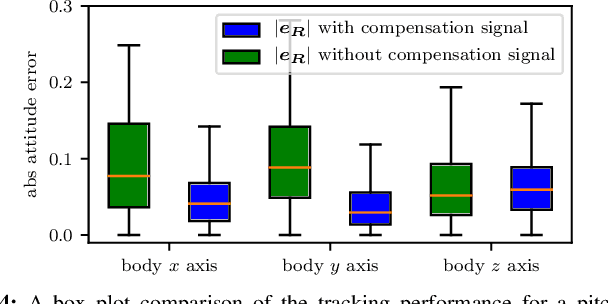

Abstract: Overactuated omnidirectional flying vehicles are capable of generating force and torque in any direction, which is important for applications such as contact-based industrial inspection. This comes at the price of an increase in model complexity. These vehicles usually have non-negligible, repetitive dynamics that are hard to model, such as the aerodynamic interference between the propellers. This makes high-performance trajectory tracking with a model-based controller difficult. This paper presents an approach that combines a data-driven and a first-principle model for the system actuation and uses it to improve the controller. In a first step, the first-principle model errors are learned offline using a Gaussian Process (GP) regressor. At runtime, the first-principle model and the GP regressor are used jointly to obtain control commands. This is formulated as an optimization problem that uses only forward models, which avoids the ambiguous solutions that a standard inverse model yields in overactuated systems. The approach is validated using a tilt-arm overactuated omnidirectional flying vehicle performing attitude trajectory tracking. The results show that with our proposed method, the attitude trajectory error is reduced by 32% on average compared to a nominal PID controller.
