Marco Tognon

Domain-specific Hardware Acceleration for Model Predictive Path Integral Control

Jan 17, 2026

Abstract:Accurately controlling a robotic system in real time is a challenging problem. To address this, the robotics community has adopted various algorithms, such as Model Predictive Control (MPC) and Model Predictive Path Integral (MPPI) control. The first is difficult to implement on non-linear systems such as unmanned aerial vehicles, whilst the second requires a heavy computational load. GPUs have been successfully used to accelerate MPPI implementations; however, their power consumption is often excessive for autonomous or unmanned targets, especially when battery-powered. On the other hand, custom designs, often implemented on FPGAs, have been proposed to accelerate robotic algorithms while consuming considerably less energy than their GPU (or CPU) implementation. However, no MPPI custom accelerator has been proposed so far. In this work, we present a hardware accelerator for MPPI control and simulate its execution. Results show that the MPPI custom accelerator allows more accurate trajectories than GPU-based MPPI implementations.

Agile and Cooperative Aerial Manipulation of a Cable-Suspended Load

Jan 30, 2025

Abstract:Quadrotors can carry slung loads to hard-to-reach locations at high speed. Since a single quadrotor has limited payload capacities, using a team of quadrotors to collaboratively manipulate a heavy object is a scalable and promising solution. However, existing control algorithms for multi-lifting systems only enable low-speed and low-acceleration operations due to the complex dynamic coupling between quadrotors and the load, limiting their use in time-critical missions such as search and rescue. In this work, we present a solution to significantly enhance the agility of cable-suspended multi-lifting systems. Unlike traditional cascaded solutions, we introduce a trajectory-based framework that solves the whole-body kinodynamic motion planning problem online, accounting for the dynamic coupling effects and constraints between the quadrotors and the load. The planned trajectory is provided to the quadrotors as a reference in a receding-horizon fashion and is tracked by an onboard controller that observes and compensates for the cable tension. Real-world experiments demonstrate that our framework can achieve at least eight times greater acceleration than state-of-the-art methods to follow agile trajectories. Our method can even perform complex maneuvers such as flying through narrow passages at high speed. Additionally, it exhibits high robustness against load uncertainties and does not require adding any sensors to the load, demonstrating strong practicality.

Evaluation of Human-Robot Interfaces based on 2D/3D Visual and Haptic Feedback for Aerial Manipulation

Oct 20, 2024

Abstract:Most telemanipulation systems for aerial robots provide the operator with only 2D screen visual information. The lack of richer information about the robot's status and environment can limit human awareness and, in turn, task performance. While the pilot's experience can often compensate for this reduced flow of information, providing richer feedback is expected to reduce the cognitive workload and offer a more intuitive experience overall. This work aims to understand the significance of providing additional pieces of information during aerial telemanipulation, namely (i) 3D immersive visual feedback about the robot's surroundings through mixed reality (MR) and (ii) 3D haptic feedback about the robot interaction with the environment. To do so, we developed a human-robot interface able to provide this information. First, we demonstrate its potential in a real-world manipulation task requiring sub-centimeter-level accuracy. Then, we evaluate the individual effect of MR vision and haptic feedback on both dexterity and workload through a human subjects study involving a virtual block transportation task. Results show that both 3D MR vision and haptic feedback improve the operator's dexterity in the considered teleoperated aerial interaction tasks. Nevertheless, pilot experience remains the most significant factor.

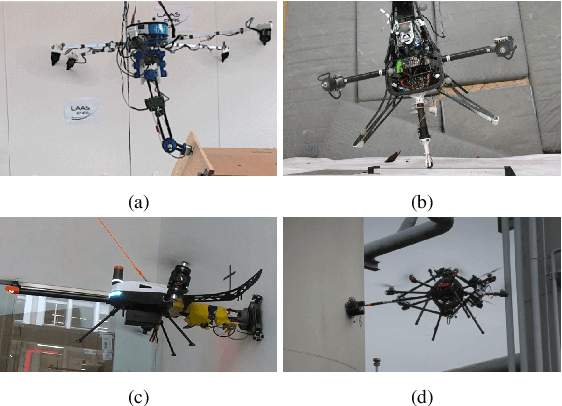

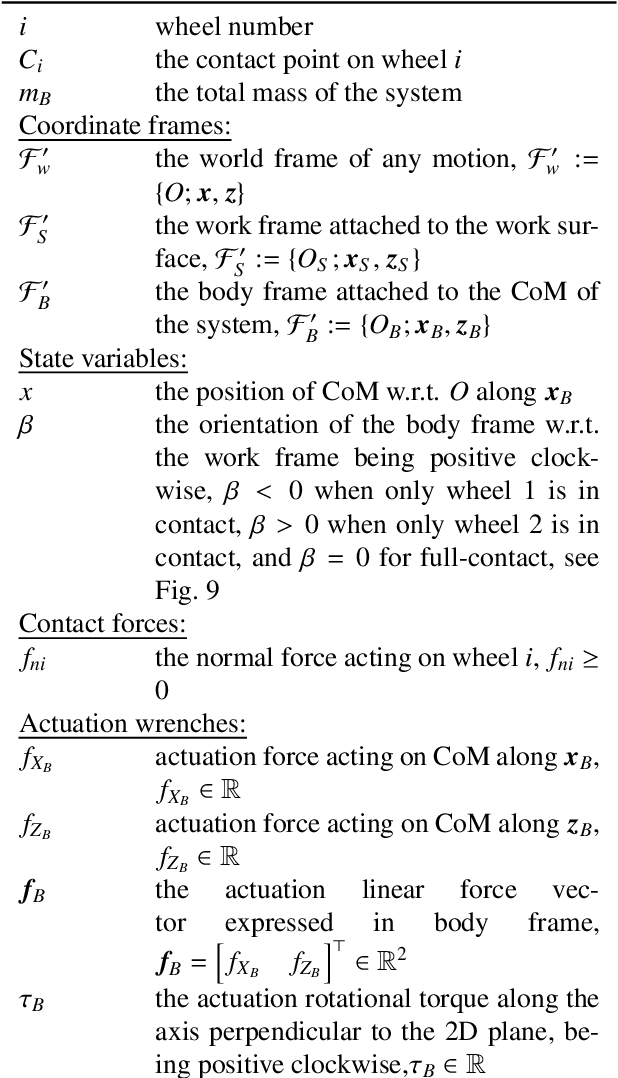

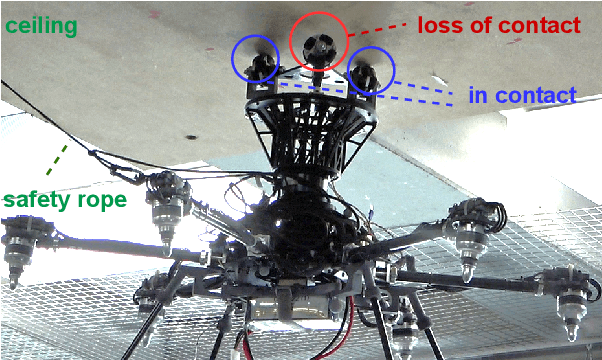

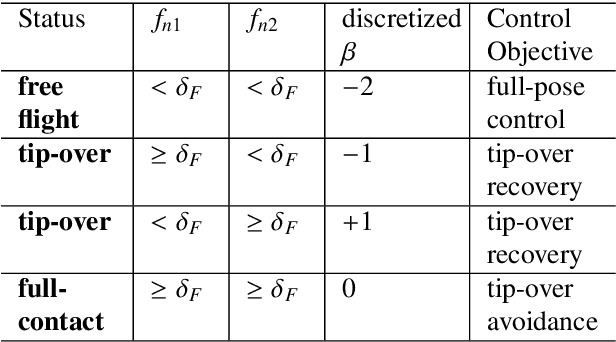

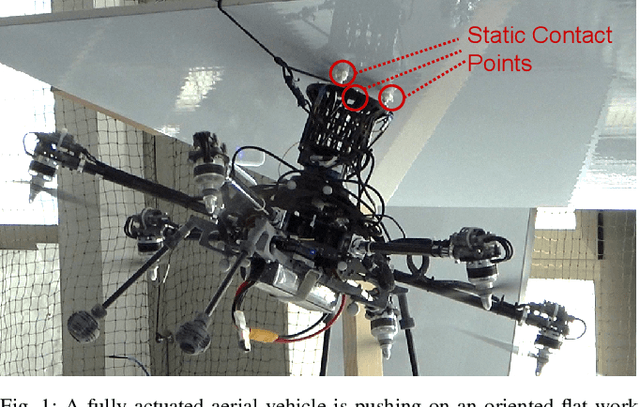

Multi-Wheeled Passive Sliding with Fully-Actuated Aerial Robots: Tip-Over Recovery and Avoidance

May 29, 2024

Abstract:Push-and-slide tasks carried out by fully-actuated aerial robots can be used for inspection and simple maintenance tasks at height, such as non-destructive testing and painting. Often, an end-effector based on multiple non-actuated contact wheels is used to contact the surface. This approach entails challenges in ensuring consistent wheel contact with a surface whose exact orientation and location might be uncertain due to sensor aliasing and drift. Using a standard full-pose controller dependent on the inaccurate surface position and orientation may cause wheels to lose contact during sliding, and subsequently lead to robot tip-over. To address the tip-over issue, we present two approaches: (1) tip-over avoidance guidelines for hardware design, and (2) control for tip-over recovery and avoidance. Physical experiments with a fully-actuated aerial vehicle were executed for a push-and-slide task on a flat surface. The resulting data is used in deriving tip-over avoidance guidelines and designing a simulator that closely captures real-world conditions. We then use the simulator to test the effectiveness and robustness of the proposed approaches in risky scenarios against uncertainties.

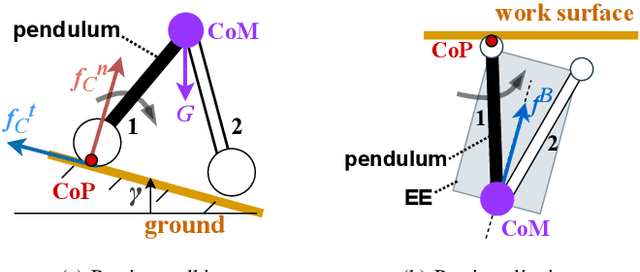

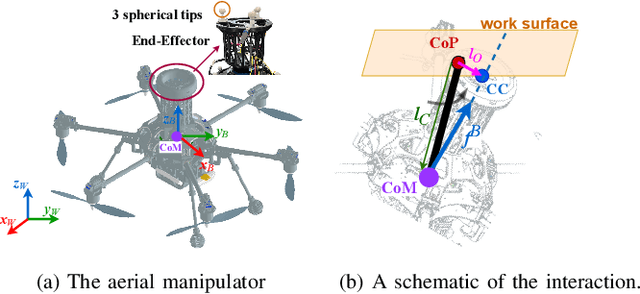

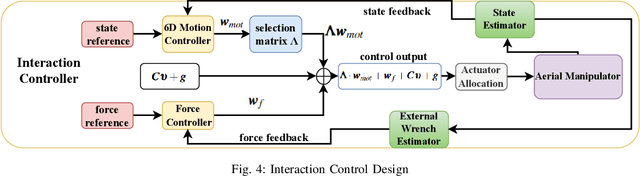

Passive Aligning Physical Interaction of Fully-Actuated Aerial Vehicles for Pushing Tasks

Feb 27, 2024

Abstract:Recently, the utilization of aerial manipulators for performing pushing tasks in non-destructive testing (NDT) applications has seen significant growth. Such operations entail physical interactions between the aerial robotic system and the environment. End-effectors with multiple contact points are often used for placing NDT sensors in contact with a surface to be inspected. Aligning the NDT sensor and the work surface while preserving contact requires that all available contact points at the end-effector tip are in contact with the work surface. With a standard full-pose controller, attitude errors often occur due to perturbations caused by modeling uncertainties, sensor noise, and environmental uncertainties. Even small attitude errors can cause a loss of contact points between the end-effector tip and the work surface. To preserve full alignment amidst these uncertainties, we propose a control strategy which selectively deactivates angular motion control and enables direct force control in specific directions. In particular, we derive two essential conditions to be met, such that the robot can passively align with flat work surfaces, achieving full alignment through the rotation along non-actively controlled axes. Additionally, these conditions serve as hardware design and control guidelines for effectively integrating the proposed control method for practical usage. Real-world experiments are conducted to validate both the control design and the guidelines.

Geranos: a Novel Tilted-Rotors Aerial Robot for the Transportation of Poles

Dec 04, 2023

Abstract:In challenging terrains, constructing structures such as antennas and cable-car masts often requires the use of helicopters to transport loads via ropes. The swinging of the load, exacerbated by wind, impairs positioning accuracy, therefore necessitating precise manual placement by ground crews. This increases costs and risk of injuries. Challenging this paradigm, we present Geranos: a specialized multirotor Unmanned Aerial Vehicle (UAV) designed to enhance aerial transportation and assembly. Geranos demonstrates exceptional prowess in accurately positioning vertical poles, achieving this through an innovative integration of load transport and precision. Its unique ring design mitigates the impact of high pole inertia, while a lightweight two-part grasping mechanism ensures secure load attachment without active force. With four primary propellers countering gravity and four auxiliary ones enhancing lateral precision, Geranos achieves comprehensive position and attitude control around hovering. Our experimental demonstration mimicking antenna/cable-car mast installations showcases Geranos' ability in stacking poles (3 kg, 2 m long) with remarkable sub-5 cm placement accuracy, without the need for manual human intervention.

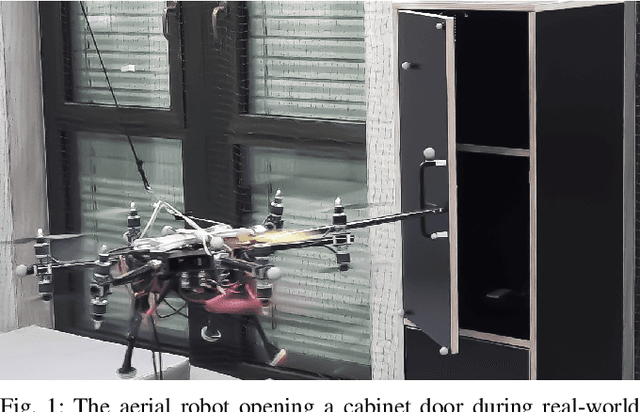

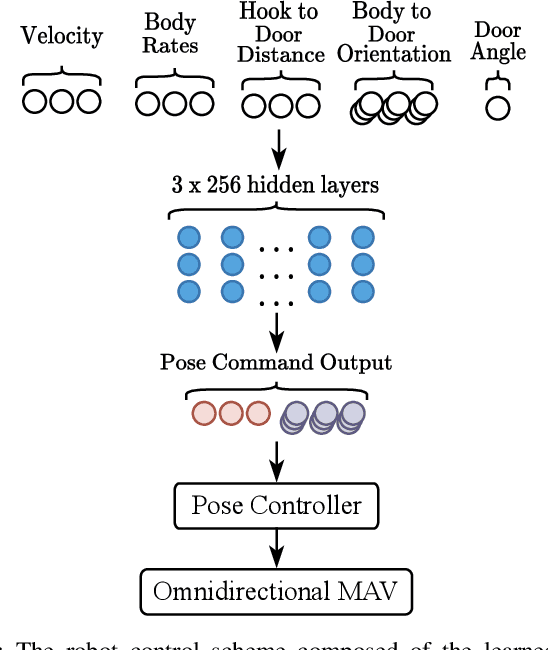

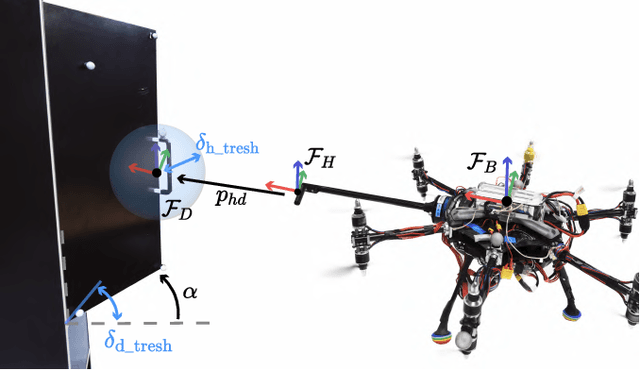

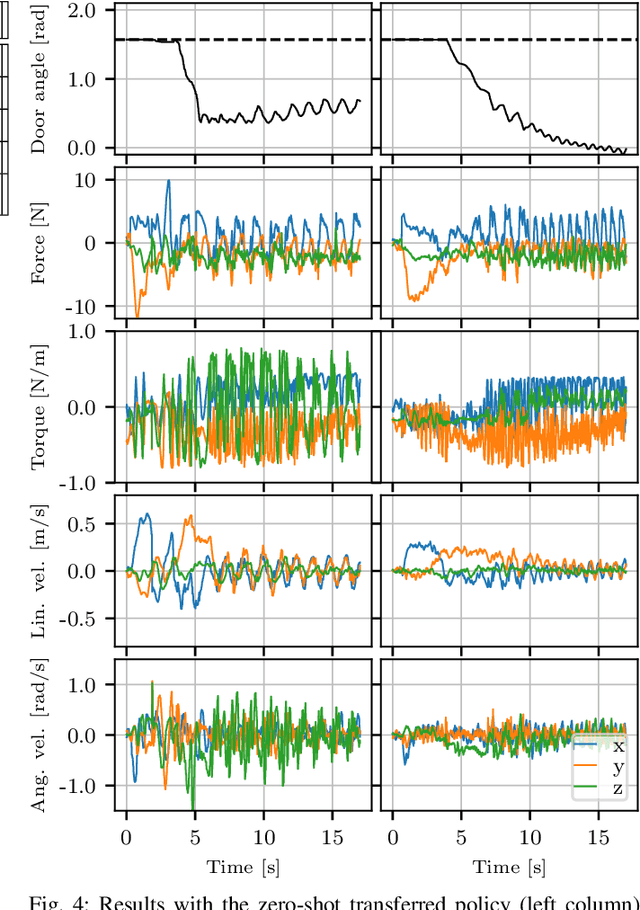

Learning to Open Doors with an Aerial Manipulator

Jul 28, 2023

Abstract:The field of aerial manipulation has seen rapid advances, transitioning from push-and-slide tasks to interaction with articulated objects. So far, when more complex actions are performed, the motion trajectory is usually handcrafted or a result of online optimization methods like Model Predictive Control (MPC) or Model Predictive Path Integral (MPPI) control. However, these methods rely on heuristics or model simplifications to efficiently run on onboard hardware, producing results in acceptable amounts of time. Moreover, they can be sensitive to disturbances and differences between the real environment and its simulated counterpart. In this work, we propose a Reinforcement Learning (RL) approach to learn motion behaviors for a manipulation task while producing policies that are robust to disturbances and modeling errors. Specifically, we train a policy to perform a door-opening task with an Omnidirectional Micro Aerial Vehicle (OMAV). The policy is trained in a physics simulator and experiments are presented both in simulation and running onboard the real platform, investigating the simulation-to-real-world transfer. We compare our method against a state-of-the-art MPPI solution, showing a considerable increase in robustness and speed.

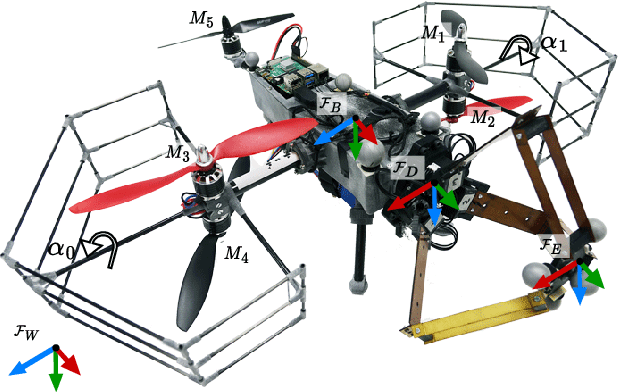

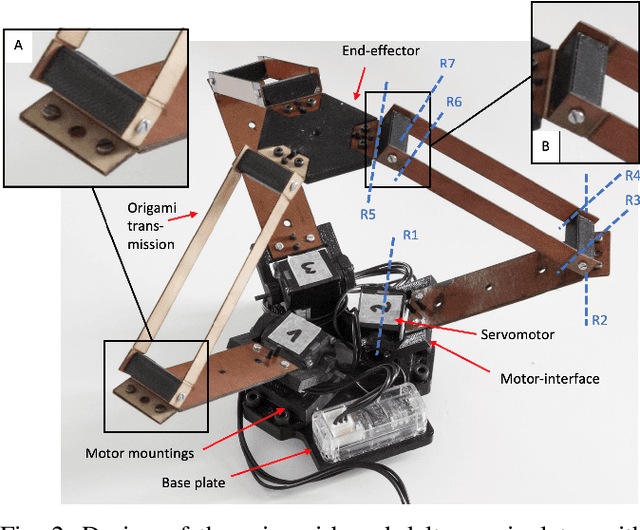

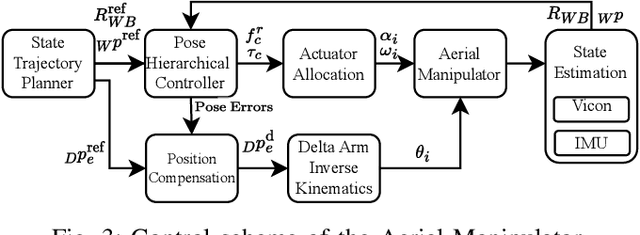

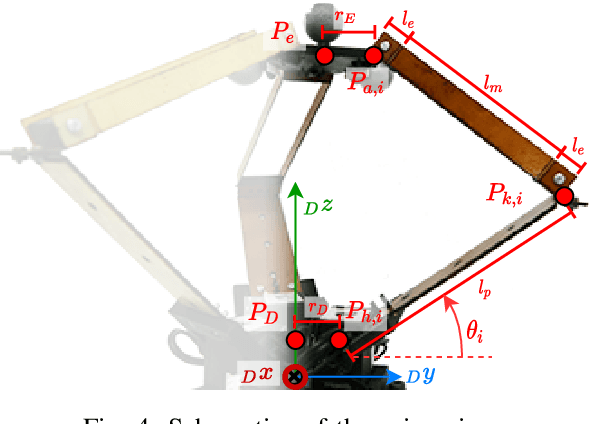

Design and Control of a Micro Overactuated Aerial Robot with an Origami Delta Manipulator

May 03, 2023

Abstract:This work presents the mechanical design and control of a novel small-size and lightweight Micro Aerial Vehicle (MAV) for aerial manipulation. To our knowledge, with a total take-off mass of only 2.0 kg, the proposed system is the most lightweight Aerial Manipulator (AM) that has 8 independently controllable DOF: 5 for the aerial platform and 3 for the articulated arm. We designed the robot to be fully-actuated in the body forward direction. This allows independent pitching and instantaneous force generation, improving the platform's performance during physical interaction. The robotic arm is an origami delta manipulator driven by three servomotors, enabling active motion compensation at the end-effector. Its composite multimaterial links help reduce the weight, while their flexibility allows for compliant aerial interaction with the environment. In particular, the arm's stiffness can be changed according to its configuration. We provide an in-depth discussion of the system design and characterize the stiffness of the delta arm. A control architecture to deal with the platform's overactuation while exploiting the delta arm is presented. Its capabilities are experimentally illustrated both in free flight and physical interaction, highlighting advantages and disadvantages of the origami's folding mechanism.

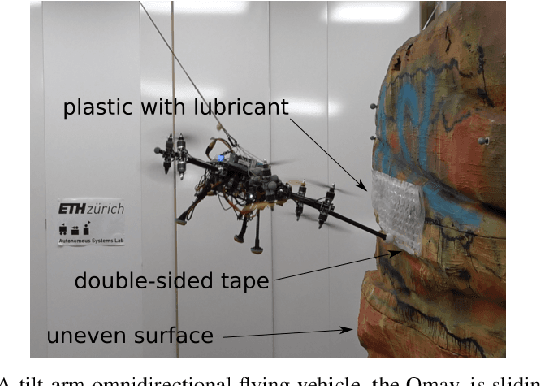

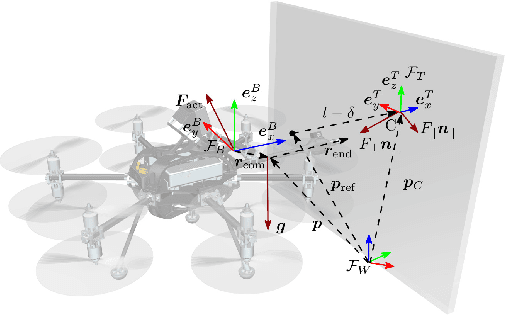

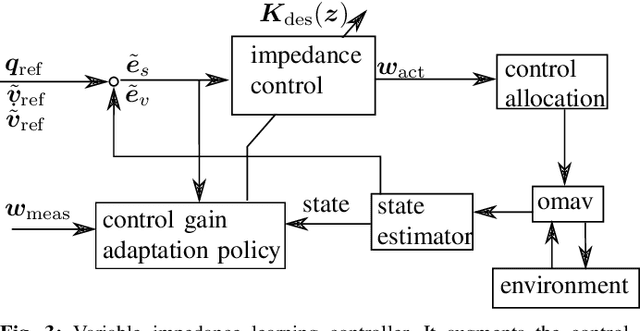

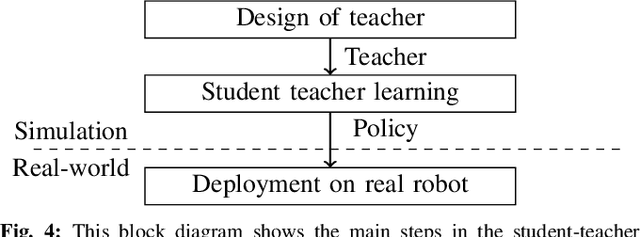

Learning Variable Impedance Control for Aerial Sliding on Uneven Heterogeneous Surfaces by Proprioceptive and Tactile Sensing

Jul 05, 2022

Abstract:The recent development of novel aerial vehicles capable of physically interacting with the environment leads to new applications such as contact-based inspection. These tasks require the robotic system to exchange forces with partially-known environments, which may contain uncertainties including unknown spatially-varying friction properties and discontinuous variations of the surface geometry. Finding a control strategy that is robust against these environmental uncertainties remains an open challenge. This paper presents a learning-based adaptive control strategy for aerial sliding tasks. In particular, the gains of a standard impedance controller are adjusted in real-time by a policy based on the current control signals, proprioceptive measurements, and tactile sensing. This policy is trained in simulation with simplified actuator dynamics in a student-teacher learning setup. The real-world performance of the proposed approach is verified using a tilt-arm omnidirectional flying vehicle. The proposed controller structure combines data-driven and model-based control methods, enabling our approach to successfully transfer directly and without adaptation from simulation to the real platform. Compared to fine-tuned state-of-the-art interaction control methods, we achieve reduced tracking error and improved disturbance rejection.

MPC with Learned Residual Dynamics with Application on Omnidirectional MAVs

Jul 04, 2022

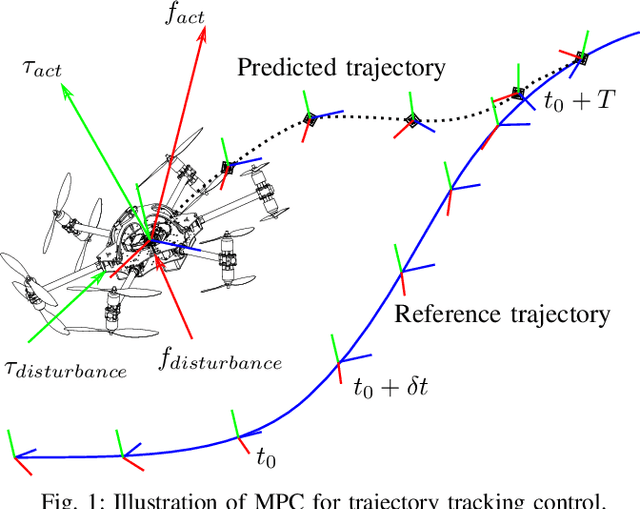

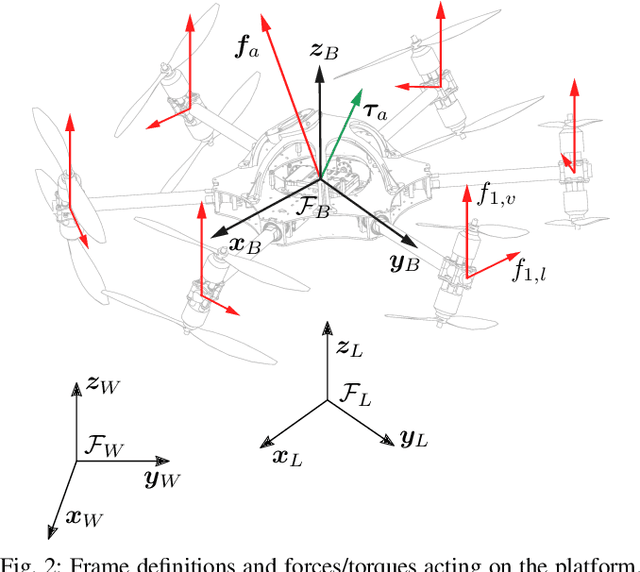

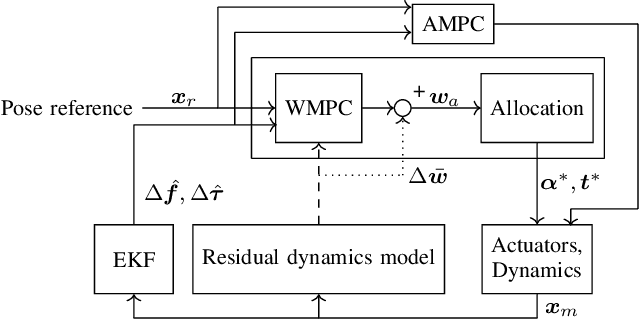

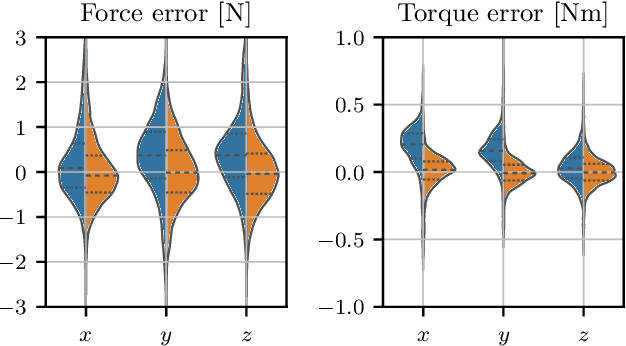

Abstract:The growing field of aerial manipulation often relies on fully actuated or omnidirectional micro aerial vehicles (OMAVs) which can apply arbitrary forces and torques while in contact with the environment. Control methods are usually based on model-free approaches, separating a high-level wrench controller from an actuator allocation. If necessary, disturbances are rejected by online disturbance observers. However, while being general, this approach often produces sub-optimal control commands and cannot incorporate constraints given by the platform design. We present two model-based approaches to control OMAVs for the task of trajectory tracking while rejecting disturbances. The first one optimizes wrench commands and compensates model errors by a model learned from experimental data. The second one optimizes low-level actuator commands, which makes it possible to exploit an allocation nullspace and to consider constraints given by the actuator hardware. The efficacy and real-time feasibility of both approaches are shown and evaluated in real-world experiments.
