Stefano Mintchev

Bioinspired Soft Quadrotors Jointly Unlock Agility, Squeezability, and Collision Resilience

Nov 07, 2025

Abstract: Natural flyers use soft wings to seamlessly enable a wide range of flight behaviours, including agile manoeuvres, squeezing through narrow passageways, and withstanding collisions. In contrast, conventional quadrotor designs rely on rigid frames that support agile flight but inherently limit collision resilience and squeezability, thereby constraining flight capabilities in cluttered environments. Inspired by the anisotropic stiffness and distributed mass-energy structures observed in biological organisms, we introduce FlexiQuad, a soft-frame quadrotor design approach that limits this trade-off. We demonstrate a 405-gram FlexiQuad prototype, three orders of magnitude more compliant than conventional quadrotors, yet capable of acrobatic manoeuvres with peak speeds above 80 km/h and linear and angular accelerations exceeding 3 g and 300 rad/s², respectively. Analysis demonstrates it can replicate accelerations of rigid counterparts up to a thrust-to-weight ratio of 8. Simultaneously, FlexiQuad exhibits fourfold higher collision resilience, surviving frontal impacts at 5 m/s without damage and reducing destabilising forces in glancing collisions by a factor of 39. Its frame can fully compress, enabling flight through gaps as narrow as 70% of its nominal width. Our analysis identifies an optimal structural softness range, from 0.006 to 0.77 N/mm, comparable to that of natural flyers' wings, whereby agility, squeezability, and collision resilience are jointly achieved for FlexiQuad models from 20 to 3000 grams. FlexiQuad expands hovering drone capabilities in complex environments, enabling robust physical interactions without compromising flight performance.

Optical Tactile Sensing for Aerial Multi-Contact Interaction: Design, Integration, and Evaluation

Jan 30, 2024

Abstract: Distributed tactile sensing for multi-force detection is crucial for various aerial robot interaction tasks. However, current contact sensing solutions on drones only use single end-effector sensors and cannot provide distributed multi-contact sensing. We propose an optical tactile sensor, designed to be easily mounted at the bottom of a drone, that features a large, curved, soft sensing surface, a hollow structure, and a new illumination system. Using our software pipeline, multiple contacts can be detected simultaneously even when spaced only 2 cm apart; the pipeline provides real-world quantities, namely 3D contact locations (mm) and 3D force vectors (N), with accuracies of 1.5 mm and 0.17 N, respectively. We demonstrate the sensor's applicability and reliability onboard and in real time with two demos: i) estimating the compliance of different perches, then re-aligning and landing on the stiffer one, and ii) mapping sparse obstacles. The implementation of our distributed tactile sensor represents a significant step toward realizing the full potential of drones as versatile robots capable of interacting with and navigating within complex environments.

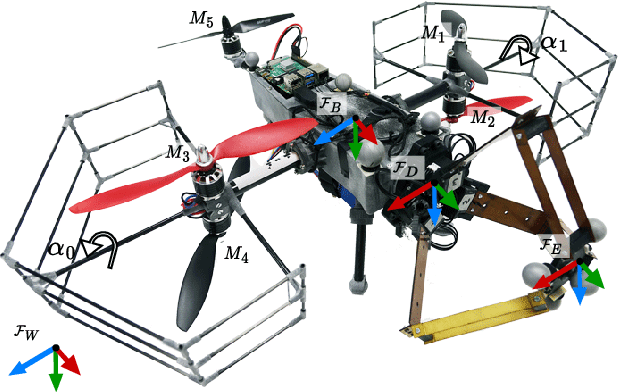

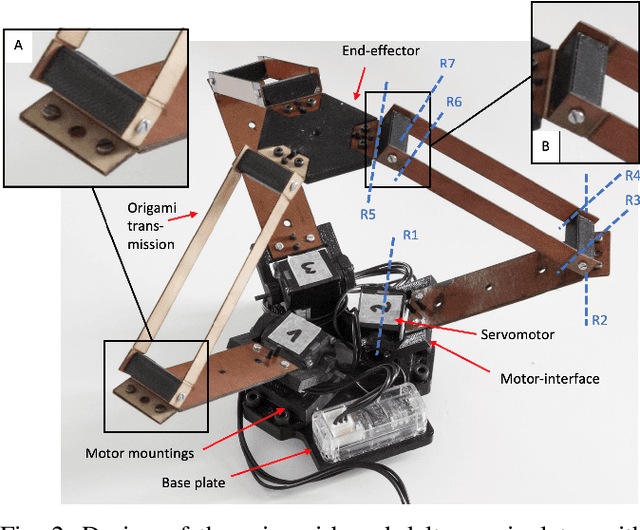

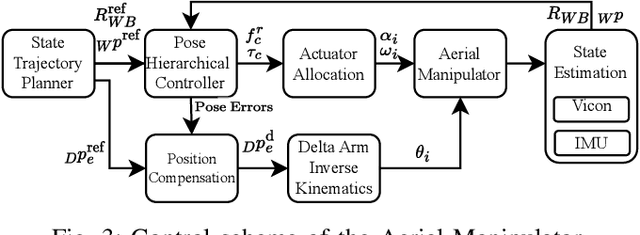

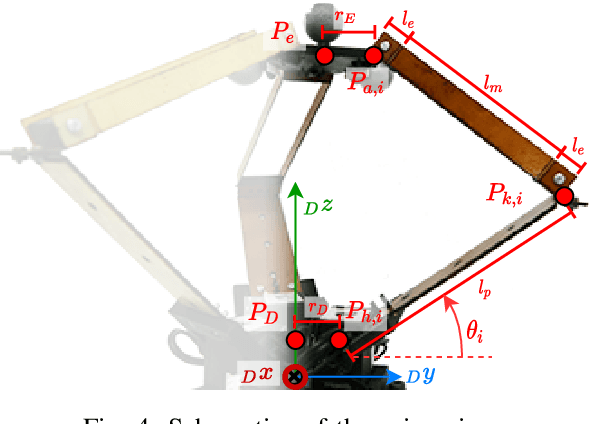

Design and Control of a Micro Overactuated Aerial Robot with an Origami Delta Manipulator

May 03, 2023

Abstract: This work presents the mechanical design and control of a novel small, lightweight Micro Aerial Vehicle (MAV) for aerial manipulation. With a total take-off mass of only 2.0 kg, the proposed system is, to our knowledge, the lightest Aerial Manipulator (AM) with 8 independently controllable DOF: 5 for the aerial platform and 3 for the articulated arm. We designed the robot to be fully actuated in the body forward direction. This allows independent pitching and instantaneous force generation, improving the platform's performance during physical interaction. The robotic arm is an origami delta manipulator driven by three servomotors, enabling active motion compensation at the end-effector. Its composite multimaterial links help reduce weight, while their flexibility allows compliant aerial interaction with the environment. In particular, the arm's stiffness can be adjusted by changing its configuration. We provide an in-depth discussion of the system design and characterize the stiffness of the delta arm. We also present a control architecture that handles the platform's overactuation while exploiting the delta arm. The system's capabilities are demonstrated experimentally in both free flight and physical interaction, highlighting the advantages and disadvantages of the origami folding mechanism.

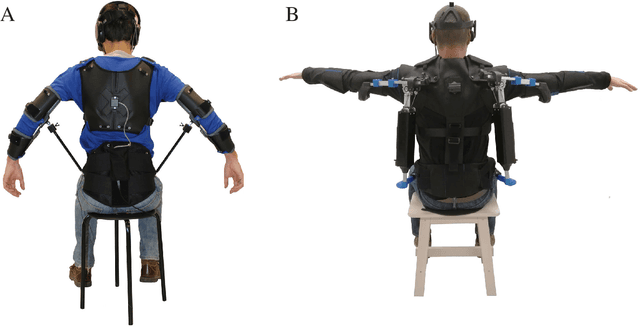

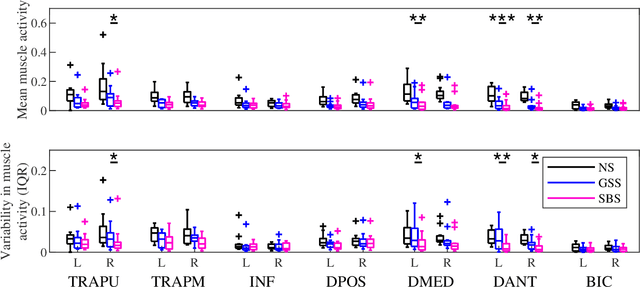

A Portable and Passive Gravity Compensation Arm Support for Drone Teleoperation

Nov 10, 2021

Abstract: Gesture-based interfaces are often used to achieve more natural and intuitive teleoperation of robots. Yet gesture control sometimes requires postures or movements that cause significant user fatigue. In a previous user study, we demonstrated that naïve users can control a fixed-wing drone with torso movements while their arms are spread out. However, this posture induced significant arm fatigue. In this work, we present a passive arm support that compensates for the weight of the arm with a mean torque error below 0.005 N/kg over more than 97% of the range of motion that subjects used to fly, thereby reducing shoulder muscle fatigue by 58% on average. In addition, the arm support is designed to fit users with body dimensions ranging from the 1st-percentile female to the 99th-percentile male. The performance of the arm support is analysed with a mechanical model, and its implementation is validated through both a mechanical characterization and a user study measuring flight performance, shoulder muscle activity, and user acceptance.

Embodied Flight with a Drone

Jul 06, 2017

Abstract: Most human-robot interfaces, such as joysticks and keyboards, require training and constant cognitive effort, and provide only limited awareness of the robot's state and its environment. Embodied interactions, instead of interfaces, could bridge the gap between humans and robots, allowing humans to naturally perceive and act through a distal robotic body. Establishing an embodied interaction and mapping human movements to a non-anthropomorphic robot is particularly challenging. In this paper, we describe a natural and immersive embodied interaction that allows users to control and experience drone flight with their own bodies. The setup uses a commercial flight simulator that tracks hand movements and provides haptic and visual feedback. The paper discusses how to integrate the simulator with a real drone, how to map body movements to drone motion, and how the resulting embodied interaction gives unskilled users a more natural and immersive flight experience than a conventional RC remote controller.
