Silvestro Micera

Hardware-Efficient EMG Decoding for Next-Generation Hand Prostheses

May 31, 2024

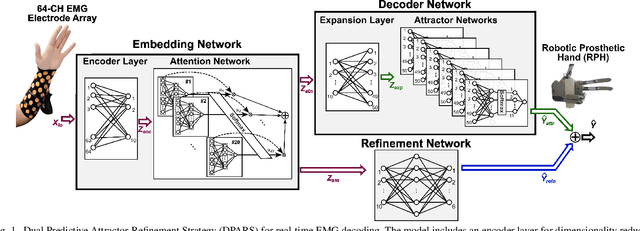

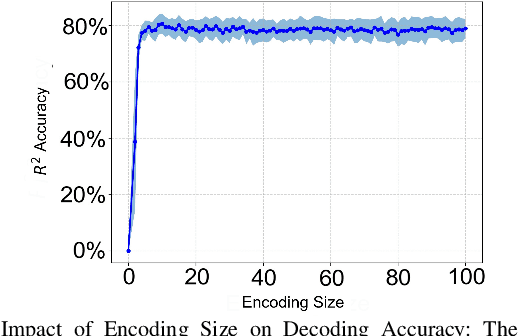

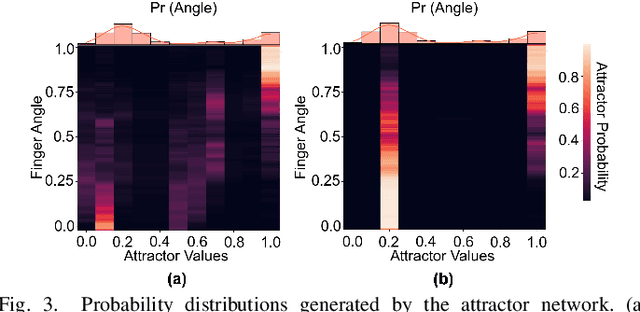

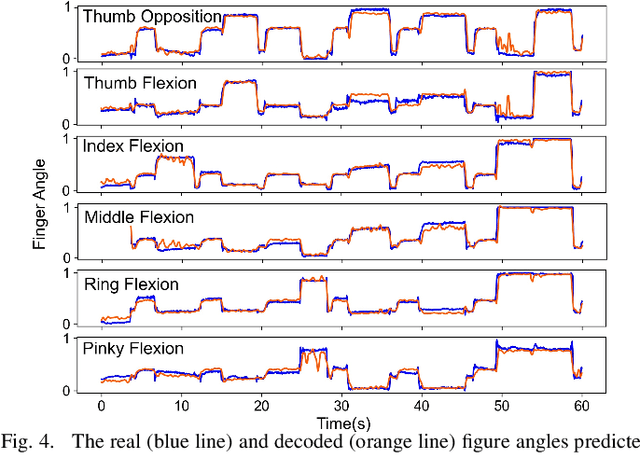

Abstract: Advances in neural engineering have enabled the development of Robotic Prosthetic Hands (RPHs) aimed at restoring hand functionality. Current commercial RPHs offer limited control through basic on/off commands. Recent progress in machine learning enables finger movement decoding with more degrees of freedom, yet the high computational complexity of such models limits their application in portable devices. Future RPH designs must balance portability, low power consumption, and high decoding accuracy to be practical for individuals with disabilities. To this end, we introduce a novel attractor-based neural network to realize on-chip movement decoding for next-generation portable RPHs. The proposed architecture comprises an encoder, an attention layer, an attractor network, and a refinement regressor. We tested our model on four healthy subjects and achieved a decoding accuracy of 80.3%. Our proposed model is over 120 and 50 times more compact than state-of-the-art LSTM and CNN models, respectively, with comparable (or superior) decoding accuracy. It therefore exhibits minimal hardware complexity and can be effectively integrated as a System-on-Chip.

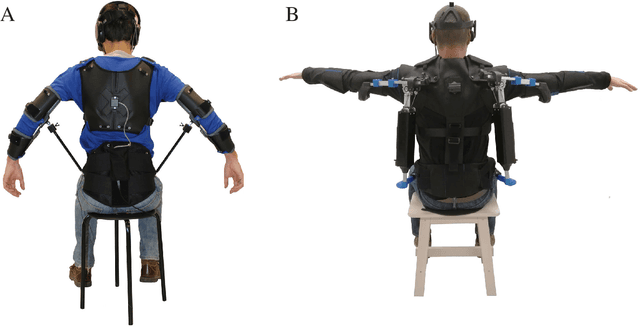

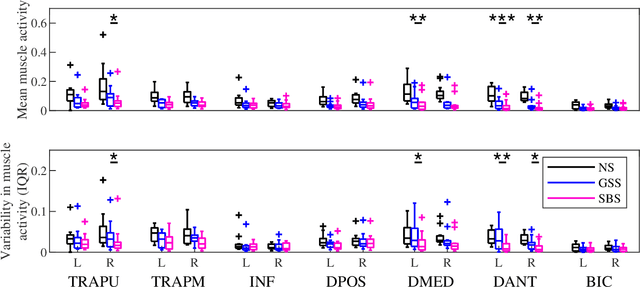

A Portable and Passive Gravity Compensation Arm Support for Drone Teleoperation

Nov 10, 2021

Abstract: Gesture-based interfaces are often used to achieve more natural and intuitive teleoperation of robots. Yet gesture control sometimes requires postures or movements that cause significant fatigue to the user. In a previous user study, we demonstrated that naïve users can control a fixed-wing drone with torso movements while their arms are spread out. However, this posture induced significant arm fatigue. In this work, we present a passive arm support that compensates the arm weight with a mean torque error smaller than 0.005 N/kg for more than 97% of the range of motion used by subjects to fly, thereby reducing muscular fatigue in the shoulder by 58% on average. In addition, this arm support is designed to fit users with body dimensions ranging from the 1st-percentile female to the 99th-percentile male. The performance of the arm support is analyzed with a mechanical model, and its implementation is validated with both a mechanical characterization and a user study that measures flight performance, shoulder muscle activity, and user acceptance.

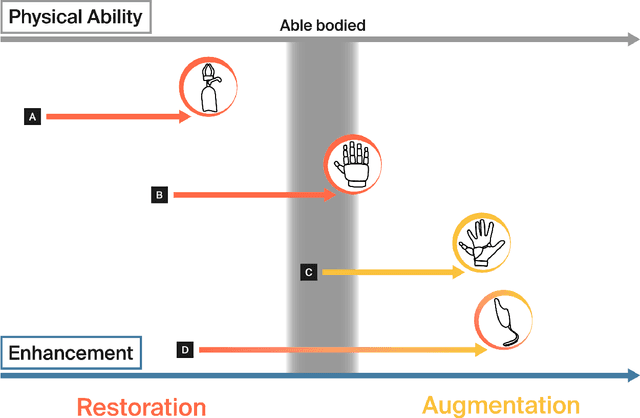

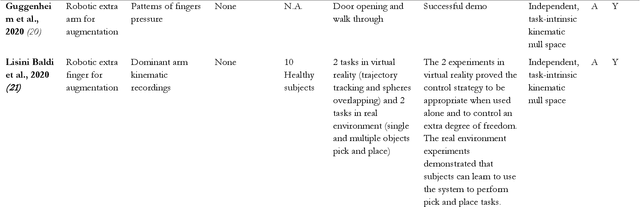

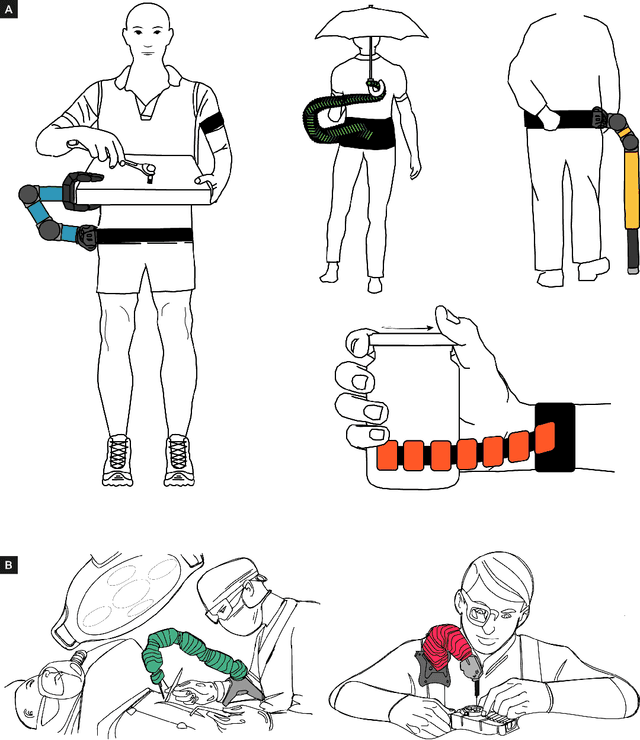

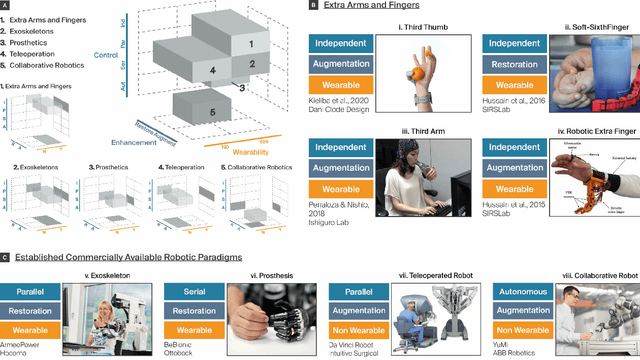

Enhancing human bodies with extra robotic arms and fingers: The Neural Resource Allocation Problem

Mar 31, 2021

Abstract: The emergence of robot-based body augmentation promises exciting innovations that will inform robotics, human-machine interaction, and wearable electronics. Even though augmentative devices like extra robotic arms and fingers in many ways build on restorative technologies, they introduce unique challenges for bidirectional human-machine collaboration. Can humans adapt and learn to operate a new limb collaboratively with their biological limbs without sacrificing their physical abilities? To successfully achieve robotic body augmentation, we need to ensure that by giving a person an additional (artificial) limb, we are not in fact trading off an existing (biological) one. In this manuscript, we introduce the "Neural Resource Allocation" problem, which distinguishes body augmentation from existing robotics paradigms such as teleoperation and prosthetics. We discuss how to allow effective and effortless voluntary control of augmentative devices without compromising voluntary control of the biological body. In reviewing the relevant literature on extra robotic fingers and limbs, we critically assess the range of potential solutions available for the "Neural Resource Allocation" problem. For this purpose, we combine multiple perspectives from engineering and neuroscience with considerations from human-machine interaction, sensory-motor integration, ethics, and law. Altogether, we aim to define common foundations and operating principles for the successful implementation of motor augmentation.
