Domenico Prattichizzo

Wearable Haptics for a Marionette-inspired Teleoperation of Highly Redundant Robotic Systems

Mar 20, 2025

Abstract: The teleoperation of complex, kinematically redundant robots with loco-manipulation capabilities is challenging for human operators, who must learn to operate the robot's many degrees of freedom to accomplish a desired task. In this context, developing an easy-to-learn and easy-to-use human-robot interface is paramount. Recent works introduced a novel teleoperation concept that relies on a virtual physical interaction interface between the human operator and the remote robot, equivalent to a "Marionette" control, but whose feedback on the human side was limited to visual feedback. In this paper, we propose extending the "Marionette" interface with a wearable haptic interface to address the limitations of the previous works. Leveraging the additional haptic feedback modality, the human operator gains full sensorimotor control over the robot, and awareness of the robot's response and interactions with the environment is greatly improved. We evaluated the proposed interface and the related teleoperation framework with naive users, assessing the teleoperation performance and the user experience with and without haptic feedback. The experiments consisted of a loco-manipulation mission with the CENTAURO robot, a hybrid leg-wheel quadruped with a humanoid dual-arm upper body.

* 7 pages, 8 figures

Avatarm: an Avatar With Manipulation Capabilities for the Physical Metaverse

Mar 27, 2023

Abstract: The metaverse is an immersive shared space that remote users can access through virtual and augmented reality interfaces, enabling their avatars to interact with each other and their surroundings. Although digital objects can be manipulated, physical objects cannot be touched, grasped, or moved within the metaverse due to the lack of a suitable interface. This work proposes a solution to overcome this limitation by introducing the concept of a Physical Metaverse enabled by a new interface named "Avatarm". The Avatarm consists of an avatar enhanced with a robotic arm that performs physical manipulation tasks while remaining entirely hidden in the metaverse. The users have the illusion that the avatar is directly manipulating objects without the mediation of a robot. The Avatarm is the first step towards a new metaverse, the "Physical Metaverse", where users can physically interact with each other and with the environment.

Learning Skills from Demonstrations: A Trend from Motion Primitives to Experience Abstraction

Oct 14, 2022

Abstract: The use of robots is shifting from static factory environments to novel concepts such as Human-Robot Collaboration in unstructured settings. Pre-programming all the functionalities for robots becomes impractical, and hence, robots need to learn how to react to new events autonomously, just like humans. However, humans, unlike machines, are naturally skilled in responding to unexpected circumstances based on either experiences or observations. Hence, embedding such anthropoid behaviours into robots entails the development of neuro-cognitive models that emulate motor skills under a robot learning paradigm. Effective encoding of these skills is bound to the proper choice of tools and techniques. This paper studies different motion and behaviour learning methods ranging from Movement Primitives (MP) to Experience Abstraction (EA), applied to different robotic tasks. These methods are scrutinized and then experimentally benchmarked by reconstructing a standard pick-n-place task. Apart from providing a standard guideline for the selection of strategies and algorithms, this paper aims to offer perspectives on their possible extensions and improvements.

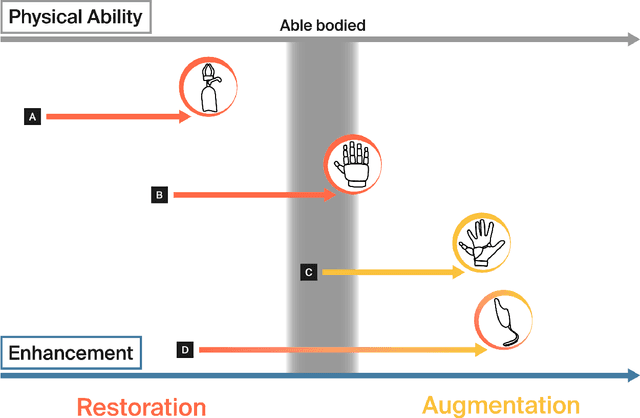

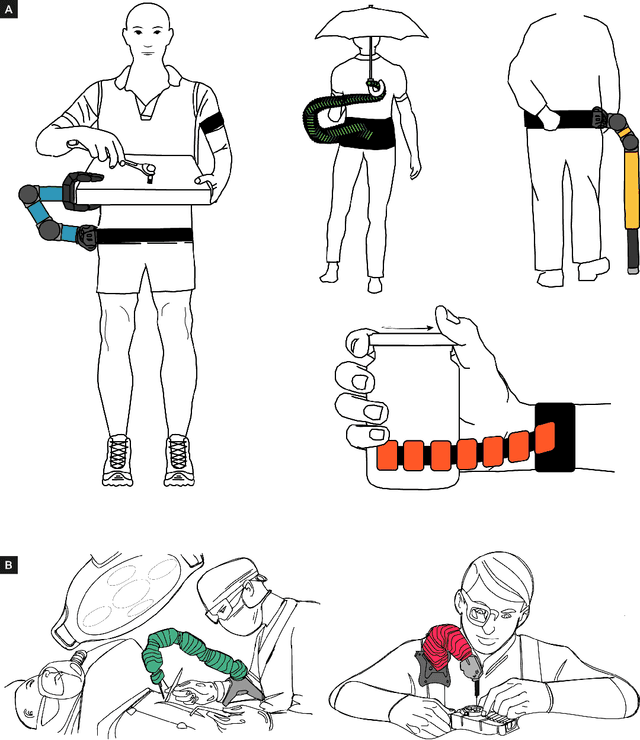

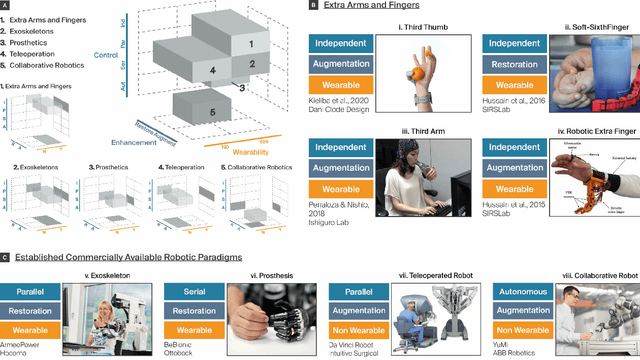

Enhancing human bodies with extra robotic arms and fingers: The Neural Resource Allocation Problem

Mar 31, 2021

Abstract: The emergence of robot-based body augmentation promises exciting innovations that will inform robotics, human-machine interaction, and wearable electronics. Even though augmentative devices like extra robotic arms and fingers in many ways build on restorative technologies, they introduce unique challenges for bidirectional human-machine collaboration. Can humans adapt and learn to operate a new limb collaboratively with their biological limbs without sacrificing their physical abilities? To successfully achieve robotic body augmentation, we need to ensure that by giving a person an additional (artificial) limb, we are not in fact trading off an existing (biological) one. In this manuscript, we introduce the "Neural Resource Allocation" problem, which distinguishes body augmentation from existing robotics paradigms such as teleoperation and prosthetics. We discuss how to allow the effective and effortless voluntary control of augmentative devices without compromising the voluntary control of the biological body. In reviewing the relevant literature on extra robotic fingers and limbs, we critically assess the range of potential solutions available for the "Neural Resource Allocation" problem. For this purpose, we combine multiple perspectives from engineering and neuroscience with considerations from human-machine interaction, sensory-motor integration, ethics and law. Altogether, we aim to define common foundations and operating principles for the successful implementation of motor augmentation.

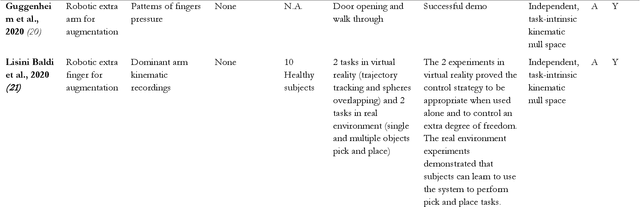

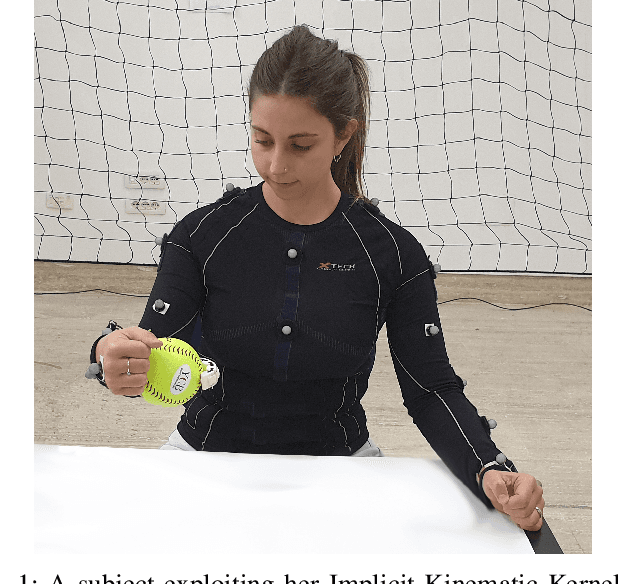

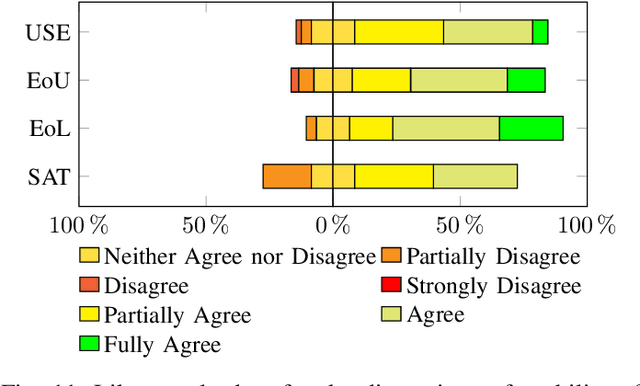

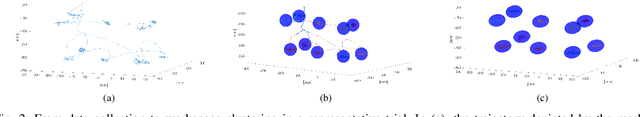

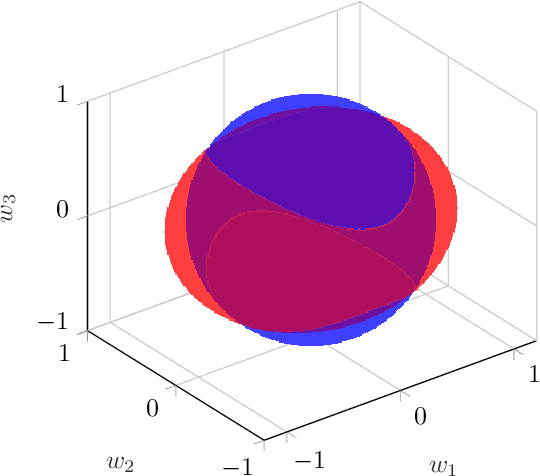

Exploiting Implicit Kinematic Kernel for Controlling a Wearable Robotic Extra-finger

Dec 07, 2020

Abstract: In recent decades, wearable robots have been proposed as technological aids for rehabilitation, assistance, and functional substitution for patients suffering from motor disorders and impairments. Robotic extra-limbs and extra-fingers are representative examples of the technological and scientific achievements in this field. However, successful and intuitive strategies to control and cooperate with these wearable aids are still not well established. Against this background, this work introduces an innovative control strategy based on the exploitation of the residual motor capacity of impaired limbs. We aim at helping subjects with limited mobility and/or physical impairments to control wearable extra-fingers in a natural, comfortable, and intuitive way. The novel idea presented here lies in taking advantage of the redundancy of the human kinematic chain involved in a task to control an extra degree of actuation (DoA). This concept is summarized in the definition of the Implicit Kinematic Kernel (IKK). As a first investigation, we developed a procedure for the real-time analysis of the body posture and the consequent computation of the IKK-based control signal in the case of single-arm tasks. We considered both bio-mechanical and physiological human features and constraints to allow for an efficient and intuitive control approach. Towards a complete evaluation of the proposed control system, we studied the users' capability of exploiting the Implicit Kinematic Kernel in both virtual and real environments, asking subjects to track different reference signals and to control a robotic extra-finger to accomplish pick-and-place tasks. The results demonstrated that the proposed approach is suitable for controlling a wearable robotic extra-finger in a user-friendly way.

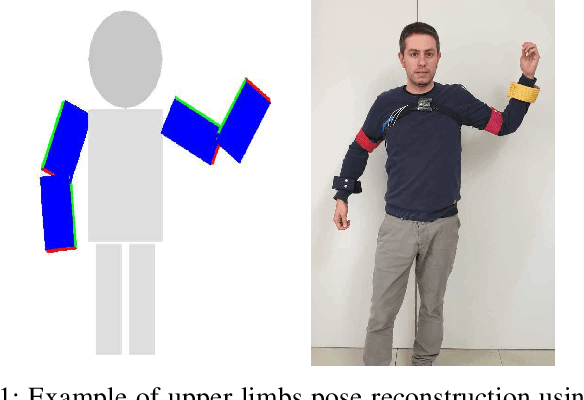

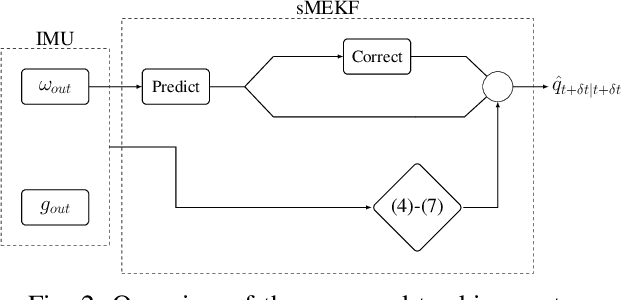

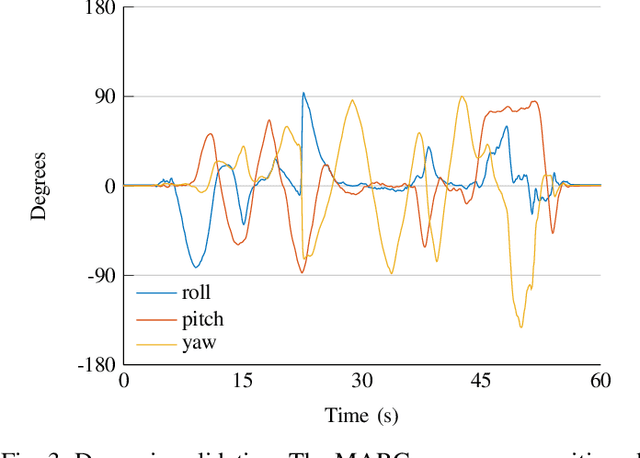

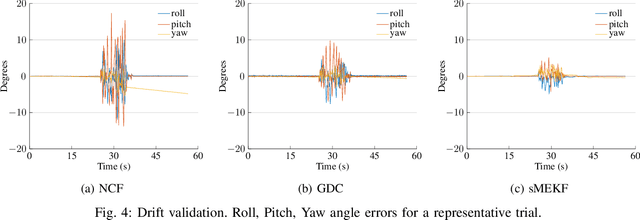

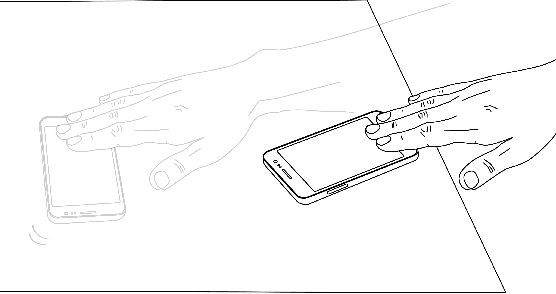

Upper Body Pose Estimation Using Wearable Inertial Sensors and Multiplicative Kalman Filter

Sep 24, 2019

Abstract: Estimating limb pose in a wearable way may benefit multiple areas such as rehabilitation, teleoperation, human-robot interaction, gaming, and many more. Several solutions are commercially available, but they are usually expensive or not wearable/portable. We present a wearable pose estimation system (WePosE), based on inertial measurement units (IMUs), for motion analysis and body tracking. Unlike camera-based approaches, the proposed system does not suffer from occlusion problems or adverse lighting conditions; it is cost-effective and can be used in indoor and outdoor environments. Moreover, since only accelerometers and gyroscopes are used to estimate the orientation, the system can also be used in the presence of iron and magnetic disturbances. An experimental validation using a high-precision optical tracker has been performed. Results confirmed the effectiveness of the proposed approach.

* 8 pages, 8 figures

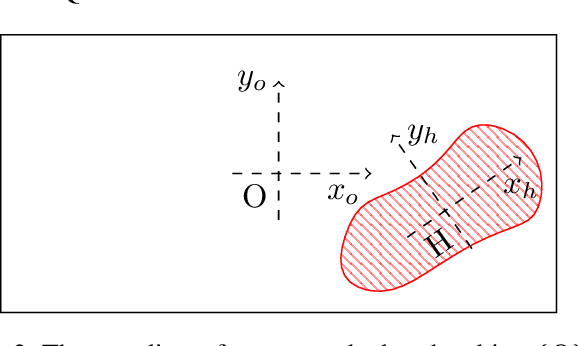

Quasi-static Analysis of Planar Sliding Using Friction Patches

Apr 14, 2019

Abstract: Planar sliding of objects is modeled and analyzed. The model can be used for non-prehensile manipulation of objects lying on a surface. We study possible motions generated by frictional contacts, such as those arising between a soft finger and a flat object on a table. Specifically, using a quasi-static analysis, we derive a hybrid dynamical system to predict the motion of the object. The model can be used to find fixed points of the system and the path taken by the object to reach such configurations. Important information for planning is obtained, such as the conditions under which the object sticks to the friction patch, pivots, or completely slides against it. Experimental results confirm the validity of the model for a wide range of applications.

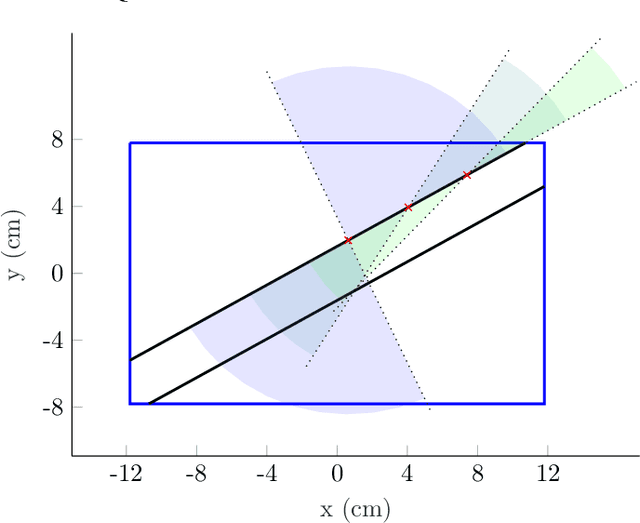

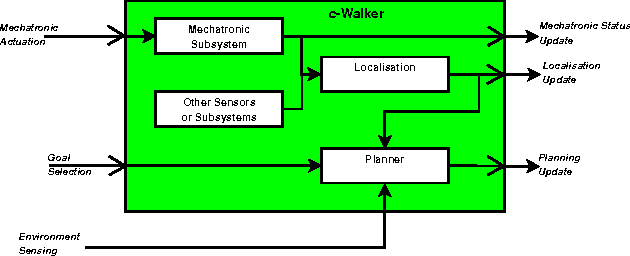

Follow, listen, feel and go: alternative guidance systems for a walking assistance device

Jan 15, 2016

Abstract: In this paper, we propose several solutions to guide an older adult along a safe path using a robotic walking assistant (the c-Walker). We consider four different possibilities to execute the task. One of them is mechanical, with the c-Walker playing an active role in setting the course. The others are based on tactile or acoustic stimuli, and suggest a direction of motion that the user is supposed to take of her own will. We describe the technological basis for the hardware components implementing the different solutions, and show specialized path following algorithms for each of them. The paper reports an extensive user validation activity with a quantitative and qualitative analysis of the different solutions. In this work, we test our system only with young participants to establish a safer methodology that will be used in future studies with older adults.

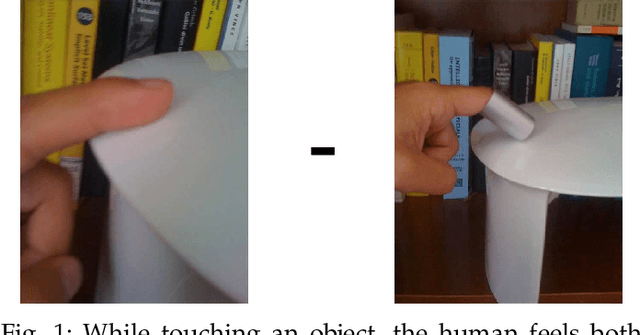

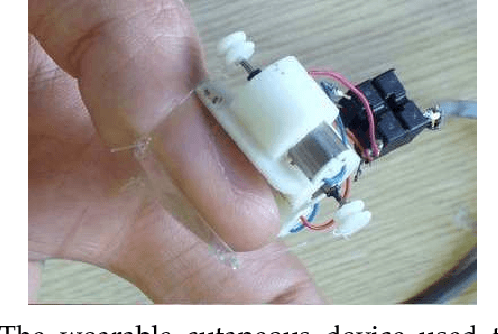

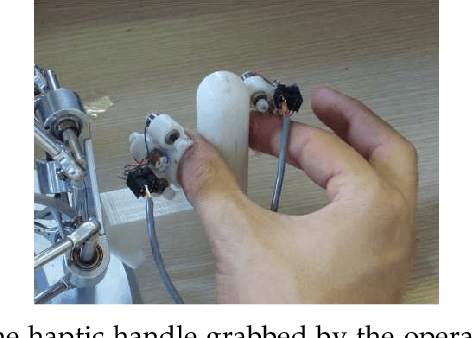

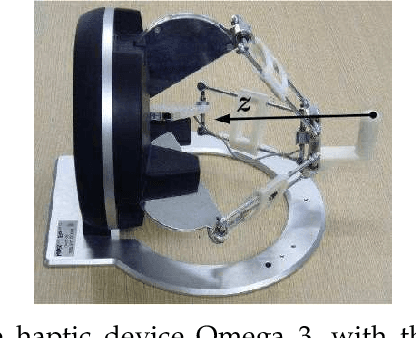

Cutaneous Force Feedback as a Sensory Subtraction Technique in Haptics

Nov 27, 2012

Abstract: A novel sensory substitution technique is presented. Kinesthetic and cutaneous force feedback are substituted by cutaneous feedback (CF) only, provided by two wearable devices able to apply forces to the index finger and the thumb, while holding a handle during a teleoperation task. The force pattern, fed back to the user while using the cutaneous devices, is similar, in terms of intensity and area of application, to the cutaneous force pattern applied to the finger pad while interacting with a haptic device providing both cutaneous and kinesthetic force feedback. The pattern generated using the cutaneous devices can be thought of as a subtraction between the complete haptic feedback (HF) and the kinesthetic part of it. For this reason, we refer to this approach as sensory subtraction instead of sensory substitution. A needle insertion scenario is considered to validate the approach. The haptic device is connected to a virtual environment simulating a needle insertion task. Experiments show that the perception of inserting a needle using the cutaneous-only force feedback is nearly indistinguishable from the one felt by the user while using both cutaneous and kinesthetic feedback. Like most sensory substitution approaches, the proposed sensory subtraction technique also has the advantage of not suffering from the stability issues of teleoperation systems due, for instance, to communication delays. Moreover, experiments show that the sensory subtraction technique outperforms sensory substitution with more conventional visual feedback (VF).
