Nikos Tsagarakis

Vision-Language-Policy Model for Dynamic Robot Task Planning

Dec 22, 2025

Abstract: Bridging the gap between natural language commands and autonomous execution in unstructured environments remains an open challenge for robotics. This requires robots to perceive and reason over the current task scene through multiple modalities, and to plan their behaviors to achieve their intended goals. Traditional robotic task-planning approaches often struggle to bridge low-level execution with high-level task reasoning, and cannot dynamically update task strategies when instructions change during execution, which ultimately limits their versatility and adaptability to new tasks. In this work, we propose a novel language model-based framework for dynamic robot task planning. Our Vision-Language-Policy (VLP) model, based on a vision-language model fine-tuned on real-world data, can interpret semantic instructions and integrate reasoning over the current task scene to generate behavior policies that control the robot to accomplish the task. Moreover, it can dynamically adjust the task strategy in response to changes in the task, enabling flexible adaptation to evolving task requirements. Experiments conducted with different robots and a variety of real-world tasks show that the trained model can efficiently adapt to novel scenarios and dynamically update its policy, demonstrating strong planning autonomy and cross-embodiment generalization. Videos: https://robovlp.github.io/

A Task-Driven, Planner-in-the-Loop Computational Design Framework for Modular Manipulators

Dec 18, 2025

Abstract: Modular manipulators composed of pre-manufactured and interchangeable modules offer high adaptability across diverse tasks. However, their deployment requires generating feasible motions while jointly optimizing morphology and mounted pose under kinematic, dynamic, and physical constraints. Moreover, traditional single-branch designs often extend reach by increasing link length, which can easily violate torque limits at the base joint. To address these challenges, we propose a unified task-driven computational framework that integrates trajectory planning across varying morphologies with the co-optimization of morphology and mounted pose. Within this framework, a hierarchical model predictive control (HMPC) strategy is developed to enable motion planning for both redundant and non-redundant manipulators. For design optimization, the CMA-ES is employed to efficiently explore a hybrid search space consisting of discrete morphology configurations and continuous mounted poses. Meanwhile, a virtual module abstraction is introduced to enable bi-branch morphologies, allowing an auxiliary branch to offload torque from the primary branch and extend the achievable workspace without increasing the capacity of individual joint modules. Extensive simulations and hardware experiments on polishing, drilling, and pick-and-place tasks demonstrate the effectiveness of the proposed framework. The results show that: 1) the framework can generate multiple feasible designs that satisfy kinematic and dynamic constraints while avoiding environmental collisions for given tasks; 2) flexible design objectives, such as maximizing manipulability, minimizing joint effort, or reducing the number of modules, can be achieved by customizing the cost functions; and 3) a bi-branch morphology capable of operating in a large workspace can be realized without requiring more powerful basic modules.

INTENTION: Inferring Tendencies of Humanoid Robot Motion Through Interactive Intuition and Grounded VLM

Aug 06, 2025

Abstract: Traditional control and planning for robotic manipulation heavily rely on precise physical models and predefined action sequences. While effective in structured environments, such approaches often fail in real-world scenarios due to modeling inaccuracies and struggle to generalize to novel tasks. In contrast, humans intuitively interact with their surroundings, demonstrating remarkable adaptability and making efficient decisions through implicit physical understanding. In this work, we propose INTENTION, a novel framework that equips robots with learned interactive intuition and autonomous manipulation in diverse scenarios by integrating Vision-Language Model (VLM)-based scene reasoning with interaction-driven memory. We introduce a Memory Graph to record scenes from previous task interactions, which embodies human-like understanding and decision-making about different tasks in the real world. Meanwhile, we design an Intuitive Perceptor that extracts physical relations and affordances from visual scenes. Together, these components empower robots to infer appropriate interaction behaviors in new scenes without relying on repetitive instructions. Videos: https://robo-intention.github.io

CONCERT: a Modular Reconfigurable Robot for Construction

Apr 07, 2025

Abstract: This paper presents CONCERT, a fully reconfigurable modular collaborative robot (cobot) for multiple on-site operations in a construction site. CONCERT has been designed to support human activities in construction sites by leveraging two main characteristics: high-power density motors and modularity. In this way, the robot is able to perform a wide range of highly demanding tasks by acting as a co-worker of the human operator or by autonomously executing them following user instructions. Most of its versatility comes from the possibility of rapidly changing its kinematic structure by adding or removing passive or active modules. In this way, the robot can be set up in a vast set of morphologies, consequently changing its workspace and capabilities depending on the task to be executed. In the same way, distal end-effectors can be replaced for the execution of different operations. This paper also includes a full description of the software pipeline employed to automatically discover and deploy the robot morphology. Specifically, depending on the modules installed, the robot updates the kinematic, dynamic, and geometric parameters, taking into account the information embedded in each module. In this way, we demonstrate how the robot can be fully reassembled and made operational in less than ten minutes. We validated the CONCERT robot across different use cases, including drilling, sanding, plastering, and collaborative transportation with obstacle avoidance, all performed in a real construction site scenario. We demonstrated the robot's adaptivity and performance in multiple scenarios characterized by different requirements in terms of power and workspace. CONCERT has been designed and built by the Humanoid and Human-Centered Mechatronics Laboratory (HHCM) at the Istituto Italiano di Tecnologia in the context of the European Project Horizon 2020 CONCERT.

Wearable Haptics for a Marionette-inspired Teleoperation of Highly Redundant Robotic Systems

Mar 20, 2025

Abstract: The teleoperation of complex, kinematically redundant robots with loco-manipulation capabilities represents a challenge for human operators, who have to learn how to operate the many degrees of freedom of the robot to accomplish a desired task. In this context, developing an easy-to-learn and easy-to-use human-robot interface is paramount. Recent works introduced a novel teleoperation concept that relies on a virtual physical interaction interface between the human operator and the remote robot, equivalent to a "Marionette" control, but whose feedback on the human side was limited to visual feedback. In this paper, we propose extending the "Marionette" interface with a wearable haptic interface to overcome the limitations of the previous works. Leveraging the additional haptic feedback modality, the human operator gains full sensorimotor control over the robot, and awareness of the robot's response and interactions with the environment is greatly improved. We evaluated the proposed interface and the related teleoperation framework with naive users, assessing the teleoperation performance and the user experience with and without haptic feedback. The conducted experiments consisted of a loco-manipulation mission with the CENTAURO robot, a hybrid leg-wheel quadruped with a humanoid dual-arm upper body.

* 7 pages, 8 figures

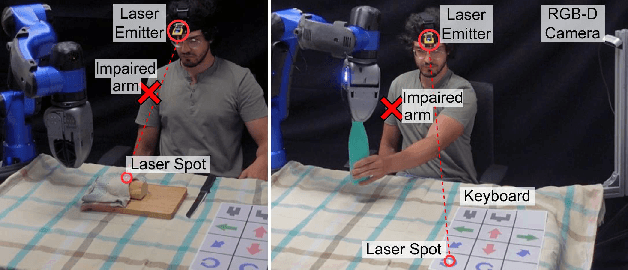

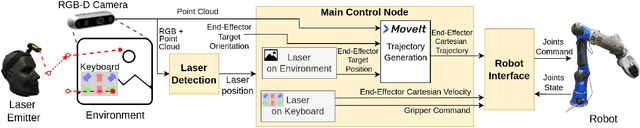

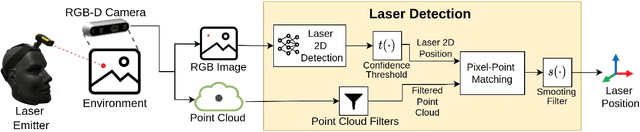

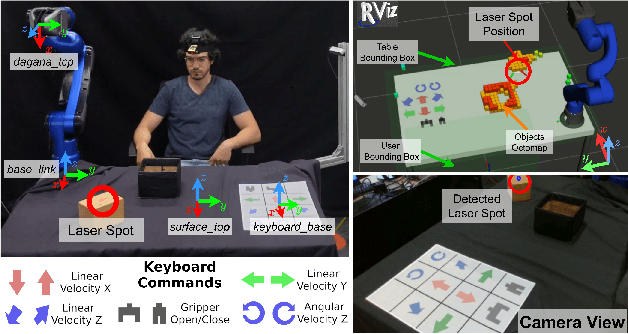

A Laser-guided Interaction Interface for Providing Effective Robot Assistance to People with Upper Limbs Impairments

Mar 20, 2025

Abstract: Robotics has shown significant potential in assisting people with disabilities to enhance their independence and involvement in daily activities. Indeed, a long-term societal impact is expected in home-care assistance with the deployment of intelligent robotic interfaces. This work presents a human-robot interface developed to help people with upper-limb impairments, such as those affected by stroke injuries, in activities of everyday life. The proposed interface leverages a visual servoing guidance component, which utilizes an inexpensive but effective laser emitter device. By projecting the laser on a surface within the workspace of the robot, the user is able to guide the robotic manipulator to desired locations to reach, grasp, and manipulate objects. Considering the targeted users, the laser emitter is worn on the head, enabling intuitive control of the robot motions with head movements that point the laser in the environment; the laser projection is detected with a neural-network-based perception module. The interface implements two control modalities: the first allows the user to select specific locations directly, commanding the robot to reach those points; the second employs a paper keyboard with buttons that can be virtually pressed by pointing the laser at them. These buttons enable more direct control of the Cartesian velocity of the end-effector and provide additional functionalities such as commanding the action of the gripper. The proposed interface is evaluated in a series of manipulation tasks involving a 6DOF assistive robot manipulator equipped with a 1DOF beak-like gripper. The two interface modalities are combined to successfully accomplish tasks requiring bimanual capacity, which is usually affected in people with upper-limb impairments.

* 8 pages, 12 figures
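The paper-keyboard modality described in the abstract above can be illustrated with a minimal sketch. It assumes the perception module has already produced the laser spot position in the keyboard sheet's own 2D frame; the button layout, the `SPEED` value, and all names (`Button`, `KEYBOARD`, `velocity_command`) are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Velocity = Tuple[float, float, float]
ZERO: Velocity = (0.0, 0.0, 0.0)
SPEED = 0.05  # hypothetical end-effector speed in m/s


@dataclass(frozen=True)
class Button:
    """One printed key: an axis-aligned rectangle on the sheet (metres)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    velocity: Velocity  # Cartesian velocity commanded while the key is hit

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Hypothetical layout: two keys side by side with a 1 cm gap between them.
KEYBOARD = [
    Button("+x", 0.00, 0.00, 0.04, 0.04, (SPEED, 0.0, 0.0)),
    Button("-x", 0.05, 0.00, 0.09, 0.04, (-SPEED, 0.0, 0.0)),
]


def velocity_command(laser_xy: Optional[Tuple[float, float]]) -> Velocity:
    """Map the detected laser spot to a velocity; stop if no key is hit."""
    if laser_xy is None:  # laser not detected in this frame
        return ZERO
    for button in KEYBOARD:
        if button.contains(*laser_xy):
            return button.velocity
    return ZERO
```

Returning zero velocity whenever the spot is lost or falls between keys is a conservative design choice for this sketch, so that the arm stops as soon as the user looks away.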

Dynamic object goal pushing with mobile manipulators through model-free constrained reinforcement learning

Feb 03, 2025

Abstract: Non-prehensile pushing to move and reorient objects to a goal is a versatile loco-manipulation skill. In the real world, the object's physical properties and friction with the floor contain significant uncertainties, which makes the task challenging for a mobile manipulator. In this paper, we develop a learning-based controller for a mobile manipulator to move an unknown object to a desired position and yaw orientation through a sequence of pushing actions. The proposed controller for the robotic arm and the mobile base motion is trained using a constrained Reinforcement Learning (RL) formulation. We demonstrate its capability in experiments with a quadrupedal robot equipped with an arm. The learned policy achieves a success rate of 91.35% in simulation and at least 80% on hardware in challenging scenarios. Through our extensive hardware experiments, we show that the approach demonstrates high robustness against unknown objects of different masses, materials, sizes, and shapes. It reactively discovers the pushing location and direction, thus achieving contact-rich behavior while observing only the pose of the object. Additionally, we demonstrate the adaptive behavior of the learned policy towards preventing the object from toppling.

Grounding Language Models in Autonomous Loco-manipulation Tasks

Sep 02, 2024

Abstract: Humanoid robots with behavioral autonomy have consistently been regarded as ideal collaborators in our daily lives and promising representations of embodied intelligence. Compared to fixed-base robotic arms, humanoid robots offer a larger operational space while significantly increasing the difficulty of control and planning. Despite the rapid progress towards general-purpose humanoid robots, most studies remain focused on locomotion ability, with few investigations into whole-body coordination and task planning, thus limiting the potential to demonstrate long-horizon tasks involving both mobility and manipulation under open-ended verbal instructions. In this work, we propose a novel framework that learns, selects, and plans behaviors based on tasks in different scenarios. We combine reinforcement learning (RL) with whole-body optimization to generate robot motions and store them in a motion library. We further leverage the planning and reasoning features of the large language model (LLM), constructing a hierarchical task graph that comprises a series of motion primitives to bridge lower-level execution with higher-level planning. Experiments in simulation and the real world using the CENTAURO robot show that the language-model-based planner can efficiently adapt to new loco-manipulation tasks, demonstrating high autonomy from free-text commands in unstructured scenes.

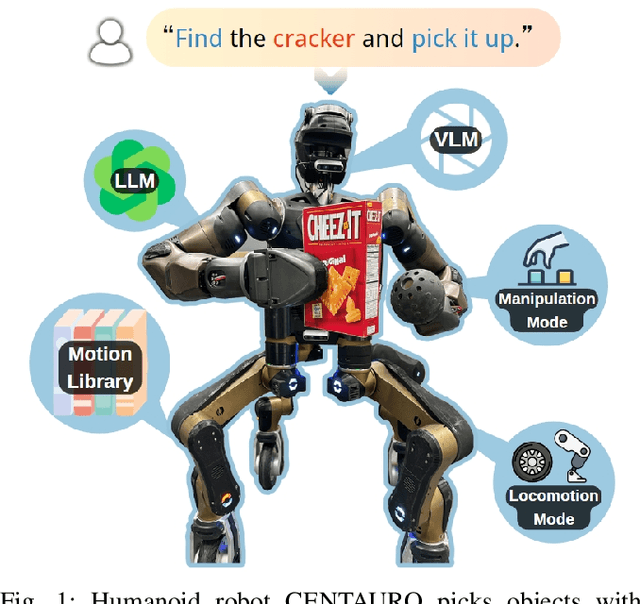

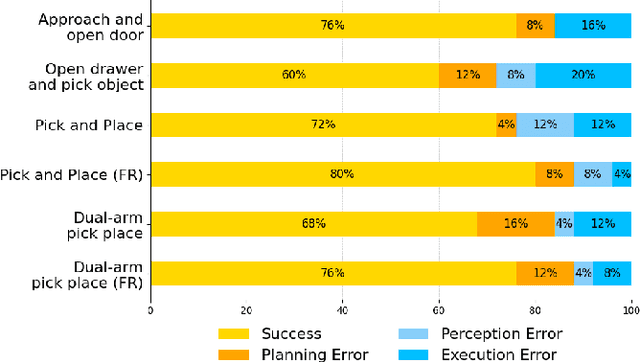

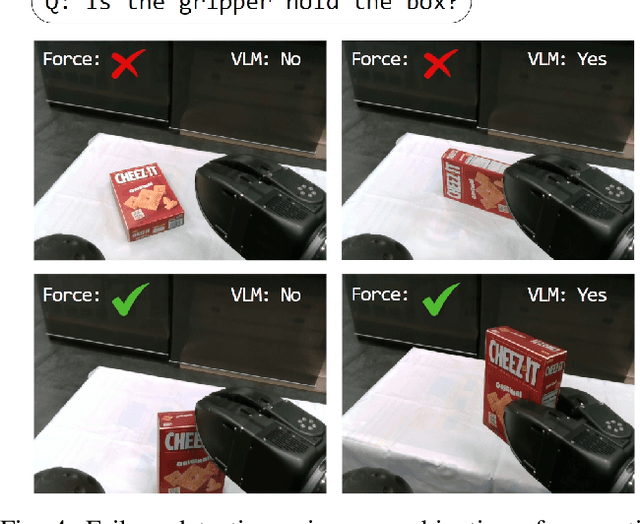

Autonomous Behavior Planning For Humanoid Loco-manipulation Through Grounded Language Model

Aug 15, 2024

Abstract: Enabling humanoid robots to autonomously perform loco-manipulation in unstructured environments is crucial and highly challenging for achieving embodied intelligence. This involves robots being able to plan their actions and behaviors in long-horizon tasks while using multi-modality to perceive deviations between task execution and high-level planning. Recently, large language models (LLMs) have demonstrated powerful planning and reasoning capabilities for comprehending and processing semantic information in robot control tasks, as well as usable analytical judgment and decision-making over multi-modal inputs. To leverage the power of LLMs towards humanoid loco-manipulation, we propose a novel language-model-based framework that enables robots to autonomously plan behaviors and low-level execution under given textual instructions, while observing and correcting failures that may occur during task execution. To systematically evaluate this framework in grounding LLMs, we created a robot 'action' and 'sensing' behavior library for task planning and conducted mobile manipulation experiments in both simulated and real environments using the CENTAURO robot, verifying the effectiveness and applicability of this approach in robotic tasks with autonomous behavioral planning.

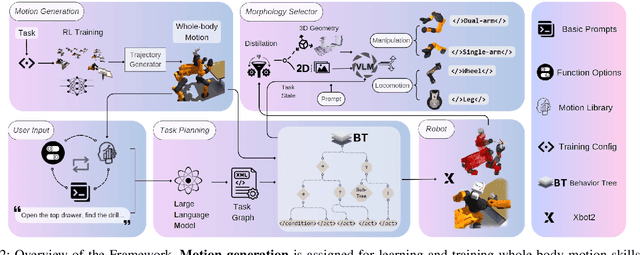
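The pairing of 'action' and 'sensing' behaviors with failure correction, as described in the abstract above, can be sketched as follows. This is only an illustration under strong assumptions: the paper's planner is language-model based, whereas this sketch substitutes a plain retry loop for the correction step, and every name here (`execute_plan`, `ACTIONS`, `SENSING`, the toy `world` dictionary) is hypothetical.

```python
from typing import Callable, Dict, List, Tuple

ActionFn = Callable[[dict], None]   # changes the (toy) world state
SensingFn = Callable[[dict], bool]  # reports whether the expected effect holds


def execute_plan(
    plan: List[Tuple[str, str]],
    actions: Dict[str, ActionFn],
    sensing: Dict[str, SensingFn],
    world: dict,
    max_retries: int = 2,
) -> List[str]:
    """Run each (action, check) pair; retry the action when the check fails."""
    log: List[str] = []
    for action_name, check_name in plan:
        for attempt in range(1, max_retries + 2):
            actions[action_name](world)
            if sensing[check_name](world):
                log.append(f"{action_name}: ok (attempt {attempt})")
                break
            log.append(f"{action_name}: failed (attempt {attempt})")
        else:
            # All attempts exhausted without the check ever passing.
            raise RuntimeError(f"{action_name} did not succeed")
    return log


# Toy behavior library: the first grasp slips, the second one holds.
def move_to_table(world: dict) -> None:
    world["at_table"] = True


def grasp(world: dict) -> None:
    world["grasp_attempts"] += 1
    world["holding"] = world["grasp_attempts"] >= 2


ACTIONS = {"move_to_table": move_to_table, "grasp": grasp}
SENSING = {
    "at_table": lambda w: w["at_table"],
    "holding": lambda w: w["holding"],
}

world = {"at_table": False, "holding": False, "grasp_attempts": 0}
log = execute_plan(
    [("move_to_table", "at_table"), ("grasp", "holding")],
    ACTIONS, SENSING, world,
)
```

In this toy run the log records one failed grasp followed by a successful retry, which is the kind of execution deviation a sensing behavior is meant to expose to the planner.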

HYPERmotion: Learning Hybrid Behavior Planning for Autonomous Loco-manipulation

Jun 20, 2024

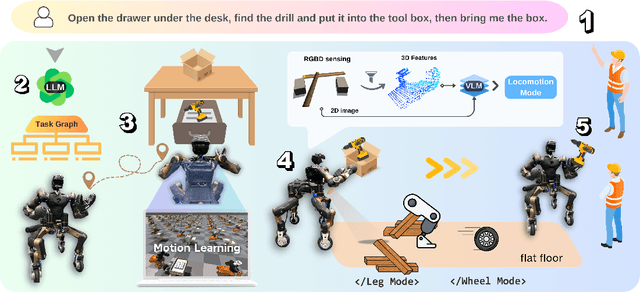

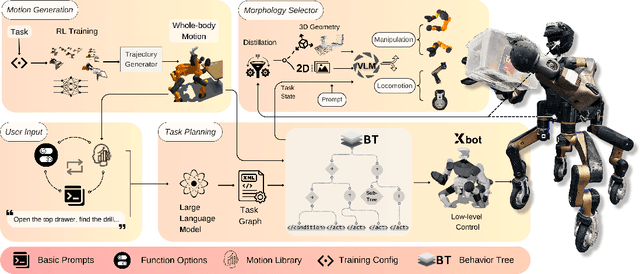

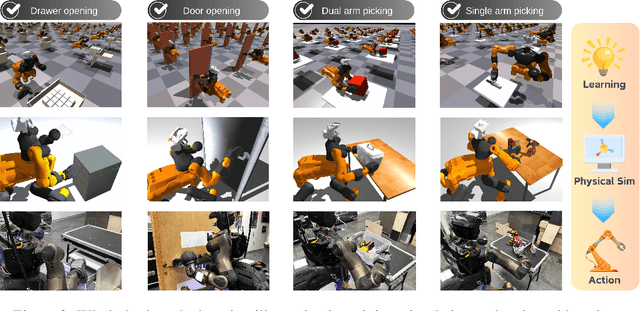

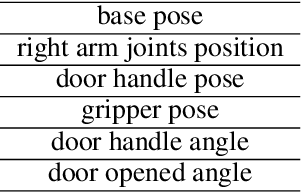

Abstract: Enabling robots to autonomously perform hybrid motions in diverse environments can be beneficial for long-horizon tasks such as material handling, household chores, and work assistance. This requires extensive exploitation of intrinsic motion capabilities, extraction of affordances from rich environmental information, and planning of physical interaction behaviors. Although recent progress has demonstrated impressive humanoid whole-body control abilities, existing approaches still struggle to achieve versatility and adaptability for new tasks. In this work, we propose HYPERmotion, a framework that learns, selects, and plans behaviors based on tasks in different scenarios. We combine reinforcement learning with whole-body optimization to generate motion for 38 actuated joints and create a motion library to store the learned skills. We apply the planning and reasoning features of large language models (LLMs) to complex loco-manipulation tasks, constructing a hierarchical task graph that comprises a series of primitive behaviors to bridge lower-level execution with higher-level planning. We leverage the interaction of distilled spatial geometry and 2D observation with a visual language model (VLM) to ground knowledge into a robotic morphology selector that chooses appropriate actions in single- or dual-arm, legged or wheeled locomotion. Experiments in simulation and the real world show that learned motions can efficiently adapt to new tasks, demonstrating high autonomy from free-text commands in unstructured scenes. Videos and website: hy-motion.github.io/
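To make the idea of a hierarchical task graph over primitive behaviors more concrete, here is a minimal sketch. It assumes a two-level structure (subtasks with ordering constraints, each expanding into primitives from a motion library) and uses Python's standard `graphlib` (Python 3.9+) for ordering. The skill names, subtask names, and the `flatten` helper are hypothetical; in the paper the graph is constructed by an LLM, which is not modelled here.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

# Hypothetical motion library: names of skills assumed to be already learned.
MOTION_LIBRARY: Set[str] = {"walk_to", "open_door", "grasp", "place"}

# Upper level: each subtask expands into an ordered list of primitives.
SUBTASKS: Dict[str, List[str]] = {
    "reach_shelf": ["walk_to"],
    "fetch_box": ["grasp"],
    "deliver_box": ["walk_to", "place"],
}

# Ordering constraints: subtask -> subtasks that must finish first.
DEPENDS_ON: Dict[str, Set[str]] = {
    "reach_shelf": set(),
    "fetch_box": {"reach_shelf"},
    "deliver_box": {"fetch_box"},
}


def flatten(
    subtasks: Dict[str, List[str]],
    depends_on: Dict[str, Set[str]],
    library: Set[str],
) -> List[Tuple[str, str]]:
    """Order subtasks topologically and expand them into library primitives."""
    sequence: List[Tuple[str, str]] = []
    for subtask in TopologicalSorter(depends_on).static_order():
        for primitive in subtasks[subtask]:
            if primitive not in library:
                raise KeyError(f"unknown primitive: {primitive}")
            sequence.append((subtask, primitive))
    return sequence


plan = flatten(SUBTASKS, DEPENDS_ON, MOTION_LIBRARY)
```

Checking each primitive against the library before it enters the plan keeps the high-level graph grounded in motions the robot can actually execute, which is the bridging role the abstract attributes to the task graph.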
