Liana Bertoni

CONCERT: a Modular Reconfigurable Robot for Construction

Apr 07, 2025

Abstract: This paper presents CONCERT, a fully reconfigurable modular collaborative robot (cobot) for multiple on-site operations in a construction site. CONCERT has been designed to support human activities in construction sites by leveraging two main characteristics: high-power density motors and modularity. In this way, the robot is able to perform a wide range of highly demanding tasks by acting as a co-worker of the human operator or by autonomously executing them following user instructions. Most of its versatility comes from the possibility of rapidly changing its kinematic structure by adding or removing passive or active modules. In this way, the robot can be set up in a vast set of morphologies, consequently changing its workspace and capabilities depending on the task to be executed. In the same way, distal end-effectors can be replaced for the execution of different operations. This paper also includes a full description of the software pipeline employed to automatically discover and deploy the robot morphology. Specifically, depending on the modules installed, the robot updates the kinematic, dynamic, and geometric parameters, taking into account the information embedded in each module. In this way, we demonstrate how the robot can be fully reassembled and made operational in less than ten minutes. We validated the CONCERT robot across different use cases, including drilling, sanding, plastering, and collaborative transportation with obstacle avoidance, all performed in a real construction site scenario. We demonstrated the robot's adaptivity and performance in multiple scenarios characterized by different requirements in terms of power and workspace. CONCERT has been designed and built by the Humanoid and Human-Centered Mechatronics Laboratory (HHCM) at the Istituto Italiano di Tecnologia in the context of the European Project Horizon 2020 CONCERT.

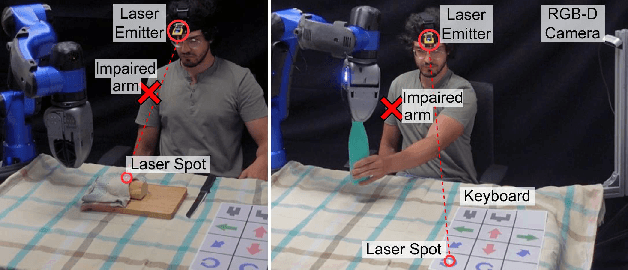

A Laser-guided Interaction Interface for Providing Effective Robot Assistance to People with Upper Limbs Impairments

Mar 20, 2025

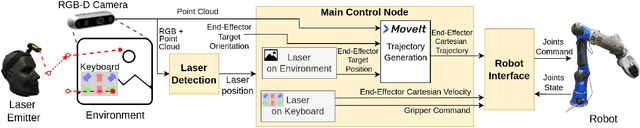

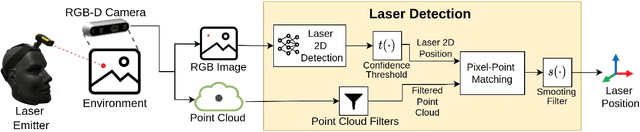

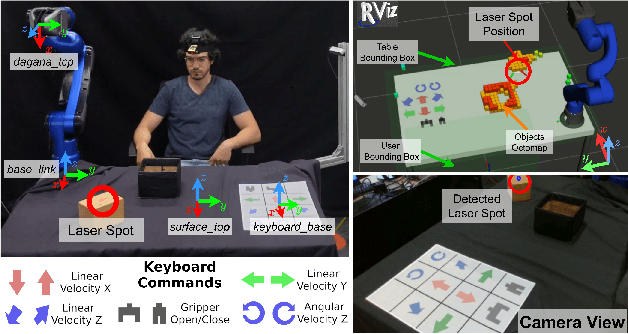

Abstract: Robotics has shown significant potential in assisting people with disabilities to enhance their independence and involvement in daily activities. Indeed, a long-term societal impact is expected in home-care assistance with the deployment of intelligent robotic interfaces. This work presents a human-robot interface developed to help people with upper limbs impairments, such as those affected by stroke injuries, in activities of everyday life. The proposed interface leverages a visual servoing guidance component, which utilizes an inexpensive but effective laser emitter device. By projecting the laser on a surface within the workspace of the robot, the user is able to guide the robotic manipulator to desired locations, to reach, grasp and manipulate objects. Considering the targeted users, the laser emitter is worn on the head, enabling intuitive control of the robot's motions through head movements that point the laser in the environment; the laser's projection is detected by a neural-network-based perception module. The interface implements two control modalities: the first allows the user to select specific locations directly, commanding the robot to reach those points; the second employs a paper keyboard with buttons that can be virtually pressed by pointing the laser at them. These buttons enable more direct control of the Cartesian velocity of the end-effector and provide additional functionalities such as commanding the action of the gripper. The proposed interface is evaluated in a series of manipulation tasks involving a 6-DOF assistive robot manipulator equipped with a 1-DOF beak-like gripper. The two interface modalities are combined to successfully accomplish tasks requiring bimanual capacity, which is usually affected in people with upper limbs impairments.

* 8 pages, 12 figures
