Claudio Pacchierotti

CNRS

Evaluation of Human-Robot Interfaces based on 2D/3D Visual and Haptic Feedback for Aerial Manipulation

Oct 20, 2024

Abstract:Most telemanipulation systems for aerial robots provide the operator with only 2D screen visual information. The lack of richer information about the robot's status and environment can limit human awareness and, in turn, task performance. While the pilot's experience can often compensate for this reduced flow of information, providing richer feedback is expected to reduce the cognitive workload and offer a more intuitive experience overall. This work aims to understand the significance of providing additional pieces of information during aerial telemanipulation, namely (i) 3D immersive visual feedback about the robot's surroundings through mixed reality (MR) and (ii) 3D haptic feedback about the robot interaction with the environment. To do so, we developed a human-robot interface able to provide this information. First, we demonstrate its potential in a real-world manipulation task requiring sub-centimeter-level accuracy. Then, we evaluate the individual effect of MR vision and haptic feedback on both dexterity and workload through a human subjects study involving a virtual block transportation task. Results show that both 3D MR vision and haptic feedback improve the operator's dexterity in the considered teleoperated aerial interaction tasks. Nevertheless, pilot experience remains the most significant factor.

Transferring human emotions to robot motions using Neural Policy Style Transfer

Feb 01, 2024

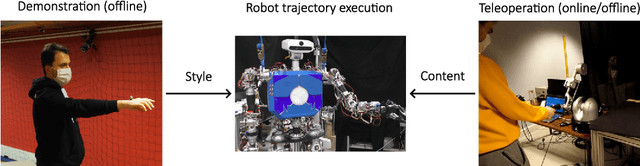

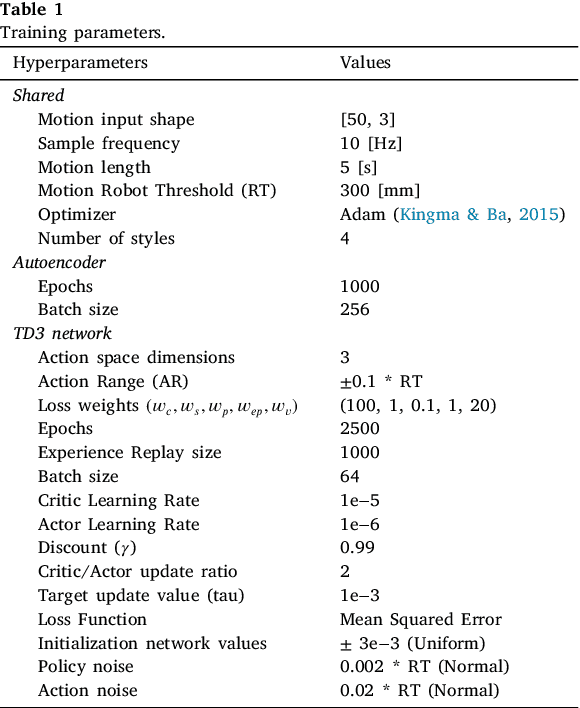

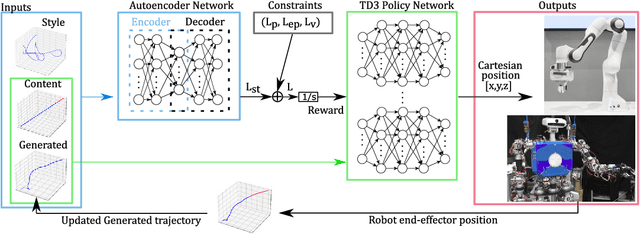

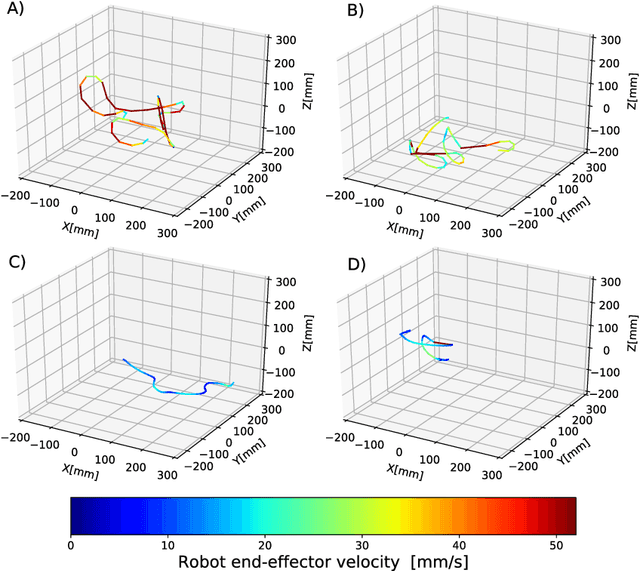

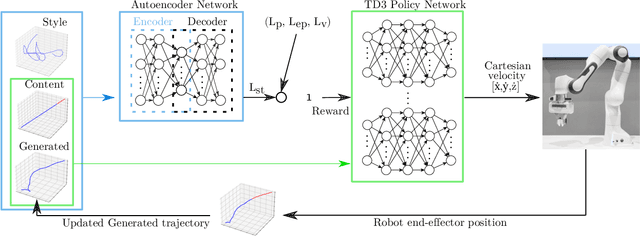

Abstract:Neural Style Transfer (NST) was originally proposed to use feature extraction capabilities of Neural Networks as a way to perform Style Transfer with images. Pre-trained image classification architectures were selected for feature extraction, leading to new images showing the same content as the original but with a different style. In robotics, Style Transfer can be employed to transfer human motion styles to robot motions. The challenge lies in the lack of pre-trained classification architectures for robot motions that could be used for feature extraction. Neural Policy Style Transfer TD3 (NPST3) is proposed for the transfer of human motion styles to robot motions. This framework allows the same robot motion to be executed in different human-centered motion styles, such as in an angry, happy, calm, or sad fashion. The Twin Delayed Deep Deterministic Policy Gradient (TD3) network is introduced for the generation of control policies. An autoencoder network is in charge of feature extraction for the Style Transfer step. The Style Transfer step can be performed both offline and online: offline for the autonomous executions of human-style robot motions, and online for adapting at runtime the style of e.g., a teleoperated robot. The framework is tested using two different robotic platforms: a robotic manipulator designed for telemanipulation tasks, and a humanoid robot designed for social interaction. The proposed approach was evaluated for both platforms, performing a total of 147 questionnaires asking human subjects to recognize the human motion style transferred to the robot motion for a predefined set of actions.

Neural Style Transfer with Twin-Delayed DDPG for Shared Control of Robotic Manipulators

Feb 01, 2024

Abstract:Neural Style Transfer (NST) refers to a class of algorithms able to manipulate an element, most often images, to adopt the appearance or style of another one. Each element is defined as a combination of Content and Style: the Content can be conceptually defined as the what and the Style as the how of said element. In this context, we propose a custom NST framework for transferring a set of styles to the motion of a robotic manipulator, e.g., the same robotic task can be carried out in an angry, happy, calm, or sad way. An autoencoder architecture extracts and defines the Content and the Style of the target robot motions. A Twin Delayed Deep Deterministic Policy Gradient (TD3) network generates the robot control policy using the loss defined by the autoencoder. The proposed Neural Policy Style Transfer TD3 (NPST3) alters the robot motion by introducing the trained style. Such an approach can be implemented either offline, for carrying out autonomous robot motions in dynamic environments, or online, for adapting at runtime the style of a teleoperated robot. The considered styles can be learned online from human demonstrations. We carried out an evaluation with human subjects enrolling 73 volunteers, asking them to recognize the style behind some representative robotic motions. Results show a good recognition rate, proving that it is possible to convey different styles to a robot using this approach.

On the Stability of Gated Graph Neural Networks

May 30, 2023

Abstract:In this paper, we aim to find the conditions for input-state stability (ISS) and incremental input-state stability ($\delta$ISS) of Gated Graph Neural Networks (GGNNs). We show that this recurrent version of Graph Neural Networks (GNNs) can be expressed as a dynamical distributed system and, as a consequence, can be analysed using model-based techniques to assess its stability and robustness properties. Then, the stability criteria found can be exploited as constraints during the training process to enforce the internal stability of the neural network. Two distributed control examples, flocking and multi-robot motion control, show that using these conditions increases the performance and robustness of the gated GNNs.
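
For readers unfamiliar with the two properties named in this abstract, the textbook discrete-time definitions can be sketched as follows. This is an assumption about the setting, not the paper's own statement: the system form $x(k+1) = f(x(k), u(k))$ and the symbols $\beta$, $\gamma$, $x_a$, $x_b$ are introduced here for illustration, and the paper may use a slightly different but equivalent formulation. The system is ISS if there exist a class-$\mathcal{KL}$ function $\beta$ and a class-$\mathcal{K}_\infty$ function $\gamma$ such that, for every initial state, every bounded input, and every $k \ge 0$,

$$
\|x(k)\| \le \beta\big(\|x(0)\|, k\big) + \gamma\big(\|u\|_\infty\big).
$$

It is $\delta$ISS if the same kind of bound holds for the distance between any two trajectories $x_a$, $x_b$ driven by inputs $u_a$, $u_b$:

$$
\|x_a(k) - x_b(k)\| \le \beta\big(\|x_a(0) - x_b(0)\|, k\big) + \gamma\big(\|u_a - u_b\|_\infty\big).
$$

Under these definitions, the effect of the initial state fades over time and the state stays bounded by a function of the input magnitude, which is the kind of property that can be imposed as a training constraint.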

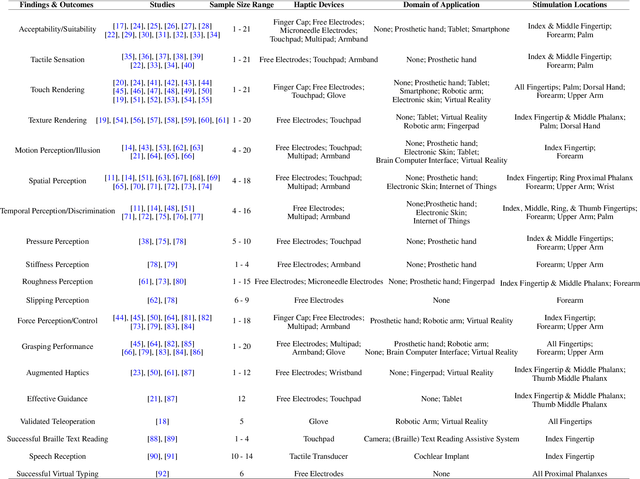

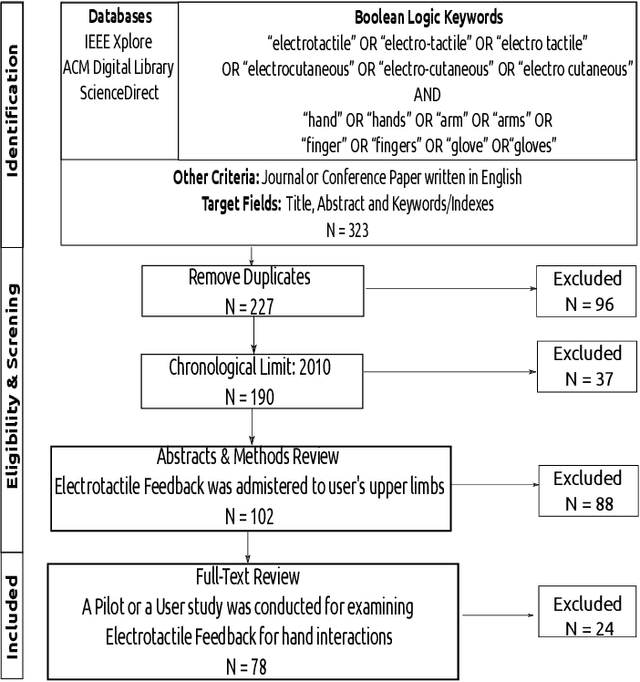

Electrotactile feedback for hand interactions: A systematic review, meta-analysis, and future directions

May 11, 2021

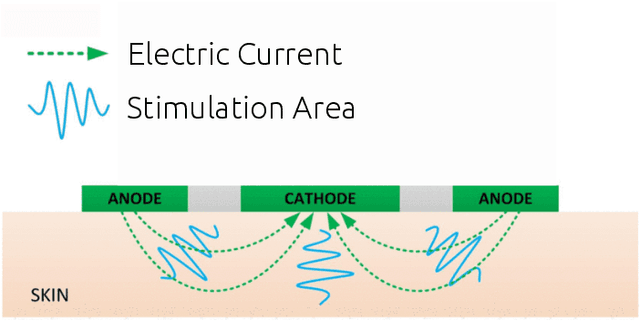

Abstract:Haptic feedback is critical in a broad range of human-machine/computer-interaction applications. However, the high cost and low portability/wearability of haptic devices remain unresolved issues, severely limiting the adoption of this otherwise promising technology. Electrotactile interfaces have the advantage of being more portable and wearable due to the reduced size of their actuators, as well as benefiting from lower power consumption and manufacturing cost. The uses of electrotactile feedback have been explored in human-computer interaction and human-machine interaction for facilitating hand-based interactions in applications such as prosthetics, virtual reality, robotic teleoperation, surface haptics, portable devices, and rehabilitation. This paper presents a systematic review and meta-analysis of electrotactile feedback systems for hand-based interactions in the last decade. We categorize the different electrotactile systems according to their type of stimulation and implementation/application. We also present and discuss a quantitative aggregation of the findings, so as to offer a high-level overview of the state of the art and suggest future directions. Electrotactile feedback was successful in rendering and/or augmenting most tactile sensations, eliciting perceptual processes, and improving performance in many scenarios, especially in those where the wearability/portability of the system is important. However, knowledge gaps, technical drawbacks, and methodological limitations were detected, which should be addressed in future studies.

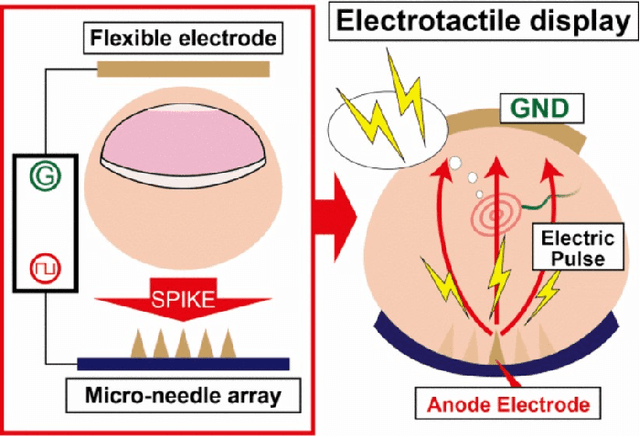

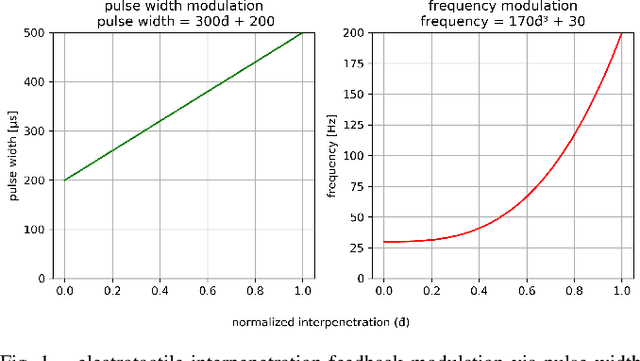

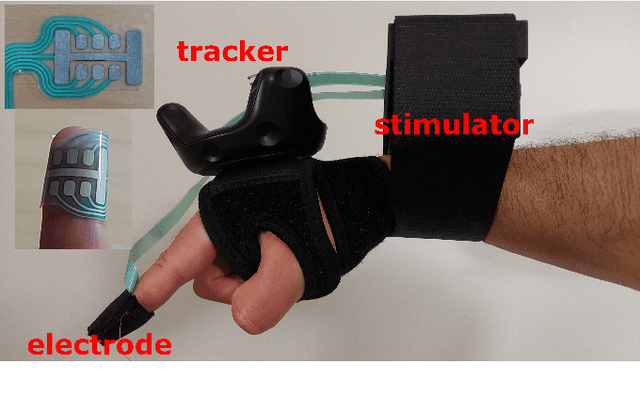

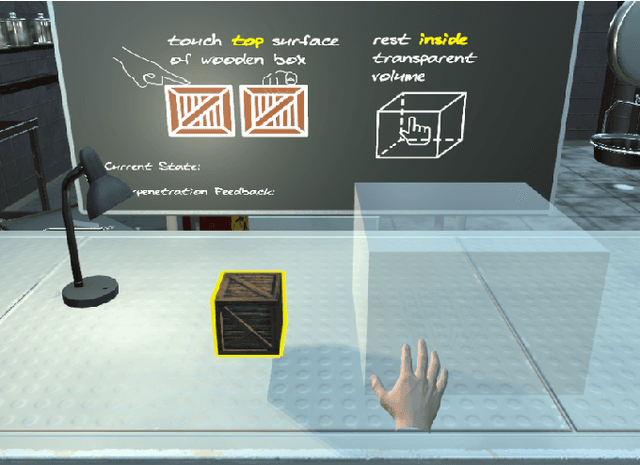

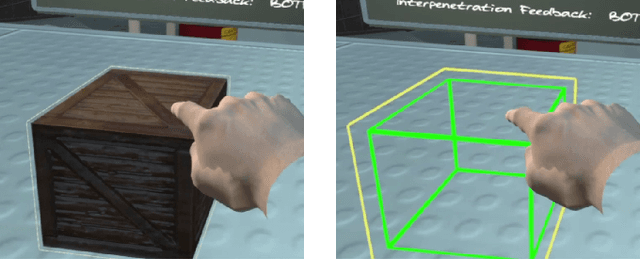

Electrotactile Feedback in Virtual Reality For Precise and Accurate Contact Rendering

Jan 30, 2021

Abstract:This paper presents a wearable electrotactile feedback system to enable precise and accurate contact rendering with virtual objects for mid-air interactions. In particular, we propose the use of electrotactile feedback to render the interpenetration distance between the user's finger and the virtual content being touched. Our approach consists of modulating the perceived intensity (frequency and pulse width modulation) of the electrotactile stimuli according to the registered interpenetration distance. In a user study (N=21), we assessed the performance of four different interpenetration feedback approaches: electrotactile-only, visual-only, electrotactile and visual, and no interpenetration feedback. First, the results showed that contact precision and accuracy were significantly improved when using interpenetration feedback. Second, and more interestingly, there were no significant differences between visual and electrotactile feedback when the calibration was optimized and the user was familiarized with electrotactile feedback. Taken together, these results suggest that electrotactile feedback could be an efficient replacement for visual feedback for accurate and precise contact rendering in virtual reality, avoiding the need for active visual focus and the rendering of additional visual artefacts.
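
The intensity modulation described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `modulate_stimulus`, the linear mapping, and every numeric range (maximum depth, pulse widths, frequencies) are hypothetical values chosen only to show the idea of scaling pulse width and frequency with interpenetration distance.

```python
def modulate_stimulus(depth_mm,
                      max_depth_mm=10.0,
                      pulse_width_us=(50.0, 250.0),
                      frequency_hz=(30.0, 200.0)):
    """Map an interpenetration depth to (pulse width, frequency).

    All ranges are hypothetical placeholders, not values from the paper.
    The mapping is a plain linear interpolation between the two bounds.
    """
    # Normalize the depth and clamp it to [0, 1] so that values outside
    # the assumed working range saturate instead of extrapolating.
    level = min(max(depth_mm / max_depth_mm, 0.0), 1.0)

    # Interpolate each stimulation parameter between its bounds.
    width = pulse_width_us[0] + level * (pulse_width_us[1] - pulse_width_us[0])
    freq = frequency_hz[0] + level * (frequency_hz[1] - frequency_hz[0])
    return width, freq


if __name__ == "__main__":
    for depth in (0.0, 2.5, 5.0, 10.0, 15.0):
        width, freq = modulate_stimulus(depth)
        print(f"depth={depth:5.1f} mm -> width={width:6.1f} us, freq={freq:6.1f} Hz")
```

A real system would replace the linear map with a per-user calibration, since the abstract notes that results depended on the calibration being optimized.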

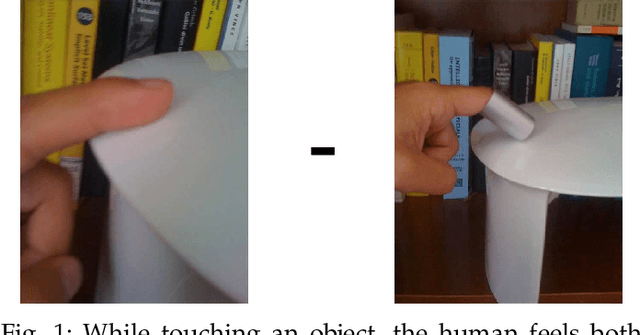

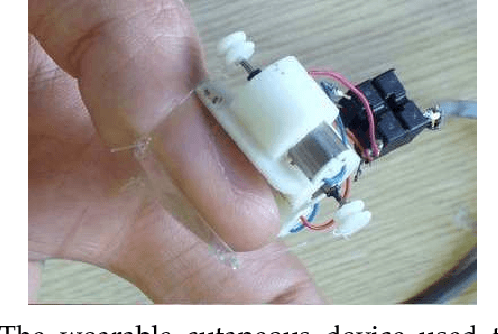

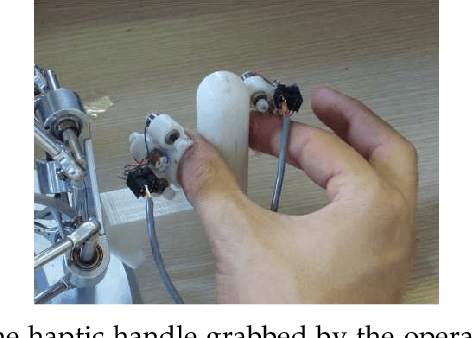

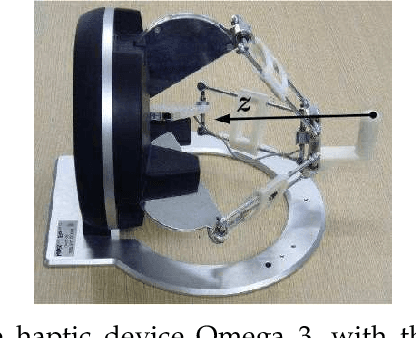

Cutaneous Force Feedback as a Sensory Subtraction Technique in Haptics

Nov 27, 2012

Abstract:A novel sensory substitution technique is presented. Kinesthetic and cutaneous force feedback are substituted by cutaneous feedback (CF) only, provided by two wearable devices able to apply forces to the index finger and the thumb, while holding a handle during a teleoperation task. The force pattern, fed back to the user while using the cutaneous devices, is similar, in terms of intensity and area of application, to the cutaneous force pattern applied to the finger pad while interacting with a haptic device providing both cutaneous and kinesthetic force feedback. The pattern generated using the cutaneous devices can be thought of as a subtraction between the complete haptic feedback (HF) and the kinesthetic part of it. For this reason, we refer to this approach as sensory subtraction instead of sensory substitution. A needle insertion scenario is considered to validate the approach. The haptic device is connected to a virtual environment simulating a needle insertion task. Experiments show that the perception of inserting a needle using the cutaneous-only force feedback is nearly indistinguishable from the one felt by the user while using both cutaneous and kinesthetic feedback. Like most sensory substitution approaches, the proposed sensory subtraction technique also has the advantage of not suffering from the stability issues of teleoperation systems due, for instance, to communication delays. Moreover, experiments show that the sensory subtraction technique outperforms sensory substitution with more conventional visual feedback (VF).
