Vincent Alexandre Mendez

Hardware-Efficient EMG Decoding for Next-Generation Hand Prostheses

May 31, 2024

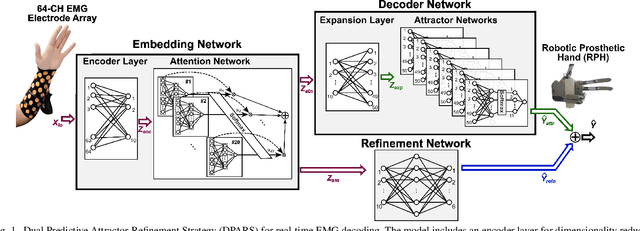

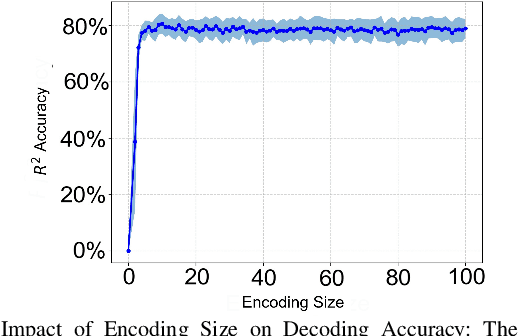

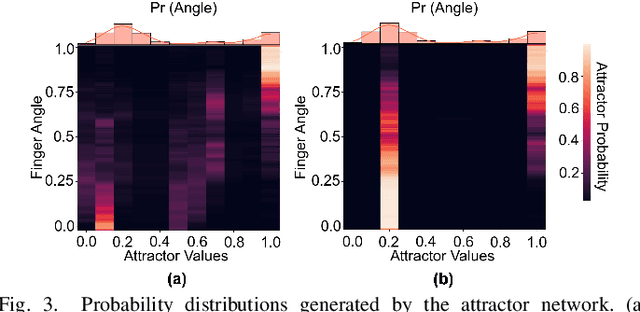

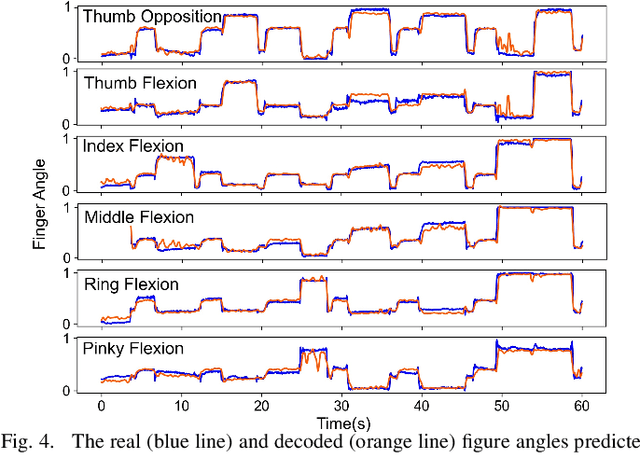

Abstract: Advancements in neural engineering have enabled the development of Robotic Prosthetic Hands (RPHs) aimed at restoring hand functionality. Current commercial RPHs offer limited control through basic on/off commands. Recent progress in machine learning enables finger movement decoding with more degrees of freedom, yet the high computational complexity of such models limits their use in portable devices. To be practical for individuals with disabilities, future RPH designs must balance portability, low power consumption, and high decoding accuracy. To this end, we introduce a novel attractor-based neural network that realizes on-chip movement decoding for next-generation portable RPHs. The proposed architecture comprises an encoder, an attention layer, an attractor network, and a refinement regressor. We tested our model on four healthy subjects and achieved a decoding accuracy of 80.3%. Our proposed model is over 120 and 50 times more compact than state-of-the-art LSTM and CNN models, respectively, with comparable (or superior) decoding accuracy. It therefore exhibits minimal hardware complexity and can be effectively integrated as a System-on-Chip.
