Helen Oleynikova

Allocation for Omnidirectional Aerial Robots: Incorporating Power Dynamics

Dec 20, 2024

Abstract: Tilt-rotor aerial robots are more dynamic and versatile than their fixed-rotor counterparts, since the thrust vector and body orientation are decoupled. However, the coordination of servomotors and propellers (the allocation problem) is not trivial, especially when accounting for overactuation and actuator dynamics. We present and compare different methods of actuator allocation for tilt-rotor platforms, evaluating them on a real aerial robot performing dynamic trajectories. We extend the state-of-the-art geometric allocation into a differential allocation, which uses the platform's redundancy and does not suffer from the singularities typical of the geometric solution. We expand it by incorporating actuator dynamics and introducing propeller limit curves. These improve the modeling of propeller limits, automatically balancing their usage and allowing the platform to selectively activate and deactivate propellers during flight. We show that modeling actuator dynamics and limits not only makes the allocation easier to tune, but also allows it to track more dynamic oscillating trajectories, with angular velocities up to 4 rad/s compared to 2.8 rad/s for geometric methods.
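As a rough illustration of the geometric allocation that the abstract takes as its starting point, the sketch below uses one common formulation: each rotor's thrust is split into a lateral and a vertical component so that the wrench map becomes linear, the minimum-norm solution is taken with a pseudo-inverse, and thrust and tilt angle are then recovered per rotor. The abstract does not give the formulation, so everything here is an assumption: the six-arm layout, the arm length, the function names, and the omission of propeller drag torque.

```python
import numpy as np

def allocation_matrix(n_arms=6, arm_length=0.3):
    """Static 6 x 2n matrix mapping [f_l_1, f_v_1, ..., f_l_n, f_v_n] to a body wrench.

    Assumed geometry: arms evenly spaced in the body x-y plane, each rotor
    tilting about its own arm axis. Propeller drag torque is ignored.
    """
    A = np.zeros((6, 2 * n_arms))
    e_z = np.array([0.0, 0.0, 1.0])
    for i in range(n_arms):
        theta = 2.0 * np.pi * i / n_arms
        p = arm_length * np.array([np.cos(theta), np.sin(theta), 0.0])  # rotor position
        t = np.array([np.sin(theta), -np.cos(theta), 0.0])  # lateral thrust direction
        for col, direction in ((2 * i, t), (2 * i + 1, e_z)):
            A[:3, col] = direction               # force contribution
            A[3:, col] = np.cross(p, direction)  # torque contribution
    return A

def geometric_allocation(wrench, A):
    """Minimum-norm force components, converted to thrust and tilt per rotor."""
    x = np.linalg.pinv(A) @ wrench
    f_l, f_v = x[0::2], x[1::2]
    thrust = np.hypot(f_l, f_v)
    tilt = np.arctan2(f_l, f_v)  # ill-conditioned when both components approach zero
    return thrust, tilt

def forward_wrench(thrust, tilt, A):
    """Wrench produced by the given thrusts and tilt angles (for checking)."""
    x = np.empty(2 * len(thrust))
    x[0::2] = thrust * np.sin(tilt)
    x[1::2] = thrust * np.cos(tilt)
    return A @ x

A = allocation_matrix()
hover = np.array([0.0, 0.0, 2.0 * 9.81, 0.0, 0.0, 0.0])  # hypothetical 2 kg platform
thrust, tilt = geometric_allocation(hover, A)
```

In this sketch the tilt angle comes from `arctan2` of two force components, so it becomes poorly defined as a rotor's commanded thrust approaches zero. That is one plausible reading of the singularities the abstract attributes to the geometric solution, not a statement of the paper's analysis.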

Pushing the Limits of Reactive Planning: Learning to Escape Local Minima

Jul 18, 2024

Abstract: When does a robot planner need a map? Reactive methods that use only the robot's current sensor data and local information are fast and flexible, but prone to getting stuck in local minima. Is there a middle ground between fully reactive methods and map-based path planners? In this paper, we investigate feed-forward and recurrent networks to augment a purely reactive sensor-based planner, which should give the robot geometric intuition about how to escape local minima. We train on a large number of extremely cluttered worlds auto-generated from primitive shapes, and show that our system zero-shot transfers to real 3D man-made environments and can handle up to 30% sensor noise without degradation in performance. We also offer a discussion of what role network memory plays in our final system, and what insights can be drawn about the nature of reactive vs. map-based navigation.

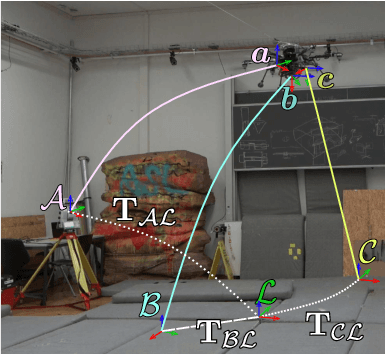

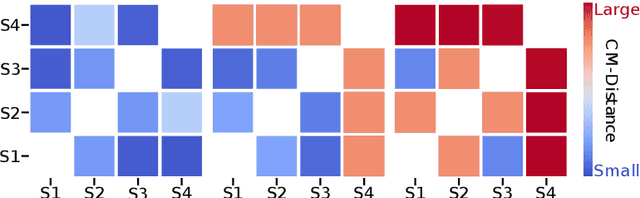

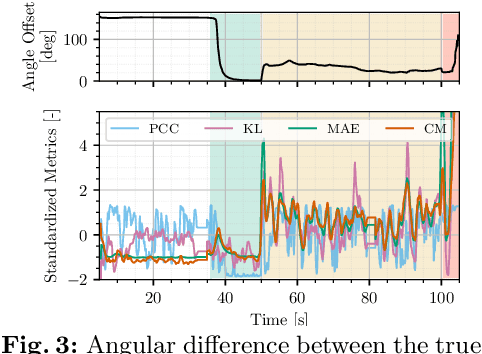

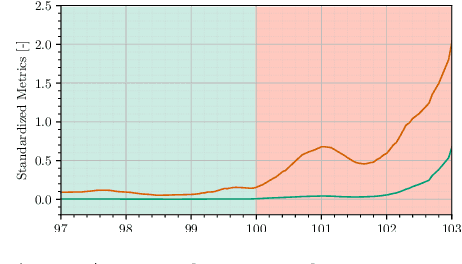

To Fuse or Not to Fuse: Measuring Consistency in Multi-Sensor Fusion for Aerial Robots

Dec 22, 2023

Abstract: Aerial vehicles are no longer limited to flying in open space: recent work has focused on aerial manipulation and up-close inspection. Such applications place stringent requirements on state estimation: the robot must combine state information from many sources, including onboard odometry and global positioning sensors. However, flying close to or in contact with structures is a degenerate case for many sensing modalities, and the robot's state estimation framework must intelligently choose which sensors are currently trustworthy. We evaluate a number of metrics to judge the reliability of sensing modalities in a multi-sensor fusion framework, then introduce a consensus-finding scheme that uses these metrics to choose which sensors to fuse or not to fuse. Finally, we show that such a fusion framework is more robust and accurate than fusing all sensors all the time, and demonstrate how such metrics can be informative in real-world experiments in indoor-outdoor flight and bridge inspection.

Learning to Fly Omnidirectional Micro Aerial Vehicles with an End-To-End Control Network

Dec 08, 2023

Abstract: Overactuated tilt-rotor platforms offer many advantages over traditional fixed-arm drones, allowing the decoupling of the applied force from the attitude of the robot. This expands their application areas to aerial interaction and manipulation, and allows them to overcome disturbances such as from ground or wall effects by exploiting the additional degrees of freedom available to their controllers. However, the overactuation also complicates the control problem, especially if the motors that tilt the arms have slower dynamics than those spinning the propellers. Instead of building a complex model-based controller that takes all of these subtleties into account, we attempt to learn an end-to-end pose controller using reinforcement learning, and show its superior behavior in the presence of inertial and force disturbances compared to a state-of-the-art traditional controller.

cuRobo: Parallelized Collision-Free Minimum-Jerk Robot Motion Generation

Nov 03, 2023

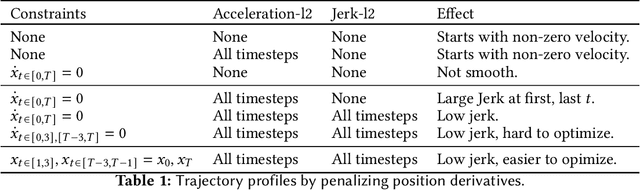

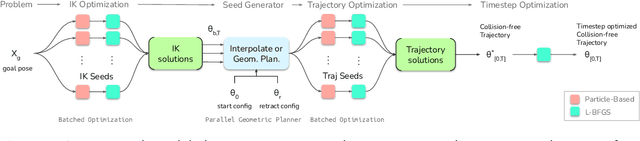

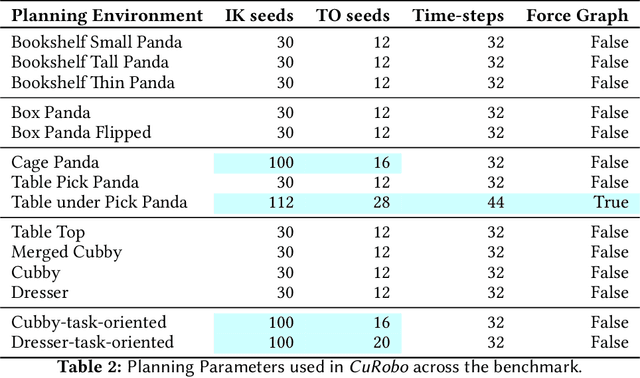

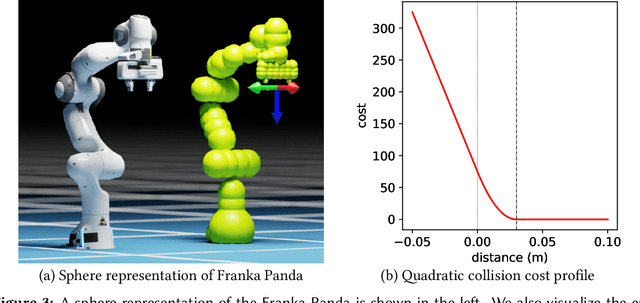

Abstract: This paper explores the problem of collision-free motion generation for manipulators by formulating it as a global motion optimization problem. We develop a parallel optimization technique to solve this problem and demonstrate its effectiveness on massively parallel GPUs. We show that combining simple optimization techniques with many parallel seeds leads to solving difficult motion generation problems within 50ms on average, 60x faster than state-of-the-art (SOTA) trajectory optimization methods. We achieve SOTA performance by combining L-BFGS step direction estimation with a novel parallel noisy line search scheme and a particle-based optimization solver. To further aid trajectory optimization, we develop a parallel geometric planner that plans within 20ms and also introduce a collision-free IK solver that can solve over 7000 queries/s. We package our contributions into a state-of-the-art GPU-accelerated motion generation library, cuRobo, and release it to enrich the robotics community. Additional details are available at https://curobo.org

nvblox: GPU-Accelerated Incremental Signed Distance Field Mapping

Nov 01, 2023

Abstract: Dense, volumetric maps are essential for safe robot navigation through cluttered spaces, as well as interaction with the environment. For latency and robustness, it is best if these can be computed on-board on computationally-constrained hardware from camera or LiDAR-based sensors. Previous works leave a gap between CPU-based systems for robotic mapping, which due to computation constraints limit map resolution or scale, and GPU-based reconstruction systems which omit features that are critical to robotic path planning. We introduce a library, nvblox, that aims to fill this gap, by GPU-accelerating robotic volumetric mapping, and which is optimized for embedded GPUs. nvblox delivers a significant performance improvement over the state of the art, achieving up to a 177x speed-up in surface reconstruction, and up to a 31x improvement in distance field computation, and is available open-source.

COIN-LIO: Complementary Intensity-Augmented LiDAR Inertial Odometry

Oct 02, 2023

Abstract: We present COIN-LIO, a LiDAR Inertial Odometry pipeline that tightly couples information from LiDAR intensity with geometry-based point cloud registration. The focus of our work is to improve the robustness of LiDAR-inertial odometry in geometrically degenerate scenarios, like tunnels or flat fields. We project LiDAR intensity returns into an intensity image, and propose an image processing pipeline that produces filtered images with improved brightness consistency within the image as well as across different scenes. To effectively leverage intensity as an additional modality, we present a novel feature selection scheme that detects uninformative directions in the point cloud registration and explicitly selects patches with complementary image information. Photometric error minimization in the image patches is then fused with inertial measurements and point-to-plane registration in an iterated Extended Kalman Filter. The proposed approach improves accuracy and robustness on a public dataset. We additionally publish a new dataset that captures five real-world environments in challenging, geometrically degenerate scenes. By using the additional photometric information, our approach shows drastically improved robustness against geometric degeneracy in environments where all compared baseline approaches fail.
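To make the idea of an "uninformative direction" in point-to-plane registration concrete, here is a minimal sketch of a standard degeneracy check, which is not necessarily the scheme COIN-LIO uses. For a translation-only update, each point-to-plane residual constrains motion only along its surface normal, so the eigenvector of the summed outer products of the normals with the smallest eigenvalue is the least constrained direction. The function name and the synthetic tunnel are hypothetical, and rotation is left out for brevity.

```python
import numpy as np

def weakest_direction(normals):
    """Return eigenvalues (ascending) and the least-constrained translation direction.

    Assumes translation-only point-to-plane residuals, whose Jacobian with
    respect to translation is the surface normal of each correspondence.
    """
    N = np.asarray(normals, dtype=float)
    N = N / np.linalg.norm(N, axis=1, keepdims=True)  # normalize each normal
    H = N.T @ N                                       # 3x3 sum of n n^T
    eigvals, eigvecs = np.linalg.eigh(H)              # eigh sorts eigenvalues ascending
    return eigvals, eigvecs[:, 0]

# Synthetic tunnel along the x axis: all wall normals lie in the y-z plane.
angles = np.linspace(0.0, 2.0 * np.pi, 100, endpoint=False)
tunnel_normals = np.stack(
    [np.zeros_like(angles), np.cos(angles), np.sin(angles)], axis=1
)
eigvals, direction = weakest_direction(tunnel_normals)
```

For this synthetic tunnel the smallest eigenvalue is numerically zero and the returned direction is aligned with the tunnel axis, which is the direction where complementary information, such as the intensity patches described in the abstract, would be needed.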

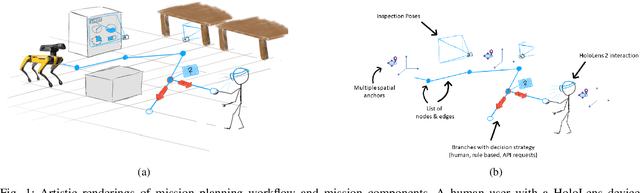

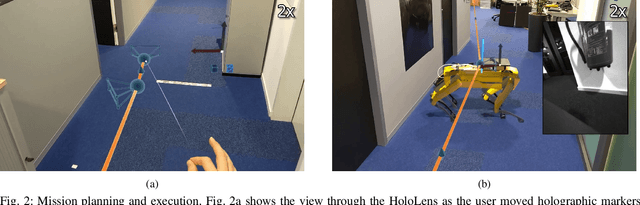

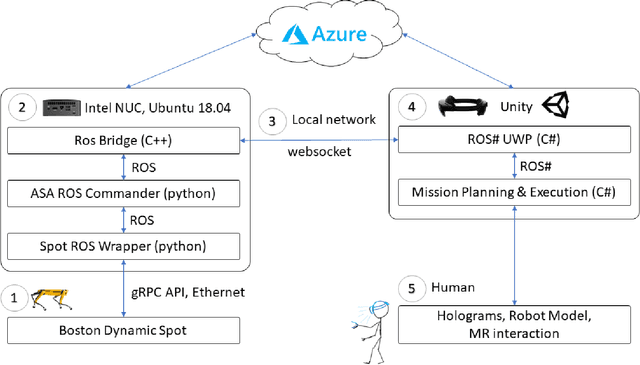

Spatial Computing and Intuitive Interaction: Bringing Mixed Reality and Robotics Together

Feb 03, 2022

Abstract: Spatial computing -- the ability of devices to be aware of their surroundings and to represent this digitally -- offers novel capabilities in human-robot interaction. In particular, the combination of spatial computing and egocentric sensing on mixed reality devices enables them to capture and understand human actions and translate these to actions with spatial meaning, which offers exciting new possibilities for collaboration between humans and robots. This paper presents several human-robot systems that utilize these capabilities to enable novel robot use cases: mission planning for inspection, gesture-based control, and immersive teleoperation. These works demonstrate the power of mixed reality as a tool for human-robot interaction, and the potential of spatial computing and mixed reality to drive the future of human-robot interaction.

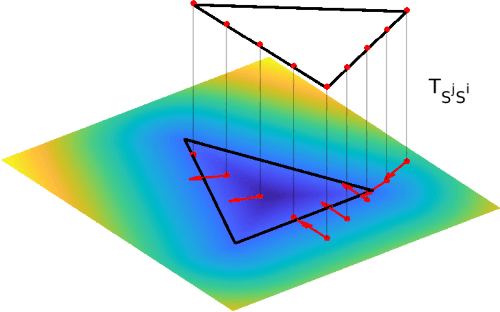

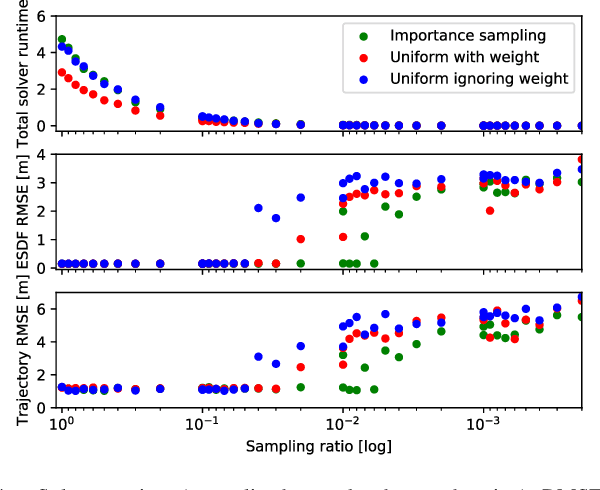

Freetures: Localization in Signed Distance Function Maps

Oct 21, 2020

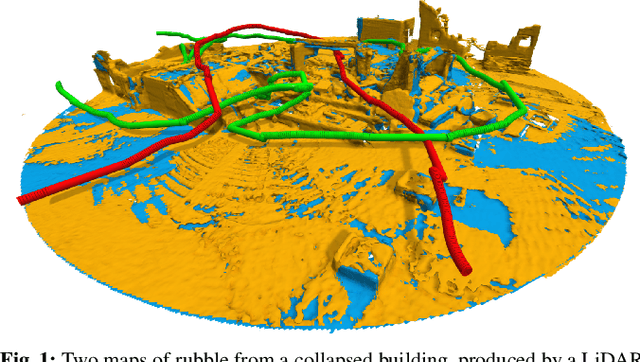

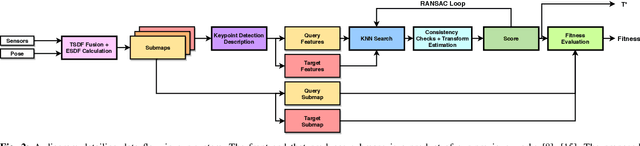

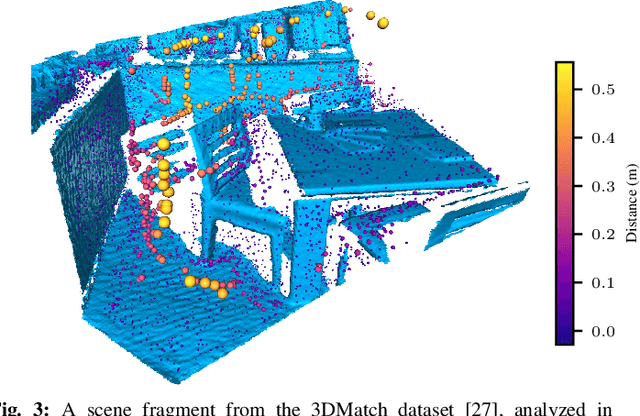

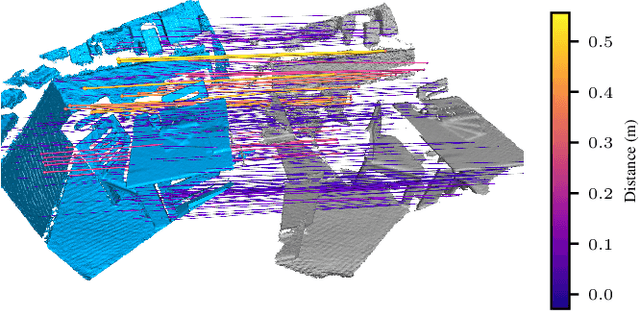

Abstract: Localization of a robotic system within a previously mapped environment is important for reducing estimation drift and for reusing previously built maps. Existing techniques for geometry-based localization have focused on the description of local surface geometry, usually using point clouds as the underlying representation. We propose a system for geometry-based localization that extracts features directly from an implicit surface representation: the Signed Distance Function (SDF). The SDF varies continuously through space, which allows the proposed system to extract and utilize features describing both surfaces and free space. Through evaluations on public datasets, we demonstrate the flexibility of this approach, and show an increase in localization performance over state-of-the-art handcrafted surfaces-only descriptors. We achieve an average improvement of ~12% on an RGB-D dataset and ~18% on a LiDAR-based dataset. Finally, we demonstrate our system for localizing a LiDAR-equipped MAV within a previously built map of a search and rescue training ground.
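For readers unfamiliar with the representation, the following toy example shows why an SDF carries information away from surfaces as well as on them. It evaluates the analytic signed distance to a sphere, assuming the convention that values are positive in free space and negative inside the object. The function name and the sphere are illustrative only and are unrelated to the paper's feature extraction.

```python
import math

def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from `point` to a sphere surface.

    Assumed sign convention: positive outside (free space), zero on the
    surface, negative inside the object.
    """
    return math.dist(point, center) - radius

outside = sphere_sdf((2.0, 0.0, 0.0))     # one unit away from the surface
on_surface = sphere_sdf((1.0, 0.0, 0.0))  # exactly on the surface
inside = sphere_sdf((0.0, 0.0, 0.0))      # one unit below the surface
```

Every query point returns a meaningful value, so a free-space location still encodes how far the nearest surface is. A point cloud, by contrast, only holds samples on the surface itself.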

Voxgraph: Globally Consistent, Volumetric Mapping using Signed Distance Function Submaps

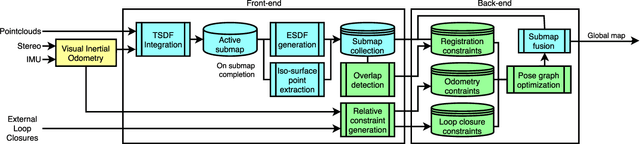

Apr 27, 2020

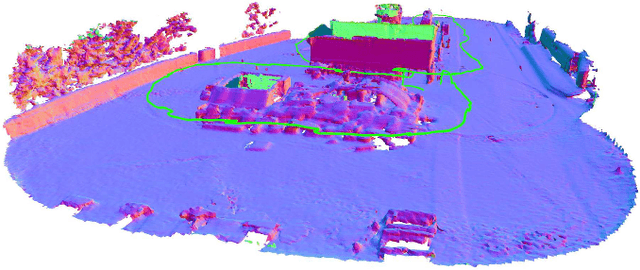

Abstract: Globally consistent dense maps are a key requirement for long-term robot navigation in complex environments. While previous works have addressed the challenges of dense mapping and global consistency, most require more computational resources than may be available on-board small robots. We propose a framework that creates globally consistent volumetric maps on a CPU and is lightweight enough to run on computationally constrained platforms. Our approach represents the environment as a collection of overlapping Signed Distance Function (SDF) submaps, and maintains global consistency by computing an optimal alignment of the submap collection. By exploiting the underlying SDF representation, we generate correspondence-free constraints between submap pairs that are computationally efficient enough to optimize the global problem each time a new submap is added. We deploy the proposed system on a hexacopter Micro Aerial Vehicle (MAV) with an Intel i7-8650U CPU in two realistic scenarios: mapping a large-scale area using a 3D LiDAR, and mapping an industrial space using an RGB-D camera. In the large-scale outdoor experiments, the system optimizes a 120x80m map in less than 4s and produces absolute trajectory RMSEs of less than 1m over 400m trajectories. Our complete system, called voxgraph, is available as open source.

* 8 pages, 9 figures
