Patrick Pfreundschuh

Informed, Constrained, Aligned: A Field Analysis on Degeneracy-aware Point Cloud Registration in the Wild

Aug 21, 2024

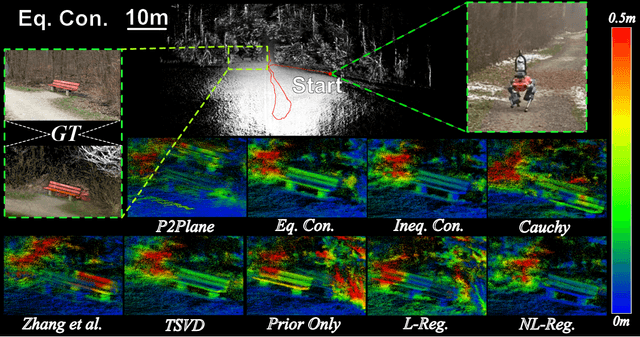

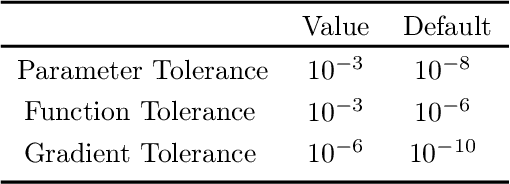

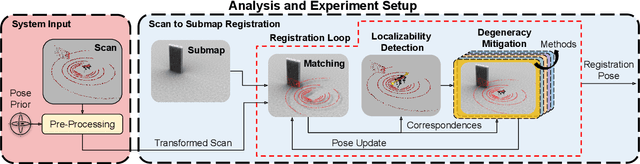

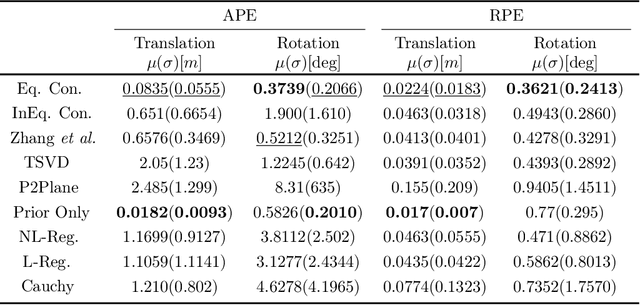

Abstract: The ICP registration algorithm has been a preferred method for LiDAR-based robot localization for nearly a decade. However, even in modern SLAM solutions, ICP can degrade and become unreliable in geometrically ill-conditioned environments. Current solutions primarily focus on utilizing additional sources of information, such as external odometry, to either replace the degenerate directions of the optimization solution or add additional constraints in a sensor-fusion setup afterward. In response, this work investigates and compares new and existing degeneracy mitigation methods for robust LiDAR-based localization and analyzes the efficacy of these approaches in degenerate environments for the first time in the literature at this scale. Specifically, this work proposes and investigates i) the incorporation of different types of constraints into the ICP algorithm, ii) the effect of using active or passive degeneracy mitigation techniques, and iii) the choice of utilizing global point cloud registration methods on the ill-conditioned ICP problem in LiDAR degenerate environments. The study results are validated through multiple real-world field and simulated experiments. The analysis shows that active optimization degeneracy mitigation is necessary and advantageous in the absence of reliable external estimate assistance for LiDAR-SLAM. Furthermore, introducing degeneracy-aware hard constraints before or during the optimization is shown to perform better in the wild than including the constraints afterward. Moreover, with heuristically fine-tuned parameters, soft constraints can provide equal or better results in complex ill-conditioned scenarios. The implementations used in the analysis of this work are made publicly available to the community.
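To make the notion of "degenerate directions" concrete, the following is a minimal sketch of one common way to detect them: an eigenvalue analysis of the point-to-plane ICP Hessian. It is not the implementation released with the paper. The function names, the relative threshold `rel_threshold`, and the synthetic tunnel geometry are all assumptions chosen for illustration.

```python
import numpy as np

def point_to_plane_hessian(points, normals):
    """Approximate Hessian J^T J of the point-to-plane cost.

    Each Jacobian row is [p x n, n] for a small pose update ordered as
    [rotation (3), translation (3)].
    """
    J = np.hstack([np.cross(points, normals), normals])
    return J.T @ J

def degenerate_directions(hessian, rel_threshold=1e-3):
    """Return eigenvalues and the eigenvectors whose eigenvalue is weak.

    rel_threshold is a hypothetical tuning parameter: directions with an
    eigenvalue below rel_threshold * largest eigenvalue count as degenerate.
    """
    eigvals, eigvecs = np.linalg.eigh(hessian)  # eigenvalues in ascending order
    weak = eigvals < rel_threshold * eigvals[-1]
    return eigvals, eigvecs[:, weak]

# Synthetic tunnel running along the y axis: two walls and a floor/ceiling.
rng = np.random.default_rng(0)
n = 200
walls = np.column_stack([rng.choice([-1.0, 1.0], n),
                         rng.uniform(-2.0, 2.0, n),
                         rng.uniform(-1.0, 1.0, n)])
floors = np.column_stack([rng.uniform(-1.0, 1.0, n),
                          rng.uniform(-2.0, 2.0, n),
                          rng.choice([-1.0, 1.0], n)])
points = np.vstack([walls, floors])
normals = np.vstack([np.tile([1.0, 0.0, 0.0], (n, 1)),   # wall normals along x
                     np.tile([0.0, 0.0, 1.0], (n, 1))])  # floor normals along z

H = point_to_plane_hessian(points, normals)
eigvals, dirs = degenerate_directions(H)
# No surface normal has a y component, so translation along the tunnel
# axis (index 4 of the 6-DoF update) is unobservable.
print(dirs.round(3))
```

In this sketch, a hard constraint would correspond to removing the component of the pose update along the returned directions, while a soft constraint would add a penalty on that component instead.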

A robust baro-radar-inertial odometry m-estimator for multicopter navigation in cities and forests

Aug 11, 2024

Abstract: Search and rescue operations require mobile robots to navigate unstructured indoor and outdoor environments. In particular, actively stabilized multirotor drones need precise movement data to balance and avoid obstacles. Combining radial velocities from on-chip radar with MEMS inertial sensing has proven to provide robust, lightweight, and consistent state estimation, even in visually or geometrically degraded environments. Statistical tests robustify these estimators against radar outliers. However, available work with binary outlier filters lacks adaptability to various hardware setups and environments. Other work has predominantly been tested in handheld static environments or automotive contexts. This work introduces a robust baro-radar-inertial odometry (BRIO) m-estimator for quadcopter flights in typical GNSS-denied scenarios. Extensive real-world closed-loop flights in cities and forests demonstrate robustness to moving objects and ghost targets, maintaining a consistent performance with 0.5 % to 3.2 % drift per distance traveled. Benchmarks on public datasets validate the system's generalizability. The code, dataset, and video are available at https://github.com/ethz-asl/rio.
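As a reading aid for the "drift per distance traveled" figure, here is a minimal sketch of how such a number can be computed. It assumes drift is defined as the final position error divided by the ground-truth path length; the abstract does not state the exact definition, and the function name and sample trajectories are hypothetical.

```python
import numpy as np

def drift_percent(estimated, ground_truth):
    """Final position error as a percentage of ground-truth path length.

    Both inputs are (N, 3) position sequences sampled at the same times.
    This definition is an assumption, not taken from the paper.
    """
    estimated = np.asarray(estimated, dtype=float)
    ground_truth = np.asarray(ground_truth, dtype=float)
    # Sum of segment lengths along the ground-truth trajectory.
    path_length = np.linalg.norm(np.diff(ground_truth, axis=0), axis=1).sum()
    if path_length == 0.0:
        raise ValueError("ground-truth trajectory has zero length")
    final_error = np.linalg.norm(estimated[-1] - ground_truth[-1])
    return 100.0 * final_error / path_length

# Made-up example: 100 m straight flight, estimate ends 2 m off.
gt = [[0.0, 0.0, 0.0], [50.0, 0.0, 0.0], [100.0, 0.0, 0.0]]
est = [[0.0, 0.0, 0.0], [50.0, 0.5, 0.0], [100.0, 2.0, 0.0]]
print(drift_percent(est, gt))  # 2.0
```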

TULIP: Transformer for Upsampling of LiDAR Point Cloud

Dec 14, 2023

Abstract: LiDAR upsampling is a challenging task for the perception systems of robots and autonomous vehicles, due to the sparse and irregular structure of large-scale scene contexts. Recent works propose to solve this problem by converting LiDAR data from 3D Euclidean space into an image super-resolution problem in 2D image space. Although their methods can generate high-resolution range images with fine-grained details, the resulting 3D point clouds often blur out details and predict invalid points. In this paper, we propose TULIP, a new method to reconstruct high-resolution LiDAR point clouds from low-resolution LiDAR input. We also follow a range image-based approach but specifically modify the patch and window geometries of a Swin-Transformer-based network to better fit the characteristics of range images. We conducted several experiments on three different public real-world and simulated datasets. TULIP outperforms state-of-the-art methods in all relevant metrics and generates robust and more realistic point clouds than prior works.
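The range-image representation that this line of work builds on can be illustrated with a spherical projection. The sketch below is a generic version under assumed sensor parameters (16 beams, 64 columns, a vertical field of view of ±15°); it is not TULIP's code, and `to_range_image` and its arguments are hypothetical names.

```python
import numpy as np

def to_range_image(points, height=16, width=64,
                   fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project (N, 3) LiDAR points into a (height, width) range image.

    Rows index elevation (top row = fov_up), columns index azimuth.
    Empty pixels stay at 0. All sensor parameters are assumptions.
    """
    points = np.asarray(points, dtype=float)
    rng = np.linalg.norm(points, axis=1)
    points, rng = points[rng > 0], rng[rng > 0]

    azimuth = np.arctan2(points[:, 1], points[:, 0])   # in [-pi, pi]
    elevation = np.arcsin(points[:, 2] / rng)
    fov_up, fov_down = np.radians(fov_up_deg), np.radians(fov_down_deg)

    # Drop points outside the vertical field of view.
    keep = (elevation <= fov_up) & (elevation >= fov_down)
    azimuth, elevation, rng = azimuth[keep], elevation[keep], rng[keep]

    u = 0.5 * (1.0 - azimuth / np.pi) * width
    v = (fov_up - elevation) / (fov_up - fov_down) * height
    cols = np.clip(np.floor(u), 0, width - 1).astype(int)
    rows = np.clip(np.floor(v), 0, height - 1).astype(int)

    image = np.zeros((height, width))
    # Write far points first so the nearest return wins per pixel.
    order = np.argsort(-rng)
    image[rows[order], cols[order]] = rng[order]
    return image

cloud = np.array([[10.0, 0.0, 0.0],   # straight ahead, far
                  [5.0, 0.0, 0.0],    # same pixel, nearer
                  [0.0, 0.0, 5.0]])   # straight up, outside the FOV
image = to_range_image(cloud)
print(image[8, 32])  # 5.0
```

Upsampling then amounts to predicting an image with more rows from this one and projecting the pixels back to 3D, which is where invalid or blurred points can appear.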

COIN-LIO: Complementary Intensity-Augmented LiDAR Inertial Odometry

Oct 02, 2023

Abstract: We present COIN-LIO, a LiDAR Inertial Odometry pipeline that tightly couples information from LiDAR intensity with geometry-based point cloud registration. The focus of our work is to improve the robustness of LiDAR-inertial odometry in geometrically degenerate scenarios, like tunnels or flat fields. We project LiDAR intensity returns into an intensity image, and propose an image processing pipeline that produces filtered images with improved brightness consistency within the image as well as across different scenes. To effectively leverage intensity as an additional modality, we present a novel feature selection scheme that detects uninformative directions in the point cloud registration and explicitly selects patches with complementary image information. Photometric error minimization in the image patches is then fused with inertial measurements and point-to-plane registration in an iterated Extended Kalman Filter. The proposed approach improves accuracy and robustness on a public dataset. We additionally publish a new dataset that captures five real-world environments in challenging, geometrically degenerate scenes. By using the additional photometric information, our approach shows drastically improved robustness against geometric degeneracy in environments where all compared baseline approaches fail.

Dynablox: Real-time Detection of Diverse Dynamic Objects in Complex Environments

Apr 21, 2023

Abstract: Real-time detection of moving objects is an essential capability for robots acting autonomously in dynamic environments. We thus propose Dynablox, a novel online mapping-based approach for robust moving object detection in complex environments. The central idea of our approach is to incrementally estimate high confidence free-space areas by modeling and accounting for sensing, state estimation, and mapping limitations during online robot operation. The spatio-temporally conservative free space estimate enables robust detection of moving objects without making any assumptions on the appearance of objects or environments. This allows deployment in complex scenes such as multi-storied buildings or staircases, and for diverse moving objects such as people carrying various items, doors swinging or even balls rolling around. We thoroughly evaluate our approach on real-world data sets, achieving 86% IoU at 17 FPS in typical robotic settings. The method outperforms a recent appearance-based classifier and approaches the performance of offline methods. We demonstrate its generality on a novel data set with rare moving objects in complex environments. We make our efficient implementation and the novel data set available as open-source.

Resilient Terrain Navigation with a 5 DOF Metal Detector Drone

Dec 15, 2022

Abstract: Micro aerial vehicles (MAVs) hold the potential for performing autonomous and contactless land surveys for the detection of landmines and explosive remnants of war (ERW). Metal detectors are the standard tool, but have to be operated close to and parallel to the terrain. As this requires advanced flight capabilities, they have not been successfully combined with MAVs before. To this end, we present a full system to autonomously survey challenging undulated terrain using a metal detector mounted on a 5 degrees of freedom (DOF) MAV. Based on an online estimate of the terrain, our receding-horizon planner efficiently covers the area, aligning the detector to the surface while considering the kinematic and visibility constraints of the platform. For resilient localization, we propose a factor-graph approach for online fusion of GNSS, IMU and LiDAR measurements. A simulated ablation study shows that the proposed planner reduces coverage duration and improves trajectory smoothness. Real-world flight experiments showcase autonomous mapping of buried metallic objects in undulated and obstructed terrain. The proposed localization approach is resilient to individual sensor degeneracy.

Team CERBERUS Wins the DARPA Subterranean Challenge: Technical Overview and Lessons Learned

Jul 11, 2022

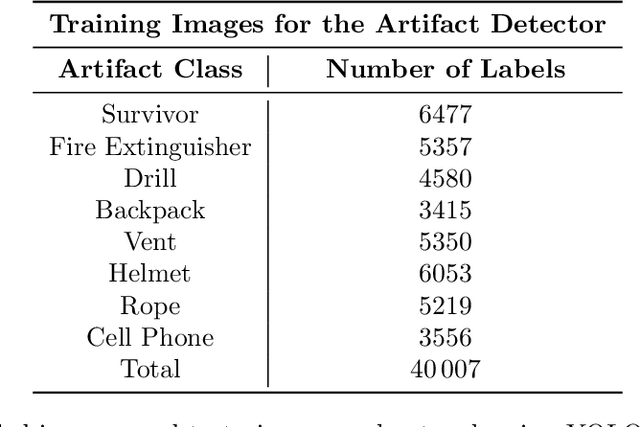

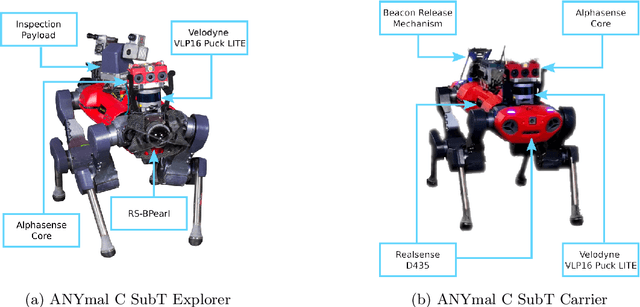

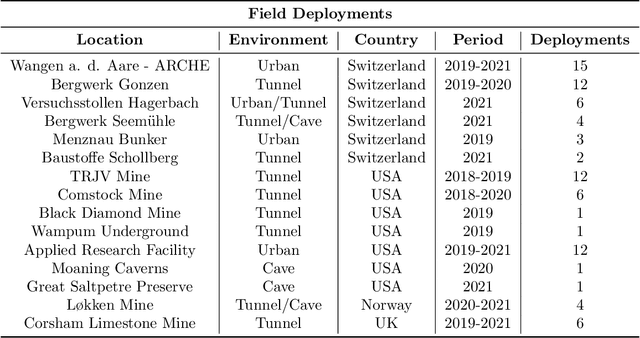

Abstract: This article presents the CERBERUS robotic system-of-systems, which won the DARPA Subterranean Challenge Final Event in 2021. The Subterranean Challenge was organized by DARPA with the vision to facilitate the novel technologies necessary to reliably explore diverse underground environments despite the grueling challenges they present for robotic autonomy. Due to their geometric complexity, degraded perceptual conditions combined with lack of GPS support, austere navigation conditions, and denied communications, subterranean settings render autonomous operations particularly demanding. In response to this challenge, we developed the CERBERUS system which exploits the synergy of legged and flying robots, coupled with robust control especially for overcoming perilous terrain, multi-modal and multi-robot perception for localization and mapping in conditions of sensor degradation, and resilient autonomy through unified exploration path planning and local motion planning that reflects robot-specific limitations. Based on its ability to explore diverse underground environments and its high-level command and control by a single human supervisor, CERBERUS demonstrated efficient exploration, reliable detection of objects of interest, and accurate mapping. In this article, we report results from both the preliminary runs and the final Prize Round of the DARPA Subterranean Challenge, and discuss highlights and challenges faced, alongside lessons learned for the benefit of the community.

Dynamic Object Aware LiDAR SLAM based on Automatic Generation of Training Data

Apr 08, 2021

Abstract: Highly dynamic environments, with moving objects such as cars or humans, can pose a performance challenge for LiDAR SLAM systems that assume largely static scenes. To overcome this challenge and support the deployment of robots in real-world scenarios, we propose a complete solution for a dynamic object aware LiDAR SLAM algorithm. This is achieved by leveraging a real-time capable neural network that can detect dynamic objects, thus allowing our system to deal with them explicitly. To efficiently generate the necessary training data, which is key to our approach, we present a novel end-to-end occupancy grid based pipeline that can automatically label a wide variety of arbitrary dynamic objects. Our solution can thus generalize to different environments without the need for expensive manual labeling and at the same time avoids assumptions about the presence of a predefined set of known objects in the scene. Using this technique, we automatically label over 12000 LiDAR scans collected in an urban environment with a large number of pedestrians and use this data to train a neural network, achieving an average segmentation IoU of 0.82. We show that explicitly dealing with dynamic objects can improve the LiDAR SLAM odometry performance by 39.6% while yielding maps which better represent the environments. A supplementary video as well as our test data are available online.
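For readers unfamiliar with the IoU metric quoted above, the following minimal sketch computes intersection over union for a binary per-point labeling (dynamic vs. static). The function name, the sample labels, and the convention of returning 1.0 when both masks are empty are assumptions for illustration, not details from the paper.

```python
import numpy as np

def binary_iou(pred, target):
    """Intersection over union of two boolean masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        # Both masks empty: treated as perfect agreement (assumed convention).
        return 1.0
    return float(np.logical_and(pred, target).sum() / union)

# Made-up labels for five points: True marks a point labeled as dynamic.
pred = np.array([True, True, False, False, True])
target = np.array([True, False, False, True, True])
print(binary_iou(pred, target))  # 0.5 (intersection 2, union 4)
```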

MOZARD: Multi-Modal Localization for Autonomous Vehicles in Urban Outdoor Environments

Mar 03, 2020

Abstract: Visually poor scenarios are one of the main sources of failure in visual localization systems in outdoor environments. To address this challenge, we present MOZARD, a multi-modal localization system for urban outdoor environments using vision and LiDAR. By extending our preexisting key-point based visual multi-session local localization approach with the use of semantic data, an improved localization recall can be achieved across vastly different appearance conditions. In particular, we focus on the use of curbstone information because of its broad distribution and reliability within urban environments. We present thorough experimental evaluations on several driving kilometers in challenging urban outdoor environments, analyze the recall and accuracy of our localization system and demonstrate in a case study possible failure cases of each subsystem. We demonstrate that MOZARD is able to bridge scenarios where our previous work VIZARD fails, hence yielding an increased recall performance, while a similar localization accuracy of 0.2 m is achieved.
