Frank Mascarich

Team CERBERUS Wins the DARPA Subterranean Challenge: Technical Overview and Lessons Learned

Jul 11, 2022

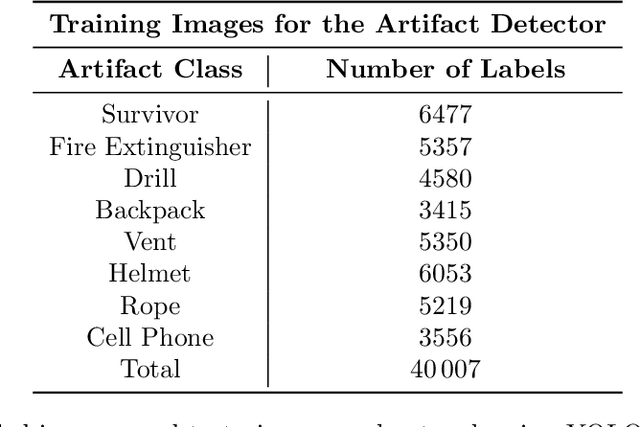

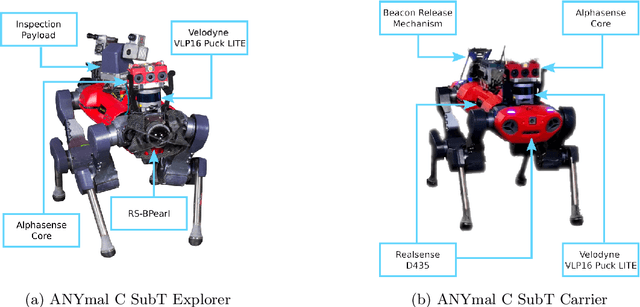

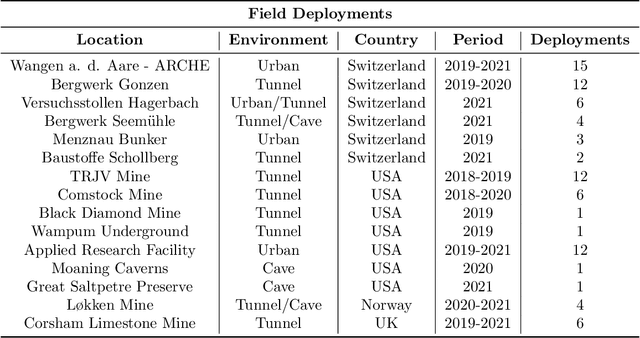

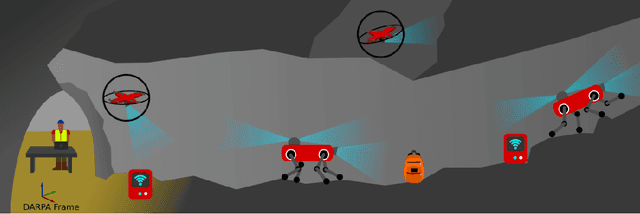

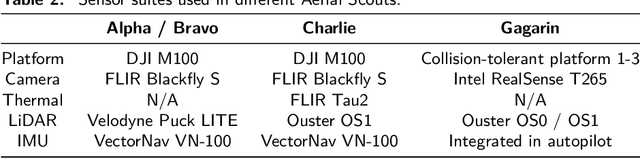

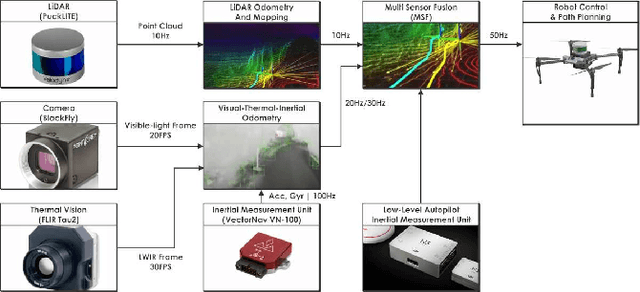

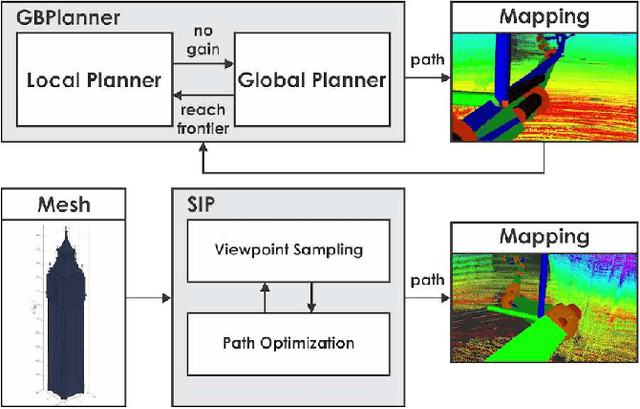

Abstract: This article presents the CERBERUS robotic system-of-systems, which won the DARPA Subterranean Challenge Final Event in 2021. DARPA organized the Subterranean Challenge to foster the novel technologies needed to reliably explore diverse underground environments despite the grueling challenges they pose for robotic autonomy. Subterranean settings make autonomous operation particularly demanding because of their geometric complexity, degraded perceptual conditions combined with the lack of GPS, austere navigation conditions, and denied communications. In response, we developed the CERBERUS system, which exploits the synergy of legged and flying robots, coupled with robust control, especially for overcoming perilous terrain; multi-modal and multi-robot perception for localization and mapping under sensor degradation; and resilient autonomy through unified exploration path planning and local motion planning that accounts for robot-specific limitations. Able to explore diverse underground environments under the high-level command and control of a single human supervisor, CERBERUS demonstrated efficient exploration, reliable detection of objects of interest, and accurate mapping. In this article, we report results from both the preliminary runs and the final Prize Round of the DARPA Subterranean Challenge, and discuss the highlights and challenges faced, alongside lessons learned for the benefit of the community.

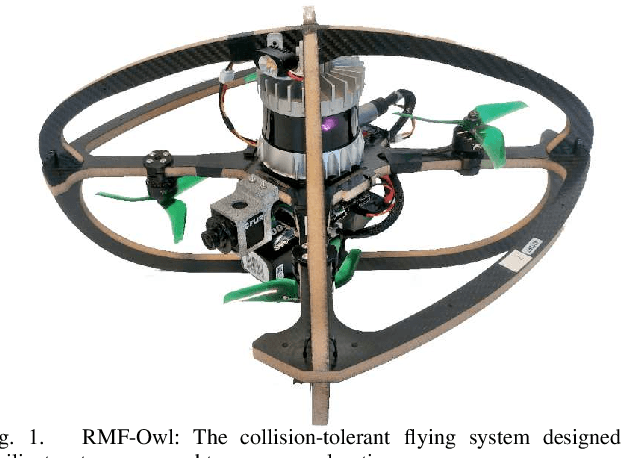

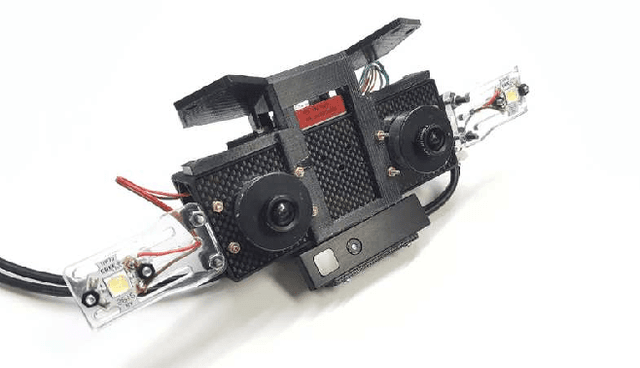

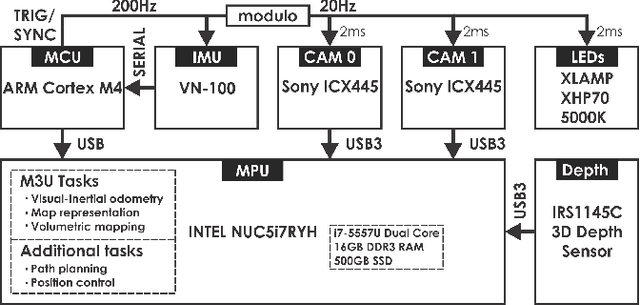

RMF-Owl: A Collision-Tolerant Flying Robot for Autonomous Subterranean Exploration

Feb 22, 2022

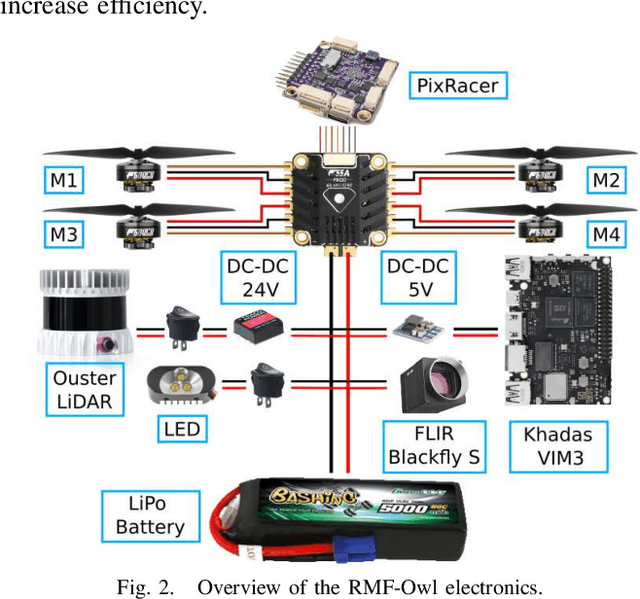

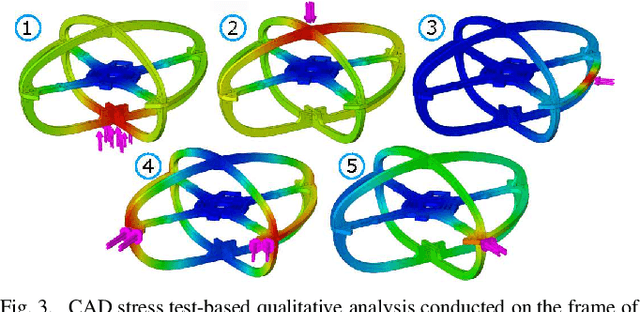

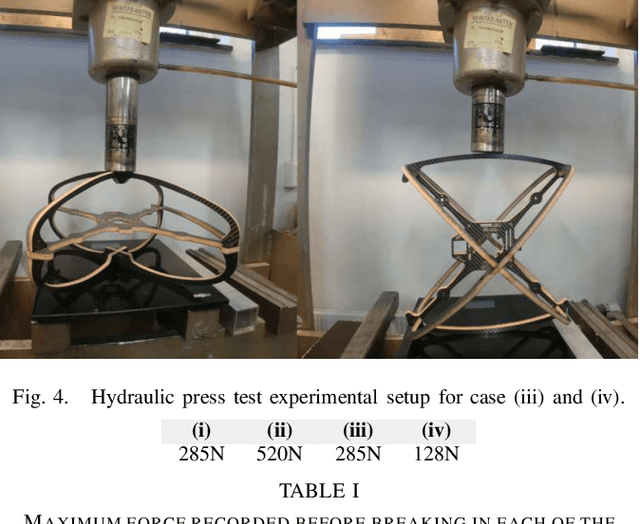

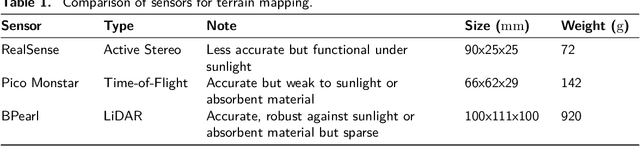

Abstract: This work presents the design, hardware realization, and autonomous exploration and object detection capabilities of RMF-Owl, a new collision-tolerant aerial robot tailored for resilient autonomous subterranean exploration. The system is custom-built for underground exploration, with a focus on collision tolerance, resilient autonomy with robust localization and mapping, and high-performance exploration path planning in confined, obstacle-filled, and topologically complex underground environments. Moreover, RMF-Owl can search for, detect, and locate objects of interest, which can be particularly useful in search and rescue missions. A series of field experiment results demonstrates the system's ability to autonomously explore challenging, unknown underground environments.

CERBERUS: Autonomous Legged and Aerial Robotic Exploration in the Tunnel and Urban Circuits of the DARPA Subterranean Challenge

Jan 18, 2022

Abstract: Autonomous exploration of subterranean environments constitutes a major frontier for robotic systems, as underground settings present key challenges that can make robot autonomy hard to achieve. This has motivated the DARPA Subterranean Challenge, in which teams of robots search for objects of interest in various underground environments. In response, the CERBERUS system-of-systems is presented as a unified strategy for subterranean exploration using legged and flying robots. As primary robots, ANYmal quadruped systems are deployed for their endurance and their ability to traverse challenging terrain. For aerial robots, both conventional and collision-tolerant multirotors are used to explore spaces too narrow or otherwise unreachable by ground systems. Anticipating degraded sensing conditions, we propose a complementary multi-modal sensor fusion approach that uses camera, LiDAR, and inertial data for resilient robot pose estimation. Individual robot pose estimates are refined by a centralized multi-robot map optimization approach to improve the reported location accuracy of detected objects of interest in the DARPA-defined coordinate frame. Furthermore, a unified exploration path planning policy is presented to enable the autonomous operation of both legged and aerial robots in complex underground networks. Finally, to enable communication between the robots and the base station, CERBERUS uses a ground rover with a high-gain antenna and an optical fiber connection to the base station, alongside breadcrumbing of wireless nodes by our legged robots. We report results from the CERBERUS system-of-systems deployment at the DARPA Subterranean Challenge Tunnel and Urban Circuits, along with current limitations and lessons learned for the benefit of the community.

Visual-Thermal Camera Dataset Release and Multi-Modal Alignment without Calibration Information

Dec 29, 2020

Abstract: This report accompanies a dataset release of visual and thermal camera data and details a procedure for aligning such multi-modal camera frames to provide pixel-level correspondence between the two without using intrinsic or extrinsic calibration information. To achieve this, we build on progress in multi-modal image alignment and specifically employ the Mattes Mutual Information Metric to guide the registration process. The dataset includes both the raw visual and thermal camera data and the aligned frames, alongside calibration parameters, with the goal of facilitating investigation of common local and global features across such multi-modal image streams.
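The core idea of MI-guided registration can be illustrated with a small sketch. Mutual information scores how well one image's intensities predict the other's without assuming the two modalities share the same brightness mapping, which is why it suits visual/thermal pairs. The code below is a simplification under stated assumptions, not the report's procedure: it uses a plain joint-histogram MI estimate rather than the Mattes formulation (which uses B-spline Parzen windowing), and it exhaustively searches only integer translations, whereas a practical pipeline would optimize a richer transform (for example via SimpleITK's `ImageRegistrationMethod.SetMetricAsMattesMutualInformation`). The function names `mutual_information` and `best_shift`, their parameters, and the synthetic test images are hypothetical.

```python
import math
import random
from collections import Counter


def mutual_information(a, b, bins=16, lo=0.0, hi=256.0):
    """Plug-in mutual information (in nats) between two equally sized intensity lists.

    Assumption: a plain joint histogram with `bins` equal-width bins over [lo, hi),
    a simplification of the Mattes metric (which uses B-spline Parzen windows).
    """
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and equally sized")
    width = (hi - lo) / bins

    def bin_of(v):
        # Clamp so out-of-range values land in the first or last bin.
        return max(0, min(int((v - lo) / width), bins - 1))

    ja = [bin_of(v) for v in a]
    jb = [bin_of(v) for v in b]
    n = len(a)
    joint, pa, pb = Counter(zip(ja, jb)), Counter(ja), Counter(jb)
    mi = 0.0
    for (i, j), c in joint.items():
        # p_ij * log(p_ij / (p_i * p_j)), written with raw counts.
        mi += (c / n) * math.log(c * n / (pa[i] * pb[j]))
    return mi


def best_shift(fixed, moving, max_shift=3, bins=16):
    """Return the integer (dy, dx) such that moving[y + dy][x + dx] best matches
    fixed[y][x] in the MI sense, searching all shifts up to `max_shift`.

    Hypothetical helper: translation-only, exhaustive search over the overlap.
    """
    h, w = len(fixed), len(fixed[0])
    best_score, best = float("-inf"), (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            fa, mb = [], []
            for y in range(h):
                yy = y + dy
                if not 0 <= yy < h:
                    continue
                for x in range(w):
                    xx = x + dx
                    if 0 <= xx < w:
                        fa.append(fixed[y][x])
                        mb.append(moving[yy][xx])
            if fa:
                score = mutual_information(fa, mb, bins=bins)
                if score > best_score:
                    best_score, best = score, (dy, dx)
    return best


if __name__ == "__main__":
    # Hypothetical synthetic pair: "moving" is an intensity-inverted copy of
    # "fixed" (a crude stand-in for a visual/thermal contrast flip), offset by (1, 2).
    rng = random.Random(0)
    h = w = 20
    fixed = [[rng.randrange(256) for _ in range(w)] for _ in range(h)]
    moving = [[255 - fixed[y - 1][x - 2] if y >= 1 and x >= 2 else rng.randrange(256)
               for x in range(w)] for y in range(h)]
    print(best_shift(fixed, moving))
```

Because MI rewards only a consistent statistical relationship between intensities, the inverted copy should be recovered at its true offset even though the raw pixel values disagree everywhere; a metric such as sum of squared differences assumes matching intensities and offers no such behavior.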

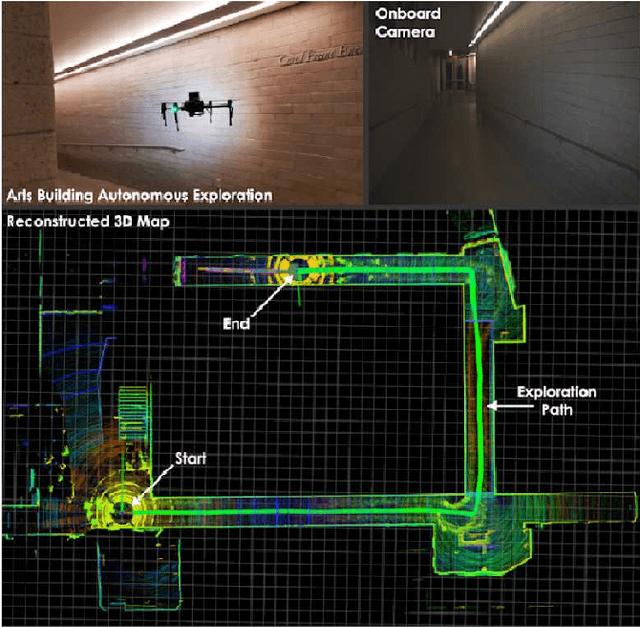

Autonomous Aerial Robotic Surveying and Mapping with Application to Construction Operations

May 09, 2020

Abstract: In this paper, we present an overview of the methods and systems behind a flying robot capable of autonomous inspection, surveying, comprehensive multi-modal mapping, and inventory tracking of construction sites with a high degree of systematicity. The robotic system can operate either with no prior knowledge of the environment or by integrating a prior model of it. In the first case, autonomous exploration returns a high-fidelity 3D map annotated with color and thermal vision information. In the second case, the prior model of the structure can be used to provide optimized, repeatable coverage paths. The robot delivers its mapping result autonomously while simultaneously detecting and localizing objects of interest, thus supporting inventory tracking tasks. The system has been field-verified in a collection of environments and tested inside a public housing construction project.

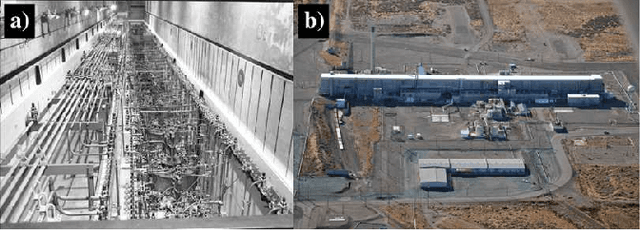

Towards Robotically Supported Decommissioning of Nuclear Sites

May 18, 2017

Abstract: This paper overviews certain radiation detection, perception, and planning challenges for nuclearized robotics aimed at supporting the waste management and decommissioning mission. To enable autonomous monitoring, inspection, and multi-modal characterization of nuclear sites, we discuss important problems relevant to navigation in degraded visual environments, localizability-aware exploration and mapping without any prior knowledge of the environment, and robotic radiation detection. Future contributions will focus on each of these problems, aim to deliver a comprehensive multi-modal mapping result, and emphasize extensive field evaluation and system verification.