Christos Papachristos

Verification and Validation of a Vision-Based Landing System for Autonomous VTOL Air Taxis

Dec 11, 2024

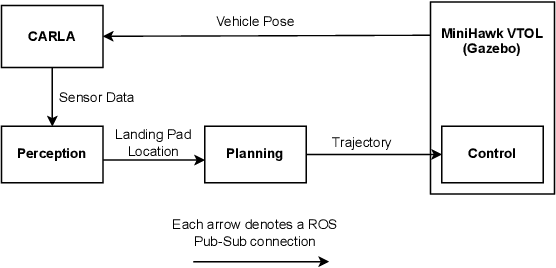

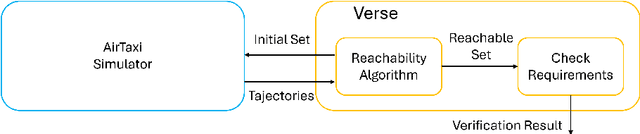

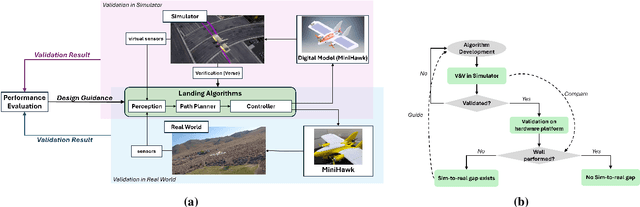

Abstract: Autonomous air taxis are poised to revolutionize urban mass transportation; however, ensuring their safety and reliability remains an open challenge. Validating autonomy solutions on air taxis in the real world presents complexities, risks, and costs that further complicate this challenge. Verification and Validation (V&V) frameworks play a crucial role in the design and development of highly reliable systems by formally verifying safety properties and validating algorithm behavior across diverse operational scenarios. Advancements in high-fidelity simulators have significantly enhanced their capability to emulate real-world conditions, encouraging their use for validating autonomous air taxi solutions, especially during early development stages. This evolution underscores the growing importance of simulation environments, not only as complementary tools to real-world testing but as essential platforms for evaluating algorithms in a controlled, reproducible, and scalable manner. This work presents a V&V framework for a vision-based landing system for air taxis with vertical take-off and landing (VTOL) capabilities. Specifically, we use Verse, a tool for formal verification, to model and verify the safety of the system by obtaining and analyzing the reachable sets. To conduct this analysis, we utilize a photorealistic simulation environment. The simulation environment, built on Unreal Engine, provides realistic terrain, weather, and sensor characteristics to emulate real-world conditions with high fidelity. To validate the safety analysis results, we conduct extensive scenario-based testing to assess the reachability set and robustness of the landing algorithm in various conditions. This approach showcases the representativeness of high-fidelity simulators, offering an effective means to analyze and refine algorithms before real-world deployment.

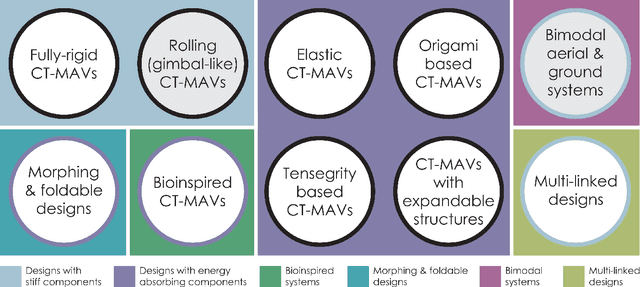

Collision-tolerant Aerial Robots: A Survey

Dec 06, 2022

Abstract: As aerial robots are tasked to navigate environments of increased complexity, embedding collision tolerance in their design becomes important. In this survey we review the current state of the art within the niche field of collision-tolerant micro aerial vehicles and present different design approaches identified in the literature, as well as methods that have focused on autonomy functionalities that exploit collision resilience. Subsequently, we discuss the relevance to biological systems and provide our view on key directions of future fruitful research.

GA-DRL: Genetic Algorithm-Based Function Optimizer in Deep Reinforcement Learning for Robotic Manipulation Tasks

Feb 28, 2022

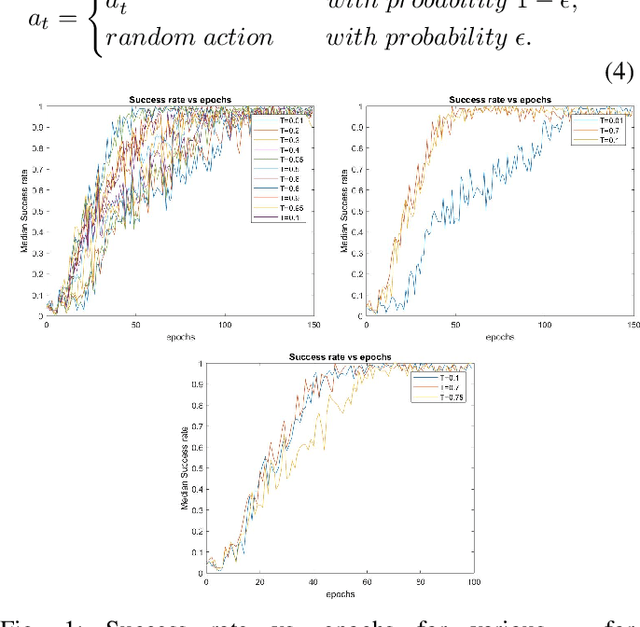

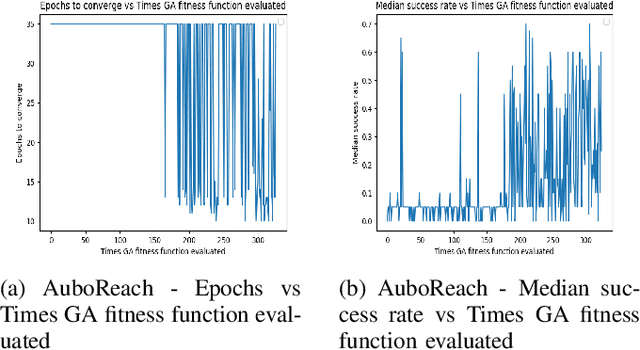

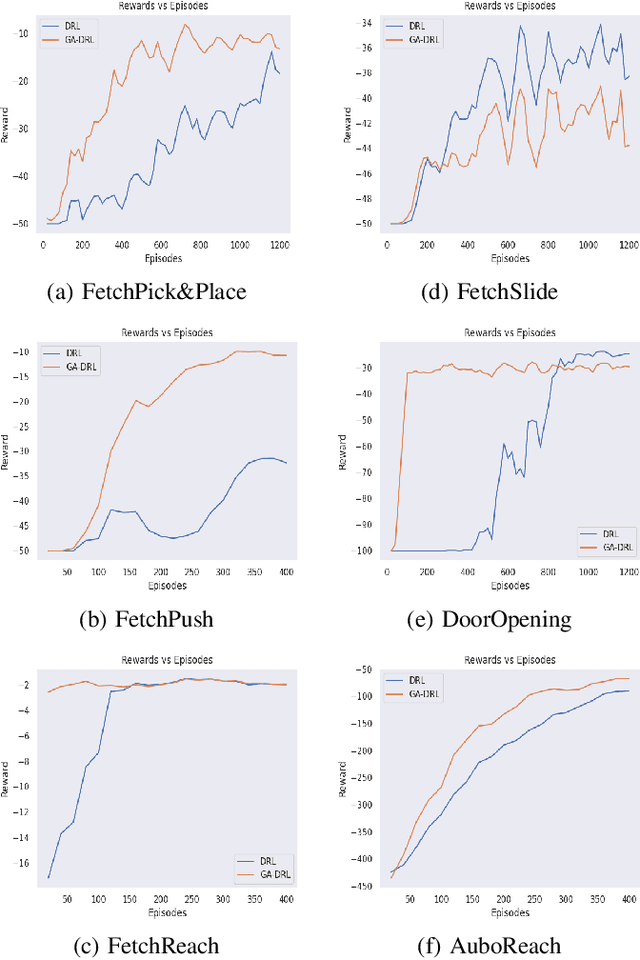

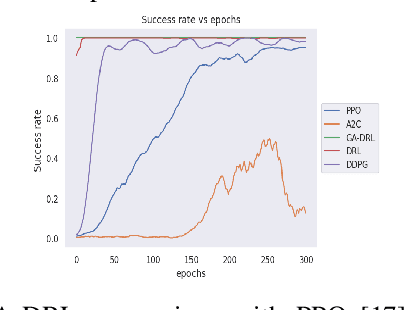

Abstract: Reinforcement learning (RL) enables agents to make decisions based on a reward function. However, in the process of learning, the choice of values for learning algorithm parameters can significantly impact the overall learning process. In this paper, we propose a Genetic Algorithm-based Deep Deterministic Policy Gradient and Hindsight Experience Replay method (called GA-DRL) to find near-optimal values of learning parameters. We apply the proposed GA-DRL method to the fetch-reach, slide, push, pick-and-place, and door-opening robotic manipulation tasks. With some modifications, the method was also applied to the auboreach environment. Our experimental evaluation shows that our method reaches significantly better performance, and reaches it faster, than the original algorithm. We also provide evidence that GA-DRL performs better than the existing methods.

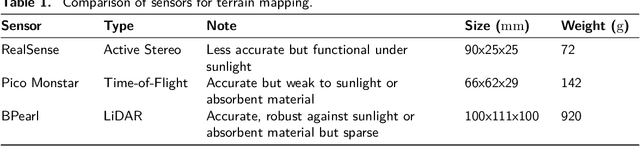

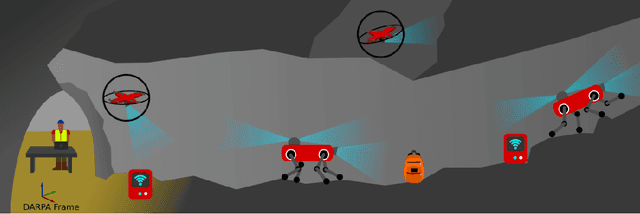

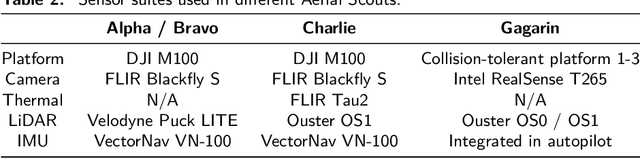

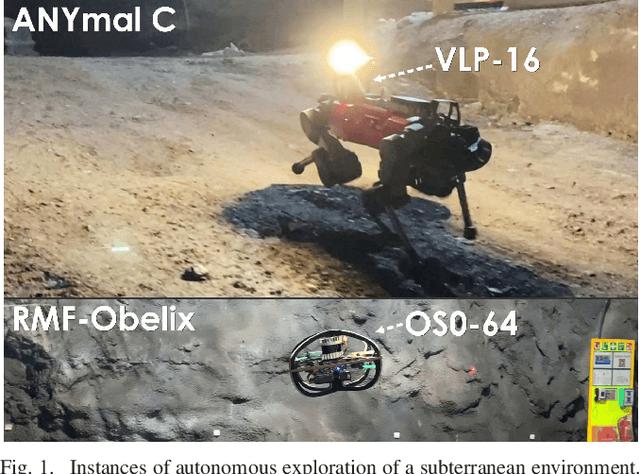

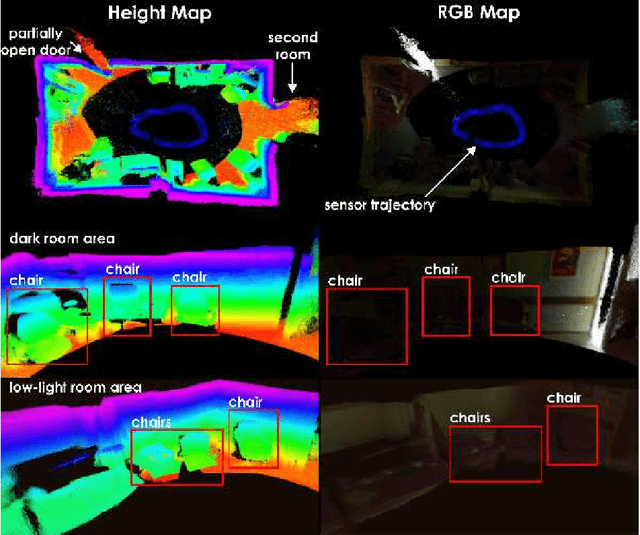

CERBERUS: Autonomous Legged and Aerial Robotic Exploration in the Tunnel and Urban Circuits of the DARPA Subterranean Challenge

Jan 18, 2022

Abstract: Autonomous exploration of subterranean environments constitutes a major frontier for robotic systems as underground settings present key challenges that can render robot autonomy hard to achieve. This has motivated the DARPA Subterranean Challenge, where teams of robots search for objects of interest in various underground environments. In response, the CERBERUS system-of-systems is presented as a unified strategy towards subterranean exploration using legged and flying robots. As primary robots, ANYmal quadruped systems are deployed considering their endurance and potential to traverse challenging terrain. For aerial robots, both conventional and collision-tolerant multirotors are utilized to explore spaces too narrow or otherwise unreachable by ground systems. Anticipating degraded sensing conditions, a complementary multi-modal sensor fusion approach utilizing camera, LiDAR, and inertial data for resilient robot pose estimation is proposed. Individual robot pose estimates are refined by a centralized multi-robot map optimization approach to improve the reported location accuracy of detected objects of interest in the DARPA-defined coordinate frame. Furthermore, a unified exploration path planning policy is presented to facilitate the autonomous operation of both legged and aerial robots in complex underground networks. Finally, to enable communication between the robots and the base station, CERBERUS utilizes a ground rover with a high-gain antenna and an optical fiber connection to the base station, alongside breadcrumbing of wireless nodes by our legged robots. We report results from the CERBERUS system-of-systems deployment at the DARPA Subterranean Challenge Tunnel and Urban Circuits, along with the current limitations and the lessons learned for the benefit of the community.

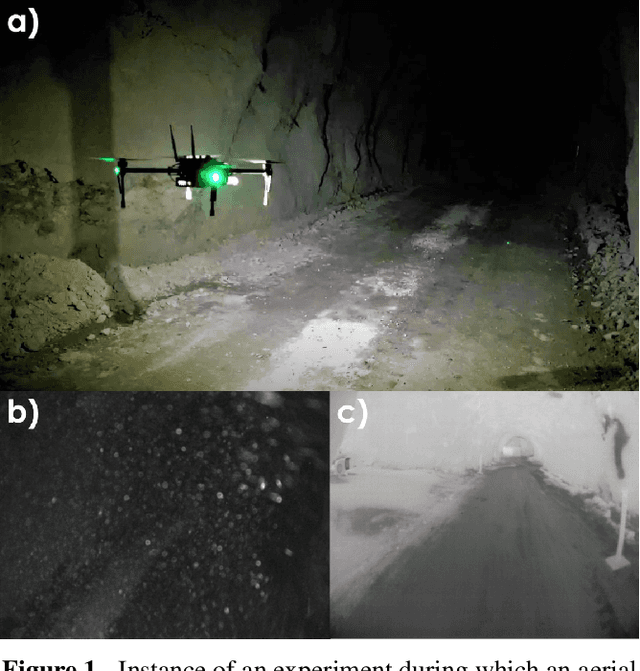

Autonomous Teamed Exploration of Subterranean Environments using Legged and Aerial Robots

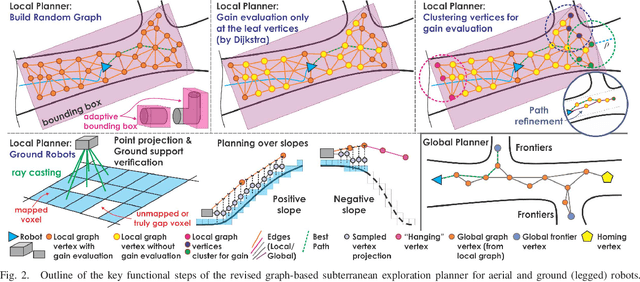

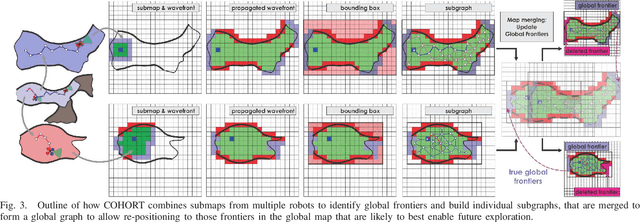

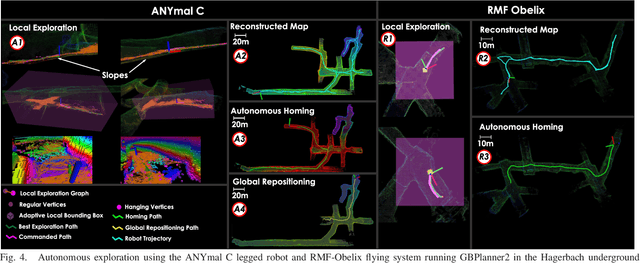

Nov 11, 2021

Abstract: This paper presents a novel strategy for autonomous teamed exploration of subterranean environments using legged and aerial robots. Subterranean settings, such as cave networks and underground mines, often involve complex, large-scale and multi-branched topologies, and wireless communication within them can be particularly challenging. Tailored to these facts, this work is structured around the synergy of an onboard exploration path planner that allows for resilient long-term autonomy and a multi-robot coordination framework. The onboard path planner is unified across legged and flying robots and enables navigation in environments with steep slopes and diverse geometries. When a communication link is available, each robot of the team shares submaps to a centralized location where a multi-robot coordination framework identifies global frontiers of the exploration space to inform each system about where it should re-position to best continue its mission. The strategy is verified through a field deployment inside an underground mine in Switzerland using a legged and a flying robot collectively exploring for 45 min, as well as a longer simulation study with three systems.

Vision-Depth Landmarks and Inertial Fusion for Navigation in Degraded Visual Environments

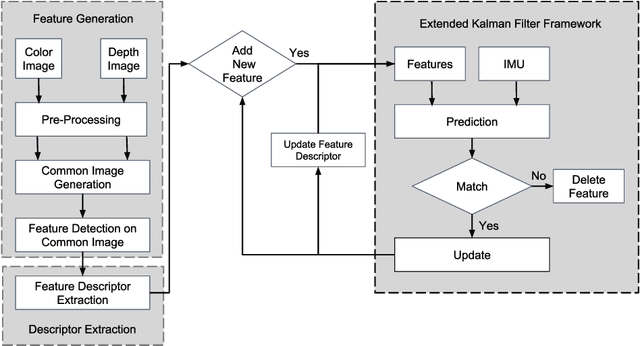

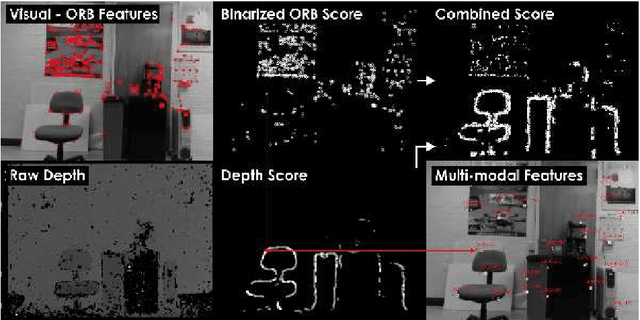

Mar 05, 2019

Abstract: This paper proposes a method for tight fusion of visual, depth and inertial data in order to extend robotic capabilities for navigation in GPS-denied, poorly illuminated, and texture-less environments. Visual and depth information are fused at the feature detection and descriptor extraction levels to augment one sensing modality with the other. These multimodal features are then further integrated with inertial sensor cues using an extended Kalman filter to estimate the robot pose, sensor bias terms, and landmark positions simultaneously as part of the filter state. As demonstrated through a set of hand-held and Micro Aerial Vehicle experiments, the proposed algorithm is shown to perform reliably in challenging visually degraded environments using RGB-D information from a lightweight and low-cost sensor and data from an IMU.

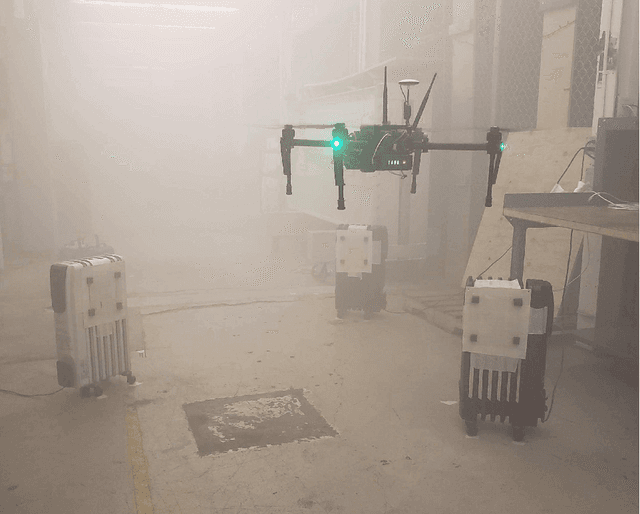

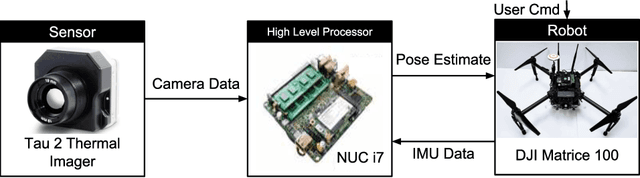

Visual-Thermal Landmarks and Inertial Fusion for Navigation in Degraded Visual Environments

Mar 05, 2019

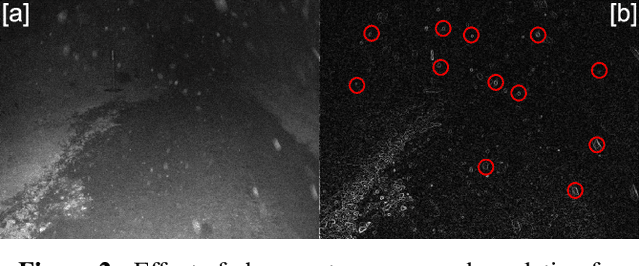

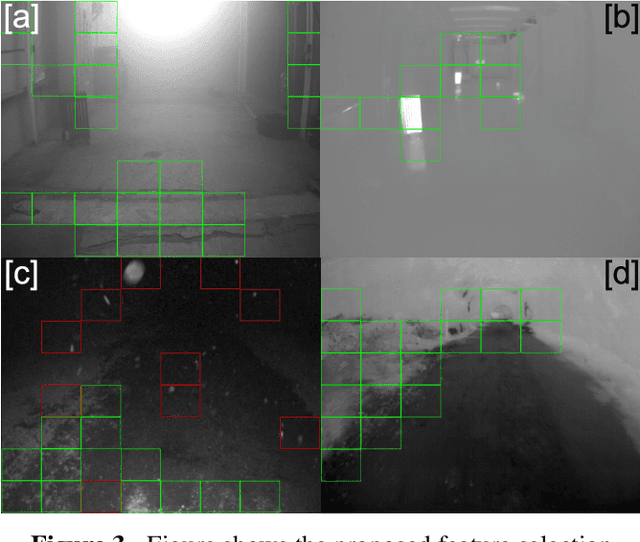

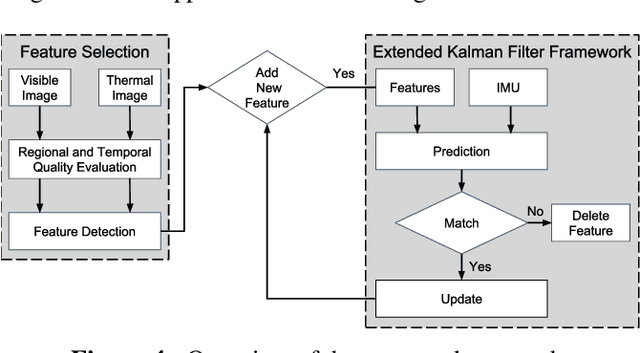

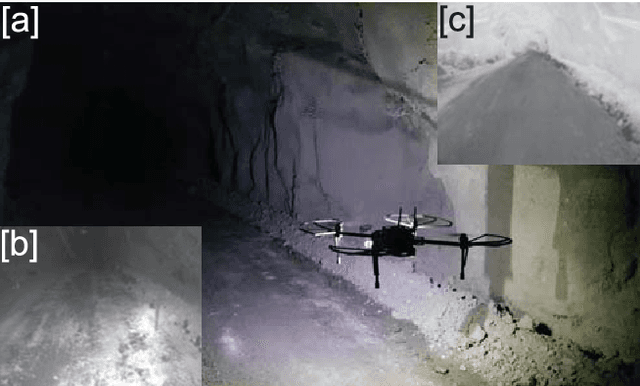

Abstract: With an ever-widening domain of aerial robotic applications, including many mission-critical tasks such as disaster response operations, search and rescue missions and infrastructure inspections taking place in GPS-denied environments, the need for reliable autonomous operation of aerial robots has become crucial. Operating in GPS-denied areas, aerial robots rely on a multitude of sensors to localize and navigate. Visible spectrum cameras are the most commonly used sensors due to their low cost and weight. However, in environments that are visually degraded, such as in conditions of poor illumination, low texture, or presence of obscurants including fog, smoke and dust, the reliability of visible light cameras deteriorates significantly. Nevertheless, maintaining reliable robot navigation in such conditions is essential. In contrast to visible light cameras, thermal cameras offer visibility in the infrared spectrum and can be used in a complementary manner with visible spectrum cameras for robot localization and navigation tasks, without paying the significant weight and power penalty typically associated with carrying other sensors. Exploiting this fact, in this work we present a multi-sensor fusion algorithm for reliable odometry estimation in GPS-denied and degraded visual environments. The proposed method utilizes information from both the visible and thermal spectra for landmark selection and prioritizes feature extraction from informative image regions based on a metric over spatial entropy. Furthermore, inertial sensing cues are integrated to improve the robustness of the odometry estimation process. To verify our solution, a set of challenging experiments was conducted inside a) an obscurant-filled machine shop-like industrial environment, as well as b) a dark subterranean mine in the presence of heavy airborne dust.
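As a rough illustration of prioritizing image regions by entropy, the sketch below scores each grid cell of a grayscale image by the Shannon entropy of its intensity histogram and ranks the cells from most to least informative. The abstract does not specify the metric, so the per-cell histogram entropy, the grid layout, and the names `cell_entropy` and `rank_cells` are all assumptions made for illustration, not the authors' method.

```python
import math
from collections import Counter


def cell_entropy(pixels):
    """Shannon entropy (bits) of the intensity histogram of a pixel list."""
    counts = Counter(pixels)
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def rank_cells(image, cell):
    """Rank square grid cells of a 2D intensity image by entropy.

    `image` is a list of rows of integer intensities (hypothetical input
    format); `cell` is the cell side length in pixels. Returns a list of
    (entropy, (row, col)) tuples, highest entropy first.
    """
    h, w = len(image), len(image[0])
    scores = []
    for r in range(0, h, cell):
        for c in range(0, w, cell):
            block = [
                image[i][j]
                for i in range(r, min(r + cell, h))
                for j in range(c, min(c + cell, w))
            ]
            scores.append((cell_entropy(block), (r, c)))
    return sorted(scores, reverse=True)


# Toy 4x4 image: only the top-right 2x2 cell has varied intensities.
image = [
    [0, 0, 10, 20],
    [0, 0, 30, 40],
    [5, 5, 7, 7],
    [5, 5, 7, 7],
]
ranked = rank_cells(image, cell=2)
```

In this toy image the textured top-right cell ranks first and the three flat cells score zero, so a feature detector run only on the top-ranked cells would skip the uninformative regions.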

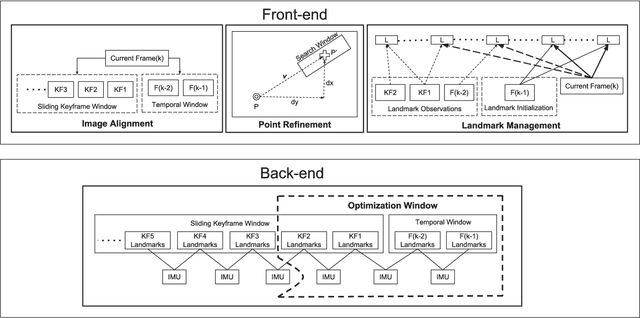

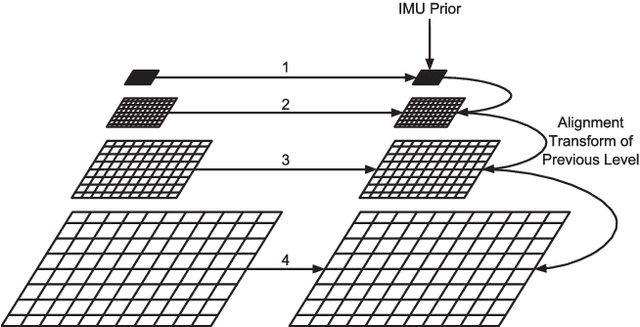

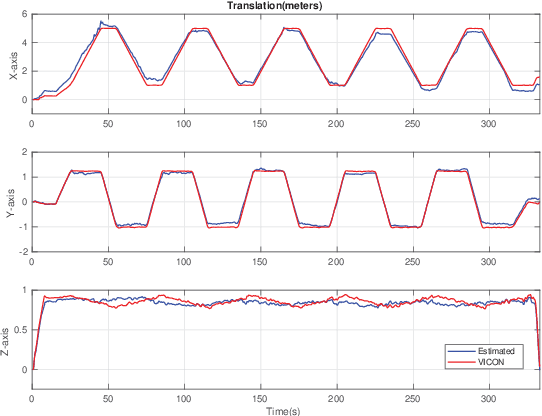

Keyframe-based Direct Thermal-Inertial Odometry

Mar 03, 2019

Abstract: This paper proposes an approach for fusing direct radiometric data from a thermal camera with inertial measurements to extend the capabilities of aerial robots for navigation in GPS-denied and visually degraded environments, in conditions of darkness and in the presence of airborne obscurants such as dust, fog and smoke. An optimization-based approach is developed that jointly minimizes the re-projection error of 3D landmarks and inertial measurement errors. The developed solution is extensively verified both against ground truth in an indoor laboratory setting and inside an underground mine under severely visually degraded conditions.

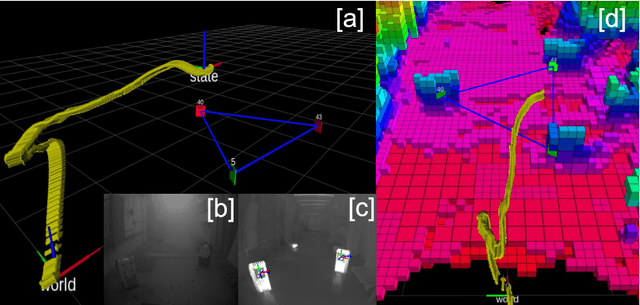

Marker based Thermal-Inertial Localization for Aerial Robots in Obscurant Filled Environments

Mar 02, 2019

Abstract: For robotic inspection tasks in known environments, fiducial markers provide a reliable and low-cost solution for robot localization. However, detection of such markers relies on the quality of RGB camera data, which degrades significantly in the presence of visual obscurants such as fog and smoke. The ability to navigate known environments in the presence of obscurants can be critical for inspection tasks, especially in the aftermath of a disaster. Addressing such a scenario, this work proposes a method for the design of fiducial markers to be used with thermal cameras for the pose estimation of aerial robots. Our low-cost markers are designed to work in the long wave infrared spectrum, which is not affected by the presence of obscurants, and can be affixed to any object that has a measurable temperature difference with respect to its surroundings. Furthermore, the estimated pose from the fiducial markers is fused with inertial measurements in an extended Kalman filter to remove high-frequency noise and error present in the fiducial pose estimates. The proposed markers and the pose estimation method are experimentally evaluated in an obscurant-filled environment using an aerial robot carrying a thermal camera.
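The fusion step described above can be illustrated with a deliberately simplified sketch: a linear Kalman filter on a single axis, where an accelerometer reading drives the prediction and a marker-derived position corrects it. The paper uses an extended Kalman filter over the full pose; the 1D state `(position, velocity)`, the noise parameters `q` and `r`, and the function names here are assumptions chosen for illustration only.

```python
def predict(state, cov, accel, dt, q):
    """Propagate (position, velocity) with a measured acceleration.

    cov is a 2x2 covariance as nested tuples; q is a process-noise
    variance added to both diagonal terms (a simplifying assumption).
    """
    p, v = state
    (p00, p01), (p10, p11) = cov
    p_new = p + v * dt + 0.5 * accel * dt * dt
    v_new = v + accel * dt
    # Covariance propagation F P F^T + Q with F = [[1, dt], [0, 1]].
    c00 = p00 + dt * (p01 + p10) + dt * dt * p11 + q
    c01 = p01 + dt * p11
    c10 = p10 + dt * p11
    c11 = p11 + q
    return (p_new, v_new), ((c00, c01), (c10, c11))


def update(state, cov, z, r):
    """Correct the state with a position measurement z of variance r."""
    p, v = state
    (p00, p01), (p10, p11) = cov
    s = p00 + r                  # innovation variance
    k0, k1 = p00 / s, p10 / s    # Kalman gain for H = [1, 0]
    y = z - p                    # innovation
    new_state = (p + k0 * y, v + k1 * y)
    new_cov = (
        ((1 - k0) * p00, (1 - k0) * p01),
        (p10 - k1 * p00, p11 - k1 * p01),
    )
    return new_state, new_cov


# One inertial prediction followed by one marker-based correction.
state, cov = (0.0, 0.0), ((1.0, 0.0), (0.0, 1.0))
state, cov = predict(state, cov, accel=1.0, dt=1.0, q=0.0)
prior_var = cov[0][0]
state, cov = update(state, cov, z=1.5, r=2.0)
```

After the update the position estimate lies between the inertial prediction (0.5) and the marker measurement (1.5), and the position variance drops below its predicted value, which is the smoothing effect the abstract attributes to the filter.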

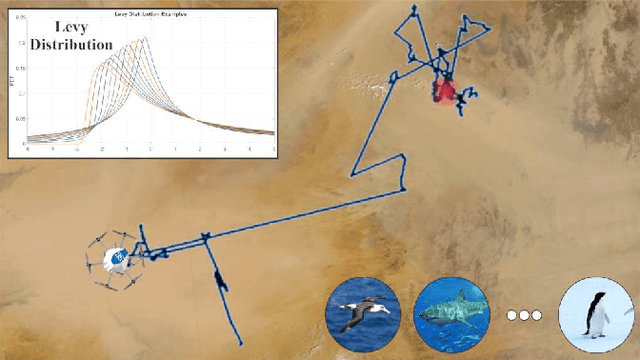

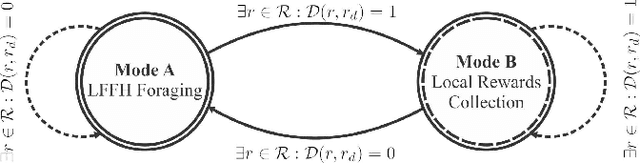

Lévy Flight Foraging Hypothesis-based Autonomous Memoryless Search Under Sparse Rewards

Dec 12, 2018

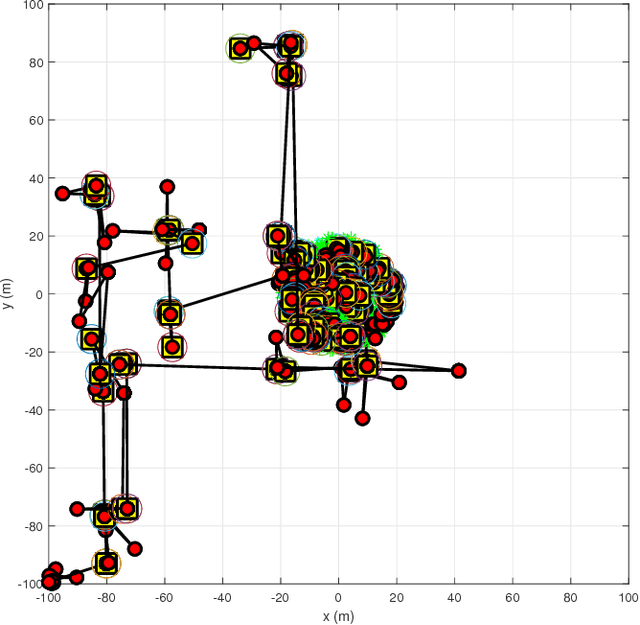

Abstract: Autonomous robots are commonly tasked with the problem of area exploration and search for certain targets or artifacts of interest to be tracked. Traditionally, the problem formulation considered is that of complete search and thus, ideally, identification of all targets of interest. An important problem that is not often addressed, however, is that of time-efficient memoryless search under sparse rewards that may be worth visiting any number of times. In this paper we specifically address the largely understudied problem of optimizing the "time-of-arrival" or "time-of-detection" to robotically search for sparsely distributed rewards (detect targets of interest) within large-scale environments and subject to memoryless exploration. At the core of the proposed solution is the fact that a search-based Lévy walk, consisting of a constant-velocity search following a Lévy flight path, is optimal for searching sparse and randomly distributed target regions in the absence of map memory. A set of results accompanies the presentation of the method, demonstrates its properties and justifies its use towards large-scale area exploration autonomy.
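A minimal sketch of a planar Lévy walk may help make the idea concrete: step lengths are drawn from a power-law distribution p(l) ∝ l^(-mu) for l ≥ l_min by inverse-transform sampling, headings are uniform, and each segment is traversed at constant speed. The exponent `mu`, the minimum step `l_min`, the speed, and the function name `levy_walk` are illustrative assumptions; the abstract does not give the parameters used in the paper.

```python
import math
import random


def levy_walk(n_steps, mu=2.0, l_min=1.0, speed=1.0, seed=0):
    """Generate a 2D Lévy walk as a list of (x, y, t) waypoints.

    Step lengths follow p(l) ~ l**(-mu) for l >= l_min (requires mu > 1).
    Each segment is flown at constant `speed`, so its duration is
    proportional to its length.
    """
    rng = random.Random(seed)
    x, y, t = 0.0, 0.0, 0.0
    path = [(x, y, t)]
    for _ in range(n_steps):
        u = rng.random()  # uniform in [0, 1), so 1 - u is in (0, 1]
        # Inverse-transform sample of the power-law (Pareto) step length.
        length = l_min * (1.0 - u) ** (-1.0 / (mu - 1.0))
        heading = rng.uniform(0.0, 2.0 * math.pi)
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        t += length / speed
        path.append((x, y, t))
    return path


path = levy_walk(200, mu=2.0, l_min=1.0, speed=2.0, seed=1)
```

The heavy tail of the step distribution produces many short local moves interleaved with occasional long relocations, and no map of visited areas is kept, which matches the memoryless setting described in the abstract.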
