Mihir Kulkarni

Reinforcement Learning for Active Perception in Autonomous Navigation

Feb 01, 2026

Abstract:This paper addresses the challenge of active perception within autonomous navigation in complex, unknown environments. Revisiting the foundational principles of active perception, we introduce an end-to-end reinforcement learning framework in which a robot must not only reach a goal while avoiding obstacles, but also actively control its onboard camera to enhance situational awareness. The policy receives observations comprising the robot state, the current depth frame, and a particular local geometry representation built from a short history of depth readings. To couple collision-free motion planning with information-driven active camera control, we augment the navigation reward with a voxel-based information metric. This enables an aerial robot to learn a robust policy that balances goal-directed motion with exploratory sensing. Extensive evaluation demonstrates that our strategy achieves safer flight than fixed, non-actuated camera baselines while also inducing intrinsic exploratory behaviors.
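As a rough illustration of what augmenting a navigation reward with a voxel-based information term could look like, the sketch below counts voxels that the current depth observation reveals for the first time and adds a weighted bonus. The abstract does not specify the metric, so the function names (`voxelize`, `augmented_reward`), the voxel size, and the weight are all hypothetical choices, not the authors' formulation.

```python
from typing import Iterable, Set, Tuple

Voxel = Tuple[int, int, int]
Point = Tuple[float, float, float]


def voxelize(points: Iterable[Point], voxel_size: float) -> Set[Voxel]:
    """Map 3D points (metres) to the integer indices of the voxels containing them."""
    return {tuple(int(c // voxel_size) for c in p) for p in points}


def augmented_reward(
    nav_reward: float,
    seen: Set[Voxel],
    observed_points: Iterable[Point],
    voxel_size: float = 0.5,   # assumed resolution, not from the paper
    info_weight: float = 0.1,  # assumed trade-off weight, not from the paper
) -> Tuple[float, Set[Voxel]]:
    """Add a bonus proportional to the number of newly observed voxels.

    Returns the augmented reward and the updated set of seen voxels.
    """
    current = voxelize(observed_points, voxel_size)
    new_voxels = current - seen
    reward = nav_reward + info_weight * len(new_voxels)
    return reward, seen | current
```

With a term of this kind, pointing the camera at already-mapped space earns no bonus, so a policy is nudged toward views that reveal unseen geometry while the navigation term still drives it to the goal.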

Isaac Lab: A GPU-Accelerated Simulation Framework for Multi-Modal Robot Learning

Nov 06, 2025

Abstract:We present Isaac Lab, the natural successor to Isaac Gym, which extends the paradigm of GPU-native robotics simulation into the era of large-scale multi-modal learning. Isaac Lab combines high-fidelity GPU parallel physics, photorealistic rendering, and a modular, composable architecture for designing environments and training robot policies. Beyond physics and rendering, the framework integrates actuator models, multi-frequency sensor simulation, data collection pipelines, and domain randomization tools, unifying best practices for reinforcement and imitation learning at scale within a single extensible platform. We highlight its application to a diverse set of challenges, including whole-body control, cross-embodiment mobility, contact-rich and dexterous manipulation, and the integration of human demonstrations for skill acquisition. Finally, we discuss upcoming integration with the differentiable, GPU-accelerated Newton physics engine, which promises new opportunities for scalable, data-efficient, and gradient-based approaches to robot learning. We believe Isaac Lab's combination of advanced simulation capabilities, rich sensing, and data-center scale execution will help unlock the next generation of breakthroughs in robotics research.

Performance-guided Task-specific Optimization for Multirotor Design

Oct 06, 2025

Abstract:This paper introduces a methodology for task-specific design optimization of multirotor Micro Aerial Vehicles. By leveraging reinforcement learning, Bayesian optimization, and covariance matrix adaptation evolution strategy, we optimize aerial robot designs guided exclusively by their closed-loop performance in a considered task. Our approach systematically explores the design space of motor pose configurations while enforcing manufacturability constraints and minimal aerodynamic interference. Results demonstrate that the optimized designs outperform conventional multirotor configurations, including fully actuated designs from the literature, in agile waypoint navigation tasks. We build and test one of the optimized designs in the real world to validate the sim2real transferability of our approach.

Semantically-driven Deep Reinforcement Learning for Inspection Path Planning

May 20, 2025

Abstract:This paper introduces a novel semantics-aware inspection planning policy derived through deep reinforcement learning. Reflecting the fact that within autonomous informative path planning missions in unknown environments, it is often only a sparse set of objects of interest that need to be inspected, the method contributes an end-to-end policy that simultaneously performs semantic object visual inspection combined with collision-free navigation. Assuming access only to the instantaneous depth map, the associated segmentation image, the ego-centric local occupancy, and the history of past positions in the robot's neighborhood, the method demonstrates robust generalizability and successful crossing of the sim2real gap. Beyond simulations and extensive comparison studies, the approach is verified in experimental evaluations onboard a flying robot deployed in novel environments with previously unseen semantics and overall geometric configurations.

A Neural Network Mode for PX4 on Embedded Flight Controllers

May 01, 2025

Abstract:This paper contributes an open-sourced implementation of a neural-network based controller framework within the PX4 stack. We develop a custom module for inference on the microcontroller while retaining all of the functionality of the PX4 autopilot. Policies trained in the Aerial Gym Simulator are converted to the TensorFlow Lite format and then built together with PX4 and flashed to the flight controller. The policies substitute the control cascade within PX4 to offer an end-to-end position-setpoint tracking controller directly providing normalized motor RPM setpoints. Experiments conducted in simulation and in the real world show similar tracking performance. We thus provide a flight-ready pipeline for testing neural control policies in the real world. The pipeline simplifies the deployment of neural networks on embedded flight controller hardware, thereby accelerating research on learning-based control. Both the Aerial Gym Simulator and the PX4 module are open-sourced at https://github.com/ntnu-arl/aerial_gym_simulator and https://github.com/SindreMHegre/PX4-Autopilot-public/tree/for_paper. Video: https://youtu.be/lY1OKz_UOqM?si=VtzL243BAY3lblTJ.

MapQA: Open-domain Geospatial Question Answering on Map Data

Mar 10, 2025

Abstract:Geospatial question answering (QA) is a fundamental task in navigation and point of interest (POI) searches. While geospatial QA datasets exist, they are limited in both scale and diversity, often relying solely on textual descriptions of geo-entities without considering their geometries. A major challenge in scaling geospatial QA datasets for reasoning lies in the complexity of geospatial relationships, which require integrating spatial structures, topological dependencies, and multi-hop reasoning capabilities that most text-based QA datasets lack. To address these limitations, we introduce MapQA, a novel dataset that not only provides question-answer pairs but also includes the geometries of geo-entities referenced in the questions. MapQA is constructed using SQL query templates to extract question-answer pairs from OpenStreetMap (OSM) for two study regions: Southern California and Illinois. It consists of 3,154 QA pairs spanning nine question types that require geospatial reasoning, such as neighborhood inference and geo-entity type identification. Compared to existing datasets, MapQA expands both the number and diversity of geospatial question types. We explore two approaches to tackle this challenge: (1) a retrieval-based language model that ranks candidate geo-entities by embedding similarity, and (2) a large language model (LLM) that generates SQL queries from natural language questions and geo-entity attributes, which are then executed against an OSM database. Our findings indicate that retrieval-based methods effectively capture concepts like closeness and direction but struggle with questions that require explicit computations (e.g., distance calculations). LLMs (e.g., GPT and Gemini) excel at generating SQL queries for one-hop reasoning but face challenges with multi-hop reasoning, highlighting a key bottleneck in advancing geospatial QA systems.
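To make the idea of extracting question-answer pairs with SQL query templates concrete, here is a minimal sketch using Python's built-in `sqlite3`. The `pois` table, its columns, the toy rows, and the question wording are all hypothetical; the actual MapQA templates, schema, and geometry handling are not described in the abstract and are likely more involved.

```python
import sqlite3
from typing import List, Tuple


def make_demo_db() -> sqlite3.Connection:
    """Build an in-memory table of toy POIs (made-up data, for illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE pois (name TEXT, type TEXT, neighborhood TEXT)")
    conn.executemany(
        "INSERT INTO pois VALUES (?, ?, ?)",
        [
            ("Cafe A", "cafe", "Downtown"),
            ("Cafe B", "cafe", "Downtown"),
            ("Library C", "library", "Harbor"),
        ],
    )
    return conn


def build_qa_pairs(
    conn: sqlite3.Connection, poi_type: str, neighborhoods: List[str]
) -> List[Tuple[str, List[str]]]:
    """Fill one question template and one SQL template per neighborhood."""
    question_tpl = "Which {poi_type} locations are in {neighborhood}?"
    sql_tpl = (
        "SELECT name FROM pois WHERE type = ? AND neighborhood = ? ORDER BY name"
    )
    pairs = []
    for neighborhood in neighborhoods:
        rows = conn.execute(sql_tpl, (poi_type, neighborhood)).fetchall()
        answers = [row[0] for row in rows]
        if answers:  # skip template instances that have no answer
            question = question_tpl.format(
                poi_type=poi_type, neighborhood=neighborhood
            )
            pairs.append((question, answers))
    return pairs
```

Calling `build_qa_pairs(make_demo_db(), "cafe", ["Downtown", "Harbor"])` yields a single pair for Downtown, since the toy Harbor neighborhood has no cafes.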

Aerial Gym Simulator: A Framework for Highly Parallelized Simulation of Aerial Robots

Mar 03, 2025

Abstract:This paper contributes the Aerial Gym Simulator, a highly parallelized, modular framework for simulation and rendering of arbitrary multirotor platforms based on NVIDIA Isaac Gym. Aerial Gym supports the simulation of under-, fully-, and over-actuated multirotors, offering parallelized geometric controllers alongside a custom GPU-accelerated rendering framework for ray-casting capable of capturing depth, segmentation, and vertex-level annotations from the environment. Multiple examples for key tasks, such as depth-based navigation through reinforcement learning, are provided. The comprehensive set of tools developed within the framework makes it a powerful resource for research on learning for control, planning, and navigation using state information as well as exteroceptive sensor observations. Extensive simulation studies are conducted and successful sim2real transfer of trained policies is demonstrated. The Aerial Gym Simulator is open-sourced at: https://github.com/ntnu-arl/aerial_gym_simulator.

Neural Control Barrier Functions for Safe Navigation

Jul 29, 2024

Abstract:Autonomous robot navigation can be particularly demanding, especially when the surrounding environment is not known and safety of the robot is crucial. This work relates to the synthesis of Control Barrier Functions (CBFs) through data for safe navigation in unknown environments. A novel methodology, inspired by the State Dependent Riccati Equation (SDRE), is proposed to jointly learn CBFs and corresponding safe controllers in simulation. The CBF is used to obtain admissible commands from any nominal, possibly unsafe controller. An approach to apply the CBF inside a safety filter without the need for a consistent map or position estimate is developed. Subsequently, the resulting reactive safety filter is deployed on a multirotor platform integrating a LiDAR sensor both in simulation and real-world experiments.
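The notion of a CBF-based safety filter that turns a nominal, possibly unsafe command into an admissible one can be sketched with a hand-written barrier function. The example below is not the learned CBF of the paper: it assumes single-integrator dynamics (the command is the velocity), one spherical obstacle with known position, and the analytic barrier h(x) = ||x - o||^2 - r^2. For a single constraint, the usual quadratic program has the closed-form projection used here. The function name `cbf_filter` and the parameter `alpha` are illustrative.

```python
from typing import List, Sequence


def cbf_filter(
    x: Sequence[float],
    u_nom: Sequence[float],
    obstacle: Sequence[float],
    radius: float,
    alpha: float = 1.0,  # assumed class-K gain: larger allows faster approach
) -> List[float]:
    """Minimally modify u_nom so that grad_h(x) . u + alpha * h(x) >= 0.

    Assumes x_dot = u and h(x) = ||x - obstacle||^2 - radius^2.
    """
    diff = [xi - oi for xi, oi in zip(x, obstacle)]
    h = sum(d * d for d in diff) - radius ** 2
    grad = [2.0 * d for d in diff]

    # Constraint value for the nominal command; non-negative means already safe.
    slack = sum(g * u for g, u in zip(grad, u_nom)) + alpha * h
    if slack >= 0.0:
        return list(u_nom)

    norm_sq = sum(g * g for g in grad)
    if norm_sq == 0.0:
        # Degenerate case (robot at the obstacle centre): the constraint cannot
        # be satisfied by any command, so fall back to a zero command.
        return [0.0] * len(u_nom)

    # Closed-form solution of min ||u - u_nom||^2 s.t. grad . u + alpha * h >= 0.
    scale = slack / norm_sq
    return [u - scale * g for u, g in zip(u_nom, grad)]
```

Because the filter only acts when the nominal command violates the constraint, safe commands pass through unchanged, which is the property that lets such a filter wrap any nominal controller.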

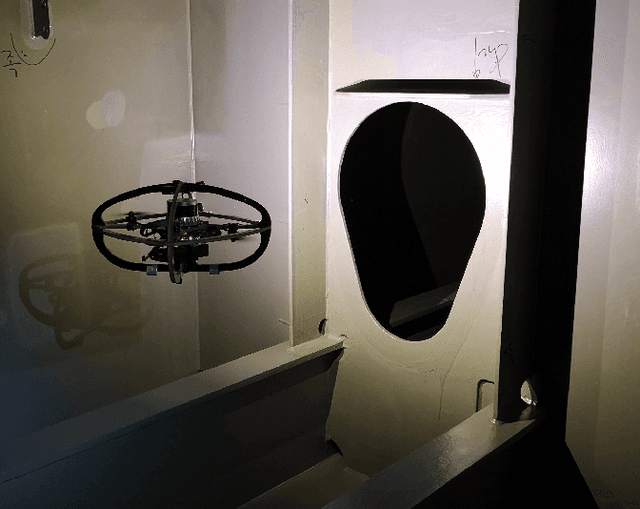

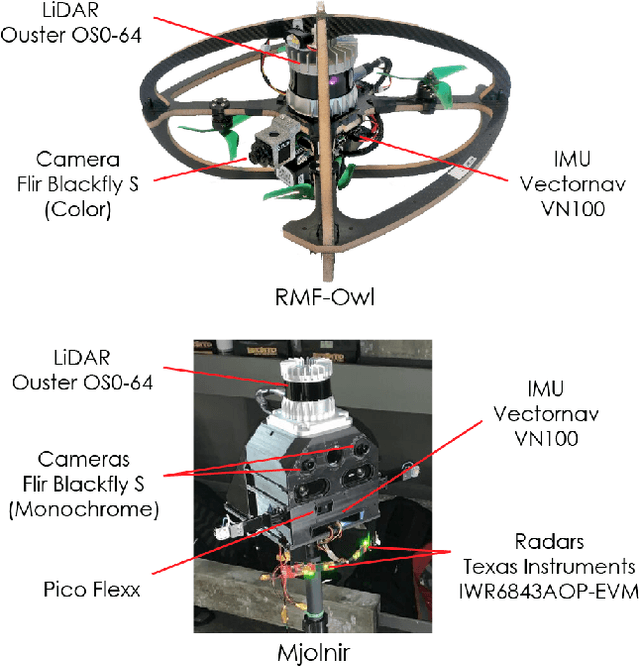

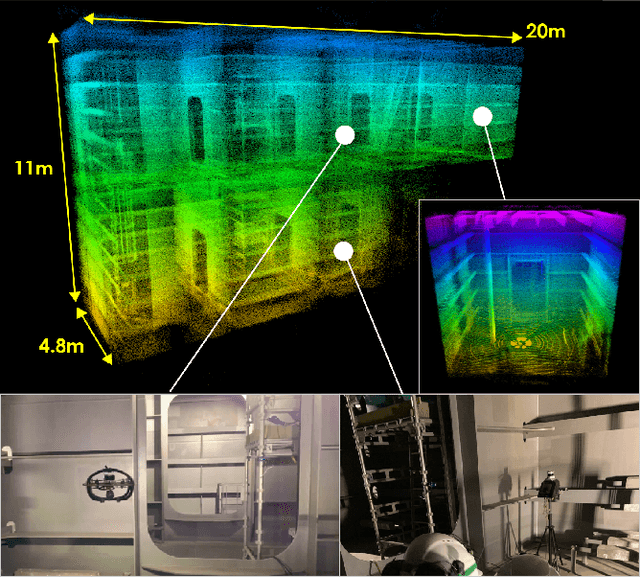

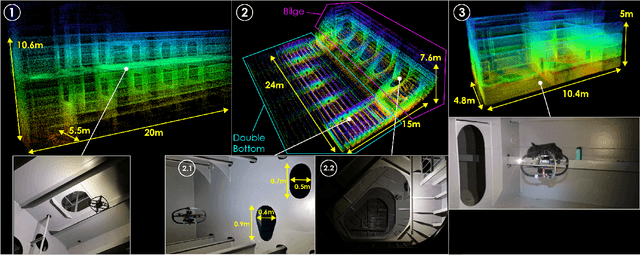

Maritime Vessel Tank Inspection using Aerial Robots: Experience from the field and dataset release

Apr 29, 2024

Abstract:This paper presents field results and lessons learned from the deployment of aerial robots inside ship ballast tanks. Vessel tanks, including ballast tanks and cargo holds, are dark, dusty environments that combine very narrow openings with wide open spaces, creating several challenges for autonomous navigation and inspection operations. We present a system for vessel tank inspection using an aerial robot along with its autonomy modules. We show the results of autonomous exploration and visual inspection in 3 ships spanning 7 distinct types of ballast tank sections. Additionally, we comment on the lessons learned from the field and possible directions for future work. Finally, we release a dataset consisting of the data from these missions along with data collected with a handheld sensor stick.

Reinforcement Learning for Collision-free Flight Exploiting Deep Collision Encoding

Feb 06, 2024

Abstract:This work contributes a novel deep navigation policy that enables collision-free flight of aerial robots based on a modular approach exploiting deep collision encoding and reinforcement learning. The proposed solution builds upon a deep collision encoder that is trained on both simulated and real depth images using supervised learning such that it compresses the high-dimensional depth data to a low-dimensional latent space encoding collision information while accounting for the robot size. This compressed encoding is combined with an estimate of the robot's odometry and the desired target location to train a deep reinforcement learning navigation policy that offers low-latency computation and robust sim2real performance. A set of simulation and experimental studies in diverse environments are conducted and demonstrate the efficiency of the emerged behavior and its resilience in real-life deployments.
