David Wisth

Hilti-Oxford Dataset: A Millimetre-Accurate Benchmark for Simultaneous Localization and Mapping

Aug 21, 2022

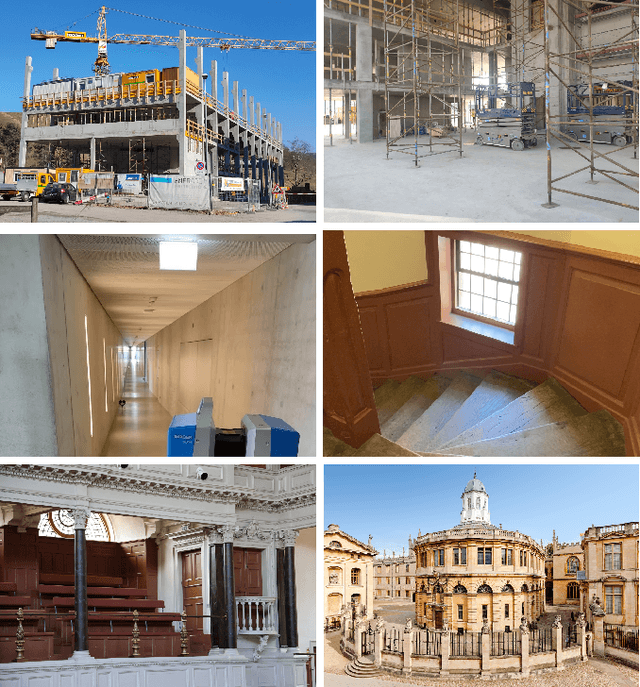

Abstract: Simultaneous Localization and Mapping (SLAM) is being deployed in real-world applications; however, many state-of-the-art solutions still struggle in many common scenarios. A key necessity in progressing SLAM research is the availability of high-quality datasets and fair and transparent benchmarking. To this end, we have created the Hilti-Oxford Dataset, to push state-of-the-art SLAM systems to their limits. The dataset has a variety of challenges ranging from sparse and regular construction sites to a 17th century neoclassical building with fine details and curved surfaces. To encourage multi-modal SLAM approaches, we designed a data collection platform featuring a lidar, five cameras, and an IMU (Inertial Measurement Unit). With the goal of benchmarking SLAM algorithms for tasks where accuracy and robustness are paramount, we implemented a novel ground truth collection method that enables our dataset to accurately measure SLAM pose errors with millimeter accuracy. To further ensure accuracy, the extrinsics of our platform were verified with a micrometer-accurate scanner, and temporal calibration was managed online using hardware time synchronization. The multi-modality and diversity of our dataset attracted a large field of academic and industrial researchers to enter the second edition of the Hilti SLAM challenge, which concluded in June 2022. The results of the challenge show that while the top three teams could achieve accuracy of 2 cm or better for some sequences, the performance dropped off in more difficult sequences.

Team CERBERUS Wins the DARPA Subterranean Challenge: Technical Overview and Lessons Learned

Jul 11, 2022

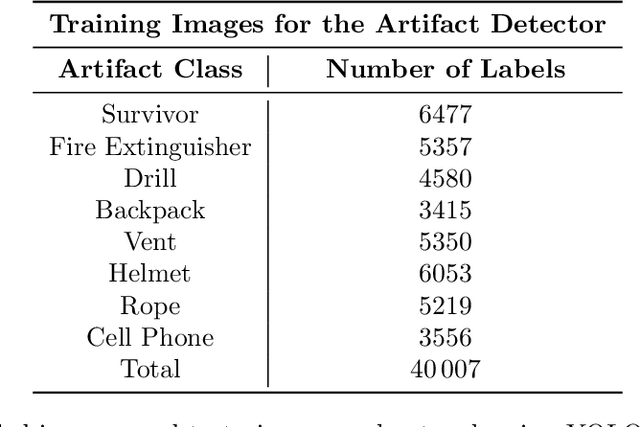

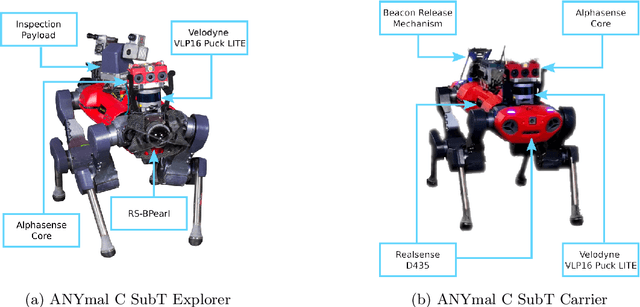

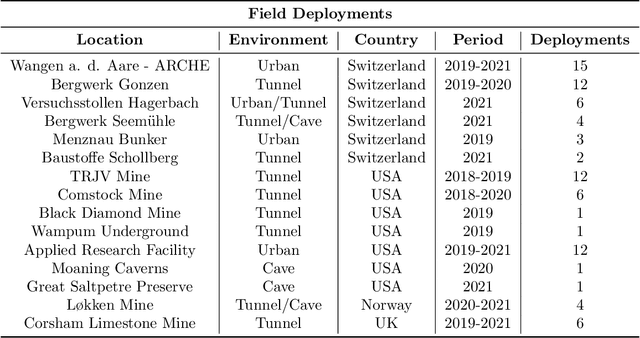

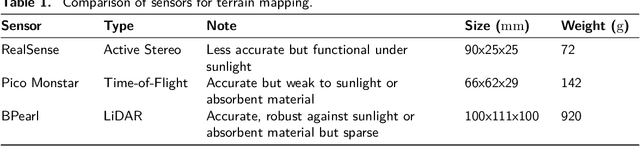

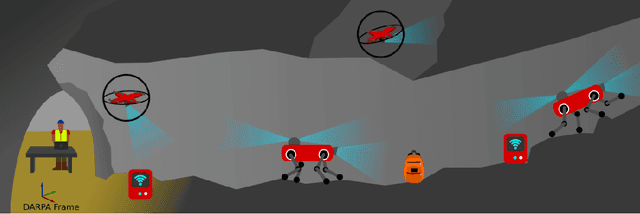

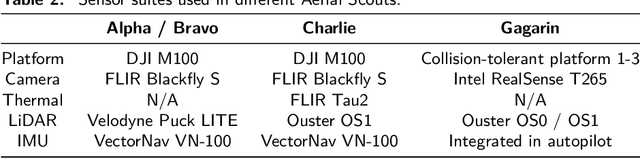

Abstract: This article presents the CERBERUS robotic system-of-systems, which won the DARPA Subterranean Challenge Final Event in 2021. The Subterranean Challenge was organized by DARPA with the vision to facilitate the novel technologies necessary to reliably explore diverse underground environments despite the grueling challenges they present for robotic autonomy. Due to their geometric complexity, degraded perceptual conditions combined with lack of GPS support, austere navigation conditions, and denied communications, subterranean settings render autonomous operations particularly demanding. In response to this challenge, we developed the CERBERUS system which exploits the synergy of legged and flying robots, coupled with robust control especially for overcoming perilous terrain, multi-modal and multi-robot perception for localization and mapping in conditions of sensor degradation, and resilient autonomy through unified exploration path planning and local motion planning that reflects robot-specific limitations. Based on its ability to explore diverse underground environments and its high-level command and control by a single human supervisor, CERBERUS demonstrated efficient exploration, reliable detection of objects of interest, and accurate mapping. In this article, we report results from both the preliminary runs and the final Prize Round of the DARPA Subterranean Challenge, and discuss highlights and challenges faced, alongside lessons learned for the benefit of the community.

CERBERUS: Autonomous Legged and Aerial Robotic Exploration in the Tunnel and Urban Circuits of the DARPA Subterranean Challenge

Jan 18, 2022

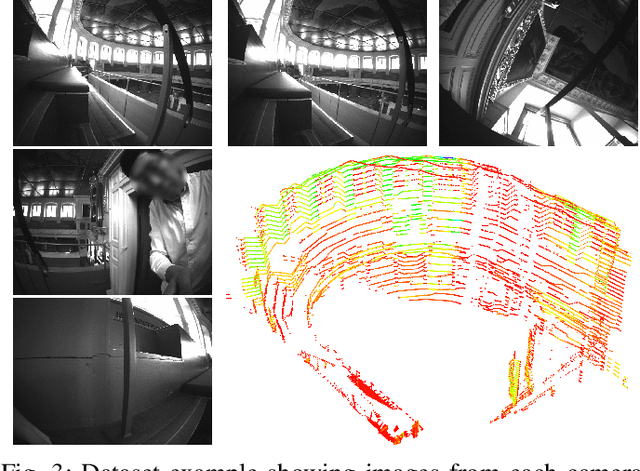

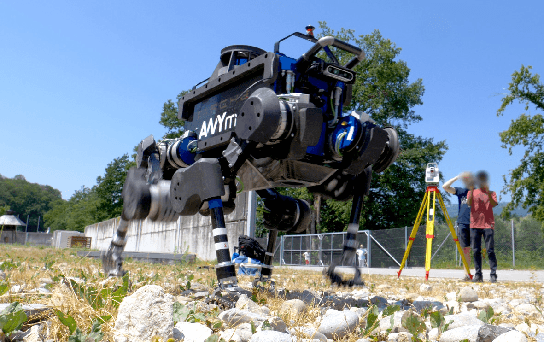

Abstract: Autonomous exploration of subterranean environments constitutes a major frontier for robotic systems as underground settings present key challenges that can render robot autonomy hard to achieve. This has motivated the DARPA Subterranean Challenge, where teams of robots search for objects of interest in various underground environments. In response, the CERBERUS system-of-systems is presented as a unified strategy towards subterranean exploration using legged and flying robots. As primary robots, ANYmal quadruped systems are deployed considering their endurance and potential to traverse challenging terrain. For aerial robots, both conventional and collision-tolerant multirotors are utilized to explore spaces too narrow or otherwise unreachable by ground systems. Anticipating degraded sensing conditions, a complementary multi-modal sensor fusion approach utilizing camera, LiDAR, and inertial data for resilient robot pose estimation is proposed. Individual robot pose estimates are refined by a centralized multi-robot map optimization approach to improve the reported location accuracy of detected objects of interest in the DARPA-defined coordinate frame. Furthermore, a unified exploration path planning policy is presented to facilitate the autonomous operation of both legged and aerial robots in complex underground networks. Finally, to enable communication between the robots and the base station, CERBERUS utilizes a ground rover with a high-gain antenna and an optical fiber connection to the base station, alongside breadcrumbing of wireless nodes by our legged robots. We report results from the CERBERUS system-of-systems deployment at the DARPA Subterranean Challenge Tunnel and Urban Circuits, along with the current limitations and the lessons learned for the benefit of the community.

Balancing the Budget: Feature Selection and Tracking for Multi-Camera Visual-Inertial Odometry

Sep 13, 2021

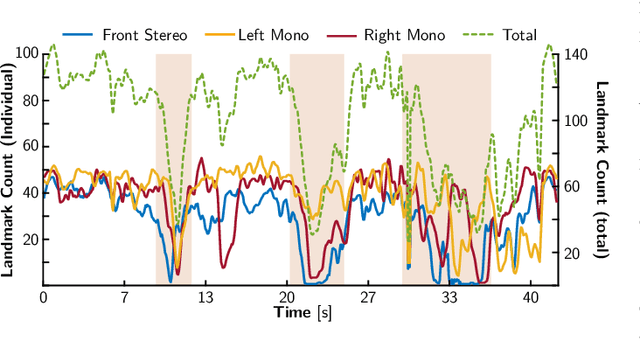

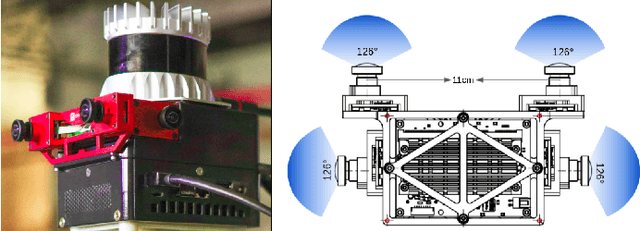

Abstract: We present a multi-camera visual-inertial odometry system based on factor graph optimization which estimates motion by using all cameras simultaneously while retaining a fixed overall feature budget. We focus on motion tracking in challenging environments such as in narrow corridors and dark spaces with aggressive motions and abrupt lighting changes. These scenarios cause traditional monocular or stereo odometry to fail. While tracking motion across extra cameras should theoretically prevent failures, it causes additional complexity and computational burden. To overcome these challenges, we introduce two novel methods to improve multi-camera feature tracking. First, instead of tracking features separately in each camera, we track features continuously as they move from one camera to another. This increases accuracy and achieves a more compact factor graph representation. Second, we select a fixed budget of tracked features which are spread across the cameras to ensure that the limited computational budget is never exceeded. We have found that using a smaller set of informative features can maintain the same tracking accuracy while reducing back-end optimization time. Our proposed method was extensively tested using a hardware-synchronized device containing an IMU and four cameras (a front stereo pair and two lateral) in scenarios including an underground mine, large open spaces, and building interiors with narrow stairs and corridors. Compared to stereo-only state-of-the-art VIO methods, our approach reduces the drift rate (RPE) by up to 80% in translation and 39% in rotation.
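The fixed-budget idea in this abstract can be illustrated with a minimal sketch. It assumes each candidate feature carries a camera index and a scalar quality score (both hypothetical; the abstract does not state the selection criterion) and picks features round-robin across cameras, best score first, until the budget is spent. This is only one simple way to spread a budget across cameras, not the method used in the paper.

```python
from collections import defaultdict
from typing import NamedTuple


class Feature(NamedTuple):
    camera_id: int   # which camera observed the feature (hypothetical field)
    feature_id: int  # unique identifier (hypothetical field)
    score: float     # assumed quality score, higher is better


def select_features(candidates: list[Feature], budget: int) -> list[Feature]:
    """Pick at most `budget` features, spread round-robin across cameras."""
    per_camera = defaultdict(list)
    for feat in candidates:
        per_camera[feat.camera_id].append(feat)
    # Sort ascending so that pop() returns the highest-scoring feature.
    for feats in per_camera.values():
        feats.sort(key=lambda f: f.score)

    selected: list[Feature] = []
    # Keep cycling over the cameras until the budget is used or nothing is left.
    while len(selected) < budget and any(per_camera.values()):
        for camera_id in sorted(per_camera):
            if len(selected) >= budget:
                break
            if per_camera[camera_id]:
                selected.append(per_camera[camera_id].pop())
    return selected
```

Because the loop stops as soon as `budget` features are chosen, the number of features handed to the back end never exceeds the budget, regardless of how many cameras or candidates there are.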

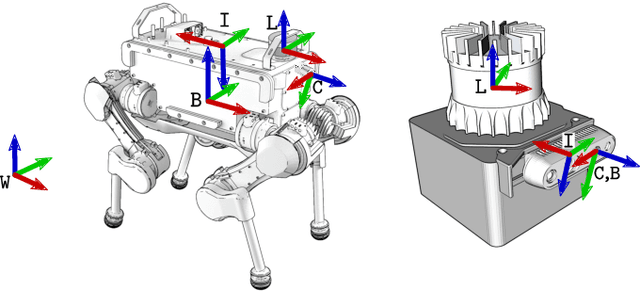

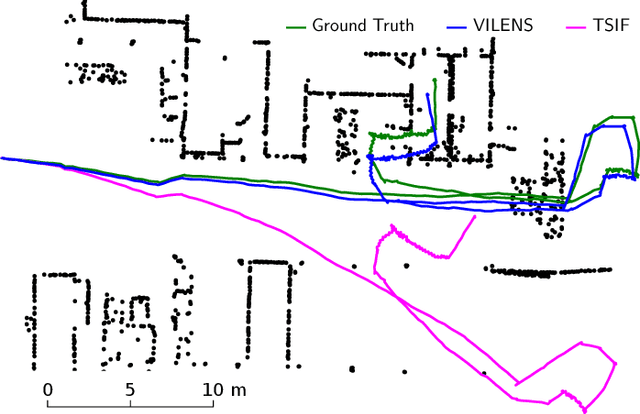

VILENS: Visual, Inertial, Lidar, and Leg Odometry for All-Terrain Legged Robots

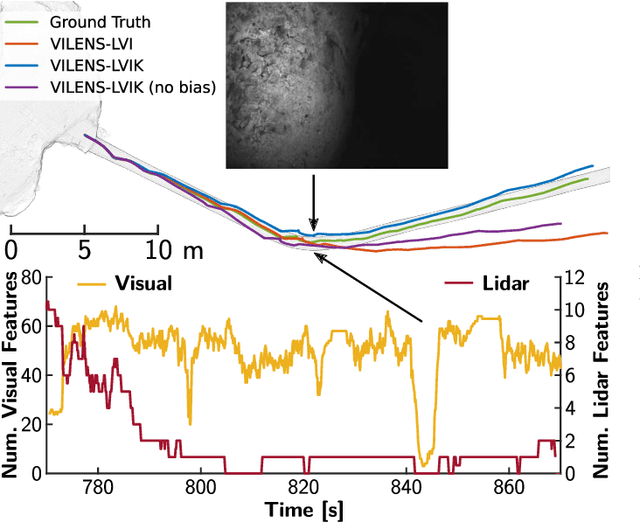

Jul 15, 2021

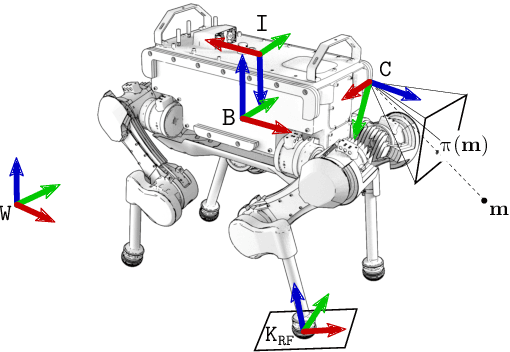

Abstract: We present VILENS (Visual Inertial Lidar Legged Navigation System), an odometry system for legged robots based on factor graphs. The key novelty is the tight fusion of four different sensor modalities to achieve reliable operation when the individual sensors would otherwise produce degenerate estimation. To minimize leg odometry drift, we extend the robot's state with a linear velocity bias term which is estimated online. This bias is only observable because of the tight fusion of this preintegrated velocity factor with vision, lidar, and IMU factors. Extensive experimental validation on the ANYmal quadruped robots is presented, for a total duration of 2 h and 1.8 km traveled. The experiments involved dynamic locomotion over loose rocks, slopes, and mud; these included perceptual challenges, such as dark and dusty underground caverns or open, feature-deprived areas, as well as mobility challenges such as slipping and terrain deformation. We show an average improvement of 62% in translational and 51% in rotational error compared to a state-of-the-art loosely coupled approach. To demonstrate its robustness, VILENS was also integrated with a perceptive controller and a local path planner.
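To see why a velocity bias term helps, consider a one-dimensional toy example: integrating a leg-odometry velocity that carries a constant offset produces position drift that grows linearly with time, and subtracting the bias before integration removes it. The sketch below assumes a known, constant bias and uses made-up numbers; VILENS instead estimates the bias online inside a factor graph, which this example does not attempt to reproduce.

```python
def integrate_position(velocities, dt, bias=0.0):
    """Integrate 1D velocity samples (m/s) into a position (m), removing `bias`."""
    position = 0.0
    for v in velocities:
        position += (v - bias) * dt
    return position


# Hypothetical numbers: the robot truly moves at 0.5 m/s for 10 s, but
# leg odometry over-reads by a constant 0.05 m/s (e.g. due to foot slip).
dt = 0.01
true_velocity = 0.5
bias = 0.05
measured = [true_velocity + bias] * 1000

drifting = integrate_position(measured, dt)              # bias ignored
corrected = integrate_position(measured, dt, bias=bias)  # bias removed
```

With these numbers the uncorrected estimate ends roughly 0.5 m past the true 5 m travelled (0.05 m/s over 10 s), while the corrected estimate stays at about 5 m.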

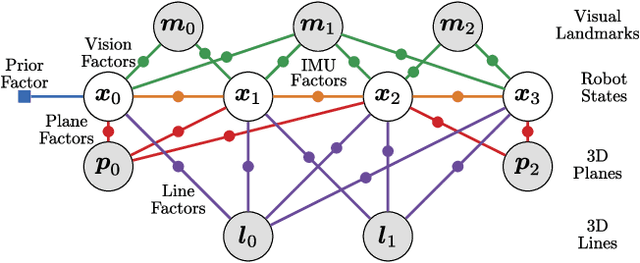

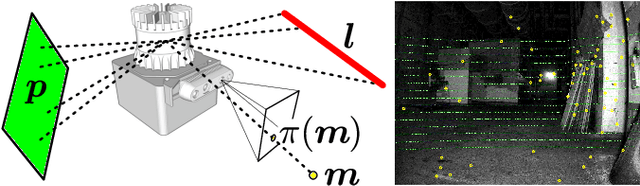

Unified Multi-Modal Landmark Tracking for Tightly Coupled Lidar-Visual-Inertial Odometry

Nov 13, 2020

Abstract: We present an efficient multi-sensor odometry system for mobile platforms that jointly optimizes visual, lidar, and inertial information within a single integrated factor graph. This runs in real-time at full framerate using fixed lag smoothing. To perform such tight integration, a new method to extract 3D line and planar primitives from lidar point clouds is presented. This approach overcomes the suboptimality of typical frame-to-frame tracking methods by treating the primitives as landmarks and tracking them over multiple scans. True integration of lidar features with standard visual features and IMU is made possible using a subtle passive synchronization of lidar and camera frames. The lightweight formulation of the 3D features allows for real-time execution on a single CPU. Our proposed system has been tested on a variety of platforms and scenarios, including underground exploration with a legged robot and outdoor scanning with a dynamically moving handheld device, for a total duration of 96 min and 2.4 km traveled distance. In these test sequences, using only one exteroceptive sensor leads to failure due to either underconstrained geometry (affecting lidar) or textureless areas caused by aggressive lighting changes (affecting vision). In these conditions, our factor graph naturally uses the best information available from each sensor modality without any hard switches.

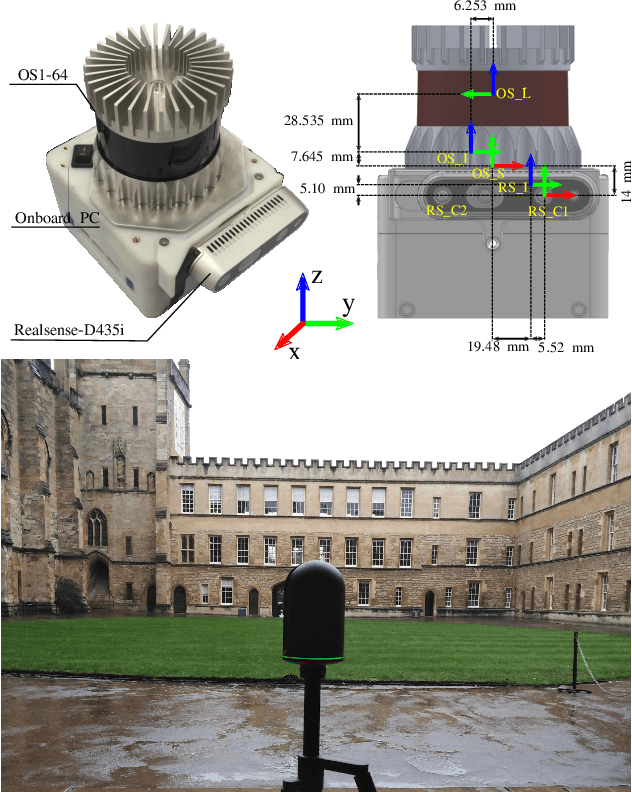

The Newer College Dataset: Handheld LiDAR, Inertial and Vision with Ground Truth

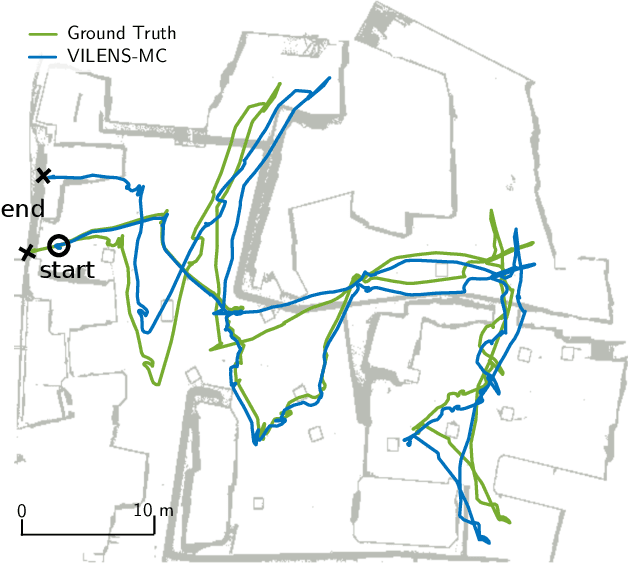

Mar 12, 2020

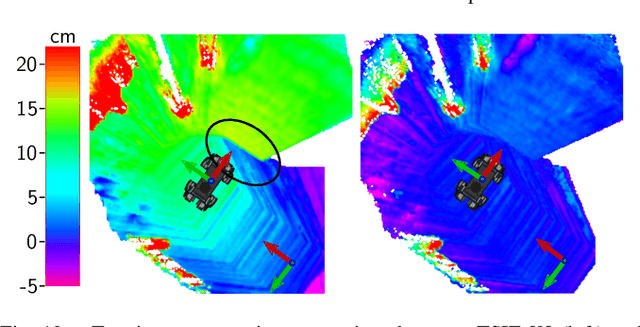

Abstract: In this paper we present a large dataset with a variety of mobile mapping sensors collected using a handheld device carried at typical walking speeds for nearly 2.2 km through New College, Oxford. The dataset includes data from two commercially available devices - a stereoscopic-inertial camera and a multi-beam 3D LiDAR, which also provides inertial measurements. Additionally, we used a tripod-mounted survey grade LiDAR scanner to capture a detailed millimeter-accurate 3D map of the test location (containing $\sim$290 million points). Using the map we inferred centimeter-accurate 6 Degree of Freedom (DoF) ground truth for the position of the device for each LiDAR scan to enable better evaluation of LiDAR and vision localisation, mapping and reconstruction systems. This ground truth is the particular novel contribution of this dataset and we believe that it will enable systematic evaluation which many similar datasets have lacked. The dataset combines built environments, open spaces and vegetated areas so as to test localization and mapping systems such as vision-based navigation, visual and LiDAR SLAM, 3D LiDAR reconstruction and appearance-based place recognition. The dataset is available at: ori.ox.ac.uk/datasets/newer-college-dataset

Preintegrated Velocity Bias Estimation to Overcome Contact Nonlinearities in Legged Robot Odometry

Oct 22, 2019

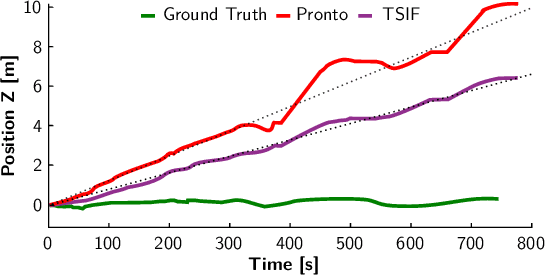

Abstract: In this paper, we present a novel factor graph formulation to estimate the pose and velocity of a quadruped robot on slippery and deformable terrains. The factor graph includes a new type of preintegrated velocity factor that incorporates velocity inputs from leg odometry. To account for leg odometry drift, we extend the robot's state vector with a bias term for this preintegrated velocity factor. This term incorporates all the effects of unmodeled uncertainties at the contact point, such as slippery or deformable grounds and leg flexibility. The bias term can be accurately estimated thanks to the tight fusion of the preintegrated velocity factor with stereo vision and IMU factors, without which it would be unobservable. The system has been validated on several scenarios that involve dynamic motions of the ANYmal robot on loose rocks, slopes and muddy ground. We demonstrate a 26% improvement of relative pose error compared to our previous work and 52% compared to a state-of-the-art proprioceptive state estimator.

Robust Legged Robot State Estimation Using Factor Graph Optimization

Apr 05, 2019

Abstract: Legged robots, specifically quadrupeds, are becoming increasingly attractive for industrial applications such as inspection. However, to leave the laboratory and to become useful to an end user requires reliability in harsh conditions. From the perspective of perception, it is essential to be able to accurately estimate the robot's state despite challenges such as uneven or slippery terrain, textureless and reflective scenes, as well as dynamic camera occlusions. We are motivated to reduce the dependency on foot contact classifications, which fail when slipping, and to reduce position drift during dynamic motions such as trotting. To this end, we present a factor graph optimization method for state estimation which tightly fuses and smooths inertial navigation, leg odometry and visual odometry. The effectiveness of the approach is demonstrated using the ANYmal quadruped robot navigating in a realistic outdoor industrial environment. This experiment included trotting, walking, crossing obstacles and ascending a staircase. The proposed approach decreased the relative position error by up to 55% and absolute position error by 76% compared to kinematic-inertial odometry.
