Yiduo Wang

RePose: A Real-Time 3D Human Pose Estimation and Biomechanical Analysis Framework for Rehabilitation

Jan 02, 2026

Abstract:We propose RePose, a real-time 3D human pose estimation and motion analysis method for rehabilitation training. It monitors and evaluates patients' motion in real time during rehabilitation, providing immediate feedback and guidance to help patients execute rehabilitation exercises correctly. First, we introduce a unified pipeline for end-to-end real-time human pose estimation and motion analysis using RGB video input from multiple cameras, which can be applied to rehabilitation training. The pipeline helps monitor and correct patients' actions, aiding them in regaining muscle strength and motor function. Second, we propose a fast tracking method for medical rehabilitation scenarios with multiple-person interference, which requires less than 1 ms to track a single frame. Additionally, we modify SmoothNet for real-time posture estimation, effectively reducing pose estimation errors and restoring the patient's true motion state, making it visually smoother. Finally, we use the Unity platform for real-time monitoring and evaluation of patients' motion during rehabilitation and to display muscle stress conditions to assist patients with their rehabilitation training.

Disentangling Hardness from Noise: An Uncertainty-Driven Model-Agnostic Framework for Long-Tailed Remote Sensing Classification

Jan 01, 2026

Abstract:Long-tailed distributions are pervasive in remote sensing due to the inherently imbalanced occurrence of grounded objects. However, a critical challenge remains largely overlooked: disentangling hard tail samples from noisy, ambiguous ones. Conventional methods often indiscriminately emphasize all low-confidence samples, leading to overfitting on noisy data. To bridge this gap, building upon Evidential Deep Learning, we propose a model-agnostic uncertainty-aware framework termed DUAL, which dynamically disentangles prediction uncertainty into Epistemic Uncertainty (EU) and Aleatoric Uncertainty (AU). Specifically, we introduce EU as an indicator of sample scarcity to guide a reweighting strategy for hard-to-learn tail samples, while leveraging AU to quantify data ambiguity through an adaptive label smoothing mechanism that suppresses the impact of noise. Extensive experiments on multiple datasets across various backbones demonstrate the effectiveness and generalization of our framework, which surpasses strong baselines such as TGN and SADE. Ablation studies provide further insights into the crucial choices of our design.
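As a rough illustration of the idea in this abstract, the Python sketch below derives two uncertainty proxies from Dirichlet evidence, as is common in Evidential Deep Learning, and uses them for sample reweighting and adaptive label smoothing. It is not the DUAL formulation: the function names, the use of vacuity as an epistemic proxy, the normalized entropy of the expected class probabilities as an ambiguity proxy, and the parameters `gamma` and `eps_max` are all assumptions made for illustration.

```python
import math


def dirichlet_uncertainties(evidence):
    """Return (probs, vacuity, ambiguity) for one sample.

    evidence: non-negative per-class evidence values (hypothetical
    network output). All three quantities are illustrative proxies.
    """
    k = len(evidence)
    alpha = [e + 1.0 for e in evidence]      # Dirichlet parameters
    strength = sum(alpha)                    # total Dirichlet strength
    probs = [a / strength for a in alpha]    # expected class probabilities
    # Vacuity is high when little total evidence was collected
    # (used here as a stand-in for epistemic uncertainty).
    vacuity = k / strength
    # Normalized entropy of the expected probabilities, in [0, 1]
    # (used here as a crude stand-in for data ambiguity).
    ambiguity = -sum(p * math.log(p) for p in probs) / math.log(k)
    return probs, vacuity, ambiguity


def sample_weight(vacuity, gamma=1.0):
    """Up-weight samples with little evidence (assumed linear rule)."""
    return 1.0 + gamma * vacuity


def adaptive_target(label, k, ambiguity, eps_max=0.2):
    """Label-smoothed target whose smoothing grows with ambiguity."""
    eps = eps_max * ambiguity
    return [(1.0 - eps) * (1.0 if i == label else 0.0) + eps / k
            for i in range(k)]


if __name__ == "__main__":
    probs, vac, amb = dirichlet_uncertainties([97.0, 0.0, 0.0])
    print(sample_weight(vac), adaptive_target(0, 3, amb))
```

In this sketch a sample with no evidence at all receives the largest weight and the strongest smoothing, which is exactly the situation where a real method would need to separate scarcity from noise more carefully than these proxies do.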

Online Dynamic SLAM with Incremental Smoothing and Mapping

Sep 10, 2025

Abstract:Dynamic SLAM methods jointly estimate the static and dynamic scene components; however, existing approaches, while accurate, are computationally expensive and unsuitable for online applications. In this work, we present the first application of incremental optimisation techniques to Dynamic SLAM. We introduce a novel factor-graph formulation and system architecture designed to take advantage of existing incremental optimisation methods and support online estimation. On multiple datasets, we demonstrate that our method matches or exceeds state-of-the-art accuracy in camera pose and object motion. We further analyse the structural properties of our approach to demonstrate its scalability and provide insight into the challenges of solving Dynamic SLAM incrementally. Finally, we show that our formulation results in a problem structure well suited to incremental solvers, while our system architecture further enhances performance, achieving a 5x speed-up over existing methods.

DynoSAM: Open-Source Smoothing and Mapping Framework for Dynamic SLAM

Jan 21, 2025

Abstract:Traditional Visual Simultaneous Localization and Mapping (vSLAM) systems focus solely on static scene structures, overlooking dynamic elements in the environment. Although effective for accurate visual odometry in complex scenarios, these methods discard crucial information about moving objects. By incorporating this information into a Dynamic SLAM framework, the motion of dynamic entities can be estimated, enhancing navigation whilst ensuring accurate localization. However, the fundamental formulation of Dynamic SLAM remains an open challenge, with no consensus on the optimal approach for accurate motion estimation within a SLAM pipeline. Therefore, we developed DynoSAM, an open-source framework for Dynamic SLAM that enables the efficient implementation, testing, and comparison of various Dynamic SLAM optimization formulations. DynoSAM integrates static and dynamic measurements into a unified optimization problem solved using factor graphs, simultaneously estimating camera poses, static scene, object motion or poses, and object structures. We evaluate DynoSAM across diverse simulated and real-world datasets, achieving state-of-the-art motion estimation in indoor and outdoor environments, with substantial improvements over existing systems. Additionally, we demonstrate DynoSAM's utility in downstream applications, including 3D reconstruction of dynamic scenes and trajectory prediction, thereby showcasing its potential for advancing dynamic object-aware SLAM systems. DynoSAM is open-sourced at https://github.com/ACFR-RPG/DynOSAM.

DynORecon: Dynamic Object Reconstruction for Navigation

Sep 30, 2024

Abstract:This paper presents DynORecon, a Dynamic Object Reconstruction system that leverages the information provided by Dynamic SLAM to simultaneously generate a volumetric map of observed moving entities while estimating free space to support navigation. By capitalising on the motion estimations provided by Dynamic SLAM, DynORecon continuously refines the representation of dynamic objects to eliminate residual artefacts from past observations and incrementally reconstructs each object, seamlessly integrating new observations to capture previously unseen structures. Our system is highly efficient (~20 FPS) and produces accurate (~10 cm) reconstructions of dynamic objects using simulated and real-world outdoor datasets.

The Importance of Coordinate Frames in Dynamic SLAM

Dec 07, 2023

Abstract:Most simultaneous localisation and mapping (SLAM) systems have traditionally assumed a static world, which does not align with real-world scenarios. To enable robots to safely navigate and plan in dynamic environments, it is essential to employ representations capable of handling moving objects. Dynamic SLAM is an emerging field in SLAM research as it improves the overall system accuracy while providing additional estimation of object motions. The state-of-the-art literature suggests two main formulations for Dynamic SLAM, representing dynamic object points in either the world or the object coordinate frame. While expressing object points in a local reference frame may seem intuitive, it does not necessarily lead to the most accurate and robust solutions. This paper presents a thorough analysis of various Dynamic SLAM formulations, identifying the best approach to address the problem. To this end, we introduce a front-end agnostic framework using GTSAM that can be used to evaluate various Dynamic SLAM formulations.

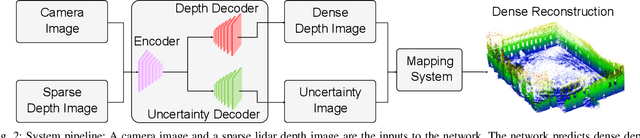

3D Lidar Reconstruction with Probabilistic Depth Completion for Robotic Navigation

Jul 25, 2022

Abstract:Safe motion planning in robotics requires planning into space which has been verified to be free of obstacles. However, obtaining such environment representations using lidars is challenging by virtue of the sparsity of their depth measurements. We present a learning-aided 3D lidar reconstruction framework that upsamples sparse lidar depth measurements with the aid of overlapping camera images so as to generate denser reconstructions with more definitively free space than can be achieved with the raw lidar measurements alone. We use a neural network with an encoder-decoder structure to predict dense depth images along with depth uncertainty estimates which are fused using a volumetric mapping system. We conduct experiments on real-world outdoor datasets captured using a handheld sensing device and a legged robot. Using input data from a 16-beam lidar mapping a building network, our experiments showed that the amount of estimated free space was increased by more than 40% with our approach. We also show that our approach trained on a synthetic dataset generalises well to real-world outdoor scenes without additional fine-tuning. Finally, we demonstrate how motion planning tasks can benefit from these denser reconstructions.

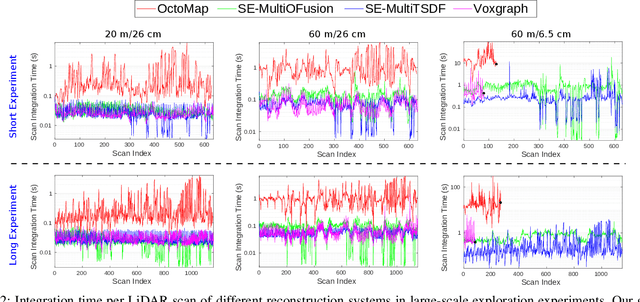

Scalable and Elastic LiDAR Reconstruction in Complex Environments Through Spatial Analysis

Jun 29, 2021

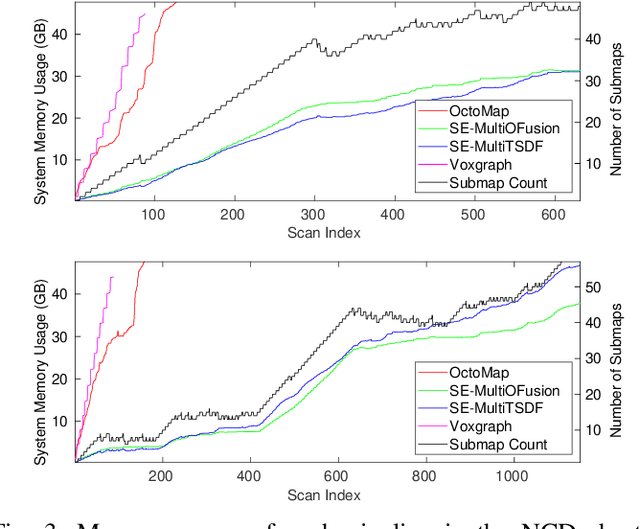

Abstract:This paper presents novel strategies for spawning and fusing submaps within an elastic dense 3D reconstruction system. The proposed system uses spatial understanding of the scanned environment to control memory usage growth by fusing overlapping submaps in different ways. This allows the number of submaps and memory consumption to scale with the size of the environment rather than the duration of exploration. By analysing spatial overlap, our system segments distinct spaces, such as rooms and stairwells on the fly during exploration. Additionally, we present a new mathematical formulation of relative uncertainty between poses to improve the global consistency of the reconstruction. Performance is demonstrated using a multi-floor multi-room indoor experiment, a large-scale outdoor experiment and a simulated dataset. Relative to our baseline, the presented approach demonstrates improved scalability and accuracy.

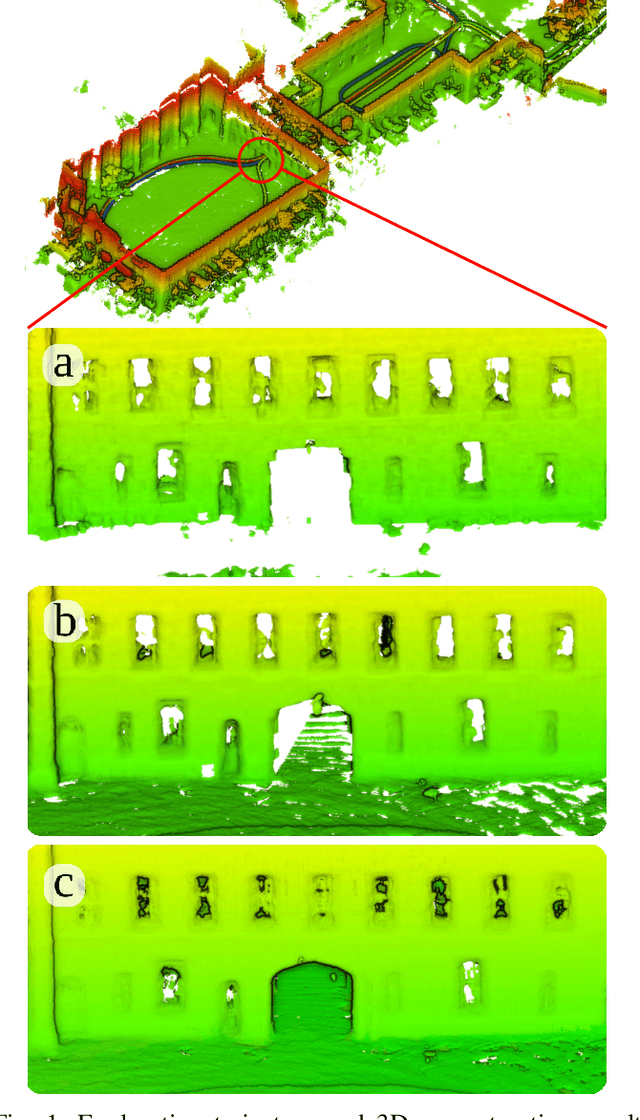

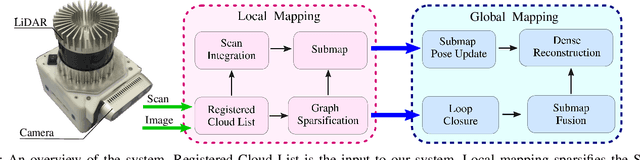

Elastic and Efficient LiDAR Reconstruction for Large-Scale Exploration Tasks

Oct 19, 2020

Abstract:We present an efficient, elastic 3D LiDAR reconstruction framework which can reconstruct up to maximum LiDAR ranges (60 m) at multiple frames per second, thus enabling robot exploration in large-scale environments. Our approach only requires a CPU. We focus on three main challenges of large-scale reconstruction: integration of long-range LiDAR scans at high frequency, the capacity to deform the reconstruction after loop closures are detected, and scalability for long-duration exploration. Our system extends upon a state-of-the-art efficient RGB-D volumetric reconstruction technique, called supereight, to support LiDAR scans and a newly developed submapping technique to allow for dynamic correction of the 3D reconstruction. We then introduce a novel pose graph sparsification and submap fusion feature to make our system more scalable for large environments. We evaluate the performance using a published dataset captured by a handheld mapping device scanning a set of buildings, and with a mobile robot exploring an underground room network. Experimental results demonstrate that our system can reconstruct at 3 Hz with 60 m sensor range and ~5 cm resolution, while state-of-the-art approaches can only reconstruct to 25 cm resolution or 20 m range at the same frequency.

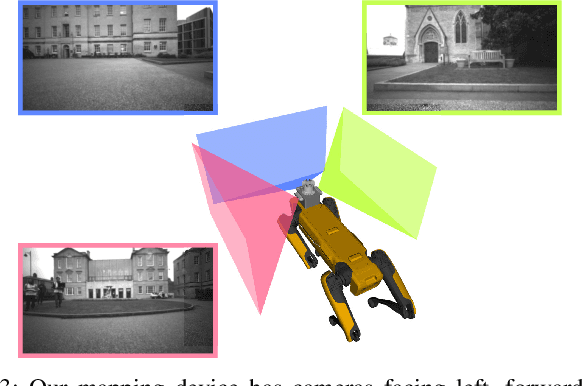

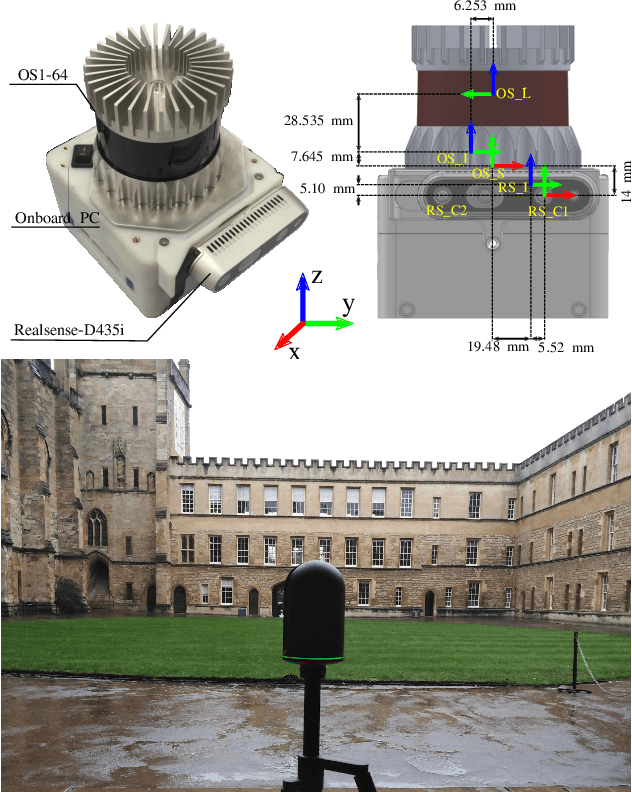

The Newer College Dataset: Handheld LiDAR, Inertial and Vision with Ground Truth

Mar 12, 2020

Abstract:In this paper we present a large dataset with a variety of mobile mapping sensors collected using a handheld device carried at typical walking speeds for nearly 2.2 km through New College, Oxford. The dataset includes data from two commercially available devices: a stereoscopic-inertial camera and a multi-beam 3D LiDAR, which also provides inertial measurements. Additionally, we used a tripod-mounted survey-grade LiDAR scanner to capture a detailed millimeter-accurate 3D map of the test location (containing ~290 million points). Using the map, we inferred centimeter-accurate 6 degrees of freedom (DoF) ground truth for the position of the device for each LiDAR scan to enable better evaluation of LiDAR and vision localisation, mapping and reconstruction systems. This ground truth is the particular novel contribution of this dataset and we believe that it will enable systematic evaluation which many similar datasets have lacked. The dataset combines built environments, open spaces and vegetated areas so as to test localization and mapping systems such as vision-based navigation, visual and LiDAR SLAM, 3D LiDAR reconstruction and appearance-based place recognition. The dataset is available at: ori.ox.ac.uk/datasets/newer-college-dataset
