Marija Popović

Active Informative Planning for UAV-based Weed Mapping using Discrete Gaussian Process Representations

Jan 19, 2026

Abstract:Accurate agricultural weed mapping using unmanned aerial vehicles (UAVs) is crucial for precision farming. While traditional methods rely on rigid, pre-defined flight paths and intensive offline processing, informative path planning (IPP) offers a way to collect data adaptively where it is most needed. Gaussian process (GP) mapping provides a continuous model of weed distribution with built-in uncertainty. However, GPs must be discretised for practical use in autonomous planning. Many discretisation techniques exist, but the impact of discrete representation choice remains poorly understood. This paper investigates how different discrete GP representations influence both mapping quality and mission-level performance in UAV-based weed mapping. Considering a UAV equipped with a downward-facing camera, we implement a receding-horizon IPP strategy that selects sampling locations based on the map uncertainty, travel cost, and coverage penalties. We investigate multiple discretisation strategies for representing the GP posterior and use their induced map partitions to generate candidate viewpoints for planning. Experiments on real-world weed distributions show that representation choice significantly affects exploration behaviour and efficiency. Overall, our results demonstrate that discretisation is not only a representational detail but a key design choice that shapes planning dynamics, coverage efficiency, and computational load in online UAV weed mapping.
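To make the idea of selecting sampling locations from map uncertainty, travel cost, and coverage penalties concrete, the following is a minimal sketch of one greedy planning step. It is not the paper's planner: the function name `select_viewpoint`, the linear utility, and the weights `w_travel` and `w_coverage` are all assumptions chosen for illustration, and the per-cell variances are made-up numbers standing in for a discretised GP posterior.

```python
import math

def select_viewpoint(current, candidates, variance, visits,
                     w_travel=0.1, w_coverage=0.5):
    """Pick the candidate viewpoint with the highest utility.

    Hypothetical utility (an assumption, not the paper's objective):
        predictive variance at the candidate
        - w_travel   * Euclidean distance from the current position
        - w_coverage * number of previous visits to the candidate
    """
    def utility(c):
        travel = math.dist(current, c)
        return variance[c] - w_travel * travel - w_coverage * visits.get(c, 0)

    return max(candidates, key=utility)

# Illustrative values only: three candidate cell centres in metres.
candidates = [(0.0, 0.0), (3.0, 4.0), (1.0, 0.0)]
variance = {(0.0, 0.0): 0.2, (3.0, 4.0): 1.2, (1.0, 0.0): 0.6}
visits = {(0.0, 0.0): 1}  # the current cell has already been observed once

best = select_viewpoint((0.0, 0.0), candidates, variance, visits)
near = select_viewpoint((0.0, 0.0), candidates, variance, visits, w_travel=0.5)
```

In a receding-horizon setting this step would be repeated after each measurement, with the variances refreshed from the updated map. Raising `w_travel` in the sketch shifts the choice from the distant, highly uncertain cell to the nearby one.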

An Informative Planning Framework for Target Tracking and Active Mapping in Dynamic Environments with ASVs

Aug 21, 2025

Abstract:Mobile robot platforms are increasingly being used to automate information gathering tasks such as environmental monitoring. Efficient target tracking in dynamic environments is critical for applications such as search and rescue and pollutant cleanups. In this letter, we study active mapping of floating targets that drift due to environmental disturbances such as wind and currents. This is a challenging problem as it involves predicting both spatial and temporal variations in the map due to changing conditions. We propose an informative path planning framework to map an arbitrary number of moving targets with initially unknown positions in dynamic environments. A key component of our approach is a spatiotemporal prediction network that predicts target position distributions over time. We propose an adaptive planning objective for target tracking that leverages these predictions. Simulation experiments show that our proposed planning objective improves target tracking performance compared to existing methods that consider only entropy reduction as the planning objective. Finally, we validate our approach in field tests using an autonomous surface vehicle, showcasing its ability to track targets in real-world monitoring scenarios.

Uncertainty-Informed Active Perception for Open Vocabulary Object Goal Navigation

Jun 16, 2025

Abstract:Mobile robots exploring indoor environments increasingly rely on vision-language models to perceive high-level semantic cues in camera images, such as object categories. Such models offer the potential to substantially advance robot behaviour for tasks such as object-goal navigation (ObjectNav), where the robot must locate objects specified in natural language by exploring the environment. Current ObjectNav methods heavily depend on prompt engineering for perception and do not address the semantic uncertainty induced by variations in prompt phrasing. Ignoring semantic uncertainty can lead to suboptimal exploration, which in turn limits performance. Hence, we propose a semantic uncertainty-informed active perception pipeline for ObjectNav in indoor environments. We introduce a novel probabilistic sensor model for quantifying semantic uncertainty in vision-language models and incorporate it into a probabilistic geometric-semantic map to enhance spatial understanding. Based on this map, we develop a frontier exploration planner with an uncertainty-informed multi-armed bandit objective to guide efficient object search. Experimental results demonstrate that our method achieves ObjectNav success rates comparable to those of state-of-the-art approaches, without requiring extensive prompt engineering.
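As a rough illustration of how a multi-armed bandit objective can trade off promising frontiers against poorly observed ones, the sketch below applies the standard UCB1 rule to a set of frontiers. This is a generic textbook rule swapped in for illustration, not the objective from the paper; the function name `ucb_select`, the `(mean_score, n_observations)` statistics, and the example values are all hypothetical.

```python
import math

def ucb_select(stats, c=1.0):
    """Choose a frontier with the UCB1 rule.

    stats maps a frontier id to (mean_score, n_observations), where
    mean_score is an assumed semantic relevance estimate in [0, 1].
    Frontiers that have never been observed are tried first.
    """
    total = sum(n for _, n in stats.values())

    def score(item):
        mean, n = item[1]
        if n == 0:
            return math.inf  # force at least one observation per frontier
        # Exploitation term plus an exploration bonus that shrinks with n.
        return mean + c * math.sqrt(math.log(total) / n)

    return max(stats.items(), key=score)[0]

# Illustrative values only.
stats = {"A": (0.6, 10), "B": (0.5, 2), "C": (0.3, 5)}
chosen = ucb_select(stats)          # the exploration bonus favours the rarely seen "B"
greedy = ucb_select(stats, c=0.0)   # without the bonus, the highest mean "A" wins
```

The constant `c` controls how strongly uncertainty about a frontier outweighs its current mean score; setting it to zero reduces the rule to purely greedy selection.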

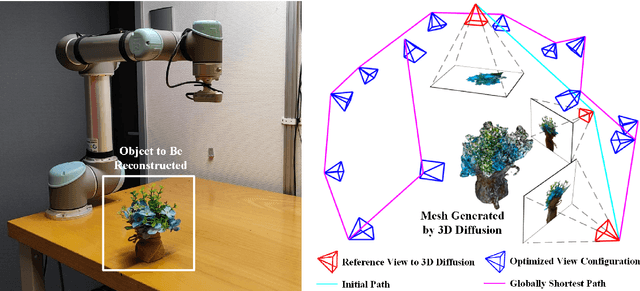

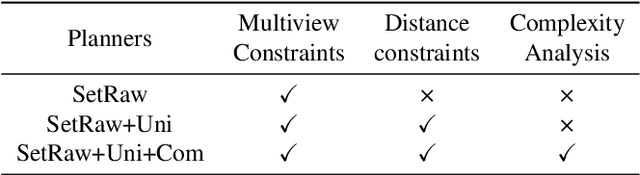

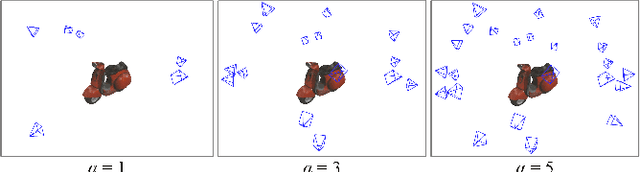

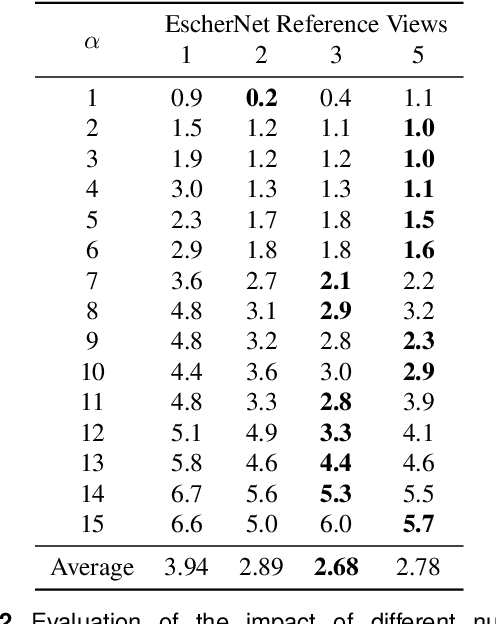

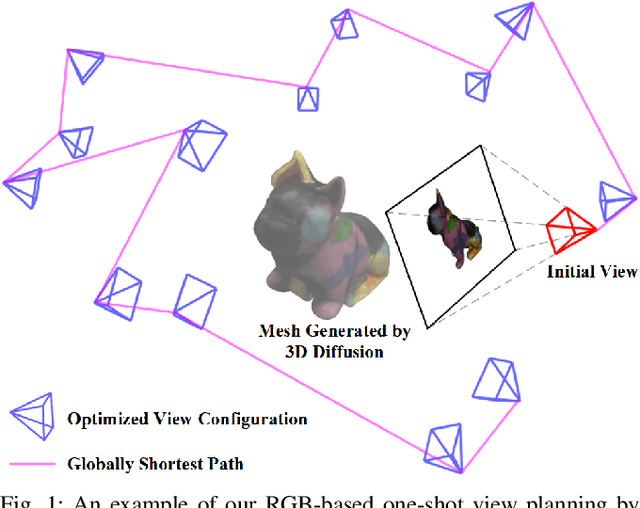

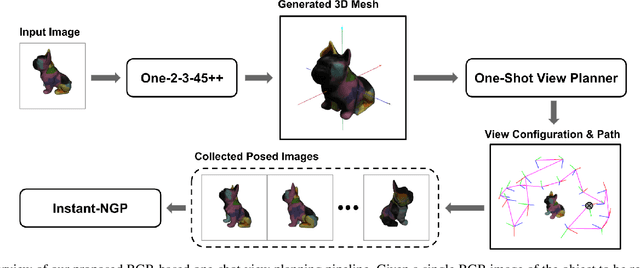

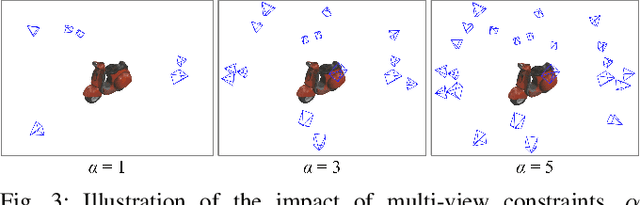

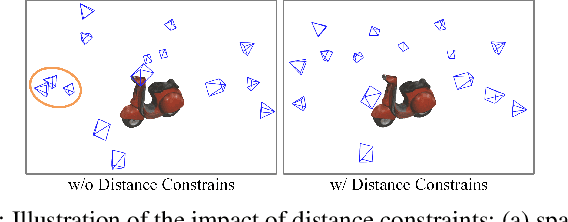

DM-OSVP++: One-Shot View Planning Using 3D Diffusion Models for Active RGB-Based Object Reconstruction

Apr 16, 2025

Abstract:Active object reconstruction is crucial for many robotic applications. A key aspect in these scenarios is generating object-specific view configurations to obtain informative measurements for reconstruction. One-shot view planning enables efficient data collection by predicting all views at once, eliminating the need for time-consuming online replanning. Our primary insight is to leverage the generative power of 3D diffusion models as valuable prior information. By conditioning on initial multi-view images, we exploit the priors from the 3D diffusion model to generate an approximate object model, serving as the foundation for our view planning. Our novel approach integrates the geometric and textural distributions of the object model into the view planning process, generating views that focus on the complex parts of the object to be reconstructed. We validate the proposed active object reconstruction system through both simulation and real-world experiments, demonstrating the effectiveness of using 3D diffusion priors for one-shot view planning.

PINGS: Gaussian Splatting Meets Distance Fields within a Point-Based Implicit Neural Map

Feb 09, 2025

Abstract:Robots require high-fidelity reconstructions of their environment for effective operation. Such scene representations should be both geometrically accurate and photorealistic to support downstream tasks. While this can be achieved by building distance fields from range sensors and radiance fields from cameras, the scalable incremental mapping of both fields consistently and at the same time with high quality remains challenging. In this paper, we propose a novel map representation that unifies a continuous signed distance field and a Gaussian splatting radiance field within an elastic and compact point-based implicit neural map. By enforcing geometric consistency between these fields, we achieve mutual improvements by exploiting both modalities. We devise a LiDAR-visual SLAM system called PINGS using the proposed map representation and evaluate it on several challenging large-scale datasets. Experimental results demonstrate that PINGS can incrementally build globally consistent distance and radiance fields encoded with a compact set of neural points. Compared to the state-of-the-art methods, PINGS achieves superior photometric and geometric rendering at novel views by leveraging the constraints from the distance field. Furthermore, by utilizing dense photometric cues and multi-view consistency from the radiance field, PINGS produces more accurate distance fields, leading to improved odometry estimation and mesh reconstruction.

ActiveGS: Active Scene Reconstruction using Gaussian Splatting

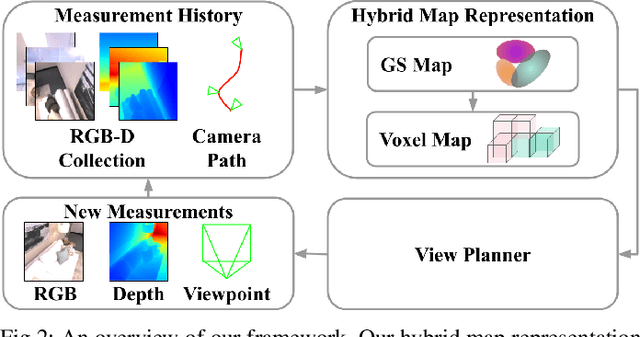

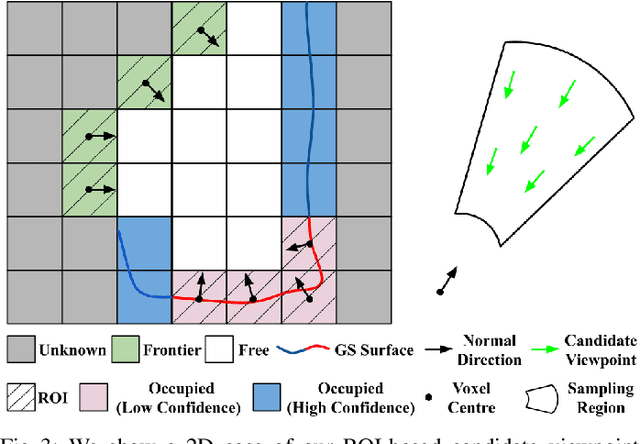

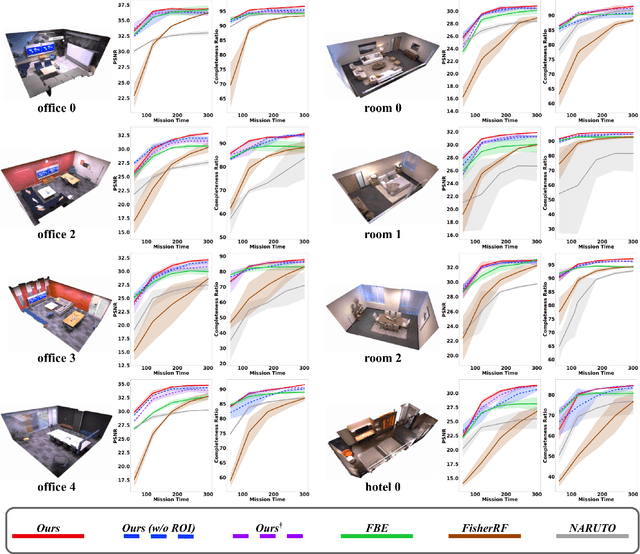

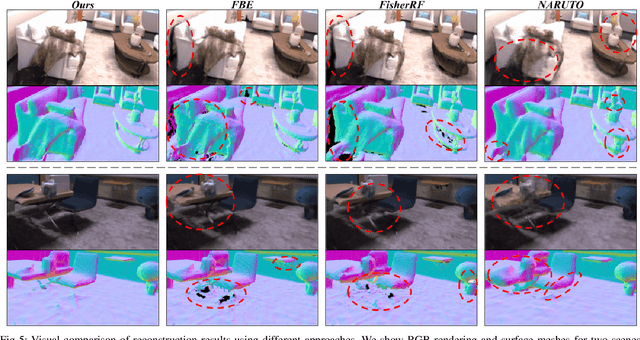

Dec 23, 2024

Abstract:Robotics applications often rely on scene reconstructions to enable downstream tasks. In this work, we tackle the challenge of actively building an accurate map of an unknown scene using an on-board RGB-D camera. We propose a hybrid map representation that combines a Gaussian splatting map with a coarse voxel map, leveraging the strengths of both representations: the high-fidelity scene reconstruction capabilities of Gaussian splatting and the spatial modelling strengths of the voxel map. The core of our framework is an effective confidence modelling technique for the Gaussian splatting map to identify under-reconstructed areas, while utilising spatial information from the voxel map to target unexplored areas and assist in collision-free path planning. By actively collecting scene information in under-reconstructed and unexplored areas for map updates, our approach achieves superior Gaussian splatting reconstruction results compared to state-of-the-art approaches. Additionally, we demonstrate the applicability of our active scene reconstruction framework in the real world using an unmanned aerial vehicle.

Towards Map-Agnostic Policies for Adaptive Informative Path Planning

Oct 22, 2024

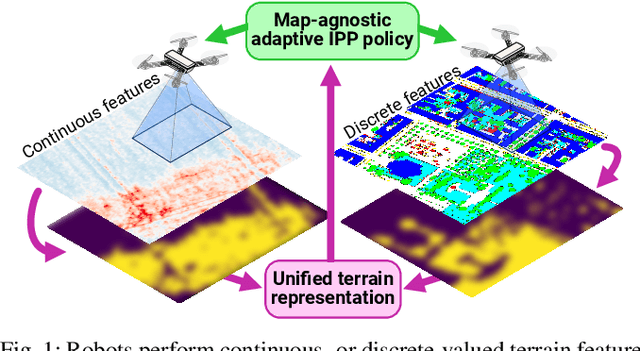

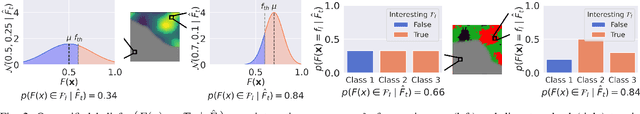

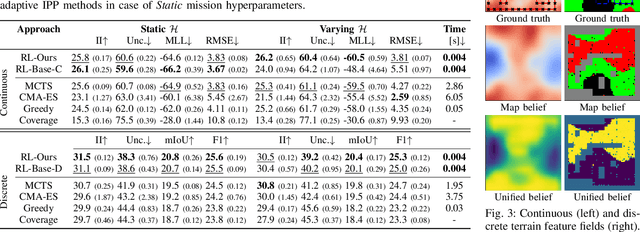

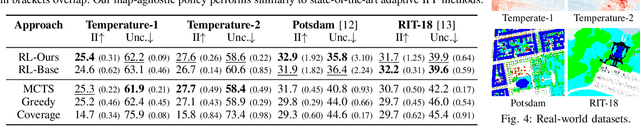

Abstract:Robots are frequently tasked to gather relevant sensor data in unknown terrains. A key challenge for classical path planning algorithms used for autonomous information gathering is adaptively replanning paths online as the terrain is explored, given limited onboard compute resources. Recently, learning-based approaches have emerged that train planning policies offline and enable computationally efficient online replanning by performing policy inference. These approaches are designed and trained for terrain monitoring missions assuming a single specific map representation, which limits their applicability to different terrains. To address these issues, we propose a novel formulation of the adaptive informative path planning problem unified across different map representations, enabling training and deploying planning policies in a larger variety of monitoring missions. Experimental results validate that our novel formulation easily integrates with classical non-learning-based planning approaches while maintaining their performance. Our trained planning policy performs similarly to state-of-the-art map-specifically trained policies. We validate our learned policy on unseen real-world terrain datasets.

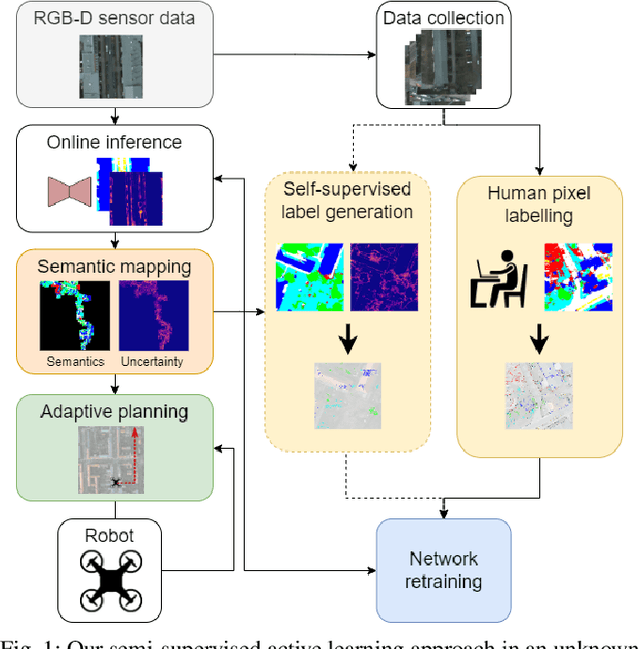

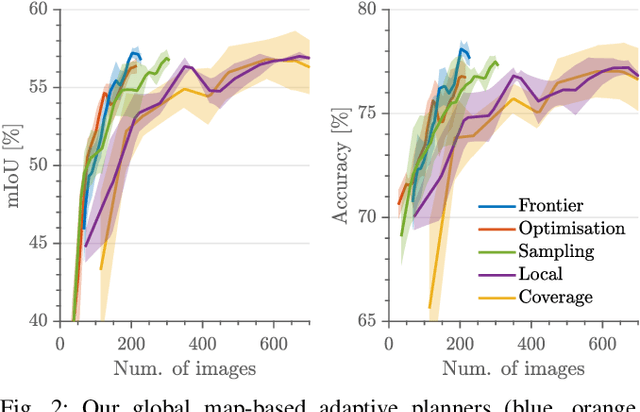

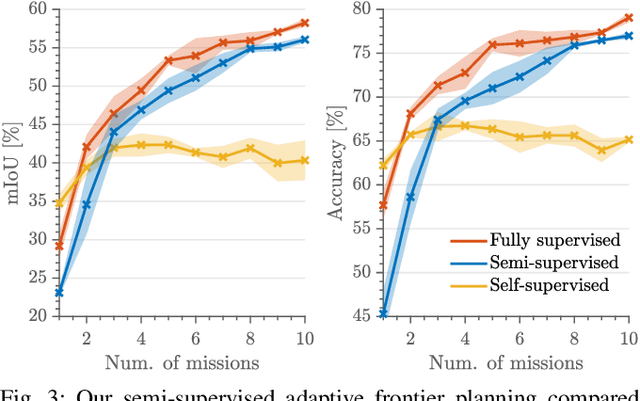

Active Learning of Robot Vision Using Adaptive Path Planning

Oct 14, 2024

Abstract:Robots need robust and flexible vision systems to perceive and reason about their environments beyond geometry. Most such systems build upon deep learning approaches. As autonomous robots are commonly deployed in initially unknown environments, pre-training on static datasets cannot always capture the variety of domains and limits the robot's vision performance during missions. Recently, self-supervised as well as fully supervised active learning methods have emerged to improve robotic vision. These approaches rely on large in-domain pre-training datasets or require substantial human labelling effort. To address these issues, we present a recent adaptive planning framework for efficient training data collection to substantially reduce human labelling requirements in semantic terrain monitoring missions. To this end, we combine high-quality human labels with automatically generated pseudo labels. Experimental results show that the framework reaches segmentation performance close to fully supervised approaches with drastically reduced human labelling effort while outperforming purely self-supervised approaches. We discuss the advantages and limitations of current methods and outline valuable future research avenues towards more robust and flexible robotic vision systems in unknown environments.

Exploiting Priors from 3D Diffusion Models for RGB-Based One-Shot View Planning

Mar 25, 2024

Abstract:Object reconstruction is relevant for many autonomous robotic tasks that require interaction with the environment. A key challenge in such scenarios is planning view configurations to collect informative measurements for reconstructing an initially unknown object. One-shot view planning enables efficient data collection by predicting view configurations and planning the globally shortest path connecting all views at once. However, geometric priors about the object are required to conduct one-shot view planning. In this work, we propose a novel one-shot view planning approach that utilizes the powerful 3D generation capabilities of diffusion models as priors. By incorporating such geometric priors into our pipeline, we achieve effective one-shot view planning starting with only a single RGB image of the object to be reconstructed. Our planning experiments in simulation and real-world setups indicate that our approach balances well between object reconstruction quality and movement cost.
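The path-planning half of one-shot view planning, connecting a fixed set of views by the shortest path, can be sketched with an exhaustive search when the number of views is small. The code below is an assumption-laden illustration rather than the paper's method: `shortest_view_path` is a hypothetical helper, views are reduced to 3D positions with Euclidean travel cost, and brute force over all orderings is only practical for a handful of views.

```python
import itertools
import math

def shortest_view_path(start, views):
    """Return (order, length) of the shortest open path from start through all views.

    Exhaustive search over every visiting order; the cost grows factorially,
    so this is only a sketch for very small view sets.
    """
    best_order, best_len = None, math.inf
    for order in itertools.permutations(views):
        length = 0.0
        prev = start
        for v in order:
            length += math.dist(prev, v)  # Euclidean movement cost between views
            prev = v
        if length < best_len:
            best_order, best_len = list(order), length
    return best_order, best_len

# Illustrative view positions along a line, given in arbitrary order.
views = [(2.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
order, length = shortest_view_path((0.0, 0.0, 0.0), views)
```

Because every view is known before the robot moves, the ordering can be optimised globally in this way, which is what distinguishes one-shot planning from choosing the next view greedily after each measurement.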

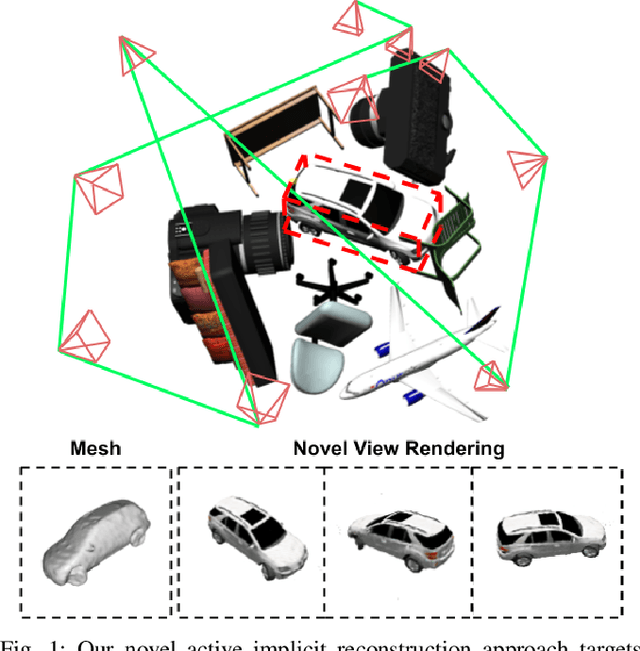

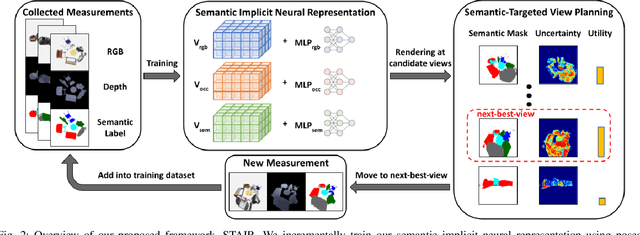

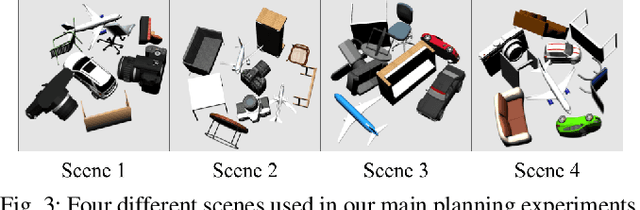

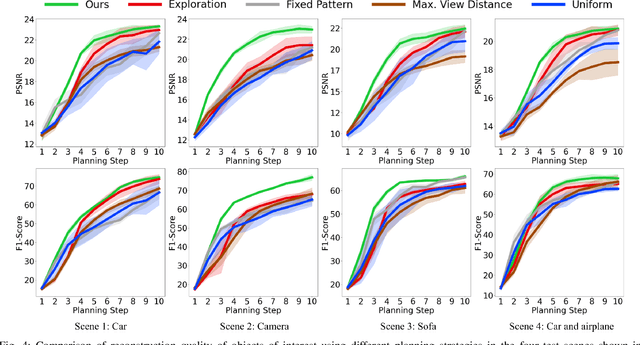

STAIR: Semantic-Targeted Active Implicit Reconstruction

Mar 17, 2024

Abstract:Many autonomous robotic applications require object-level understanding when deployed. Actively reconstructing objects of interest, i.e. objects with specific semantic meanings, is therefore relevant for a robot to perform downstream tasks in an initially unknown environment. In this work, we propose a novel framework for semantic-targeted active reconstruction using posed RGB-D measurements and 2D semantic labels as input. The key components of our framework are a semantic implicit neural representation and a compatible planning utility function based on semantic rendering and uncertainty estimation, enabling adaptive view planning to target objects of interest. Our planning approach achieves better reconstruction performance in terms of mesh and novel view rendering quality compared to implicit reconstruction baselines that do not consider semantics for view planning. Our framework further outperforms a state-of-the-art semantic-targeted active reconstruction pipeline based on explicit maps, justifying our choice of utilising implicit neural representations to tackle semantic-targeted active reconstruction problems.
