Liren Jin

DM-OSVP++: One-Shot View Planning Using 3D Diffusion Models for Active RGB-Based Object Reconstruction

Apr 16, 2025

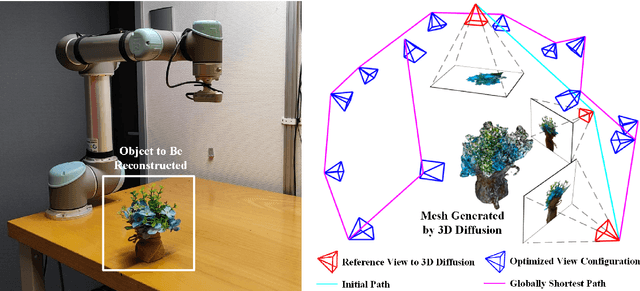

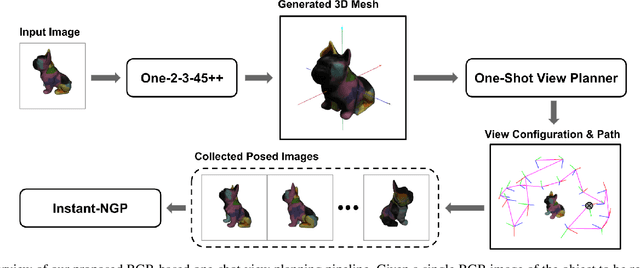

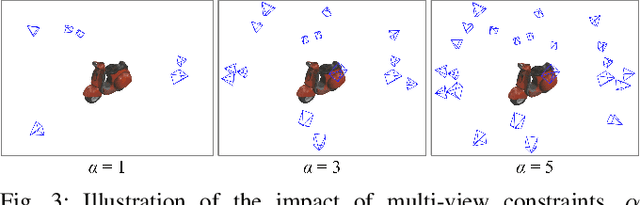

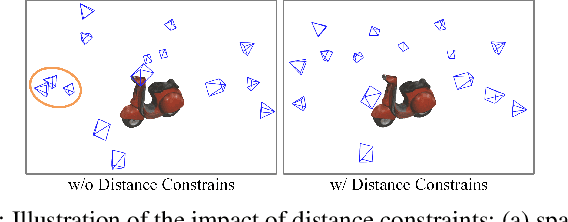

Abstract:Active object reconstruction is crucial for many robotic applications. A key aspect in these scenarios is generating object-specific view configurations to obtain informative measurements for reconstruction. One-shot view planning enables efficient data collection by predicting all views at once, eliminating the need for time-consuming online replanning. Our primary insight is to leverage the generative power of 3D diffusion models as valuable prior information. By conditioning on initial multi-view images, we exploit the priors from the 3D diffusion model to generate an approximate object model, serving as the foundation for our view planning. Our novel approach integrates the geometric and textural distributions of the object model into the view planning process, generating views that focus on the complex parts of the object to be reconstructed. We validate the proposed active object reconstruction system through both simulation and real-world experiments, demonstrating the effectiveness of using 3D diffusion priors for one-shot view planning.

PINGS: Gaussian Splatting Meets Distance Fields within a Point-Based Implicit Neural Map

Feb 09, 2025Abstract:Robots require high-fidelity reconstructions of their environment for effective operation. Such scene representations should be both, geometrically accurate and photorealistic to support downstream tasks. While this can be achieved by building distance fields from range sensors and radiance fields from cameras, the scalable incremental mapping of both fields consistently and at the same time with high quality remains challenging. In this paper, we propose a novel map representation that unifies a continuous signed distance field and a Gaussian splatting radiance field within an elastic and compact point-based implicit neural map. By enforcing geometric consistency between these fields, we achieve mutual improvements by exploiting both modalities. We devise a LiDAR-visual SLAM system called PINGS using the proposed map representation and evaluate it on several challenging large-scale datasets. Experimental results demonstrate that PINGS can incrementally build globally consistent distance and radiance fields encoded with a compact set of neural points. Compared to the state-of-the-art methods, PINGS achieves superior photometric and geometric rendering at novel views by leveraging the constraints from the distance field. Furthermore, by utilizing dense photometric cues and multi-view consistency from the radiance field, PINGS produces more accurate distance fields, leading to improved odometry estimation and mesh reconstruction.

ActiveGS: Active Scene Reconstruction using Gaussian Splatting

Dec 23, 2024

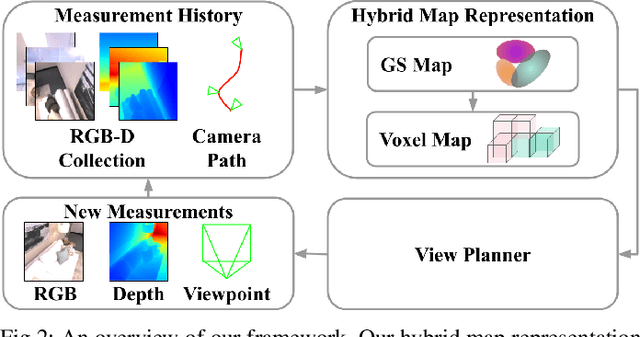

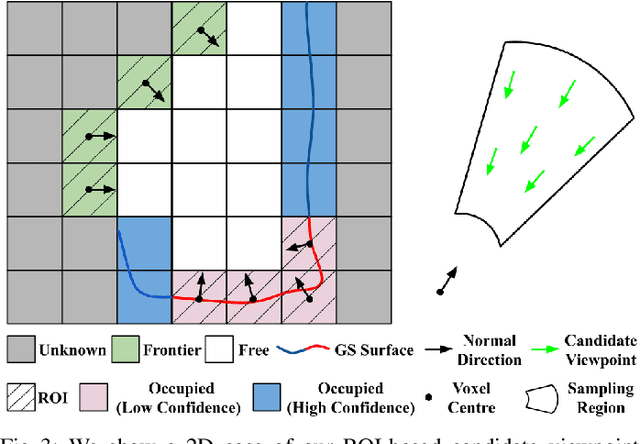

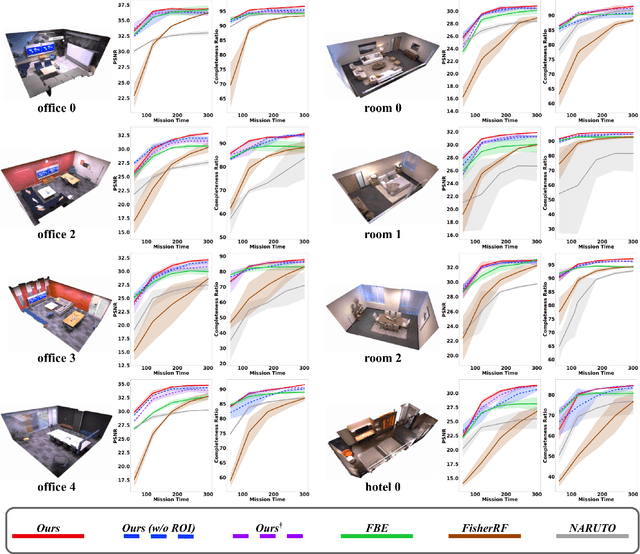

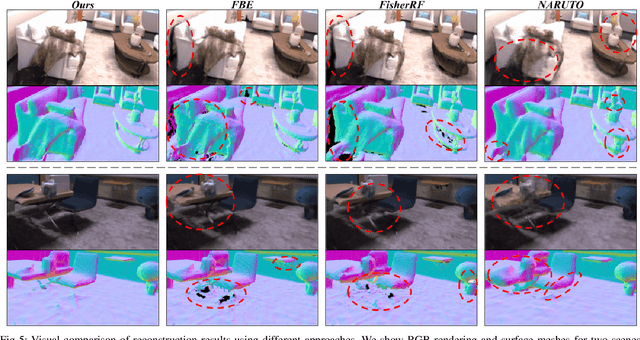

Abstract:Robotics applications often rely on scene reconstructions to enable downstream tasks. In this work, we tackle the challenge of actively building an accurate map of an unknown scene using an on-board RGB-D camera. We propose a hybrid map representation that combines a Gaussian splatting map with a coarse voxel map, leveraging the strengths of both representations: the high-fidelity scene reconstruction capabilities of Gaussian splatting and the spatial modelling strengths of the voxel map. The core of our framework is an effective confidence modelling technique for the Gaussian splatting map to identify under-reconstructed areas, while utilising spatial information from the voxel map to target unexplored areas and assist in collision-free path planning. By actively collecting scene information in under-reconstructed and unexplored areas for map updates, our approach achieves superior Gaussian splatting reconstruction results compared to state-of-the-art approaches. Additionally, we demonstrate the applicability of our active scene reconstruction framework in the real world using an unmanned aerial vehicle.

Exploiting Priors from 3D Diffusion Models for RGB-Based One-Shot View Planning

Mar 25, 2024

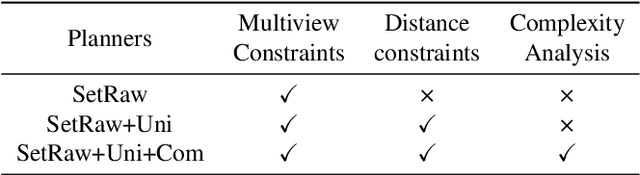

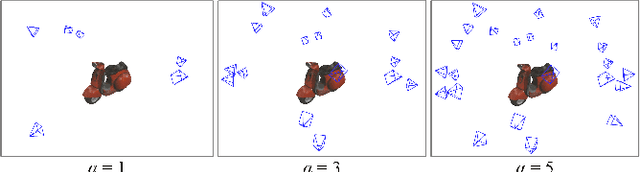

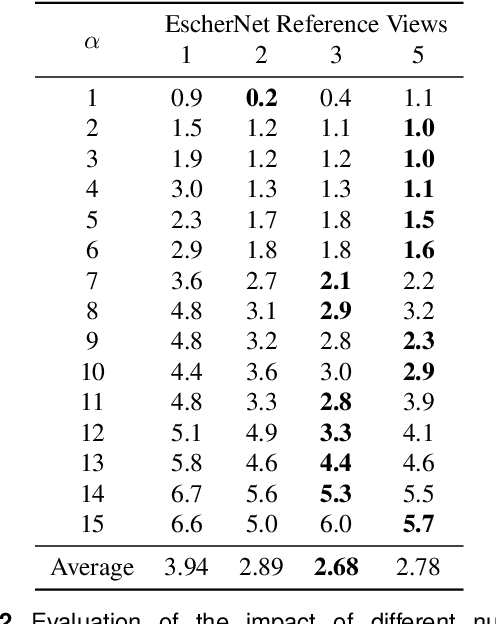

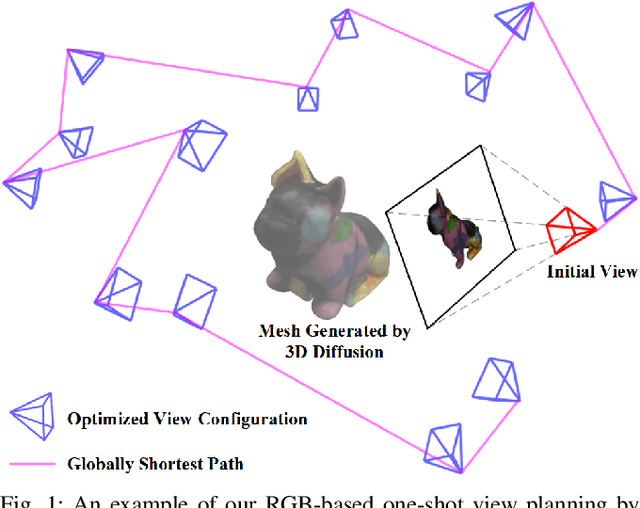

Abstract:Object reconstruction is relevant for many autonomous robotic tasks that require interaction with the environment. A key challenge in such scenarios is planning view configurations to collect informative measurements for reconstructing an initially unknown object. One-shot view planning enables efficient data collection by predicting view configurations and planning the globally shortest path connecting all views at once. However, geometric priors about the object are required to conduct one-shot view planning. In this work, we propose a novel one-shot view planning approach that utilizes the powerful 3D generation capabilities of diffusion models as priors. By incorporating such geometric priors into our pipeline, we achieve effective one-shot view planning starting with only a single RGB image of the object to be reconstructed. Our planning experiments in simulation and real-world setups indicate that our approach balances well between object reconstruction quality and movement cost.

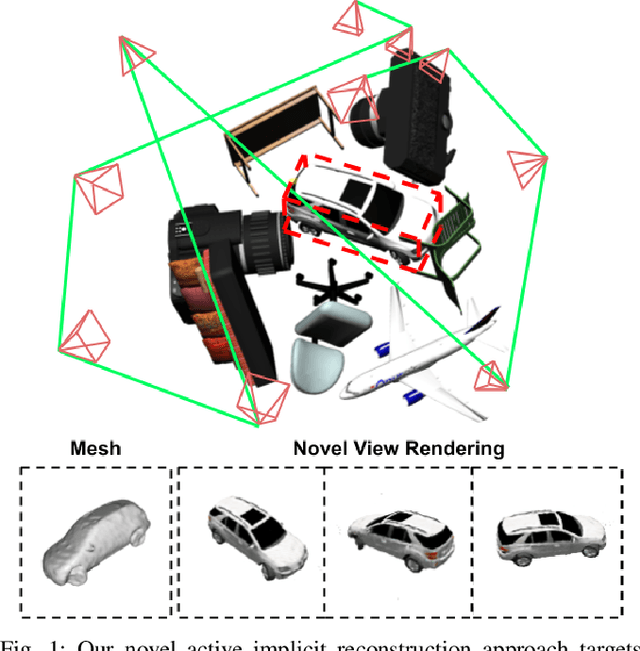

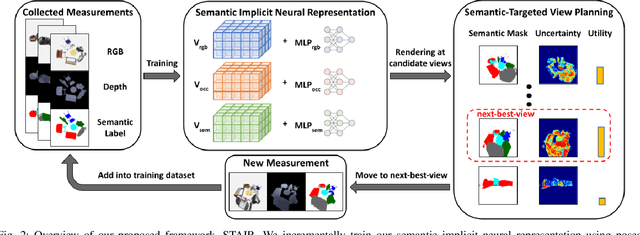

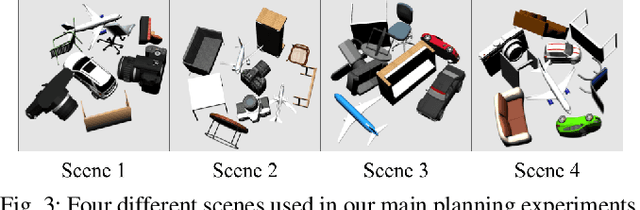

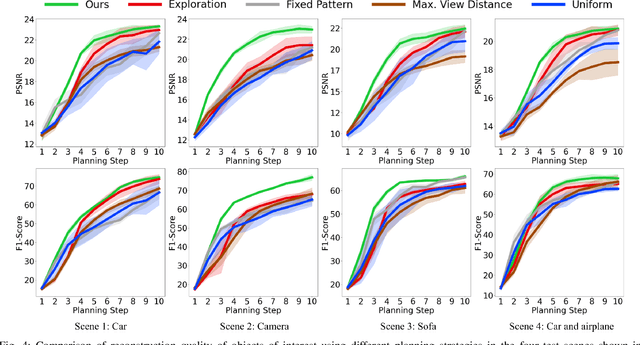

STAIR: Semantic-Targeted Active Implicit Reconstruction

Mar 17, 2024

Abstract:Many autonomous robotic applications require object-level understanding when deployed. Actively reconstructing objects of interest, i.e. objects with specific semantic meanings, is therefore relevant for a robot to perform downstream tasks in an initially unknown environment. In this work, we propose a novel framework for semantic-targeted active reconstruction using posed RGB-D measurements and 2D semantic labels as input. The key components of our framework are a semantic implicit neural representation and a compatible planning utility function based on semantic rendering and uncertainty estimation, enabling adaptive view planning to target objects of interest. Our planning approach achieves better reconstruction performance in terms of mesh and novel view rendering quality compared to implicit reconstruction baselines that do not consider semantics for view planning. Our framework further outperforms a state-of-the-art semantic-targeted active reconstruction pipeline based on explicit maps, justifying our choice of utilising implicit neural representations to tackle semantic-targeted active reconstruction problems.

How Many Views Are Needed to Reconstruct an Unknown Object Using NeRF?

Oct 01, 2023Abstract:Neural Radiance Fields (NeRFs) are gaining significant interest for online active object reconstruction due to their exceptional memory efficiency and requirement for only posed RGB inputs. Previous NeRF-based view planning methods exhibit computational inefficiency since they rely on an iterative paradigm, consisting of (1) retraining the NeRF when new images arrive; and (2) planning a path to the next best view only. To address these limitations, we propose a non-iterative pipeline based on the Prediction of the Required number of Views (PRV). The key idea behind our approach is that the required number of views to reconstruct an object depends on its complexity. Therefore, we design a deep neural network, named PRVNet, to predict the required number of views, allowing us to tailor the data acquisition based on the object complexity and plan a globally shortest path. To train our PRVNet, we generate supervision labels using the ShapeNet dataset. Simulated experiments show that our PRV-based view planning method outperforms baselines, achieving good reconstruction quality while significantly reducing movement cost and planning time. We further justify the generalization ability of our approach in a real-world experiment.

Active Implicit Reconstruction Using One-Shot View Planning

Oct 01, 2023Abstract:Active object reconstruction using autonomous robots is gaining great interest. A primary goal in this task is to maximize the information of the object to be reconstructed, given limited on-board resources. Previous view planning methods exhibit inefficiency since they rely on an iterative paradigm based on explicit representations, consisting of (1) planning a path to the next-best view only; and (2) requiring a considerable number of less-gain views in terms of surface coverage. To address these limitations, we integrated implicit representations into the One-Shot View Planning (OSVP). The key idea behind our approach is to use implicit representations to obtain the small missing surface areas instead of observing them with extra views. Therefore, we design a deep neural network, named OSVP, to directly predict a set of views given a dense point cloud refined from an initial sparse observation. To train our OSVP network, we generate supervision labels using dense point clouds refined by implicit representations and set covering optimization problems. Simulated experiments show that our method achieves sufficient reconstruction quality, outperforming several baselines under limited view and movement budgets. We further demonstrate the applicability of our approach in a real-world object reconstruction scenario.

NeU-NBV: Next Best View Planning Using Uncertainty Estimation in Image-Based Neural Rendering

Mar 02, 2023Abstract:Autonomous robotic tasks require actively perceiving the environment to achieve application-specific goals. In this paper, we address the problem of positioning an RGB camera to collect the most informative images to represent an unknown scene, given a limited measurement budget. We propose a novel mapless planning framework to iteratively plan the next best camera view based on collected image measurements. A key aspect of our approach is a new technique for uncertainty estimation in image-based neural rendering, which guides measurement acquisition at the most uncertain view among view candidates, thus maximising the information value during data collection. By incrementally adding new measurements into our image collection, our approach efficiently explores an unknown scene in a mapless manner. We show that our uncertainty estimation is generalisable and valuable for view planning in unknown scenes. Our planning experiments using synthetic and real-world data verify that our uncertainty-guided approach finds informative images leading to more accurate scene representations when compared against baselines.

Informative Path Planning for Active Learning in Aerial Semantic Mapping

Mar 03, 2022

Abstract:Semantic segmentation of aerial imagery is an important tool for mapping and earth observation. However, supervised deep learning models for segmentation rely on large amounts of high-quality labelled data, which is labour-intensive and time-consuming to generate. To address this, we propose a new approach for using unmanned aerial vehicles (UAVs) to autonomously collect useful data for model training. We exploit a Bayesian approach to estimate model uncertainty in semantic segmentation. During a mission, the semantic predictions and model uncertainty are used as input for terrain mapping. A key aspect of our pipeline is to link the mapped model uncertainty to a robotic planning objective based on active learning. This enables us to adaptively guide a UAV to gather the most informative terrain images to be labelled by a human for model training. Our experimental evaluation on real-world data shows the benefit of using our informative planning approach in comparison to static coverage paths in terms of maximising model performance and reducing labelling efforts.

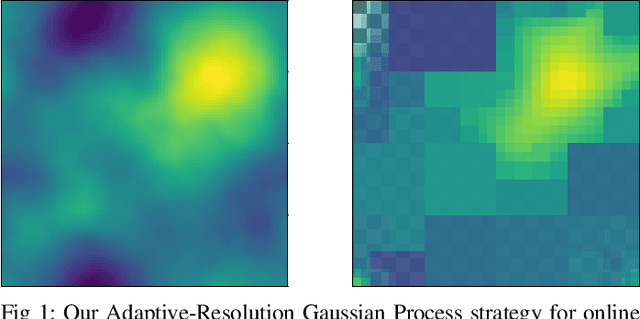

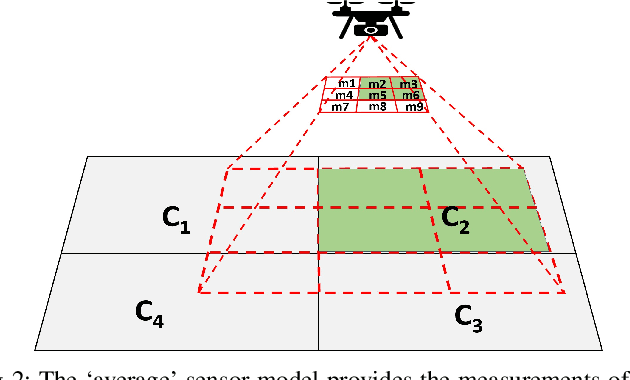

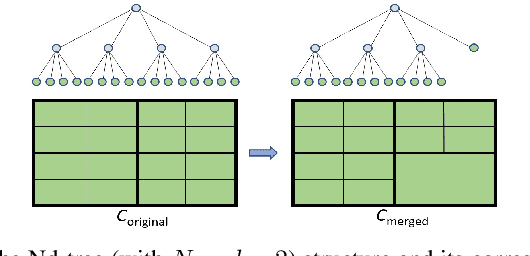

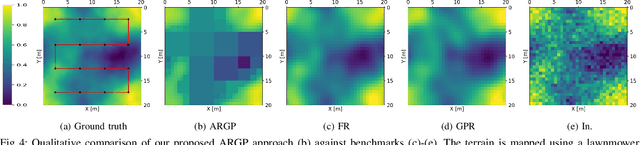

Adaptive-Resolution Gaussian Process Mapping for Efficient UAV-based Terrain Monitoring

Sep 29, 2021

Abstract:Unmanned aerial vehicles (UAVs) are rapidly gaining popularity in a variety of environmental monitoring tasks. A key requirement for autonomous operation is the ability to perform efficient environmental mapping and path planning online, given their limited on-board resources constraining operation time and computational capacity. To address this, we present an adaptive-resolution approach for terrain mapping based on the Nd-tree structure and Gaussian Processes (GPs). Our approach enables retaining details in areas of interest using higher map resolutions while compressing information in uninteresting areas at coarser resolutions to achieve a compact map representation of the environment. A key aspect of our approach is an integral kernel encoding spatial correlation of 2D grid cells, which enables merging uninteresting grid cells in a theoretically sound way. Results show that our approach is more efficient in terms of time and memory consumption without compromising on mapping quality. The resulting adaptive-resolution map accelerates the on-line adaptive path planning as well. Both performance enhancement in mapping and planning facilitate the efficiency of autonomous environmental monitoring with UAVs.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge